Themistoklis Charalambous

Pareto-Optimal Sampling and Resource Allocation for Timely Communication in Shared-Spectrum Low-Altitude Networks

Oct 30, 2025

Abstract:Guaranteeing stringent data freshness for low-altitude unmanned aerial vehicles (UAVs) in shared spectrum forces a critical trade-off between two operational costs: the UAV's own energy consumption and the occupation of terrestrial channel resources. The core challenge is to satisfy the aerial data-freshness requirement while finding a Pareto-optimal balance between these costs. Leveraging predictive channel models and predictive UAV trajectories, we formulate a bi-objective Pareto optimization problem over a long-term planning horizon to jointly optimize the sampling timing for aerial traffic and the power and spectrum allocation for fair coexistence. However, the problem's non-convex, mixed-integer nature renders classical methods incapable of characterizing the complete Pareto frontier. Notably, we show monotonicity properties of the frontier, building on which we transform the bi-objective problem into several single-objective problems. We then propose a new graph-based algorithm and prove that it can find the complete set of Pareto optima with low complexity, linear in the horizon and near-quadratic in the resource block (RB) budget. Numerical comparisons show that our approach meets the stringent timeliness requirement and achieves a six-fold reduction in RB utilization or a 6 dB energy saving compared to benchmarks.
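To make the notion of a Pareto frontier over two costs concrete, here is a minimal sketch that filters a finite list of candidate operating points down to the non-dominated ones. It is not the graph-based algorithm of the paper; the function name `pareto_front` and the sample (energy, RB usage) pairs are assumptions made up for illustration.

```python
def pareto_front(points):
    """Return the non-dominated (energy, rb_usage) pairs; both costs are minimized."""
    front = []
    best_rb = float("inf")
    # After sorting by energy (ties broken by RB usage), a point is
    # non-dominated only if it strictly lowers the best RB usage seen so far.
    for energy, rb in sorted(set(points)):
        if rb < best_rb:
            front.append((energy, rb))
            best_rb = rb
    return front


# Hypothetical candidate operating points: (energy cost, resource blocks used).
candidates = [(5.0, 9), (6.0, 4), (6.0, 7), (7.5, 4), (9.0, 2), (9.5, 3)]
front = pareto_front(candidates)
print(front)
```

Along the returned list, energy increases while RB usage strictly decreases, which is the kind of monotone trade-off curve the abstract refers to.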

Distributed Average Consensus in Wireless Multi-Agent Systems with Over-the-Air Aggregation

Jul 30, 2025

Abstract:In this paper, we address the average consensus problem of multi-agent systems over wireless networks. We propose a distributed average consensus algorithm by invoking the concept of over-the-air aggregation, which exploits the signal superposition property of wireless multiple-access channels. The proposed algorithm deploys a modified version of the well-known Ratio Consensus algorithm with an additional normalization step to compensate for the arbitrary channel coefficients. We show that, when the noise level at the receivers is negligible, the algorithm converges asymptotically to the average for time-invariant and time-varying channels. Numerical simulations corroborate the validity of our results.
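For readers unfamiliar with Ratio Consensus, the following is a minimal sketch of the standard (unmodified) algorithm over an ideal, noiseless directed graph. It does not model over-the-air aggregation, channel coefficients, or the extra normalization step proposed in the paper; the function name, the graph, and the initial values are assumptions for illustration.

```python
def ratio_consensus(values, out_neighbors, iterations=200):
    """Standard ratio consensus on a strongly connected digraph (ideal links)."""
    n = len(values)
    y = list(values)   # numerator states, initialized with the local values
    z = [1.0] * n      # denominator states, initialized to one
    for _ in range(iterations):
        y_next = [0.0] * n
        z_next = [0.0] * n
        for j in range(n):
            # Node j splits both states equally among itself and its
            # out-neighbors, so the implied weights are column-stochastic.
            targets = [j] + out_neighbors[j]
            share = 1.0 / len(targets)
            for i in targets:
                y_next[i] += share * y[j]
                z_next[i] += share * z[j]
        y, z = y_next, z_next
    # Each node's estimate of the average is the ratio of its two states.
    return [yi / zi for yi, zi in zip(y, z)]


# Hypothetical 4-node digraph: a directed ring plus one extra edge (unbalanced).
out_neighbors = {0: [1], 1: [2], 2: [3, 0], 3: [0]}
estimates = ratio_consensus([4.0, 8.0, 1.0, 7.0], out_neighbors)
print(estimates)  # every entry approaches the average of the initial values
```

The ratio is what removes the bias that an unbalanced digraph would otherwise introduce: the sums of `y` and of `z` are each preserved, so their ratio at every node tends to the true average.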

Distributed Target Tracking based on Localization with Linear Time-Difference-of-Arrival Measurements: A Delay-Tolerant Networked Estimation Approach

Dec 22, 2024

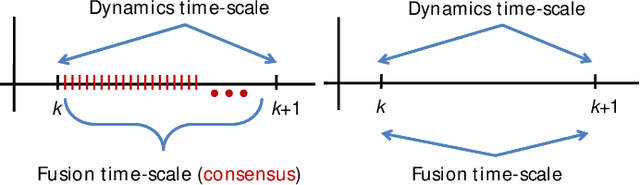

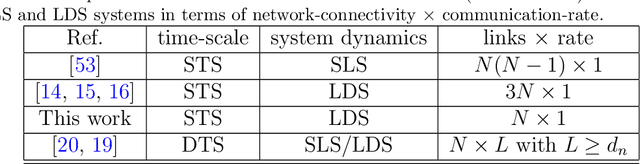

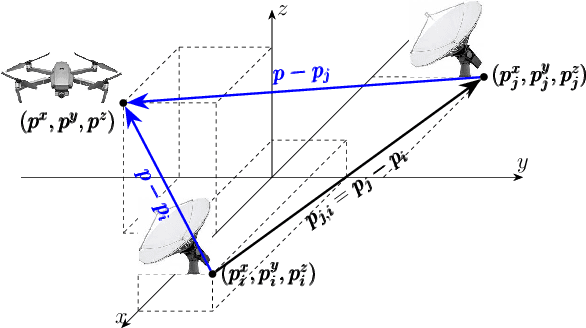

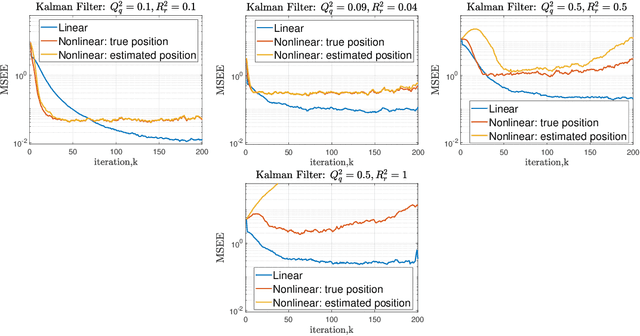

Abstract:This paper considers target tracking based on a beacon signal's time-difference-of-arrival (TDOA) to a group of cooperating sensors. The sensors receive a reflected signal from the target, whose time-of-arrival (TOA) provides the distance information. The existing approaches include: (i) classic centralized solutions which gather and process the target data at a central unit, (ii) distributed solutions which assume that the target data is observable in the dense neighborhood of each sensor (to be filtered locally), and (iii) double time-scale distributed methods with high rates of communication/consensus over the network. To reduce the network connectivity required in (i)-(ii) and the communication rate in (iii), this work proposes a distributed single time-scale technique, which can also handle heterogeneous constant data-exchange delays over the static sensor network. This work assumes only distributed observability (in contrast to local observability in some existing works categorized in (ii)), i.e., the target is observable globally over a (strongly) connected network. The (strong) connectivity further allows for survivable-network and $q$-redundant observer design. Each sensor locally shares information and processes the received data in its immediate neighborhood via local linear-matrix-inequalities (LMI) feedback gains to ensure tracking error stability. The same gain matrix works in the presence of heterogeneous delays, with no need to redesign the algorithm. Since most existing distributed estimation scenarios are linear (based on consensus), many works use linearization of the existing nonlinear TDOA measurement models where the output matrix is a function of the target position.

Maximum Correntropy Criterion Kalman Filter For Indoor Quadrotor Navigation Under Intermittent Measurements

Mar 16, 2023

Abstract:We present a multisensor fusion framework for the onboard real-time navigation of a quadrotor in an indoor environment. The framework integrates sensor readings from an Inertial Measurement Unit (IMU), a camera-based object detection algorithm, and an Ultra-WideBand (UWB) localisation system. The sensor readings are often not readily available, leading to inaccurate pose estimation and hence poor navigation performance. To effectively handle and fuse sensor readings, and accurately estimate the pose of the quadrotor for tracking a predefined trajectory, we design a Maximum Correntropy Criterion Kalman Filter (MCC-KF) that can manage intermittent observations. The MCC-KF is designed to improve estimation performance over a standard Kalman Filter (KF), since KFs are likely to degrade dramatically in practical scenarios in which noise is non-Gaussian (especially when the noise is heavy-tailed). To evaluate the performance of the MCC-KF, we compare it with a Kalman filter previously designed by the authors. Through this comparison, we aim to demonstrate the effectiveness of the MCC-KF in handling indoor navigation missions. The simulation results show that our presented framework offers low positioning errors, while effectively handling intermittent sensor measurements.

Onboard Real-Time Multi-Sensor Pose Estimation for Indoor Quadrotor Navigation with Intermittent Communication

Dec 03, 2022

Abstract:We propose a multisensor fusion framework for onboard real-time navigation of a quadrotor in an indoor environment, by integrating sensor readings from an Inertial Measurement Unit (IMU), a camera-based object detection algorithm, and an Ultra-WideBand (UWB) localization system. The sensor readings from the camera-based object detection algorithm and the UWB localization system arrive intermittently, since the measurements are not readily available. We design a Kalman filter that manages intermittent observations in order to handle and fuse the readings and estimate the pose of the quadrotor for tracking a predefined trajectory. The system is implemented via a Hardware-in-the-loop (HIL) simulation technique, in which the dynamic model of the quadrotor is simulated in an open-source 3D robotics simulator tool, and the whole navigation system is implemented on an Artificial Intelligence (AI)-enabled edge GPU. The simulation results show that our proposed framework offers low positioning and trajectory errors, while handling intermittent sensor measurements.
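The idea of a Kalman filter that tolerates intermittent observations can be sketched in one dimension: the prediction step runs at every time step, and the correction step runs only when a measurement actually arrives. This is a scalar illustration under assumed model parameters (`a`, `q`, `r`) and made-up readings, not the multisensor quadrotor filter described in the abstract.

```python
def kalman_intermittent(measurements, x0=0.0, p0=1.0, a=1.0, q=0.01, r=0.25):
    """Scalar Kalman filter; a measurement of None means nothing arrived."""
    x, p = x0, p0
    history = []
    for z in measurements:
        # Prediction: always executed, so uncertainty grows during dropouts.
        x = a * x
        p = a * p * a + q
        if z is not None:
            # Correction: executed only when a measurement is available.
            k = p / (p + r)
            x = x + k * (z - x)
            p = (1.0 - k) * p
        history.append((x, p))
    return history


# Hypothetical reading sequence with gaps (None marks a missing measurement).
readings = [1.0, None, None, 1.2, None, 0.9]
track = kalman_intermittent(readings)
for estimate, variance in track:
    print(round(estimate, 4), round(variance, 4))
```

In the printed trace, the variance rises on every step without a measurement and drops again as soon as one arrives, which is the behaviour an intermittent-observation filter relies on.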

DTAC-ADMM: Delay-Tolerant Augmented Consensus ADMM-based Algorithm for Distributed Resource Allocation

Aug 30, 2022

Abstract:Latency is inherent in almost all real-world networked applications. In this paper, we propose a distributed allocation strategy over multi-agent networks with delayed communications. The state of each agent (or node) represents its share of assigned resources out of a fixed amount (equal to overall demand). Every node locally updates its state toward optimizing a global allocation cost function via received information of its neighbouring nodes even when the data exchange over the network is heterogeneously delayed at different links. The update is based on the alternating direction method of multipliers (ADMM) formulation subject to both sum-preserving coupling-constraint and local box-constraints. The solution is derivative-free and holds for general (not necessarily differentiable) convex cost models. We use the notion of augmented consensus over undirected networks to model delayed information exchange and for convergence analysis. We simulate our delay-tolerant algorithm for

Linear TDOA-based Measurements for Distributed Estimation and Localized Tracking

Apr 26, 2022

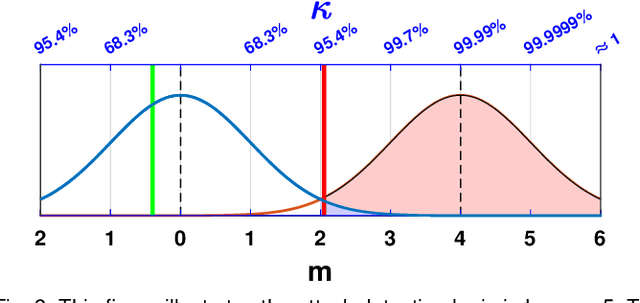

Abstract:We propose a linear time-difference-of-arrival (TDOA) measurement model to improve distributed estimation performance for localized target tracking. We design distributed filters over sparse (possibly large-scale) communication networks using consensus-based data-fusion techniques. The proposed distributed and localized tracking protocols considerably reduce the sensor network's required connectivity and communication rate. We further consider $\kappa$-redundant observability and fault-tolerant design in case of losing communication links or sensor nodes. We present the minimal conditions on the remaining sensor network (after link/node removal) such that the distributed observability is still preserved and, thus, the sensor network can track the (single) maneuvering target. The motivation is to reduce the communication load versus the processing load, as the computational units are, in general, less costly than the communication devices. We evaluate the tracking performance via simulations in MATLAB.

Distributed Anomaly Detection and Estimation over Sensor Networks: Observational-Equivalence and Q-Redundant Observer Design

Apr 04, 2022

Abstract:In this paper, we study stateless and stateful physics-based anomaly detection scenarios via distributed estimation over sensor networks. In the stateful case, the detector keeps track of the sensor residuals (i.e., the difference of estimated and true outputs) and reports an alarm if certain statistics of the recorded residuals exceed a predefined threshold, e.g., the $\chi^2$ (Chi-square) detector. In the stateless case, by contrast, only an instantaneous deviation of the residuals raises the alarm, without considering the history of the sensor outputs and estimation data. Given an (approximate) false-alarm rate for both cases, we propose a probabilistic threshold design based on the noise statistics. We show by simulation that increasing the window length in the stateful case may not necessarily reduce the false-alarm rate. On the other hand, it adds unwanted delay to raise the alarm. The distributed aspect of the proposed detection algorithm enables local isolation of the faulty sensors with possible recovery solutions by adding redundant observationally-equivalent sensors. We then offer a mechanism to design Q-redundant distributed observers, robust to failure (or removal) of up to Q sensors over the network.
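The difference between the stateless and stateful detectors can be illustrated with a small sketch. It assumes scalar, zero-mean Gaussian residuals with known standard deviation `sigma`, and it uses tabulated 95% chi-square quantiles (3.841 for 1 degree of freedom, 11.070 for 5) as thresholds; the threshold design in the paper may differ, and all names and sample residuals here are hypothetical.

```python
from collections import deque


def stateless_alarm(residual, sigma, threshold):
    """Alarm on the instantaneous normalized squared residual only."""
    return (residual / sigma) ** 2 > threshold


class StatefulChiSquareDetector:
    """Alarm on the sum of the last `window` normalized squared residuals."""

    def __init__(self, window, sigma, threshold):
        self.sigma = sigma
        self.threshold = threshold
        self.buffer = deque(maxlen=window)  # oldest entries drop out automatically

    def update(self, residual):
        self.buffer.append((residual / self.sigma) ** 2)
        # Decide only once a full window of residuals has been recorded.
        full = len(self.buffer) == self.buffer.maxlen
        return full and sum(self.buffer) > self.threshold


# Hypothetical residuals: a persistent moderate bias starts at the fourth sample.
residuals = [0.1, -0.2, 0.15, 1.6, 1.7, 1.5, 1.8, 1.6]
stateless = [stateless_alarm(r, sigma=1.0, threshold=3.841) for r in residuals]
detector = StatefulChiSquareDetector(window=5, sigma=1.0, threshold=11.070)
stateful = [detector.update(r) for r in residuals]
print(stateless)
print(stateful)
```

In this example no single residual is large enough to trip the stateless test, while the windowed statistic eventually does, but only several samples after the bias begins. That lag is the detection delay the abstract attributes to longer windows.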

Distributed Finite-Sum Constrained Optimization subject to Nonlinearity on the Node Dynamics

Mar 28, 2022

Abstract:Motivated by recent developments in networking and parallel data-processing, we consider a distributed and localized finite-sum (or fixed-sum) allocation technique to solve resource-constrained convex optimization problems over multi-agent networks (MANs). Such networks include (smart) agents, each representing an intelligent entity capable of communication, processing, and decision-making. In particular, we consider problems subject to practical nonlinear constraints on the dynamics of the agents in terms of their communications and actuation capabilities (referred to as the node dynamics), e.g., networks of mobile robots subject to actuator saturation and quantized communication. The considered distributed sum-preserving optimization solution further enables adding purposeful nonlinear constraints, for example, sign-based nonlinearities, to reach convergence in predefined time or to be robust to impulsive noise and disturbances in faulty environments. Moreover, convergence can be achieved under minimal network connectivity requirements among the agents; thus, the solution is applicable over dynamic networks where the channels come and go due to the agents' mobility and limited range. This paper discusses how various nonlinearity constraints on the optimization problem (e.g., collaborative allocation of resources) can be addressed for different applications via a distributed setup (over a network).
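A sum-preserving update with an optional nonlinearity on the exchanged information can be sketched as follows. This is a generic pairwise gradient-difference scheme over an undirected graph, written under assumed quadratic costs, a fixed step size, and no box constraints; it is an illustration of the idea, not the algorithm of the paper, and all names are hypothetical.

```python
def sum_preserving_allocation(x0, grads, edges, step=0.05, iterations=2000,
                              nonlinearity=lambda d: d):
    """Pairwise gradient-difference updates that keep sum(x) constant."""
    x = list(x0)
    for _ in range(iterations):
        g = [grad(xi) for grad, xi in zip(grads, x)]
        delta = [0.0] * len(x)
        for i, j in edges:
            # Whatever node i gives up over edge (i, j), node j receives,
            # so the total allocation never changes.
            flow = step * nonlinearity(g[i] - g[j])
            delta[i] -= flow
            delta[j] += flow
        x = [xi + di for xi, di in zip(x, delta)]
    return x


# Hypothetical quadratic costs f_i(x) = 0.5 * a_i * (x - c_i)^2 on a 4-node path.
a = [1.0, 2.0, 1.5, 1.0]
c = [3.0, 1.0, 2.0, 4.0]
grads = [lambda x, ai=ai, ci=ci: ai * (x - ci) for ai, ci in zip(a, c)]
edges = [(0, 1), (1, 2), (2, 3)]
x0 = [2.0, 2.0, 2.0, 2.0]  # total resource to be shared: 8

x = sum_preserving_allocation(x0, grads, edges)
marginals = [grad(xi) for grad, xi in zip(grads, x)]
print(x, sum(x))
print(marginals)  # marginal costs equalize at the constrained optimum
```

Passing an odd, sign-preserving function as `nonlinearity` (for instance a saturation such as `lambda d: max(-1.0, min(1.0, d))`) leaves the total unchanged, because the same flow is still subtracted at one end of each edge and added at the other.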

Distributed Detection and Mitigation of Biasing Attacks over Multi-Agent Networks

Sep 20, 2021

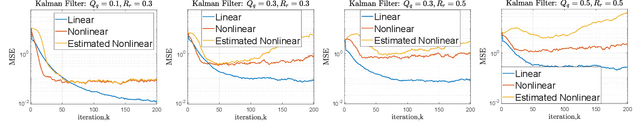

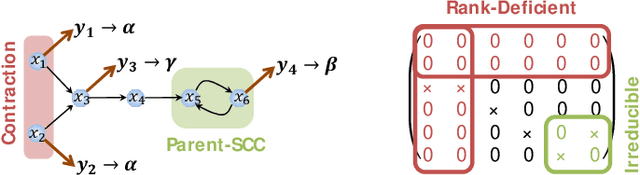

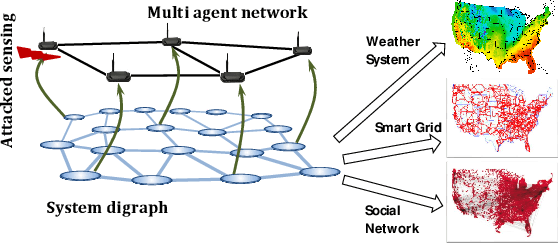

Abstract:This paper proposes a distributed attack detection and mitigation technique based on distributed estimation over a multi-agent network, where the agents take partial system measurements susceptible to (possible) biasing attacks. In particular, we assume that the system is not locally observable via the measurements in the direct neighborhood of any agent. First, for performance analysis in the attack-free case, we show that the proposed distributed estimation is unbiased with bounded mean-square deviation in steady-state. Then, we propose a residual-based strategy to locally detect possible attacks at agents. In contrast to the deterministic thresholds in the literature assuming an upper bound on the noise support, we define the thresholds on the residuals in a probabilistic sense. After detecting and isolating the attacked agent, a system-digraph-based mitigation strategy is proposed to replace the attacked measurement with a new observationally-equivalent one to recover potential observability loss. We adopt a graph-theoretic method to classify the agents based on their measurements, to distinguish between the agents recovering the system rank-deficiency and the ones recovering output-connectivity of the system digraph. The attack detection/mitigation strategy is specifically described for each type, which is of polynomial-order complexity for large-scale applications. Illustrative simulations support our theoretical results.
