Tanmay Jain

Limited Data, Unlimited Potential: A Study on ViTs Augmented by Masked Autoencoders

Oct 31, 2023

Abstract: Vision Transformers (ViTs) have become ubiquitous in computer vision. Despite their success, ViTs lack inductive biases, which can make it difficult to train them with limited data. To address this challenge, prior studies suggest pre-training ViTs with self-supervised learning (SSL) and then fine-tuning them sequentially. However, we observe that jointly optimizing ViTs for the primary task and a Self-Supervised Auxiliary Task (SSAT) is surprisingly beneficial when the amount of training data is limited. We explore which SSL tasks can be optimized alongside the primary task, the training schemes for these tasks, and the data scale at which they are most effective. Our findings reveal that SSAT is a powerful technique that enables ViTs to leverage the unique characteristics of both the self-supervised and primary tasks, achieving better performance than the typical approach of pre-training ViTs with SSL and then fine-tuning. Our experiments, conducted on 10 datasets, demonstrate that SSAT significantly improves ViT performance while reducing carbon footprint. We also confirm the effectiveness of SSAT in the video domain for deepfake detection, showcasing its generalizability. Our code is available at https://github.com/dominickrei/Limited-data-vits.

Latency-Memory Optimized Splitting of Convolution Neural Networks for Resource Constrained Edge Devices

Jul 19, 2021

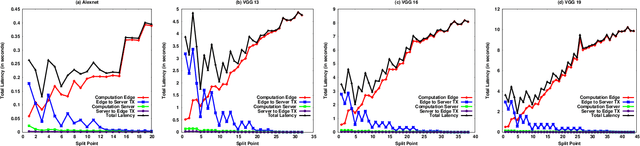

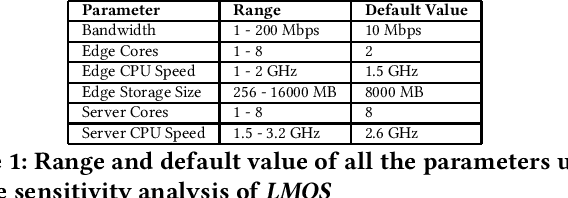

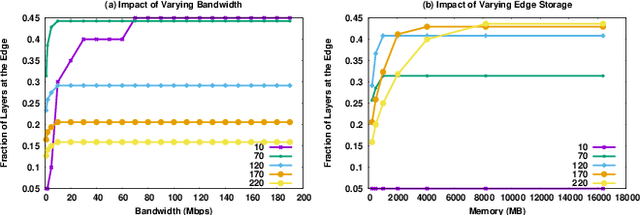

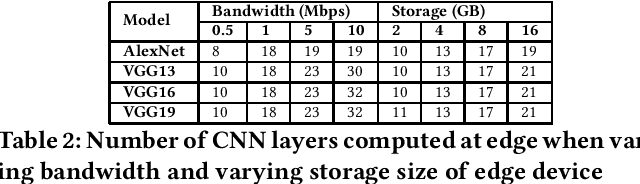

Abstract: With users relying increasingly on smart devices, bringing essential computation to the edge has become a crucial requirement for any type of business. Many such computations use Convolution Neural Networks (CNNs) to perform AI tasks, and their high resource and computation requirements make them infeasible to run entirely on edge devices. Splitting the CNN architecture so that part of the computation runs on the edge and the remainder in the cloud is an area of research that has seen increasing interest. In this paper, we assert that running CNNs between an edge device and the cloud is equivalent to solving a resource-constrained optimization problem that minimizes latency and maximizes resource utilization at the edge. We formulate a multi-objective optimization problem and propose the LMOS algorithm to achieve a Pareto-efficient solution. Experiments on real-world edge devices show that LMOS ensures feasible execution of different CNN models at the edge and also improves upon existing state-of-the-art approaches.
