Rajeev Shorey

SmartSplit: Latency-Energy-Memory Optimisation for CNN Splitting on Smartphone Environment

Nov 01, 2021

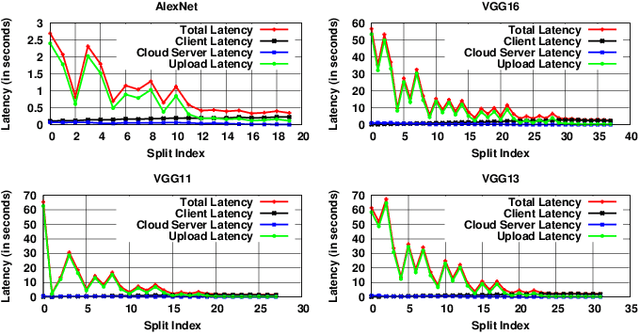

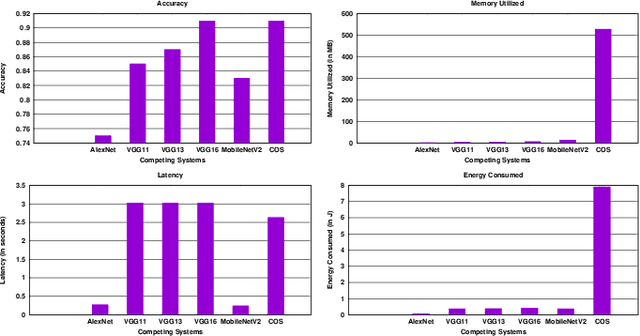

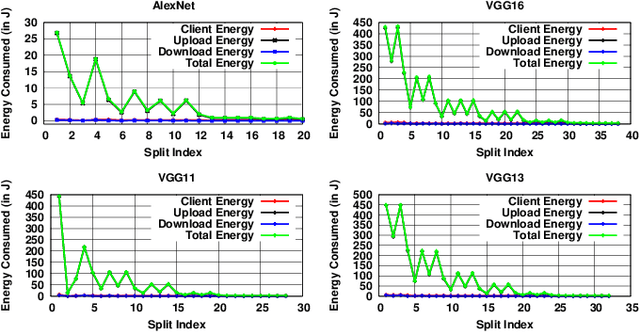

Abstract: Artificial Intelligence has taken centre stage in the smartphone industry owing to the need to bring processing close to the user and to address privacy concerns. Convolution Neural Networks (CNNs), which are used by several AI applications, are highly resource and computation intensive. Although new-generation smartphones come with AI-enabled chips, minimal memory and energy utilisation is essential because many applications run concurrently on a smartphone. In light of this, optimising the workload on the smartphone by offloading part of the processing to a cloud server is an important direction of research. In this paper, we analyse the feasibility of splitting CNNs between a smartphone and a cloud server by formulating a multi-objective optimisation problem that optimises end-to-end latency, memory utilisation, and energy consumption. We design SmartSplit, an approach based on a Genetic Algorithm with decision analysis, to solve the optimisation problem. Our experiments with multiple CNN models show that splitting a CNN between a smartphone and a cloud server is feasible. The proposed approach, SmartSplit, fares better than other state-of-the-art approaches.
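To make the three objectives concrete, the following is a minimal sketch of how latency, energy, and memory could be tabulated for every candidate split point of a sequential CNN. It is not the SmartSplit algorithm (which the abstract describes as a Genetic Algorithm with decision analysis); it simply enumerates splits. The `Layer` fields, the function name `split_costs`, and the linear cost model (constant uplink rate, constant power draw) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    # All fields are hypothetical per-layer profiling numbers.
    device_ms: float   # execution time on the smartphone
    cloud_ms: float    # execution time on the cloud server
    output_kb: float   # size of the layer's output activation
    memory_mb: float   # memory needed to host the layer on the phone


def split_costs(layers, input_kb, uplink_kb_per_ms, device_mw, radio_mw):
    """Return (split, latency_ms, energy_mj, memory_mb) for each split index.

    A split index k means layers[:k] run on the phone and layers[k:] run
    in the cloud. Assumes a constant uplink rate and constant power draw.
    """
    results = []
    for k in range(len(layers) + 1):
        on_device, on_cloud = layers[:k], layers[k:]
        compute_ms = sum(l.device_ms for l in on_device)

        # Data uploaded: nothing if everything runs locally, the raw input
        # if everything is offloaded, else the last on-device activation.
        if k == len(layers):
            upload_kb = 0.0
        elif k == 0:
            upload_kb = input_kb
        else:
            upload_kb = layers[k - 1].output_kb
        transfer_ms = upload_kb / uplink_kb_per_ms

        latency_ms = compute_ms + transfer_ms + sum(l.cloud_ms for l in on_cloud)
        # mW * ms = microjoules; divide by 1000 to report millijoules.
        energy_mj = (device_mw * compute_ms + radio_mw * transfer_ms) / 1000.0
        memory_mb = sum(l.memory_mb for l in on_device)
        results.append((k, latency_ms, energy_mj, memory_mb))
    return results
```

Under this toy model, an early split tends to lower on-device memory and compute energy but raises transfer time when early activations are large, which is the tension a multi-objective formulation has to resolve.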

FedFm: Towards a Robust Federated Learning Approach For Fault Mitigation at the Edge Nodes

Nov 01, 2021

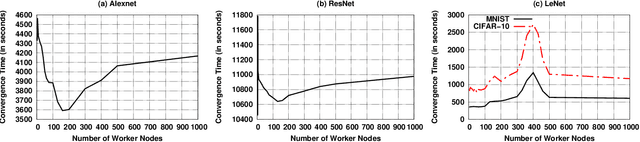

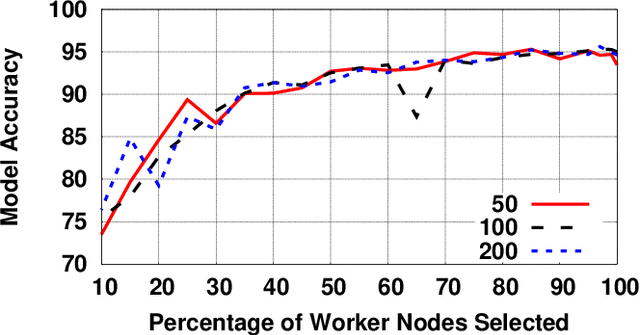

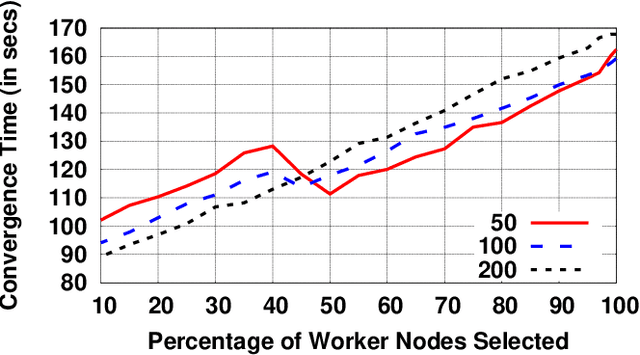

Abstract: Federated Learning (FL) shifts the norm from "send data to model" to "send model to data". When used in an edge ecosystem, the training process involves numerous heterogeneous edge devices that collect data through different means and are connected through different network channels. Failure of edge devices in such an ecosystem, due to device faults or network issues, is highly likely. In this paper, we first analyse the impact of the number of edge devices on an FL model and provide a strategy to select an optimal number of devices that would contribute to the model. We then observe how the edge ecosystem behaves when the selected devices fail and provide a mitigation strategy to ensure a robust Federated Learning technique.
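As a rough illustration of why device failure matters in a training round, the sketch below shows a standard sample-weighted average of client model updates (in the style of federated averaging) that simply skips devices that did not report. This is an assumed baseline for illustration only; the abstract does not describe FedFm's actual mitigation strategy, and the function name `federated_average` and the use of `None` to mark a failed device are hypothetical.

```python
def federated_average(updates, sample_counts):
    """Average client weight vectors, weighted by local sample count.

    `updates` holds one list of floats per selected device, or None when
    that device failed or its update was lost (an assumed convention).
    """
    alive = [(u, n) for u, n in zip(updates, sample_counts) if u is not None]
    if not alive:
        raise ValueError("no client update arrived this round")

    total = sum(n for _, n in alive)
    dim = len(alive[0][0])
    # Each coordinate is the sample-weighted mean over surviving devices.
    return [sum(u[i] * n for u, n in alive) / total for i in range(dim)]
```

Note that when a device holding many samples drops out, the surviving devices' weights are renormalised, so the aggregate can shift noticeably toward the remaining data. That sensitivity is one reason the number and choice of participating devices matters.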

Latency-Memory Optimized Splitting of Convolution Neural Networks for Resource Constrained Edge Devices

Jul 19, 2021

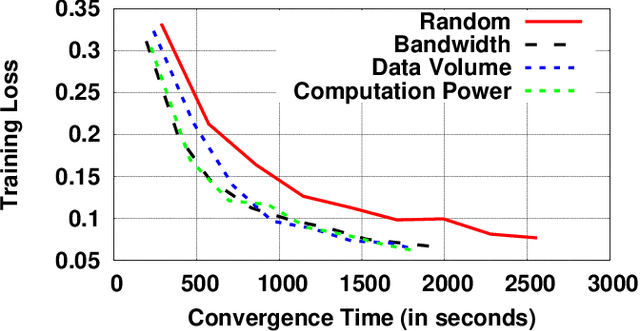

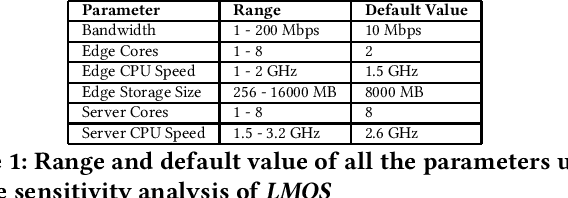

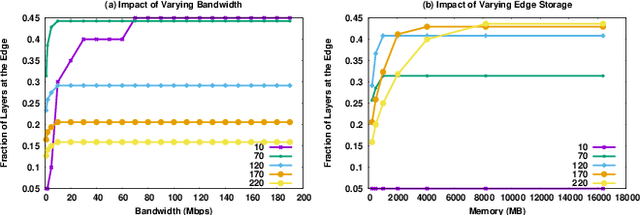

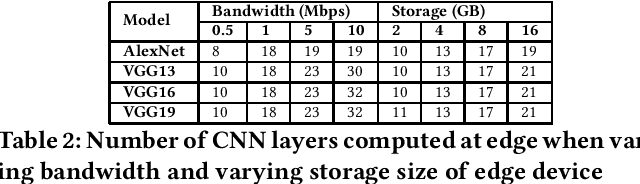

Abstract: With users' increasing reliance on smart devices, bringing essential computation to the edge has become a crucial requirement for any type of business. Many such computations use Convolution Neural Networks (CNNs) to perform AI tasks, and their high resource and computation requirements make them infeasible for edge devices. Splitting the CNN architecture so that part of the computation runs on the edge and the remainder on the cloud is an area of research that has seen increasing interest. In this paper, we assert that running CNNs between an edge device and the cloud is equivalent to solving a resource-constrained optimization problem that minimizes latency and maximizes resource utilization at the edge. We formulate a multi-objective optimization problem and propose the LMOS algorithm to achieve a Pareto-efficient solution. Experiments on real-world edge devices show that LMOS ensures feasible execution of different CNN models at the edge and also improves upon existing state-of-the-art approaches.
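For readers unfamiliar with the term, a Pareto-efficient solution is one that no other candidate beats on every objective at once. The sketch below filters a list of candidate splits down to the non-dominated ones, using the two objectives named in the abstract (lower latency, higher edge utilization). It is a generic brute-force filter, not the LMOS algorithm, and the tuple layout and the name `pareto_front` are assumptions for illustration.

```python
def pareto_front(candidates):
    """Keep candidates not dominated by any other candidate.

    Each candidate is (name, latency_ms, utilization); lower latency and
    higher utilization are better. Runs in O(n^2), fine for small sets.
    """
    def dominates(a, b):
        # a is at least as good on both objectives and strictly better on one.
        no_worse = a[1] <= b[1] and a[2] >= b[2]
        strictly_better = a[1] < b[1] or a[2] > b[2]
        return no_worse and strictly_better

    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]
```

The result is usually a set rather than a single point, so a further selection rule is still needed to pick one split from the front.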
