Taiyi Wang

A New Paradigm in Tuning Learned Indexes: A Reinforcement Learning Enhanced Approach

Feb 07, 2025

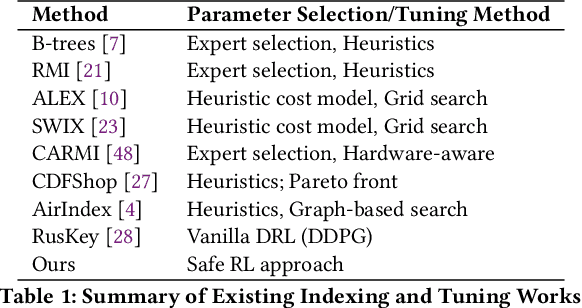

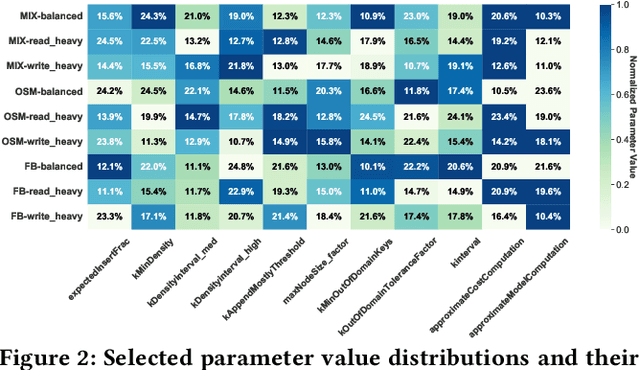

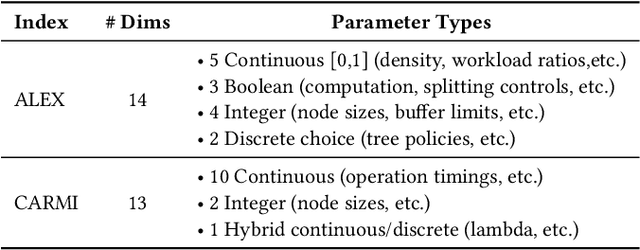

Abstract:Learned Index Structures (LIS) have significantly advanced data management by leveraging machine learning models to optimize data indexing. However, designing these structures often involves critical trade-offs, making it challenging for both designers and end-users to find an optimal balance tailored to specific workloads and scenarios. While some indexes offer adjustable parameters that demand intensive manual tuning, others rely on fixed configurations based on heuristic auto-tuners or expert knowledge, which may not consistently deliver optimal performance. This paper introduces LITune, a novel framework for end-to-end automatic tuning of Learned Index Structures. LITune employs an adaptive training pipeline equipped with a tailor-made Deep Reinforcement Learning (DRL) approach to ensure stable and efficient tuning. To accommodate long-term dynamics arising from online tuning, we further enhance LITune with an on-the-fly updating mechanism termed the O2 system. These innovations allow LITune to effectively capture state transitions in online tuning scenarios and dynamically adjust to changing data distributions and workloads, marking a significant improvement over other tuning methods. Our experimental results demonstrate that LITune achieves up to a 98% reduction in runtime and a 17-fold increase in throughput compared to default parameter settings given a selected Learned Index instance. These findings highlight LITune's effectiveness and its potential to facilitate broader adoption of LIS in real-world applications.

OCMDP: Observation-Constrained Markov Decision Process

Nov 12, 2024Abstract:In many practical applications, decision-making processes must balance the costs of acquiring information with the benefits it provides. Traditional control systems often assume full observability, an unrealistic assumption when observations are expensive. We tackle the challenge of simultaneously learning observation and control strategies in such cost-sensitive environments by introducing the Observation-Constrained Markov Decision Process (OCMDP), where the policy influences the observability of the true state. To manage the complexity arising from the combined observation and control actions, we develop an iterative, model-free deep reinforcement learning algorithm that separates the sensing and control components of the policy. This decomposition enables efficient learning in the expanded action space by focusing on when and what to observe, as well as determining optimal control actions, without requiring knowledge of the environment's dynamics. We validate our approach on a simulated diagnostic task and a realistic healthcare environment using HeartPole. Given both scenarios, the experimental results demonstrate that our model achieves a substantial reduction in observation costs on average, significantly outperforming baseline methods by a notable margin in efficiency.

HiBO: Hierarchical Bayesian Optimization via Adaptive Search Space Partitioning

Oct 31, 2024

Abstract:Optimizing black-box functions in high-dimensional search spaces has been known to be challenging for traditional Bayesian Optimization (BO). In this paper, we introduce HiBO, a novel hierarchical algorithm integrating global-level search space partitioning information into the acquisition strategy of a local BO-based optimizer. HiBO employs a search-tree-based global-level navigator to adaptively split the search space into partitions with different sampling potential. The local optimizer then utilizes this global-level information to guide its acquisition strategy towards most promising regions within the search space. A comprehensive set of evaluations demonstrates that HiBO outperforms state-of-the-art methods in high-dimensional synthetic benchmarks and presents significant practical effectiveness in the real-world task of tuning configurations of database management systems (DBMSs).

DistRL: An Asynchronous Distributed Reinforcement Learning Framework for On-Device Control Agents

Oct 18, 2024

Abstract:On-device control agents, especially on mobile devices, are responsible for operating mobile devices to fulfill users' requests, enabling seamless and intuitive interactions. Integrating Multimodal Large Language Models (MLLMs) into these agents enhances their ability to understand and execute complex commands, thereby improving user experience. However, fine-tuning MLLMs for on-device control presents significant challenges due to limited data availability and inefficient online training processes. This paper introduces DistRL, a novel framework designed to enhance the efficiency of online RL fine-tuning for mobile device control agents. DistRL employs centralized training and decentralized data acquisition to ensure efficient fine-tuning in the context of dynamic online interactions. Additionally, the framework is backed by our tailor-made RL algorithm, which effectively balances exploration with the prioritized utilization of collected data to ensure stable and robust training. Our experiments show that, on average, DistRL delivers a 3X improvement in training efficiency and enables training data collection 2.4X faster than the leading synchronous multi-machine methods. Notably, after training, DistRL achieves a 20% relative improvement in success rate compared to state-of-the-art methods on general Android tasks from an open benchmark, significantly outperforming existing approaches while maintaining the same training time. These results validate DistRL as a scalable and efficient solution, offering substantial improvements in both training efficiency and agent performance for real-world, in-the-wild device control tasks.

IA2: Leveraging Instance-Aware Index Advisor with Reinforcement Learning for Diverse Workloads

Apr 10, 2024

Abstract:This study introduces the Instance-Aware Index Advisor (IA2), a novel deep reinforcement learning (DRL)-based approach for optimizing index selection in databases facing large action spaces of potential candidates. IA2 introduces the Twin Delayed Deep Deterministic Policy Gradient - Temporal Difference State-Wise Action Refinery (TD3-TD-SWAR) model, enabling efficient index selection by understanding workload-index dependencies and employing adaptive action masking. This method includes a comprehensive workload model, enhancing its ability to adapt to unseen workloads and ensuring robust performance across diverse database environments. Evaluation on benchmarks such as TPC-H reveals IA2's suggested indexes' performance in enhancing runtime, securing a 40% reduction in runtime for complex TPC-H workloads compared to scenarios without indexes, and delivering a 20% improvement over existing state-of-the-art DRL-based index advisors.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge