Syed Rafay Hasan

System Integration of Xilinx DPU and HDMI for Real-Time inference in PYNQ Environment with Image Enhancement

Dec 18, 2023

Abstract: Use of edge computing in applications of Computer Vision (CV) is an active field of research. Today, most CV applications make use of Convolutional Neural Networks (CNNs) to run inference on and interpret video data. These edge devices are responsible for several CV-related tasks, such as gathering, processing and enhancing, running inference on, and displaying video data. Due to ease of reconfiguration, computation on FPGA fabric is used to achieve such complex computation tasks. Xilinx provides the PYNQ environment as a user-friendly interface that facilitates hardware/software system integration. However, to the best of the authors' knowledge, there is no end-to-end framework available for the PYNQ environment that allows hardware/software system integration and deployment of CNNs for a real-time input feed from High-Definition Multimedia Interface (HDMI) input to HDMI output, along with insertion of customized hardware IPs. In this work we propose an integration of a reaL-time image Enhancement IP with an AI inferencing engine in the Pynq environment (LEAP), which integrates HDMI, AI acceleration, and image enhancement in the PYNQ environment for Xilinx's Multiprocessor System-on-Chip (MPSoC) platform. We evaluate our methodology with two well-known CNN models, ResNet50 and YOLOv3. To validate the proposed methodology, LEAP, a simple image enhancement algorithm, histogram equalization, is designed and integrated in the FPGA fabric along with Xilinx's Deep Learning Processor Unit (DPU). Our results show successful implementation of end-to-end integration using completely open-source information.

A Case Study of Image Enhancement Algorithms' Effectiveness of Improving Neural Networks' Performance on Adverse Images

Dec 18, 2023

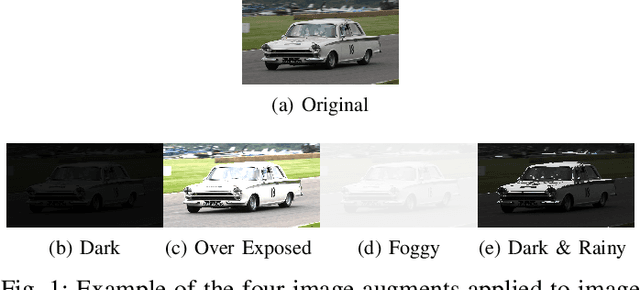

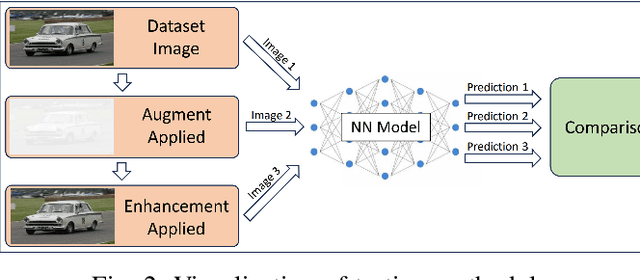

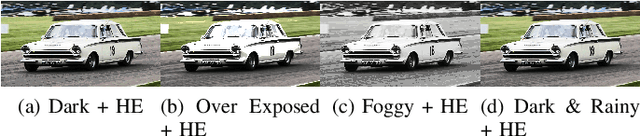

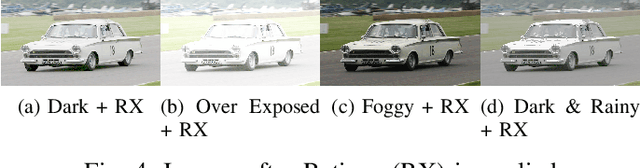

Abstract: Neural Networks (NNs) have become indispensable for applications of Computer Vision (CV), and their use has been ever-growing. NNs are commonly trained for long periods of time on datasets like ImageNet and COCO that have been carefully created to represent common "real-world" environments. When deployed in the field, such as in autonomous vehicle applications, NNs can encounter adverse scenarios that degrade performance. Using image enhancement algorithms to enhance images before inference on an NN model poses an intriguing alternative to retraining; however, published literature on the effectiveness of this solution is scarce. To fill this knowledge gap, we provide a case study on two popular image enhancement algorithms, Histogram Equalization (HE) and Retinex (RX). We simulate four types of adverse scenarios an autonomous vehicle could encounter: dark, overexposed, foggy, and dark & rainy weather conditions. We evaluate the effectiveness of HE and RX using several well-established models: ResNet, GoogLeNet, YOLO, and a Vision Transformer.
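As an illustration of the Histogram Equalization (HE) step named in the abstract, the following is a minimal sketch of textbook global HE on an 8-bit grayscale image. The abstract does not say which HE variant the authors use, so the function name `equalize_histogram`, the list-of-rows image representation, and the rounding choice are assumptions made for this example only.

```python
def equalize_histogram(image, levels=256):
    """Textbook global histogram equalization (illustrative sketch).

    `image` is a non-empty list of rows, each a list of ints in [0, levels).
    Returns a new image whose intensities are spread over the full range.
    """
    pixels = [p for row in image for p in row]

    # Count how often each intensity occurs.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution of intensities.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest occupied level
    n = len(pixels)
    if n == cdf_min:
        # Constant image: nothing to stretch, return a copy.
        return [row[:] for row in image]

    # Map each intensity so that the CDF becomes approximately linear.
    scale = (levels - 1) / (n - cdf_min)
    lut = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [[lut[p] for p in row] for row in image]


# A low-contrast 2x2 patch is stretched to the full 0..255 range.
dark_patch = [[50, 50], [51, 52]]
enhanced = equalize_histogram(dark_patch)
```

The lookup table is built once per image and then applied per pixel, which is the usual structure for this kind of enhancement.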

Towards Enabling Dynamic Convolution Neural Network Inference for Edge Intelligence

Feb 18, 2022

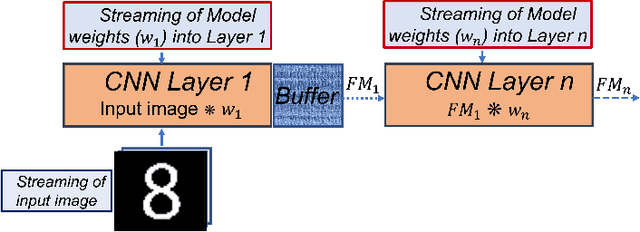

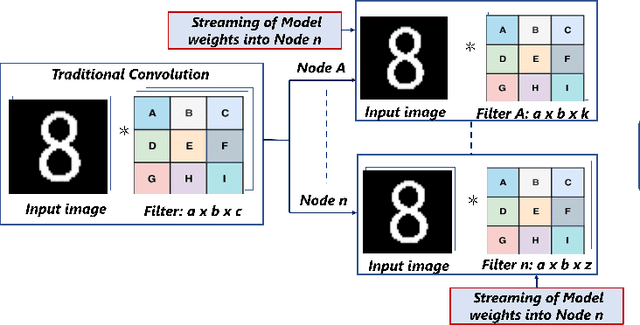

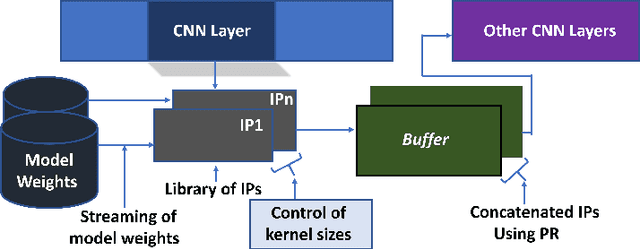

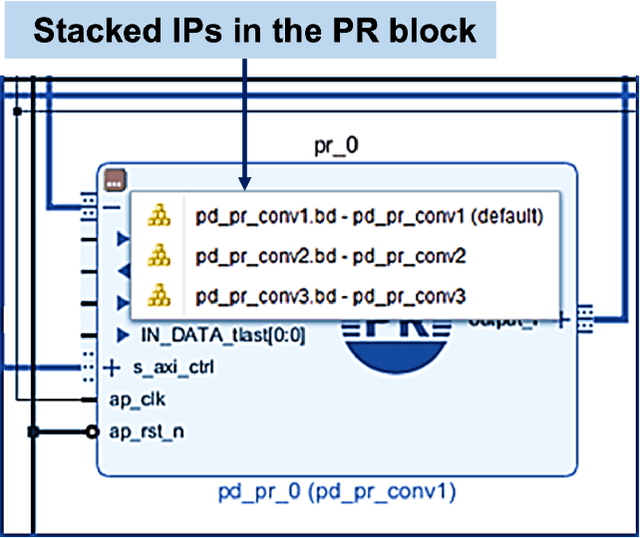

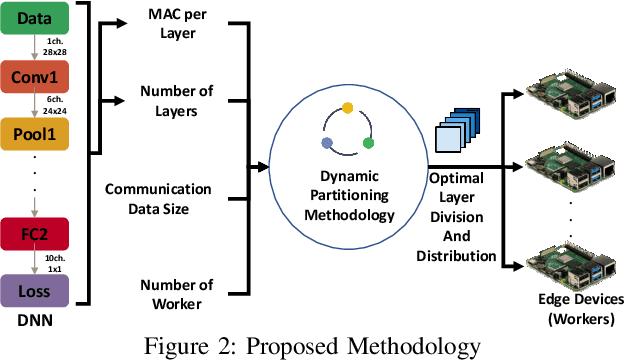

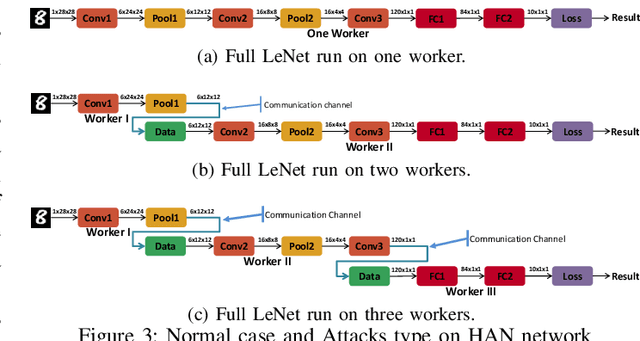

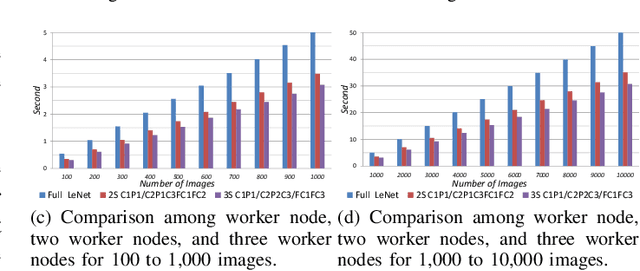

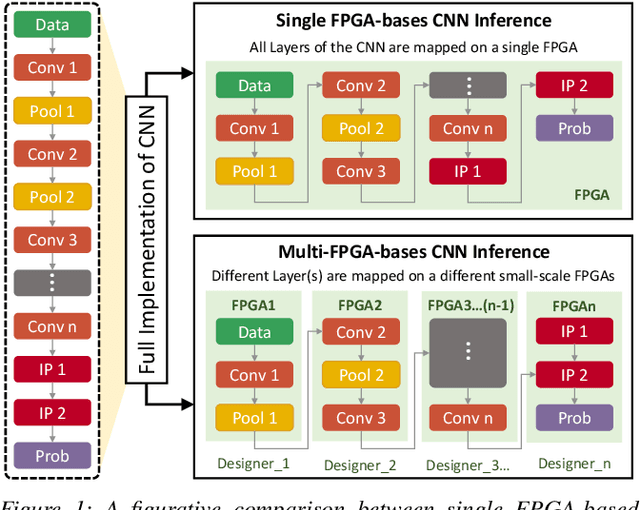

Abstract: Deep learning applications have achieved great success in numerous real-world applications. Deep learning models, especially Convolutional Neural Networks (CNNs), are often prototyped on FPGAs because they offer high power efficiency and reconfigurability. The deployment of CNNs on FPGAs follows a design cycle that requires saving model parameters in on-chip memory during High-Level Synthesis (HLS). Recent advances in edge intelligence require CNN inference on the edge network to increase throughput and reduce latency. To provide flexibility, dynamic parameter allocation to different mobile devices is required to implement either a predefined or defined on-the-fly CNN architecture. In this study, we present novel methodologies for dynamically streaming the model parameters at run-time to implement a traditional CNN architecture. We further propose a library-based approach to design scalable and dynamic distributed CNN inference on the fly, leveraging partial-reconfiguration techniques, which is particularly suitable for resource-constrained edge devices. The proposed techniques are implemented on the Xilinx PYNQ-Z2 board to prove the concept by utilizing the LeNet-5 CNN model. The results show that the proposed methodologies are effective, with classification accuracy rates of 92%, 86%, and 94%, respectively.

Security Analysis of Capsule Network Inference using Horizontal Collaboration

Sep 22, 2021

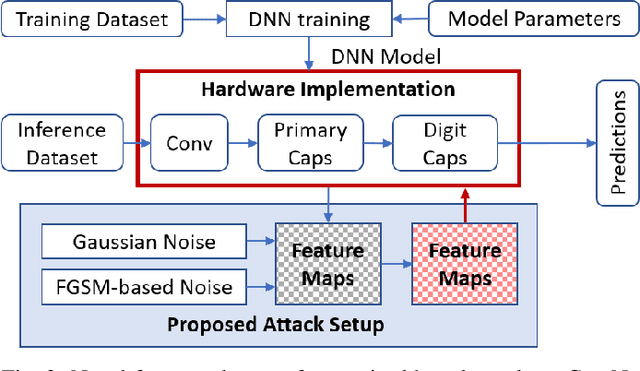

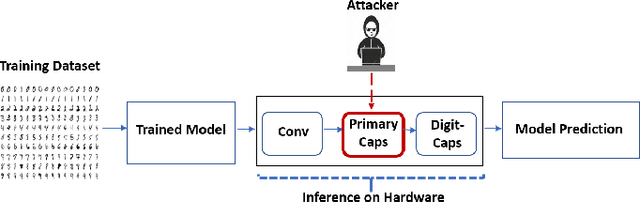

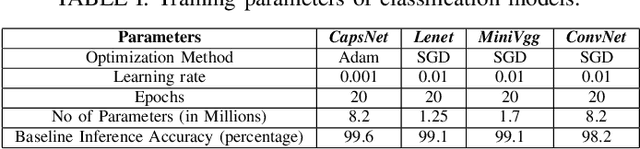

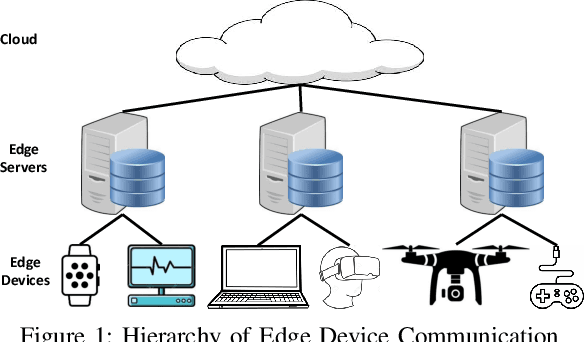

Abstract: Traditional convolutional neural networks (CNNs) have several drawbacks, such as the Picasso effect and the loss of information in the pooling layer. The Capsule network (CapsNet) was proposed to address these challenges because its architecture can encode and preserve the spatial orientation of input images. Similar to traditional CNNs, CapsNet is also vulnerable to several malicious attacks, as studied by several researchers in the literature. However, most of these studies focus on single-device-based inference, whereas horizontally collaborative inference in state-of-the-art systems, like intelligent edge services in self-driving cars, voice-controllable systems, and drones, nullifies most of these analyses. Horizontal collaboration implies partitioning the trained CNN models or CNN tasks across multiple end devices or edge nodes. Therefore, it is imperative to examine the robustness of the CapsNet against malicious attacks when deployed in horizontally collaborative environments. Towards this, we examine the robustness of the CapsNet when subjected to noise-based inference attacks in a horizontally collaborative environment. In this analysis, we perturbed the feature maps of the different layers of four DNN models, i.e., CapsNet, Mini-VGG, LeNet, and an in-house designed CNN (ConvNet) with the same number of parameters as CapsNet, using two types of noise-based attacks, i.e., the Gaussian Noise Attack and the FGSM noise attack. The experimental results show that, similar to traditional CNNs, depending upon the access of the attacker to the DNN layer, the classification accuracy of the CapsNet drops significantly. For example, when the Gaussian Noise Attack is performed at the DigitCap layer of the CapsNet, the maximum classification accuracy drop is approximately 97%.
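To make the idea of a noise-based attack on an intermediate feature map concrete, here is a minimal sketch of additive Gaussian perturbation. It is not the authors' implementation: the function name `add_gaussian_noise`, the 2-D list representation of a feature map, and the `sigma` and `seed` parameters are all hypothetical choices for illustration.

```python
import random


def add_gaussian_noise(feature_map, sigma, seed=None):
    """Return a copy of a 2-D feature map with zero-mean Gaussian noise added.

    `feature_map` is a list of rows of floats; `sigma` is the noise standard
    deviation. A seed makes the perturbation reproducible.
    """
    rng = random.Random(seed)
    return [[value + rng.gauss(0.0, sigma) for value in row]
            for row in feature_map]


# Perturb the activations that one device would hand to the next device.
activations = [[0.1, 0.5], [0.9, 0.3]]
perturbed = add_gaussian_noise(activations, sigma=0.2, seed=42)
```

In a horizontally partitioned deployment, a perturbation like this would be applied to the feature map exchanged between two nodes, and the downstream layers would then run on the perturbed values.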

Dynamic Distribution of Edge Intelligence at the Node Level for Internet of Things

Jul 13, 2021

Abstract: In this paper, a dynamic deployment of a Convolutional Neural Network (CNN) architecture is proposed utilizing only IoT-level devices. By partitioning and pipelining the CNN, it horizontally distributes the computation load among resource-constrained devices (called horizontal collaboration), which in turn increases the throughput. Through partitioning, we can decrease the computation and energy consumption on individual IoT devices and increase the throughput without sacrificing accuracy. Also, by processing the data at the generation point, data privacy can be achieved. The results show that throughput can be increased by 1.55x and 1.75x when the CNN is shared across two and three resource-constrained devices, respectively.
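The throughput gain from partitioning and pipelining can be sketched with a simple model: once the pipeline is full, throughput is limited by the slowest stage. The example below assumes this idealized model and ignores communication cost between devices; the function name `pipeline_speedup` and the per-layer latencies are hypothetical and are not taken from the paper.

```python
def pipeline_speedup(layer_latencies, cut_points):
    """Idealized throughput speedup of a pipelined CNN over one device.

    `layer_latencies` lists per-layer compute times on a single device.
    `cut_points` lists the layer indices where the network is split, so
    k cut points give k + 1 pipeline stages. Communication overhead
    between devices is ignored in this sketch.
    """
    bounds = [0] + sorted(cut_points) + [len(layer_latencies)]
    stage_times = [sum(layer_latencies[start:end])
                   for start, end in zip(bounds, bounds[1:])]
    # A single device needs the full sum per input; a full pipeline
    # emits one result every max(stage_times).
    return sum(layer_latencies) / max(stage_times)


latencies = [4.0, 3.0, 2.0, 1.0]               # hypothetical per-layer times
two_devices = pipeline_speedup(latencies, [1])     # stages: [4], [3, 2, 1]
three_devices = pipeline_speedup(latencies, [1, 2])  # stages: [4], [3], [2, 1]
```

Under this model the speedup stays below the number of devices whenever the stages are unbalanced, which is consistent with sub-linear gains such as those reported in the abstract.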

FeSHI: Feature Map Based Stealthy Hardware Intrinsic Attack

Jun 13, 2021

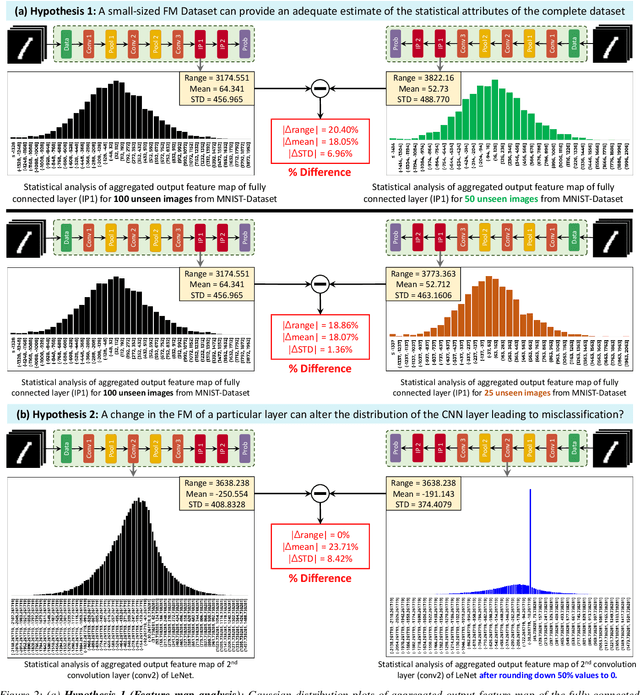

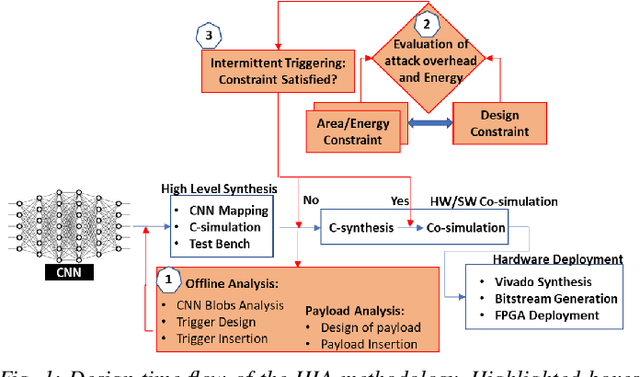

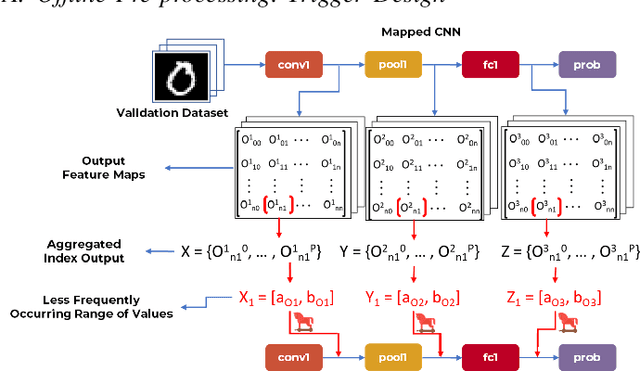

Abstract: Convolutional Neural Networks (CNNs) have shown impressive performance in computer vision, natural language processing, and many other applications, but they have high computational and substantial memory requirements. To address these limitations, especially in resource-constrained devices, the use of cloud computing for CNNs is becoming more popular. This comes with privacy and latency concerns that have motivated designers to develop embedded hardware accelerators for CNNs. However, designing a specialized accelerator increases the time-to-market and cost of production. Therefore, to reduce the time-to-market and gain access to state-of-the-art techniques, CNN hardware mapping and deployment on embedded accelerators are often outsourced to untrusted third parties, which is going to be more prevalent in futuristic artificial intelligence of things (AIoT) systems. These AIoT systems anticipate horizontal collaboration among different resource-constrained AIoT node devices, where CNN layers are partitioned and these devices collaboratively compute complex CNN tasks. Therefore, there is a dire need to explore this attack surface for designing secure embedded hardware accelerators for CNNs. Towards this goal, in this paper, we exploit this attack surface to propose a hardware Trojan (HT)-based attack called FeSHI. This attack exploits the statistical distribution, i.e., the Gaussian distribution, of the layer-by-layer feature maps of the CNN to design two triggers for a stealthy HT with a very low probability of triggering. To illustrate the effectiveness of the proposed attack, we deployed the LeNet and LeNet-3D on PYNQ to classify the MNIST and CIFAR-10 datasets, respectively, and tested FeSHI. The experimental results show that FeSHI utilizes up to 2% extra LUTs, and the overall resource overhead is less than 1% compared to the original designs.

SoWaF: Shuffling of Weights and Feature Maps: A Novel Hardware Intrinsic Attack on Convolutional Neural Network

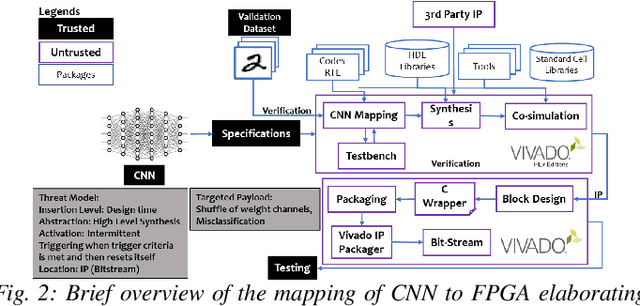

Mar 16, 2021

Abstract: Security of inference-phase deployment of Convolutional Neural Networks (CNNs) into resource-constrained embedded systems (e.g., low-end FPGAs) is a growing research area. Using secure practices, third-party FPGA designers can be provided with no knowledge of the initial and final classification layers. In this work, we demonstrate that a hardware intrinsic attack (HIA) in such a "secure" design is still possible. The proposed HIA is inserted inside the mathematical operations of individual layers of the CNN, which propagates erroneous operations through all the subsequent CNN layers and leads to misclassification. The attack is non-periodic and completely random, hence it becomes difficult to detect. Five different attack scenarios with respect to each CNN layer are designed and evaluated based on the overhead resources and the rate of triggering in comparison to the original implementation. Our results for two CNN architectures show that in all the attack scenarios, additional latency is negligible (<0.61%), and the increment in DSP, LUT, and FF usage is also less than 2.36%. Three attack scenarios do not require any additional BRAM resources, while in two scenarios BRAM usage increases, which is compensated by a corresponding decrease in FFs and LUTs. To the authors' best knowledge, this work is the first to address a hardware intrinsic CNN attack where the attacker does not have knowledge of the full CNN.

MacLeR: Machine Learning-based Run-Time Hardware Trojan Detection in Resource-Constrained IoT Edge Devices

Nov 21, 2020

Abstract: Traditional learning-based approaches for run-time Hardware Trojan detection require complex and expensive on-chip data acquisition frameworks and thus incur high area and power overhead. To address these challenges, we propose to leverage the power correlation between the executing instructions of a microprocessor to establish a machine learning-based run-time Hardware Trojan (HT) detection framework, called MacLeR. To reduce the overhead of data acquisition, we propose a single power-port current acquisition block using current sensors in time-division multiplexing, which increases accuracy while incurring reduced area overhead. We have implemented a practical solution by analyzing multiple HT benchmarks inserted in the RTL of a system-on-chip (SoC) consisting of four LEON3 processors integrated with other IPs like vga_lcd, RSA, AES, Ethernet, and memory controllers. Our experimental results show that compared to state-of-the-art HT detection techniques, MacLeR achieves 10% better HT detection accuracy (i.e., 96.256%) while incurring a 7x reduction in area and power overhead (i.e., 0.025% of the area of the SoC and <0.07% of the power of the SoC). In addition, we also analyze the impact of process variation and aging on the extracted power profiles and the HT detection accuracy of MacLeR. Our analysis shows that variations in fine-grained power profiles due to the HTs are significantly higher compared to the variations in fine-grained power profiles caused by the process variations (PV) and aging effects. Moreover, our analysis demonstrates that, on average, the HT detection accuracy drop in MacLeR is less than 1% and 9% when considering only PV and PV with worst-case aging, respectively, which is ~10x less than in the case of the state-of-the-art ML-based HT detection technique.

How Secure is Distributed Convolutional Neural Network on IoT Edge Devices?

Jun 16, 2020

Abstract: Convolutional Neural Networks (CNNs) have found successful adoption in many applications. The deployment of CNNs on resource-constrained edge devices has proved challenging. As a result, distributed deployment of CNNs across different edge devices has been adopted. In this paper, we propose Trojan attacks on CNNs deployed across different nodes of a distributed edge network. We propose five stealthy attack scenarios for distributed CNN inference. These attacks are divided into trigger and payload circuitry. These attacks are tested on deep learning models (LeNet, AlexNet). The results show the degree of vulnerability of individual layers and how critical they are to the final classification.

2L-3W: 2-Level 3-Way Hardware-Software Co-Verification for the Mapping of Deep Learning Architecture onto FPGA Boards

Nov 14, 2019

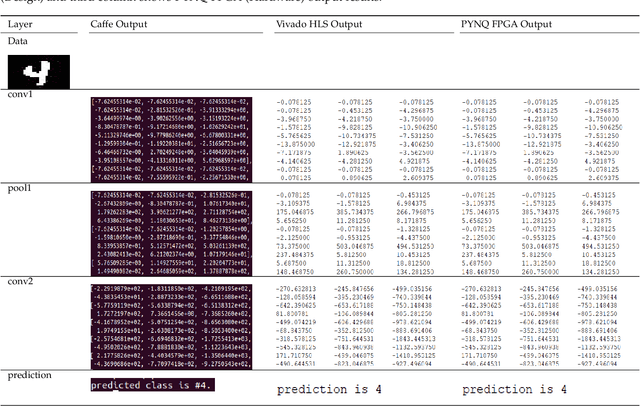

Abstract: FPGAs have become a popular choice for deploying deep learning architectures (DLA). Many researchers have explored the deployment and mapping of DLA on FPGAs. However, there has been a growing need for design-time hardware-software co-verification of these deployments. To the best of our knowledge, this is the first work that proposes a 2-Level 3-Way (2L-3W) hardware-software co-verification methodology and provides a step-by-step guide for the successful mapping, deployment, and verification of DLA on FPGA boards. The 2-Level verification makes sure that the implementation in each stage (software and hardware) follows the desired behavior. The 3-Way co-verification provides a cross-paradigm (software, design, and hardware) layer-by-layer parameter check to assure the correct implementation and mapping of the DLA onto FPGA boards. The proposed 2L-3W co-verification methodology has been evaluated over several test cases. In each case, the prediction and layer-by-layer output of the DLA deployed on the PYNQ FPGA board (hardware), the intermediate layer-by-layer output of the DLA implemented in Vivado HLS (design), and the prediction and layer-by-layer output at the software level (Caffe deep learning framework) are compared to obtain a layer-by-layer similarity score. The comparison is performed by a completely automated Python script. The similarity score indicates the degree of success of the DLA mapping to the FPGA, or helps identify at design time the layer to be debugged in the case of an unsuccessful mapping. We demonstrated our technique on the LeNet DLA and the Caffe-inspired Cifar-10 DLA, and the co-verification results yielded layer-by-layer similarity scores of 99% accuracy.
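The layer-by-layer comparison described in the abstract could look roughly like the sketch below. The abstract does not define how the similarity score is computed, so this example assumes one plausible definition: the fraction of output values that agree within a tolerance. The function names, the dictionary layout, and the tolerance value are hypothetical.

```python
def layer_similarity(reference, candidate, tolerance=1e-3):
    """Fraction of values in two flattened layer outputs that agree.

    Two values agree when they differ by at most `tolerance`.
    """
    if len(reference) != len(candidate):
        raise ValueError("layer outputs must have the same length")
    if not reference:
        return 1.0
    matches = sum(1 for r, c in zip(reference, candidate)
                  if abs(r - c) <= tolerance)
    return matches / len(reference)


def compare_layers(reference_outputs, candidate_outputs, tolerance=1e-3):
    """Score every layer; inputs map layer name -> flattened output list."""
    return {name: layer_similarity(values, candidate_outputs[name], tolerance)
            for name, values in reference_outputs.items()}


# Hypothetical outputs from a software model and from the board.
software = {"conv1": [0.10, 0.20, 0.30, 0.40], "fc": [1.0, 2.0]}
hardware = {"conv1": [0.10, 0.20, 0.30, 0.90], "fc": [1.0, 2.0]}
scores = compare_layers(software, hardware)
```

A low score on one layer with high scores on the layers before it points to that layer as the first place to debug, which matches the use the abstract describes for the score.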
