Jonathan Sanderson

A Case Study of Image Enhancement Algorithms' Effectiveness of Improving Neural Networks' Performance on Adverse Images

Dec 18, 2023

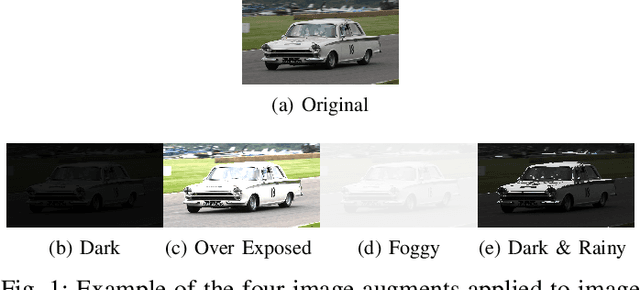

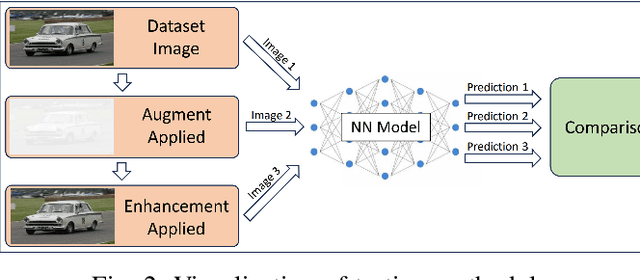

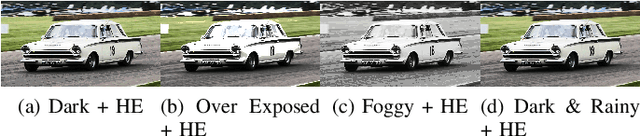

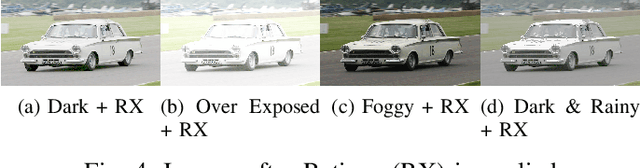

Abstract: Neural Networks (NNs) have become indispensable for Computer Vision (CV) applications, and their use continues to grow. NNs are commonly trained for long periods on datasets such as ImageNet and COCO, which have been carefully curated to represent common "real-world" environments. When deployed in the field, for example in autonomous vehicles, NNs can encounter adverse scenarios that degrade performance. Using image enhancement algorithms to enhance images before running inference on an NN model is an intriguing alternative to retraining; however, published literature on the effectiveness of this approach is scarce. To fill this knowledge gap, we provide a case study of two popular image enhancement algorithms, Histogram Equalization (HE) and Retinex (RX). We simulate four types of adverse scenarios an autonomous vehicle could encounter: dark, overexposed, foggy, and dark and rainy weather conditions. We evaluate the effectiveness of HE and RX using several well-established models: ResNet, GoogLeNet, YOLO, and a Vision Transformer.
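To make the enhance-before-inference idea concrete, here is a minimal NumPy sketch of global histogram equalization applied to an artificially darkened 8-bit grayscale image. It is an illustration only, not the authors' implementation: the `darken` helper, its `factor` parameter, and the random test image are assumptions, since the abstract does not say how the dark scenario was simulated.

```python
import numpy as np


def darken(image: np.ndarray, factor: float = 0.3) -> np.ndarray:
    """Hypothetical helper: simulate a dark scene by scaling intensities."""
    scaled = image.astype(np.float64) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


def equalize_histogram(image: np.ndarray) -> np.ndarray:
    """Global histogram equalization for an 8-bit grayscale image."""
    # Count how many pixels fall on each of the 256 intensity levels.
    hist = np.bincount(image.ravel(), minlength=256)
    # The cumulative distribution drives the intensity remapping.
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest level present
    total = image.size
    if total == cdf_min:
        # Constant image: there is no contrast to stretch.
        return image.copy()
    # Map the darkest present level to 0 and the brightest to 255.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scene = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
    dark = darken(scene)
    restored = equalize_histogram(dark)
    print("dark range:", dark.min(), dark.max())
    print("restored range:", restored.min(), restored.max())
```

In a pipeline like the one described, the enhanced image would then be passed to the classifier or detector in place of the degraded input. Equalization only redistributes the intensity levels that survive the degradation, so detail lost to quantization in the darkened image is not recovered.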

System Integration of Xilinx DPU and HDMI for Real-Time inference in PYNQ Environment with Image Enhancement

Dec 18, 2023

Abstract: The use of edge computing in Computer Vision (CV) applications is an active field of research. Today, most CV applications use Convolutional Neural Networks (CNNs) to run inference on and interpret video data. Edge devices are responsible for several CV-related tasks, such as gathering, processing and enhancing, running inference on, and displaying video data. Because FPGA fabric is easy to reconfigure, it is used to carry out such complex computation tasks. Xilinx provides the PYNQ environment as a user-friendly interface that facilitates hardware/software system integration. However, to the best of the authors' knowledge, there is no end-to-end framework available for the PYNQ environment that allows hardware/software system integration and deployment of CNNs for a real-time feed from High-Definition Multimedia Interface (HDMI) input to HDMI output, along with insertion of customized hardware IPs. In this work we propose an integration of rea**L**-time image **E**nhancement IP with an **A**I inferencing engine in the **P**YNQ environment (**LEAP**), which integrates HDMI, AI acceleration, and image enhancement in the PYNQ environment for Xilinx's Multiprocessor System-on-Chip (MPSoC) platform. We evaluate our methodology with two well-known CNN models, ResNet50 and YOLOv3. To validate the proposed methodology, a simple image enhancement algorithm, histogram equalization, is designed and integrated in the FPGA fabric along with Xilinx's Deep Learning Processor Unit (DPU). Our results show a successful end-to-end integration built entirely from open-source information.
