Stefan Huber

Mechanisms of AI Protein Folding in ESMFold

Feb 05, 2026

Abstract: How do protein structure prediction models fold proteins? We investigate this question by tracing how ESMFold folds a beta hairpin, a prevalent structural motif. Through counterfactual interventions on model latents, we identify two computational stages in the folding trunk. In the first stage, early blocks initialize pairwise biochemical signals: residue identities and associated biochemical features, such as charge, flow from sequence representations into pairwise representations. In the second stage, late blocks develop pairwise spatial features: distance and contact information accumulate in the pairwise representation. We demonstrate that the mechanisms underlying structural decisions of ESMFold can be localized, traced through interpretable representations, and manipulated with strong causal effects.

Embedding the MLOps Lifecycle into OT Reference Models

Oct 23, 2025

Abstract: Machine Learning Operations (MLOps) practices are increasingly adopted in industrial settings, yet their integration with Operational Technology (OT) systems presents significant challenges. This paper analyzes the fundamental obstacles in combining MLOps with OT environments and proposes a systematic approach to embed MLOps practices into established OT reference models. We evaluate the suitability of the Reference Architectural Model for Industry 4.0 (RAMI 4.0) and the International Society of Automation Standard 95 (ISA-95) for MLOps integration and present a detailed mapping of MLOps lifecycle components to RAMI 4.0 exemplified by a real-world use case. Our findings demonstrate that while standard MLOps practices cannot be directly transplanted to OT environments, structured adaptation using existing reference models can provide a pathway for successful integration.

The Flood Complex: Large-Scale Persistent Homology on Millions of Points

Sep 26, 2025

Abstract: We consider the problem of computing persistent homology (PH) for large-scale Euclidean point cloud data, aimed at downstream machine learning tasks, where the exponential growth of the most widely used Vietoris-Rips complex imposes serious computational limitations. Although more scalable alternatives such as the Alpha complex or sparse Rips approximations exist, they often still result in a prohibitively large number of simplices. This poses challenges in the complex construction and in the subsequent PH computation, prohibiting their use on large-scale point clouds. To mitigate these issues, we introduce the Flood complex, inspired by the advantages of the Alpha and Witness complex constructions. Informally, at a given filtration value $r\geq 0$, the Flood complex contains all simplices from a Delaunay triangulation of a small subset of the point cloud $X$ that are fully covered by balls of radius $r$ emanating from $X$, a process we call flooding. Our construction allows for efficient PH computation, possesses several desirable theoretical properties, and is amenable to GPU parallelization. Scaling experiments on 3D point cloud data show that we can compute PH of up to dimension 2 on several million points. Importantly, when evaluating object classification performance on real-world and synthetic data, we provide evidence that this scaling capability is needed, especially if objects are geometrically or topologically complex, yielding performance superior to other PH-based methods and neural networks for point cloud data.
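
The informal description of the Flood complex can be written as a formula. This is only a sketch: the symbols $L$ (the small subset of $X$), $\mathrm{Del}(L)$ (its Delaunay triangulation), $\mathrm{conv}(\sigma)$ (the geometric simplex spanned by the vertices of $\sigma$), and $B_r(x)$ (the closed ball of radius $r$ around $x$) are notation assumed here, not taken from the abstract.

$$
\mathrm{Flood}_r(X, L) \;=\; \Bigl\{\, \sigma \in \mathrm{Del}(L) \;:\; \mathrm{conv}(\sigma) \subseteq \bigcup_{x \in X} B_r(x) \,\Bigr\}, \qquad L \subseteq X,\ r \geq 0 .
$$

Under this reading, every face of a covered simplex is itself covered, so each $\mathrm{Flood}_r(X, L)$ is a simplicial complex, and because the union of balls grows with $r$, the complexes are nested: $\mathrm{Flood}_r(X, L) \subseteq \mathrm{Flood}_{r'}(X, L)$ whenever $r \leq r'$.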

Topology-driven identification of repetitions in multi-variate time series

May 15, 2025

Abstract: Many multi-variate time series obtained in the natural sciences and engineering exhibit repetitive behavior, for instance state-space trajectories of industrial machines in discrete automation. Recovering the times of recurrence from such a multi-variate time series is of fundamental importance for many monitoring and control tasks. For a periodic time series this is equivalent to determining its period length. In this work we present a persistent homology framework to estimate recurrence times in multi-variate time series with different generalizations of cyclic behavior (periodic, repetitive, and recurring). To this end, we provide three specialized methods within our framework that are provably stable and validate them using real-world data, including a new benchmark dataset from an injection molding machine.

Persistence-based Hough Transform for Line Detection

Apr 18, 2025

Abstract: The Hough transform is a popular and classical technique in computer vision for the detection of lines (or more general objects). It maps a pixel into a dual space -- the Hough space: each pixel is mapped to the set of lines through this pixel, which forms a curve in Hough space. The detection of lines then becomes a voting process to find those lines that received many votes from pixels. However, this voting is done by thresholding, which is susceptible to noise and other artifacts. In this work, we present an alternative voting technique to detect peaks in the Hough space based on persistent homology, which very naturally addresses limitations of simple thresholding. Experiments on synthetic data show that our method significantly outperforms the original method, while also demonstrating enhanced robustness. This work seeks to inspire future research in two key directions. First, we highlight the untapped potential of Topological Data Analysis techniques and advocate for their broader integration into existing methods, including well-established ones. Second, we initiate a discussion on the mathematical stability of the Hough transform, encouraging exploration of mathematically grounded improvements to enhance its robustness.
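
To make the voting step concrete, here is a minimal sketch of the classical, thresholded Hough transform that the abstract takes as its baseline. It is not the persistence-based method of the paper. The function names `hough_votes` and `detect_lines`, the normal-form parametrization $\rho = x\cos\theta + y\sin\theta$, and the one-pixel bin width for $\rho$ are assumptions made for illustration.

```python
import math

def hough_votes(pixels, n_theta=180):
    """Accumulate votes: each pixel votes for every (theta, rho) line through it."""
    # Largest possible |rho| for the given pixels, rounded up to an integer.
    rho_max = math.ceil(max(math.hypot(x, y) for x, y in pixels))
    # acc[t][r] counts votes for the line x*cos(theta_t) + y*sin(theta_t) = r - rho_max.
    acc = [[0] * (2 * rho_max + 1) for _ in range(n_theta)]
    for x, y in pixels:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            rho = x * math.cos(theta) + y * math.sin(theta)
            acc[t][round(rho) + rho_max] += 1  # one vote per pixel and angle
    return acc, rho_max

def detect_lines(pixels, threshold, n_theta=180):
    """Return (theta in degrees, rho, votes) for every cell reaching the threshold."""
    acc, rho_max = hough_votes(pixels, n_theta)
    return [(180.0 * t / n_theta, r - rho_max, votes)
            for t, row in enumerate(acc)
            for r, votes in enumerate(row)
            if votes >= threshold]

# Usage: 20 pixels on the horizontal line y = 5.
lines = detect_lines([(x, 5) for x in range(20)], threshold=20)
```

In this usage example the true line (theta = 90 degrees, rho = 5) is among the detections, but cells at neighbouring angles can reach the same threshold, so a plain threshold may report one line several times. This is the kind of limitation of simple thresholding that the abstract refers to.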

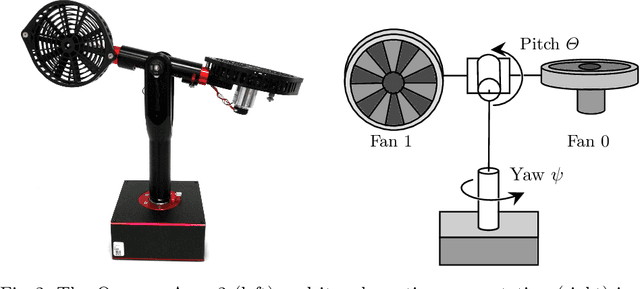

The Crucial Role of Problem Formulation in Real-World Reinforcement Learning

Mar 26, 2025

Abstract: Reinforcement Learning (RL) offers promising solutions for control tasks in industrial cyber-physical systems (ICPSs), yet its real-world adoption remains limited. This paper demonstrates how seemingly small but well-designed modifications to the RL problem formulation can substantially improve performance, stability, and sample efficiency. We identify and investigate key elements of RL problem formulation and show that these enhance both learning speed and final policy quality. Our experiments use a one-degree-of-freedom (1-DoF) helicopter testbed, the Quanser Aero 2, which features non-linear dynamics representative of many industrial settings. In simulation, the proposed problem design principles yield more reliable and efficient training, and we further validate these results by training the agent directly on physical hardware. The encouraging real-world outcomes highlight the potential of RL for ICPS, especially when careful attention is paid to the design principles of problem formulation. Overall, our study underscores the crucial role of thoughtful problem formulation in bridging the gap between RL research and the demands of real-world industrial systems.

Energy Optimized Piecewise Polynomial Approximation Utilizing Modern Machine Learning Optimizers

Mar 12, 2025

Abstract: This work explores an extension of ML-optimized piecewise polynomial approximation by incorporating energy optimization as an additional objective. Traditional closed-form solutions enable continuity and approximation targets but lack flexibility in accommodating complex optimization goals. By leveraging modern gradient descent optimizers within TensorFlow, we introduce a framework that minimizes total curvature in cam profiles, leading to smoother motion and reduced energy consumption for input data that is unfavorable for sole approximation and continuity optimization. Experimental results confirm the effectiveness of this approach, demonstrating its potential to improve efficiency in scenarios where input data is noisy or suboptimal for conventional methods.

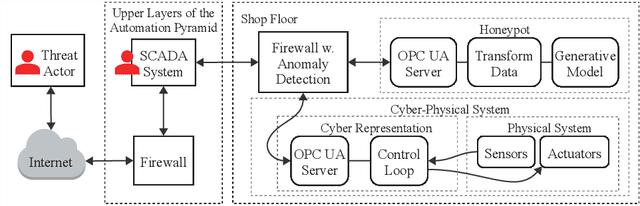

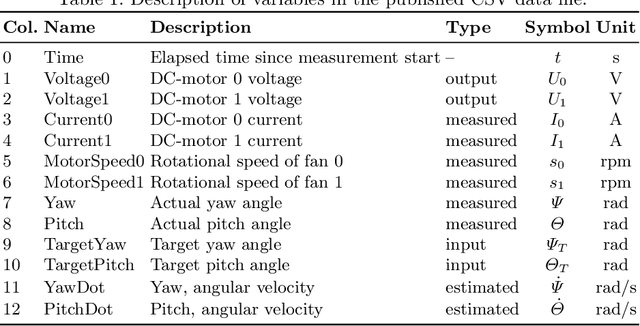

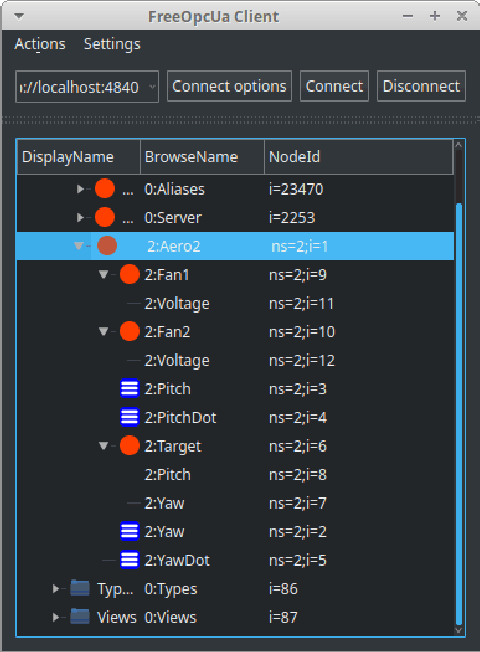

A Generative Model Based Honeypot for Industrial OPC UA Communication

Oct 28, 2024

Abstract: Industrial Operational Technology (OT) systems are increasingly targeted by cyber-attacks due to their integration with Information Technology (IT) systems in the Industry 4.0 era. Besides intrusion detection systems, honeypots can effectively detect these attacks. However, creating realistic honeypots for brownfield systems is particularly challenging. This paper introduces a generative model-based honeypot designed to mimic industrial OPC UA communication. Utilizing a Long Short-Term Memory (LSTM) network, the honeypot learns the characteristics of a highly dynamic mechatronic system from recorded state space trajectories. Our contributions are twofold: first, we present a proof of concept for a honeypot based on generative machine-learning models, and second, we publish a dataset for a cyclic industrial process. The results demonstrate that a generative model-based honeypot can feasibly replicate a cyclic industrial process via OPC UA communication. In the short term, the generative model produces stable and plausible trajectories, while deviations occur over extended periods. The proposed honeypot implementation operates efficiently on constrained hardware, requiring low computational resources. Future work will focus on improving model accuracy, interaction capabilities, and extending the dataset for broader applications.

Comparison of Model Predictive Control and Proximal Policy Optimization for a 1-DOF Helicopter System

Aug 28, 2024

Abstract: This study conducts a comparative analysis of Model Predictive Control (MPC) and Proximal Policy Optimization (PPO), a Deep Reinforcement Learning (DRL) algorithm, applied to a 1-Degree of Freedom (DOF) Quanser Aero 2 system. Classical control techniques such as MPC and Linear Quadratic Regulator (LQR) are widely used due to their theoretical foundation and practical effectiveness. However, with advancements in computational techniques and machine learning, DRL approaches like PPO have gained traction in solving optimal control problems through environment interaction. This paper systematically evaluates the dynamic response characteristics of PPO and MPC, comparing their performance, computational resource consumption, and implementation complexity. Experimental results show that while LQR achieves the best steady-state accuracy, PPO excels in rise-time and adaptability, making it a promising approach for applications requiring rapid response and adaptability. Additionally, we have established a baseline for future RL-related research on this specific testbed. We also discuss the strengths and limitations of each control strategy, providing recommendations for selecting appropriate controllers for real-world scenarios.

Neural Persistence Dynamics

May 24, 2024

Abstract: We consider the problem of learning the dynamics in the topology of time-evolving point clouds, the prevalent spatiotemporal model for systems exhibiting collective behavior, such as swarms of insects and birds or particles in physics. In such systems, patterns emerge from (local) interactions among self-propelled entities. While several well-understood governing equations for motion and interaction exist, they are difficult to fit to data due to the often large number of entities and missing correspondences between the observation times, which may also not be equidistant. To evade such confounding factors, we investigate collective behavior from a *topological perspective*, but instead of summarizing entire observation sequences (as in prior work), we propose learning a latent dynamical model from topological features *per time point*. The latter is then used to formulate a downstream regression task to predict the parametrization of some a priori specified governing equation. We implement this idea based on a latent ODE learned from vectorized (static) persistence diagrams and show that this modeling choice is justified by a combination of recent stability results for persistent homology. Various (ablation) experiments not only demonstrate the relevance of each individual model component, but provide compelling empirical evidence that our proposed model -- *neural persistence dynamics* -- substantially outperforms the state-of-the-art across a diverse set of parameter regression tasks.
