Stacey Svetlichnaya

Satellite-based Prediction of Forage Conditions for Livestock in Northern Kenya

Apr 23, 2020

Abstract:This paper introduces the first dataset of satellite images labeled with forage quality by on-the-ground experts and provides proof of concept for applying computer vision methods to index-based drought insurance. We also present the results of a collaborative benchmark tool used to crowdsource an accurate machine learning model on the dataset. Our methods significantly outperform the existing technology for an insurance program in Northern Kenya, suggesting that a computer vision-based approach could substantially benefit pastoralists, whose exposure to droughts is severe and worsening with climate change.

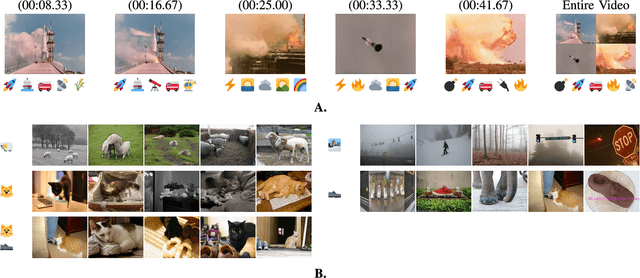

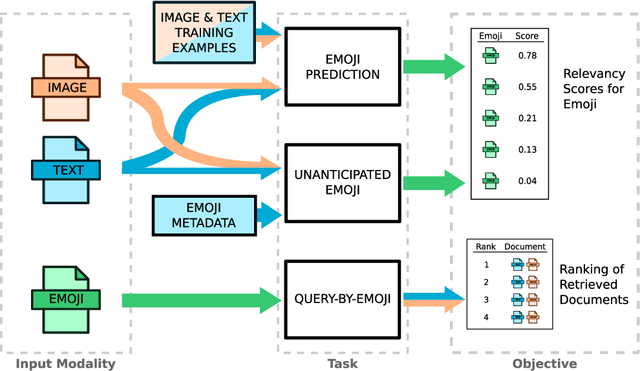

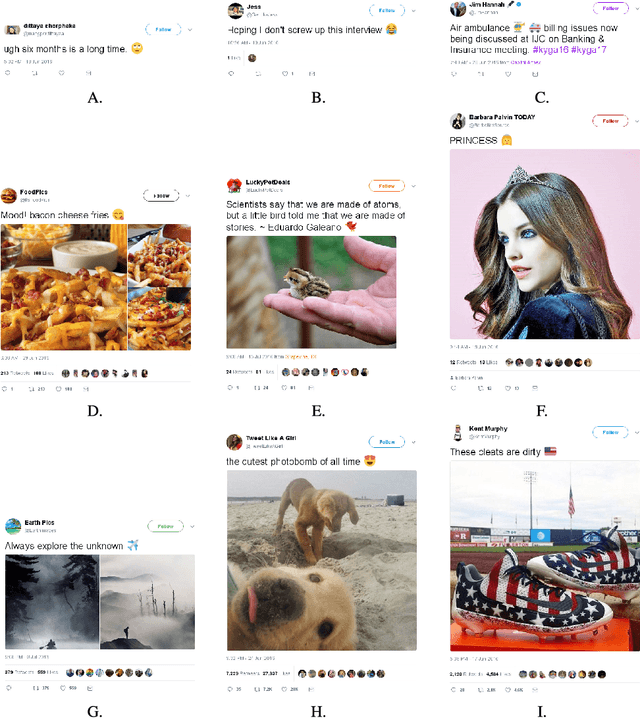

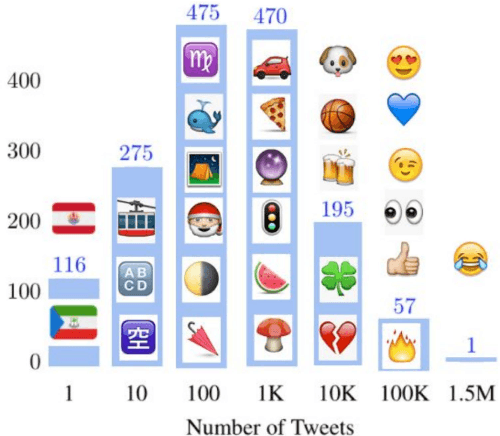

The New Modality: Emoji Challenges in Prediction, Anticipation, and Retrieval

Feb 02, 2018

Abstract:Over the past decade, emoji have emerged as a new and widespread form of digital communication, spanning diverse social networks and spoken languages. We propose to treat these ideograms as a new modality in their own right, distinct in their semantic structure from both the text in which they are often embedded as well as the images which they resemble. As a new modality, emoji present rich novel possibilities for representation and interaction. In this paper, we explore the challenges that arise naturally from considering the emoji modality through the lens of multimedia research. Specifically, the ways in which emoji can be related to other common modalities such as text and images. To do so, we first present a large scale dataset of real-world emoji usage collected from Twitter. This dataset contains examples of both text-emoji and image-emoji relationships. We present baseline results on the challenge of predicting emoji from both text and images, using state-of-the-art neural networks. Further, we offer a first consideration into the problem of how to account for new, unseen emoji - a relevant issue as the emoji vocabulary continues to expand on a yearly basis. Finally, we present results for multimedia retrieval using emoji as queries.

Beautiful and damned. Combined effect of content quality and social ties on user engagement

Nov 01, 2017

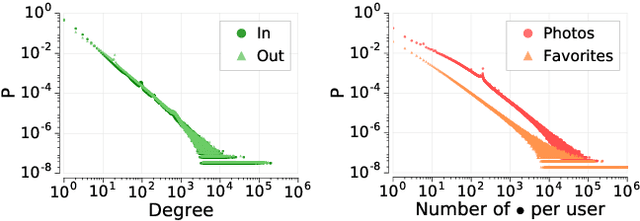

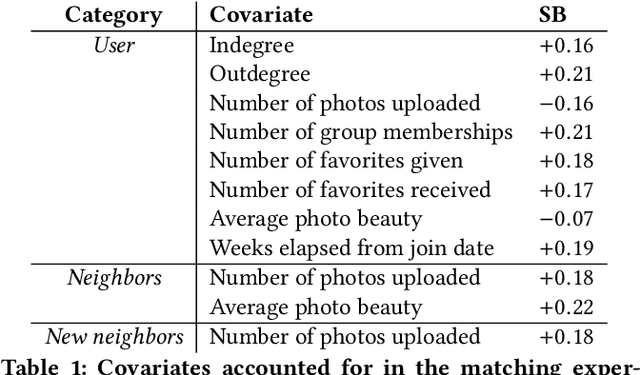

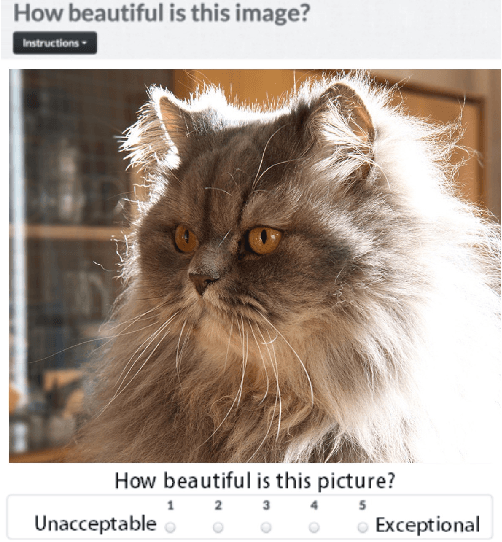

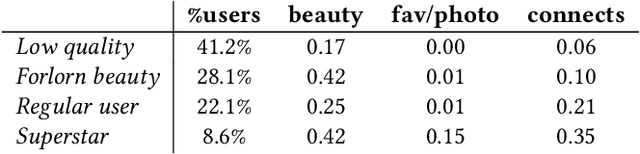

Abstract:User participation in online communities is driven by the intertwinement of the social network structure with the crowd-generated content that flows along its links. These aspects are rarely explored jointly and at scale. By looking at how users generate and access pictures of varying beauty on Flickr, we investigate how the production of quality impacts the dynamics of online social systems. We develop a deep learning computer vision model to score images according to their aesthetic value and we validate its output through crowdsourcing. By applying it to over 15B Flickr photos, we study for the first time how image beauty is distributed over a large-scale social system. Beautiful images are evenly distributed in the network, although only a small core of people get social recognition for them. To study the impact of exposure to quality on user engagement, we set up matching experiments aimed at detecting causality from observational data. Exposure to beauty is double-edged: following people who produce high-quality content increases one's probability of uploading better photos; however, an excessive imbalance between the quality generated by a user and the user's neighbors leads to a decline in engagement. Our analysis has practical implications for improving link recommender systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge