Spencer Cappallo

The New Modality: Emoji Challenges in Prediction, Anticipation, and Retrieval

Feb 02, 2018

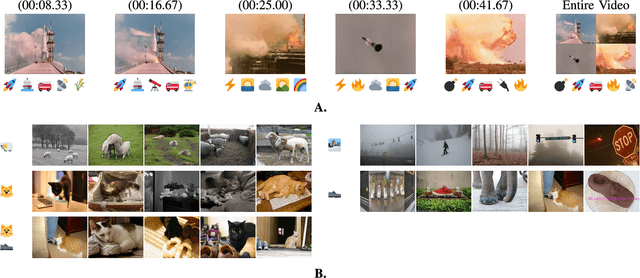

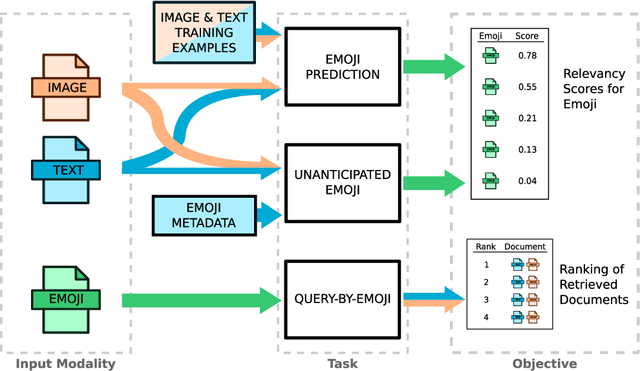

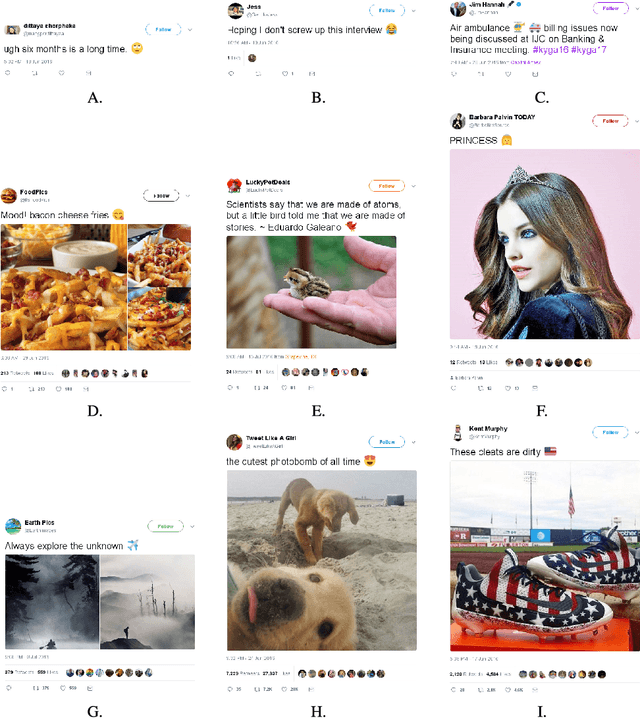

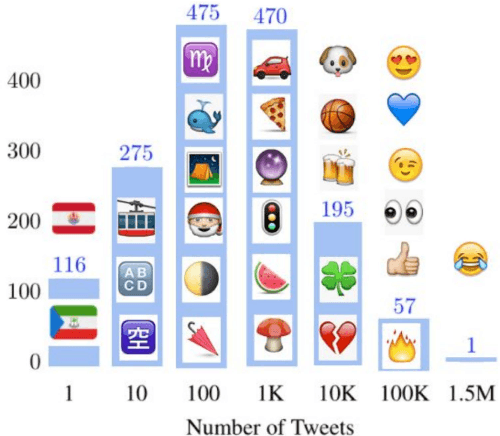

Abstract: Over the past decade, emoji have emerged as a new and widespread form of digital communication, spanning diverse social networks and spoken languages. We propose to treat these ideograms as a new modality in their own right, distinct in their semantic structure from both the text in which they are often embedded and the images which they resemble. As a new modality, emoji present rich novel possibilities for representation and interaction. In this paper, we explore the challenges that arise naturally from considering the emoji modality through the lens of multimedia research, specifically the ways in which emoji can be related to other common modalities such as text and images. To do so, we first present a large-scale dataset of real-world emoji usage collected from Twitter. This dataset contains examples of both text-emoji and image-emoji relationships. We present baseline results on the challenge of predicting emoji from both text and images, using state-of-the-art neural networks. Further, we offer a first consideration of the problem of how to account for new, unseen emoji, a relevant issue as the emoji vocabulary continues to expand on a yearly basis. Finally, we present results for multimedia retrieval using emoji as queries.
