Sreedhar Pratty

JARVIS: A Multi-Agent Code Assistant for High-Quality EDA Script Generation

May 20, 2025

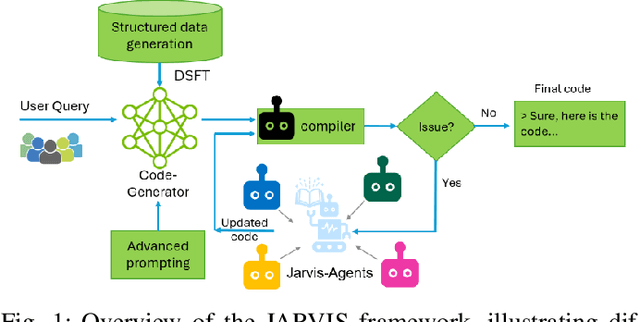

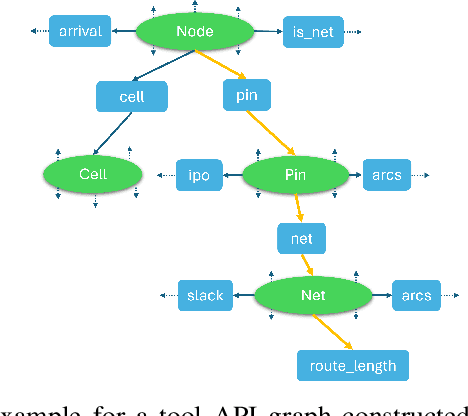

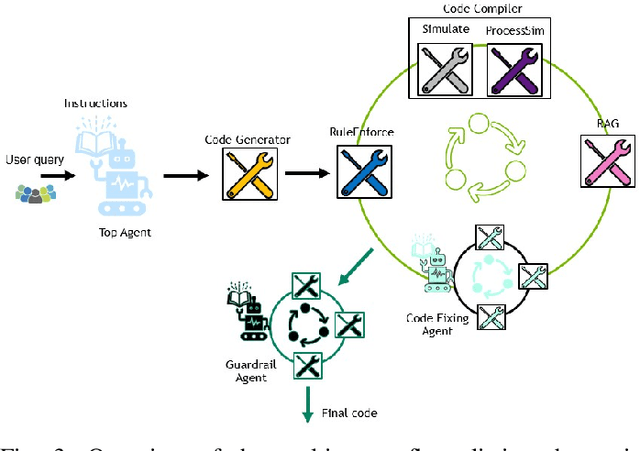

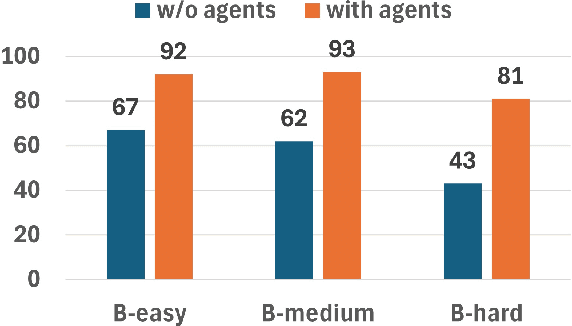

Abstract: This paper presents JARVIS, a novel multi-agent framework that leverages Large Language Models (LLMs) and domain expertise to generate high-quality scripts for specialized Electronic Design Automation (EDA) tasks. By combining a domain-specific LLM trained with synthetically generated data, a custom compiler for structural verification, rule enforcement, code fixing capabilities, and advanced retrieval mechanisms, our approach achieves significant improvements over state-of-the-art domain-specific models. Our framework addresses the challenges of data scarcity and hallucination errors in LLMs, demonstrating the potential of LLMs in specialized engineering domains. We evaluate our framework on multiple benchmarks and show that it outperforms existing models in terms of accuracy and reliability. Our work sets a new precedent for the application of LLMs in EDA and paves the way for future innovations in this field.

ChipNeMo: Domain-Adapted LLMs for Chip Design

Nov 13, 2023

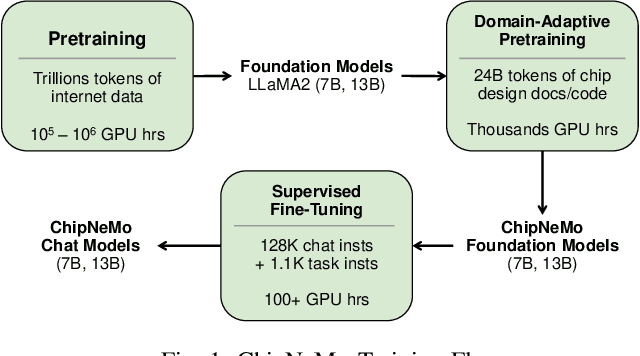

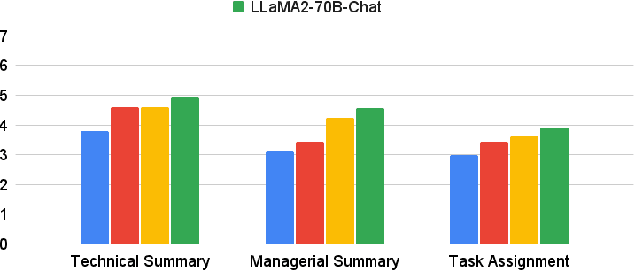

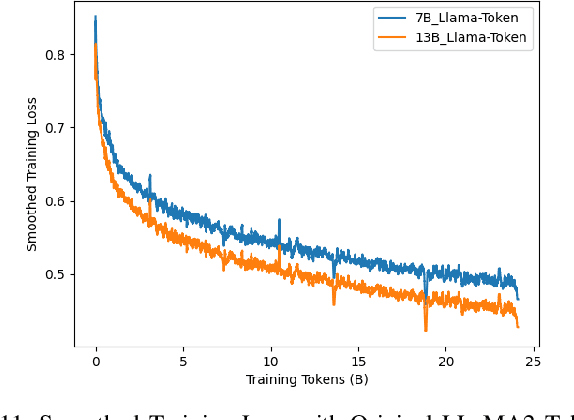

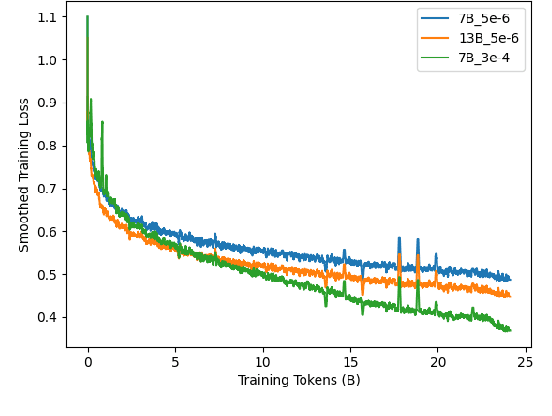

Abstract: ChipNeMo aims to explore the applications of large language models (LLMs) for industrial chip design. Instead of directly deploying off-the-shelf commercial or open-source LLMs, we adopt the following domain adaptation techniques: custom tokenizers, domain-adaptive continued pretraining, supervised fine-tuning (SFT) with domain-specific instructions, and domain-adapted retrieval models. We evaluate these methods on three selected LLM applications for chip design: an engineering assistant chatbot, EDA script generation, and bug summarization and analysis. Our results show that these domain adaptation techniques enable significant LLM performance improvements over general-purpose base models across the three evaluated applications, enabling up to 5x model size reduction with similar or better performance on a range of design tasks. Our findings also indicate that there is still room for improvement between our current results and ideal outcomes. We believe that further investigation of domain-adapted LLM approaches will help close this gap in the future.

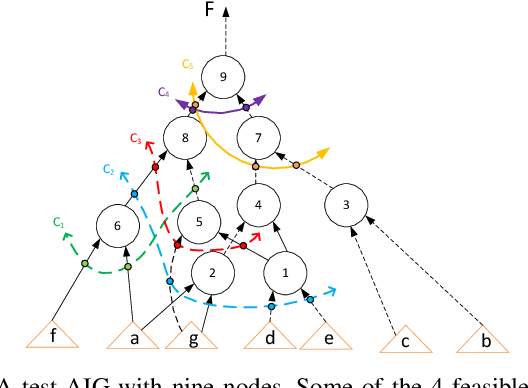

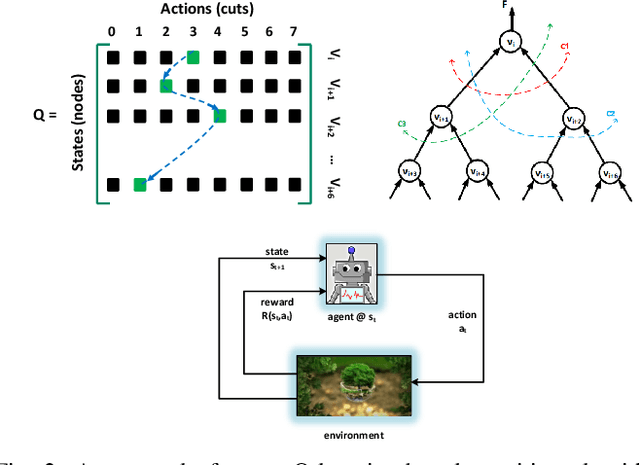

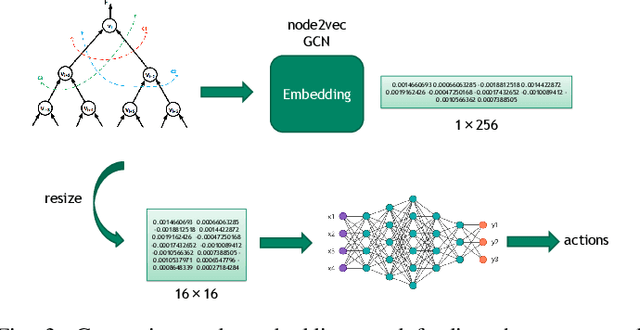

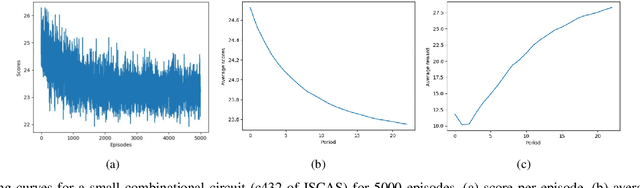

AISYN: AI-driven Reinforcement Learning-Based Logic Synthesis Framework

Feb 08, 2023

Abstract: Logic synthesis is one of the most important steps in the design and implementation of digital chips, with a big impact on the final Quality of Results (QoR). For a general input circuit modeled as a Directed Acyclic Graph (DAG), many logic synthesis problems, such as delay or area minimization, are NP-complete, so no efficient algorithm for finding an optimal solution is known. This is why many classical logic optimization functions follow greedy approaches, which are easily trapped in local minima that do not allow QoR to improve as much as needed. We believe that Artificial Intelligence (AI), and more specifically Reinforcement Learning (RL) algorithms, can help solve this problem, because they can improve QoR further by escaping local minima. Our experiments on both open-source and industrial benchmark circuits show that significant improvements in important metrics such as area, delay, and power can be achieved by making logic synthesis optimization functions AI-driven. For example, our RL-based rewriting algorithm could improve total post-synthesis cell area by up to 69.3% compared to a classical rewriting algorithm with no AI awareness.
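
The local-minimum argument in this abstract can be illustrated with a toy sketch. This is not the AISYN algorithm: the cost landscape `COSTS`, the left/right move set, and every function name below are hypothetical, chosen only to show how a greedy search that accepts improving moves alone can stall, while a value-based RL agent (here, plain tabular Q-learning) can learn to accept a temporarily worse state to reach a better one.

```python
import random

# Hypothetical toy landscape: index = design "state", value = cost (e.g. area).
COSTS = [5, 3, 4, 6, 2, 1, 0]
ACTIONS = (-1, +1)  # move to the left or right neighbour


def step(state, action):
    """Apply a move, clamping at the ends; the reward is the cost reduction."""
    nxt = min(max(state + action, 0), len(COSTS) - 1)
    return nxt, COSTS[state] - COSTS[nxt]


def greedy_descent(state):
    """Greedy search: accept only moves that strictly lower the cost."""
    while True:
        best = min((step(state, a)[0] for a in ACTIONS), key=lambda s: COSTS[s])
        if COSTS[best] >= COSTS[state]:
            return state  # no improving neighbour: stuck in a local minimum
        state = best


def train_q_table(episodes=500, horizon=20, gamma=0.9, seed=0):
    """Tabular Q-learning with a uniformly random behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in COSTS]
    for _ in range(episodes):
        state = rng.randrange(len(COSTS))
        for _ in range(horizon):
            a = rng.randrange(len(ACTIONS))
            nxt, reward = step(state, ACTIONS[a])
            # Transitions are deterministic, so a learning rate of 1 is used.
            q[state][a] = reward + gamma * max(q[nxt])
            state = nxt
    return q


def follow_policy(q, state, horizon=20):
    """Roll out the learned greedy-in-Q policy and return the final state."""
    for _ in range(horizon):
        a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
        state, _ = step(state, ACTIONS[a])
    return state
```

Starting from state 0, `greedy_descent(0)` stops at state 1 because both neighbours cost more, whereas `follow_policy(train_q_table(), 0)` walks through the costlier states 2 and 3 because the discounted future reward outweighs the short-term loss.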
