Ghasem Pasandi

JARVIS: A Multi-Agent Code Assistant for High-Quality EDA Script Generation

May 20, 2025

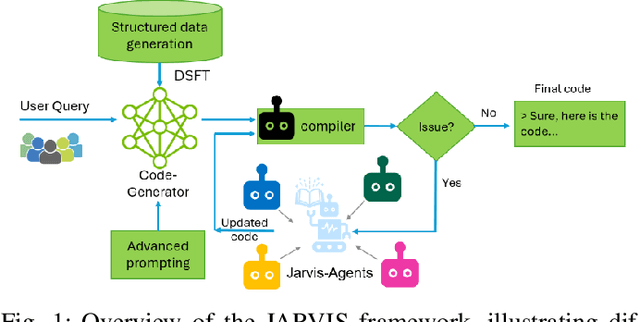

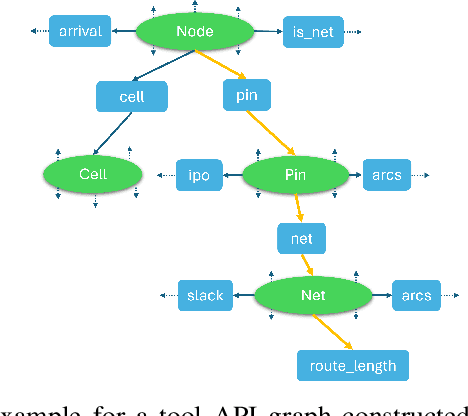

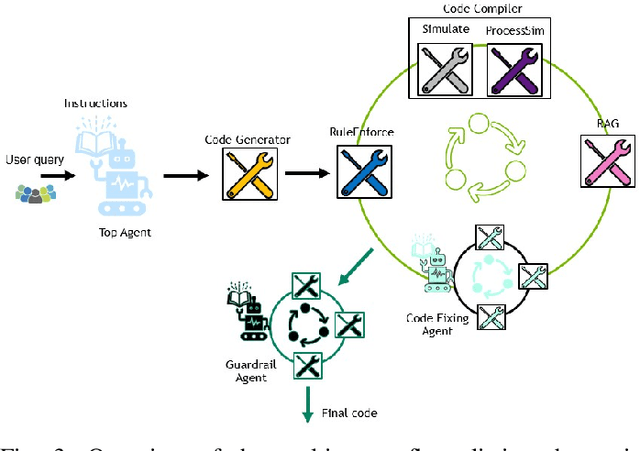

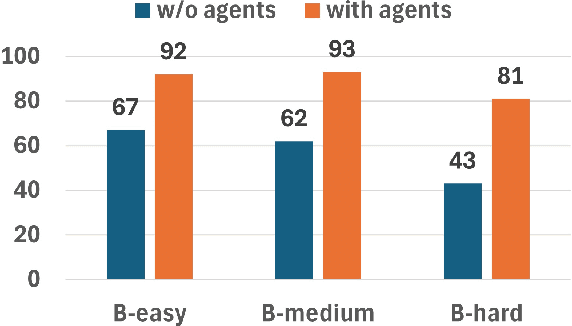

Abstract: This paper presents JARVIS, a novel multi-agent framework that leverages Large Language Models (LLMs) and domain expertise to generate high-quality scripts for specialized Electronic Design Automation (EDA) tasks. By combining a domain-specific LLM trained with synthetically generated data, a custom compiler for structural verification, rule enforcement, code fixing capabilities, and advanced retrieval mechanisms, our approach achieves significant improvements over state-of-the-art domain-specific models. Our framework addresses the challenges of data scarcity and hallucination errors in LLMs, demonstrating the potential of LLMs in specialized engineering domains. We evaluate our framework on multiple benchmarks and show that it outperforms existing models in terms of accuracy and reliability. Our work sets a new precedent for the application of LLMs in EDA and paves the way for future innovations in this field.

AISYN: AI-driven Reinforcement Learning-Based Logic Synthesis Framework

Feb 08, 2023

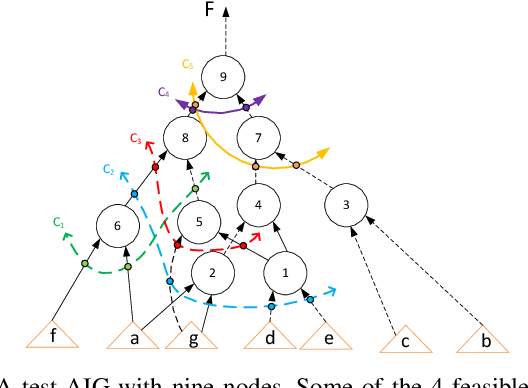

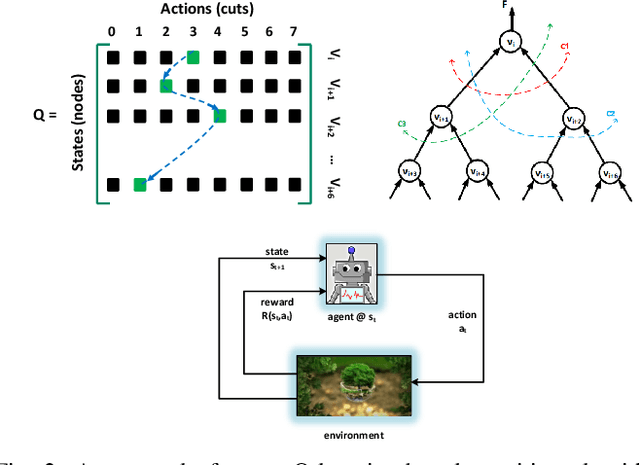

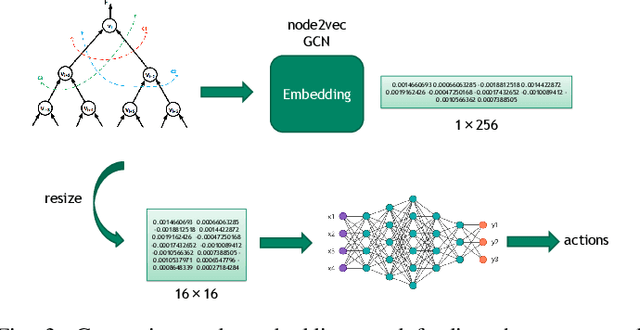

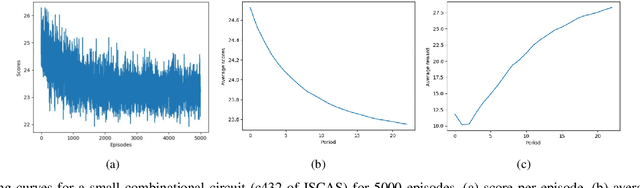

Abstract: Logic synthesis is one of the most important steps in the design and implementation of digital chips, with a large impact on the final Quality of Results (QoR). For a general input circuit modeled as a Directed Acyclic Graph (DAG), many logic synthesis problems such as delay or area minimization are NP-complete; hence, no efficient exact solution is known. This is why many classical logic optimization functions follow greedy approaches, which are easily trapped in local minima and therefore cannot improve QoR as much as needed. We believe that Artificial Intelligence (AI), and more specifically Reinforcement Learning (RL) algorithms, can help solve this problem, because they can improve QoR further by escaping local minima. Our experiments on both open-source and industrial benchmark circuits show that significant improvements in important metrics such as area, delay, and power can be achieved by making logic synthesis optimization functions AI-driven. For example, our RL-based rewriting algorithm improved total post-synthesis cell area by up to 69.3% compared to a classical rewriting algorithm with no AI awareness.
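The local-minimum argument in the abstract above can be illustrated with a toy sketch. This is not the AISYN algorithm: the one-dimensional cost landscape `COSTS`, the left/right "rewrite" moves, and the use of Q-value iteration as a simple stand-in for a learned RL policy are all assumptions made for illustration. Greedy descent accepts only moves that lower cost immediately, while the value-based policy weighs discounted long-term cost reduction and so tolerates temporarily worse states.

```python
# Hypothetical QoR cost of six circuit states (lower is better).
# State 1 is a local minimum; state 5 is the global minimum.
COSTS = [5, 3, 4, 6, 2, 1]
ACTIONS = (-1, +1)  # move to the left or right neighbor (stand-in for a rewrite)


def step(state, action):
    """Apply a move; at the boundaries the state is left unchanged."""
    nxt = state + action
    return nxt if 0 <= nxt < len(COSTS) else state


def greedy(state):
    """Classical greedy descent: move only if cost drops immediately."""
    while True:
        best = min((step(state, a) for a in ACTIONS), key=lambda s: COSTS[s])
        if COSTS[best] >= COSTS[state]:
            return state  # no improving neighbor: stuck
        state = best


def q_value_iteration(gamma=0.9, sweeps=200):
    """Q(s, a) = cost reduction + gamma * max over a' of Q(s', a')."""
    q = [[0.0, 0.0] for _ in COSTS]
    for _ in range(sweeps):
        for s in range(len(COSTS)):
            for i, a in enumerate(ACTIONS):
                nxt = step(s, a)
                q[s][i] = (COSTS[s] - COSTS[nxt]) + gamma * max(q[nxt])
    return q


def follow_policy(q, state, max_steps=10):
    """Follow the highest-valued action until the state stops changing."""
    for _ in range(max_steps):
        action = ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[state][i])]
        nxt = step(state, action)
        if nxt == state:
            break
        state = nxt
    return state


greedy_end = greedy(0)
policy_end = follow_policy(q_value_iteration(), 0)
print("greedy stops at state", greedy_end, "with cost", COSTS[greedy_end])
print("value-based policy stops at state", policy_end, "with cost", COSTS[policy_end])
```

In this toy landscape the greedy search halts at the first state whose neighbors are both worse, whereas the value-based policy passes through higher-cost states to reach the lowest-cost one.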

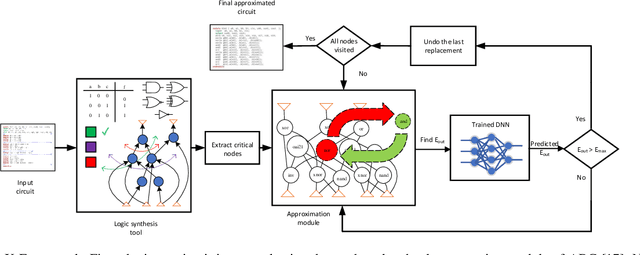

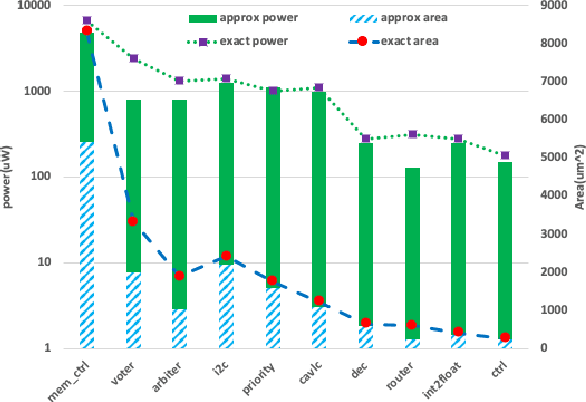

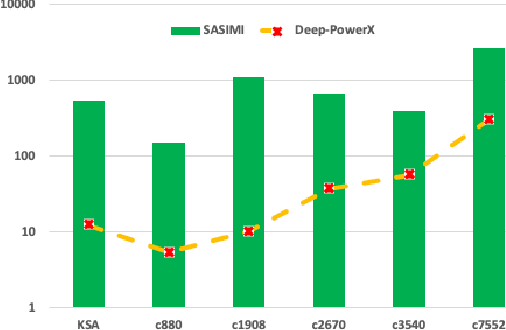

Deep-PowerX: A Deep Learning-Based Framework for Low-Power Approximate Logic Synthesis

Jul 03, 2020

Abstract: This paper aims at integrating three powerful techniques, namely Deep Learning, Approximate Computing, and Low Power Design, into a strategy to optimize logic at the synthesis level. We utilize advances in deep learning to guide an approximate logic synthesis engine to minimize the dynamic power consumption of a given digital CMOS circuit, subject to a predetermined error rate at the primary outputs. Our framework, Deep-PowerX, focuses on replacing or removing gates on a technology-mapped network and uses a Deep Neural Network (DNN) to predict error rates at the primary outputs of the circuit when a specific part of the netlist is approximated. The primary goal of Deep-PowerX is to reduce dynamic power, whereas area reduction serves as a secondary objective. Using this DNN, Deep-PowerX is able to reduce the exponential time complexity of standard approximate logic synthesis to linear time. Experiments are done on numerous open-source benchmark circuits. Results show significant reductions in power and area, by up to 1.47 times and 1.43 times compared to exact solutions and by up to 22% and 27% compared to state-of-the-art approximate logic synthesis tools, while having orders of magnitude lower runtime.
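To make the "error rate at the primary outputs" concrete, here is a minimal sketch that computes it exactly by exhaustive simulation. The three-input circuit, the choice of approximating the AND gate by constant 0, and the `MAX_ERROR_RATE` budget are all hypothetical and not taken from the paper. The enumeration visits every input vector, so its cost doubles with each added input; this is the kind of exponential step that the abstract says Deep-PowerX replaces with a DNN prediction.

```python
from itertools import product


def exact_circuit(a, b, c):
    """Hypothetical exact netlist: out = (a AND b) OR c."""
    return (a & b) | c


def approx_circuit(a, b, c):
    """Same netlist with the AND gate replaced by constant 0, so out = c."""
    return 0 | c


def error_rate(exact, approx, num_inputs):
    """Fraction of input vectors on which the two circuits disagree.

    Enumerates all 2**num_inputs vectors, so this is exponential in the
    number of primary inputs.
    """
    vectors = list(product((0, 1), repeat=num_inputs))
    mismatches = sum(exact(*v) != approx(*v) for v in vectors)
    return mismatches / len(vectors)


MAX_ERROR_RATE = 0.2  # hypothetical error budget at the primary output

rate = error_rate(exact_circuit, approx_circuit, 3)
print(f"error rate = {rate}")  # only a=1, b=1, c=0 differs: 1 of 8 vectors
print("approximation accepted" if rate <= MAX_ERROR_RATE else "approximation rejected")
```

The two circuits differ only when `a = b = 1` and `c = 0`, which is one of the eight input vectors, so removing the gate fits within the assumed budget.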

NullaNet: Training Deep Neural Networks for Reduced-Memory-Access Inference

Aug 27, 2018

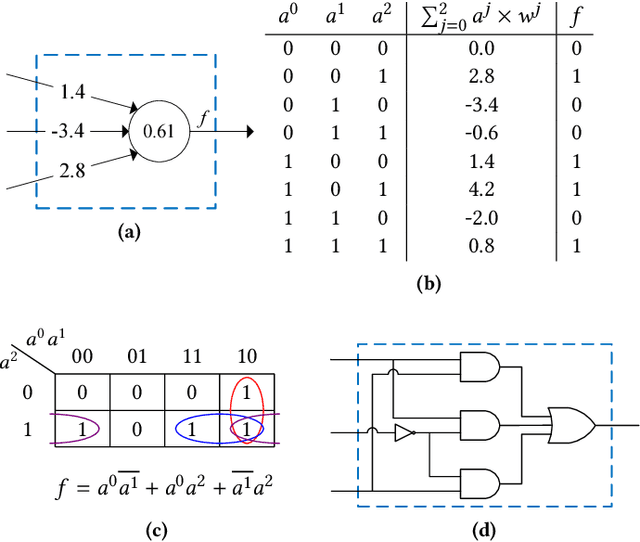

Abstract: Deep neural networks have been successfully deployed in a wide variety of applications, including computer vision and speech recognition. However, the computational and storage complexity of these models has forced the majority of computations to be performed on high-end computing platforms or in the cloud. To cope with this complexity, this paper presents a training method that enables a radically different approach to the realization of deep neural networks through Boolean logic minimization. This realization completely removes the energy-hungry step of accessing memory to obtain model parameters, consumes about two orders of magnitude fewer computing resources than realizations that use floating-point operations, and has substantially lower latency.
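The idea of realizing a neuron as Boolean logic can be sketched on a single neuron with binary inputs and a binary output. This is an illustrative assumption, not the paper's training method: the weights, bias, and step activation below are hypothetical, and the minimized expression was derived by hand rather than by a logic minimization tool. Once the truth table is known, the neuron can be evaluated as pure logic, with no weights fetched at inference time.

```python
from itertools import product

# Hypothetical parameters of one neuron with three binary inputs.
WEIGHTS = [2, -1, 1]
BIAS = -1


def neuron(x):
    """Arithmetic form: step activation on the weighted sum."""
    total = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1 if total > 0 else 0


# Enumerate all input combinations once to obtain the truth table.
truth_table = {x: neuron(x) for x in product((0, 1), repeat=len(WEIGHTS))}

# Input patterns for which the neuron fires (the function's minterms).
minterms = [x for x, y in truth_table.items() if y == 1]


def neuron_logic(x0, x1, x2):
    """Hand-minimized Boolean form of the same function: x0 AND (NOT x1 OR x2)."""
    return x0 & ((1 - x1) | x2)


# The logic form reproduces the arithmetic neuron on every input.
for x, y in truth_table.items():
    assert neuron_logic(*x) == y

print("minterms:", minterms)
```

For these parameters the neuron fires on the inputs `(1, 0, 0)`, `(1, 0, 1)`, and `(1, 1, 1)`, which collapse to the two-gate expression used in `neuron_logic`.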
