Massoud Pedram

SkipKV: Selective Skipping of KV Generation and Storage for Efficient Inference with Large Reasoning Models

Dec 08, 2025

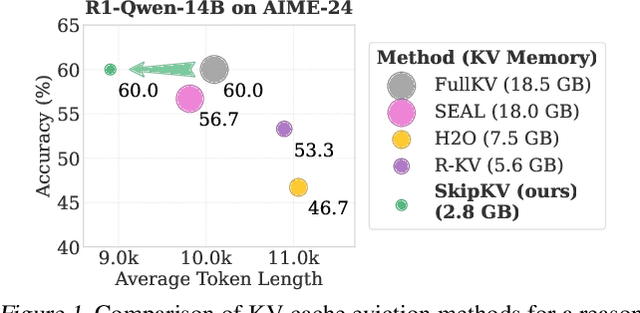

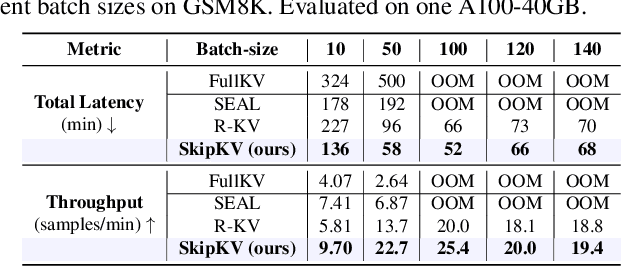

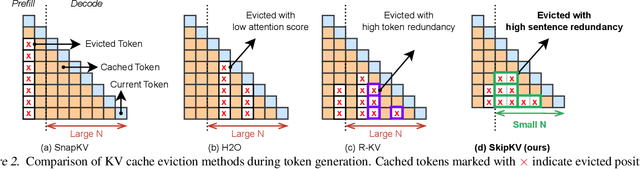

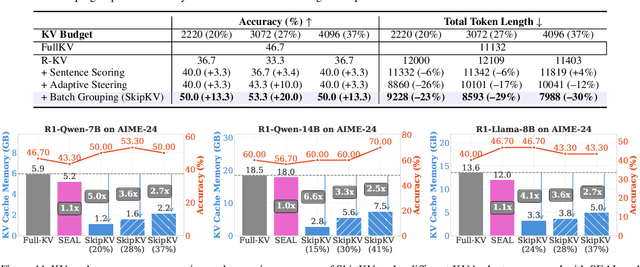

Abstract: Large reasoning models (LRMs) often incur significant key-value (KV) cache overhead, because the cache grows linearly with the verbose chain-of-thought (CoT) reasoning process. This creates both memory and throughput bottlenecks, limiting their efficient deployment. Towards reducing KV cache size during inference, we first investigate the effectiveness of existing KV cache eviction methods for CoT reasoning. Interestingly, we find that due to unstable token-wise scoring and the reduced effective KV budget caused by padding tokens, state-of-the-art (SoTA) eviction methods fail to maintain accuracy in the multi-batch setting. Additionally, these methods often generate longer sequences than the original model, as semantic-unaware token-wise eviction leads to repeated revalidation during reasoning. To address these issues, we present SkipKV, a training-free KV compression method for selective eviction and generation that operates at coarse-grained, sentence-level granularity for efficient CoT reasoning. Specifically, it introduces a sentence-scoring metric to identify and remove highly similar sentences while maintaining semantic coherence. To suppress redundant generation, SkipKV dynamically adjusts a steering vector to update the hidden activation states during inference, steering the LRM to generate concise responses. Extensive evaluations on multiple reasoning benchmarks demonstrate the effectiveness of SkipKV, which achieves up to 26.7% higher accuracy than the alternatives at a similar compression budget. Additionally, compared to SoTA, SkipKV yields up to 1.6x shorter generation length while improving throughput by up to 1.7x.
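The sentence-level removal of highly similar sentences can be illustrated with a minimal sketch. The abstract does not define the actual sentence-scoring metric, so everything here is an assumption: bag-of-words cosine similarity stands in for the real score, and the names `bow`, `cosine`, `prune_redundant`, and the `0.8` threshold are hypothetical.

```python
import math
import re
from collections import Counter


def bow(sentence):
    """Bag-of-words term counts; an assumed stand-in for a real sentence score."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def prune_redundant(sentences, threshold=0.8):
    """Keep a sentence only if it is not too similar to any sentence already kept."""
    kept, kept_vecs = [], []
    for s in sentences:
        v = bow(s)
        if all(cosine(v, k) < threshold for k in kept_vecs):
            kept.append(s)
            kept_vecs.append(v)
    return kept


steps = [
    "Let x be the number of apples.",
    "Wait, let x be the number of apples.",  # near-duplicate revalidation step
    "Then 2x + 3 = 11, so x = 4.",
]
kept = prune_redundant(steps)
print(kept)
```

In this toy input the second sentence restates the first and is dropped, while the third introduces new content and is kept. A real system would score sentences from model-internal signals rather than surface word overlap.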

ASAP-FE: Energy-Efficient Feature Extraction Enabling Multi-Channel Keyword Spotting on Edge Processors

Jun 17, 2025

Abstract: Multi-channel keyword spotting (KWS) has become crucial for voice-based applications in edge environments. However, its substantial computational and energy requirements pose significant challenges. We introduce ASAP-FE (Agile Sparsity-Aware Parallelized-Feature Extractor), a hardware-oriented front-end designed to address these challenges. Our framework incorporates three key innovations: (1) Half-overlapped Infinite Impulse Response (IIR) Framing: This reduces redundant data by approximately 25% while maintaining essential phoneme transition cues. (2) Sparsity-aware Data Reduction: We exploit frame-level sparsity to achieve an additional 50% data reduction by combining frame skipping with stride-based filtering. (3) Dynamic Parallel Processing: We introduce a parameterizable filter cluster and a priority-based scheduling algorithm that allows parallel execution of IIR filtering tasks, reducing latency and optimizing energy efficiency. ASAP-FE is implemented with various filter cluster sizes on edge processors, with functionality verified on FPGA prototypes and designs synthesized at 45 nm. Experimental results using TC-ResNet8, DS-CNN, and KWT-1 demonstrate that ASAP-FE reduces the average workload by 62.73% while supporting real-time processing for up to 32 channels. Compared to a conventional fully overlapped baseline, ASAP-FE achieves less than a 1% accuracy drop (e.g., 96.22% vs. 97.13% for DS-CNN), which is well within acceptable limits for edge AI. By adjusting the number of filter modules, our design optimizes the trade-off between performance and energy, with 15 parallel filters providing optimal performance for up to 25 channels. Overall, ASAP-FE offers a practical and efficient solution for multi-channel KWS on energy-constrained edge devices.
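As a rough sanity check on the figures above, the two data-reduction stages can be composed arithmetically. This sketch assumes the 25% and 50% reductions apply one after the other and multiply, which the abstract does not state explicitly. The function name `combined_reduction` is hypothetical.

```python
def combined_reduction(*stage_reductions):
    """Overall fraction of data removed when stages apply one after another.

    Assumes each stage removes its fraction of whatever the previous
    stage left behind (multiplicative composition).
    """
    remaining = 1.0
    for r in stage_reductions:
        remaining *= 1.0 - r
    return 1.0 - remaining


# ~25% from half-overlapped framing, then an additional 50% from sparsity.
total = combined_reduction(0.25, 0.50)
print(f"{total:.2%}")
```

Under this assumption the combined reduction is 62.5%, which is close to the reported 62.73% average workload reduction. The small difference is consistent with the first figure being approximate.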

MARCO: Hardware-Aware Neural Architecture Search for Edge Devices with Multi-Agent Reinforcement Learning and Conformal Prediction Filtering

Jun 16, 2025

Abstract: This paper introduces MARCO (Multi-Agent Reinforcement learning with Conformal Optimization), a novel hardware-aware framework for efficient neural architecture search (NAS) targeting resource-constrained edge devices. By significantly reducing search time and maintaining accuracy under strict hardware constraints, MARCO bridges the gap between automated DNN design and CAD for edge AI deployment. MARCO's core technical contribution lies in its unique combination of multi-agent reinforcement learning (MARL) with Conformal Prediction (CP) to accelerate the hardware/software co-design process for deploying deep neural networks. Unlike conventional once-for-all (OFA) supernet approaches that require extensive pretraining, MARCO decomposes the NAS task into a Hardware Configuration Agent (HCA) and a Quantization Agent (QA). The HCA optimizes high-level design parameters, while the QA determines per-layer bit-widths under strict memory and latency budgets using a shared reward signal within a centralized-critic, decentralized-execution (CTDE) paradigm. A key innovation is the integration of a calibrated CP surrogate model that provides statistical guarantees (with a user-defined miscoverage rate) to prune unpromising candidate architectures before incurring the high costs of partial training or hardware simulation. This early filtering drastically reduces the search space while ensuring that high-quality designs are retained with a high probability. Extensive experiments on MNIST, CIFAR-10, and CIFAR-100 demonstrate that MARCO achieves a 3-4x reduction in total search time compared to an OFA baseline while maintaining near-baseline accuracy (within 0.3%). Furthermore, MARCO also reduces inference latency. Validation on a MAX78000 evaluation board confirms that simulator trends hold in practice, with simulator estimates deviating from measured values by less than 5%.

FAIR-SIGHT: Fairness Assurance in Image Recognition via Simultaneous Conformal Thresholding and Dynamic Output Repair

Apr 10, 2025

Abstract: We introduce FAIR-SIGHT, an innovative post-hoc framework designed to ensure fairness in computer vision systems by combining conformal prediction with a dynamic output repair mechanism. Our approach calculates a fairness-aware non-conformity score that simultaneously assesses prediction errors and fairness violations. Using conformal prediction, we establish an adaptive threshold that provides rigorous finite-sample, distribution-free guarantees. When the non-conformity score for a new image exceeds the calibrated threshold, FAIR-SIGHT implements targeted corrective adjustments, such as logit shifts for classification and confidence recalibration for detection, to reduce both group and individual fairness disparities, all without the need for retraining or having access to internal model parameters. Comprehensive theoretical analysis validates our method's error control and convergence properties. At the same time, extensive empirical evaluations on benchmark datasets show that FAIR-SIGHT significantly reduces fairness disparities while preserving high predictive performance.
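The calibrated threshold and the "exceeds the threshold, then repair" trigger can be sketched with the standard split conformal quantile. This is a generic illustration, not the paper's implementation: the fairness-aware non-conformity score is not defined in the abstract, so the calibration scores below are placeholder numbers, and `conformal_threshold` and `needs_repair` are hypothetical names.

```python
import math


def conformal_threshold(cal_scores, alpha):
    """Split conformal threshold at miscoverage rate alpha.

    Returns the k-th smallest calibration score with
    k = ceil((n + 1) * (1 - alpha)); if k exceeds n, no finite
    threshold gives the guarantee, so return infinity.
    """
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf
    return sorted(cal_scores)[k - 1]


def needs_repair(score, threshold):
    """Flag an input whose non-conformity score exceeds the calibrated threshold."""
    return score > threshold


# Placeholder non-conformity scores from a held-out calibration set (assumed).
cal = [0.05, 0.10, 0.12, 0.20, 0.22, 0.30, 0.35, 0.41, 0.50, 0.90]
tau = conformal_threshold(cal, alpha=0.2)
print(tau, needs_repair(0.7, tau), needs_repair(0.3, tau))
```

With ten calibration scores and `alpha=0.2`, the threshold is the ninth smallest score. Inputs scoring above it would be routed to a corrective step such as a logit shift, which is not modeled here.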

RocketPPA: Ultra-Fast LLM-Based PPA Estimator at Code-Level Abstraction

Mar 27, 2025

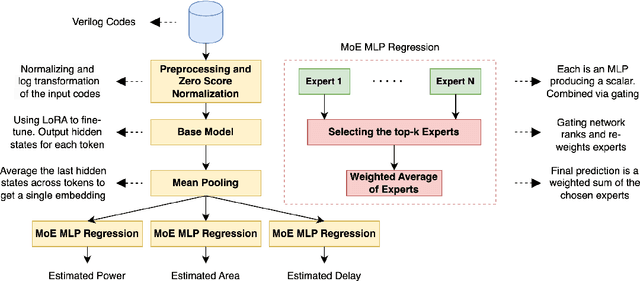

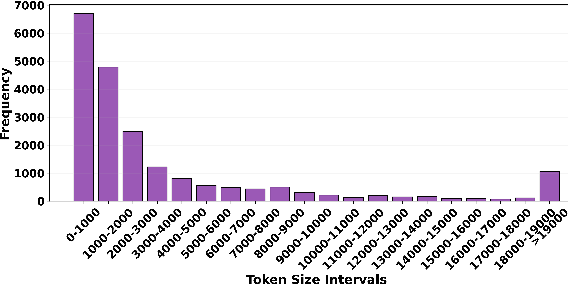

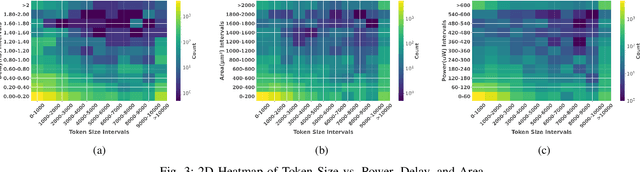

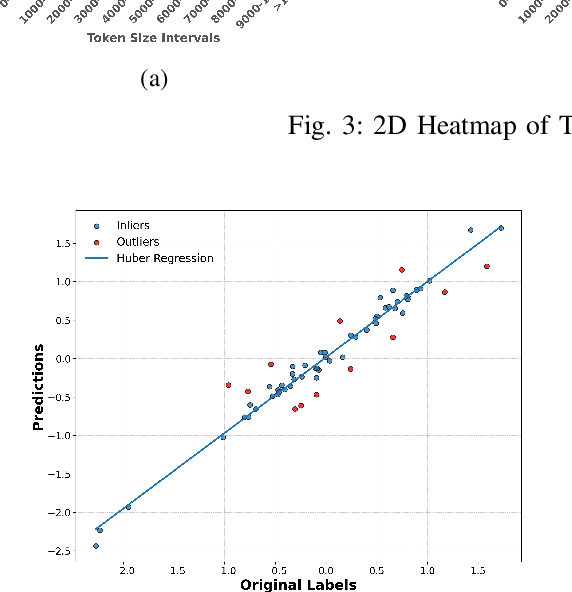

Abstract: Large language models have recently transformed hardware design, yet bridging the gap between code synthesis and PPA (power, performance, and area) estimation remains a challenge. In this work, we introduce a novel framework that leverages a 21k dataset of thoroughly cleaned and synthesizable Verilog modules, each annotated with detailed power, delay, and area metrics. By employing chain-of-thought techniques, we automatically debug and curate this dataset to ensure high fidelity in downstream applications. We then fine-tune CodeLlama using LoRA-based parameter-efficient methods, framing the task as a regression problem to accurately predict PPA metrics from Verilog code. Furthermore, we augment our approach with a mixture-of-experts architecture (integrating both LoRA and an additional MLP expert layer) to further refine predictions. Experimental results demonstrate significant improvements: power estimation accuracy is enhanced by 5.9% at a 20% error threshold and by 7.2% at a 10% threshold, delay estimation improves by 5.1% and 3.9%, and area estimation sees gains of 4% and 7.9% for the 20% and 10% thresholds, respectively. Notably, the incorporation of the mixture-of-experts module contributes an additional 3-4% improvement across these tasks. Our results establish a new benchmark for PPA-aware Verilog generation, highlighting the effectiveness of our integrated dataset and modeling strategies for next-generation EDA workflows.
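One plausible reading of "accuracy at an error threshold" is the fraction of predictions whose relative error falls within that threshold. The abstract does not define the metric, so this interpretation, the function name `accuracy_at_threshold`, and the sample values are all assumptions for illustration.

```python
def accuracy_at_threshold(predicted, actual, threshold):
    """Fraction of predictions with relative error <= threshold.

    Assumes every actual value is non-zero.
    """
    hits = sum(
        abs(p - a) / abs(a) <= threshold for p, a in zip(predicted, actual)
    )
    return hits / len(actual)


# Made-up power values (arbitrary units) for four hypothetical modules.
actual = [10.0, 20.0, 40.0, 80.0]
predicted = [11.5, 19.0, 50.0, 82.0]

acc_20 = accuracy_at_threshold(predicted, actual, 0.20)
acc_10 = accuracy_at_threshold(predicted, actual, 0.10)
print(acc_20, acc_10)
```

The relative errors here are 15%, 5%, 25%, and 2.5%, so three of four predictions pass at the 20% threshold and two of four pass at 10%. A tighter threshold can only lower or preserve the score.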

FACTER: Fairness-Aware Conformal Thresholding and Prompt Engineering for Enabling Fair LLM-Based Recommender Systems

Feb 05, 2025

Abstract: We propose FACTER, a fairness-aware framework for LLM-based recommendation systems that integrates conformal prediction with dynamic prompt engineering. By introducing an adaptive semantic variance threshold and a violation-triggered mechanism, FACTER automatically tightens fairness constraints whenever biased patterns emerge. We further develop an adversarial prompt generator that leverages historical violations to reduce repeated demographic biases without retraining the LLM. Empirical results on MovieLens and Amazon show that FACTER substantially reduces fairness violations (up to 95.5%) while maintaining strong recommendation accuracy, revealing semantic variance as a potent proxy of bias.

Efficient Noise Mitigation for Enhancing Inference Accuracy in DNNs on Mixed-Signal Accelerators

Sep 27, 2024

Abstract: In this paper, we propose a framework to enhance the robustness of neural models by mitigating the effects of process-induced and aging-related variations of analog computing components on the accuracy of analog neural networks. We model these variations as noise affecting the precision of the activations and introduce a denoising block inserted between selected layers of a pre-trained model. We demonstrate that training the denoising block significantly increases the model's robustness against various noise levels. To minimize the overhead associated with adding these blocks, we present an exploration algorithm to identify optimal insertion points for the denoising blocks. Additionally, we propose a specialized architecture to efficiently execute the denoising blocks, which can be integrated into mixed-signal accelerators. We evaluate the effectiveness of our approach using Deep Neural Network (DNN) models trained on the ImageNet and CIFAR-10 datasets. The results show that, on average, at the cost of a 2.03% parameter count overhead, the accuracy drop due to the variations is reduced from 31.7% to 1.15%.

Enhancing Layout Hotspot Detection Efficiency with YOLOv8 and PCA-Guided Augmentation

Jul 19, 2024

Abstract: In this paper, we present a YOLO-based framework for layout hotspot detection, aiming to enhance the efficiency and performance of the design rule checking (DRC) process. Our approach leverages the YOLOv8 vision model to detect multiple hotspots within each layout image, even when dealing with large layout image sizes. Additionally, to enhance pattern-matching effectiveness, we introduce a novel approach to augment the layout image using information extracted through Principal Component Analysis (PCA). The core of our proposed method is an algorithm that utilizes PCA to extract valuable auxiliary information from the layout image. This extracted information is then incorporated into the layout image as an additional color channel. This augmentation significantly improves the accuracy of multi-hotspot detection while reducing the false alarm rate of the object detection algorithm. We evaluate the effectiveness of our framework using four datasets generated from layouts found in the ICCAD-2019 benchmark dataset. The results demonstrate that our framework achieves a precision of approximately 83% and a recall of approximately 86% while maintaining a false alarm rate of less than 7.4%. The studies also show that the proposed augmentation approach can improve the detection of never-seen-before (NSB) hotspots by about 10%.

CHOSEN: Compilation to Hardware Optimization Stack for Efficient Vision Transformer Inference

Jul 17, 2024

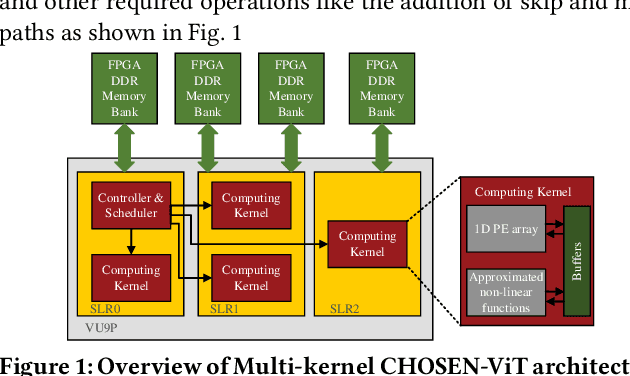

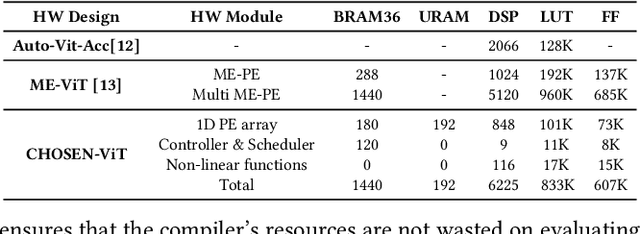

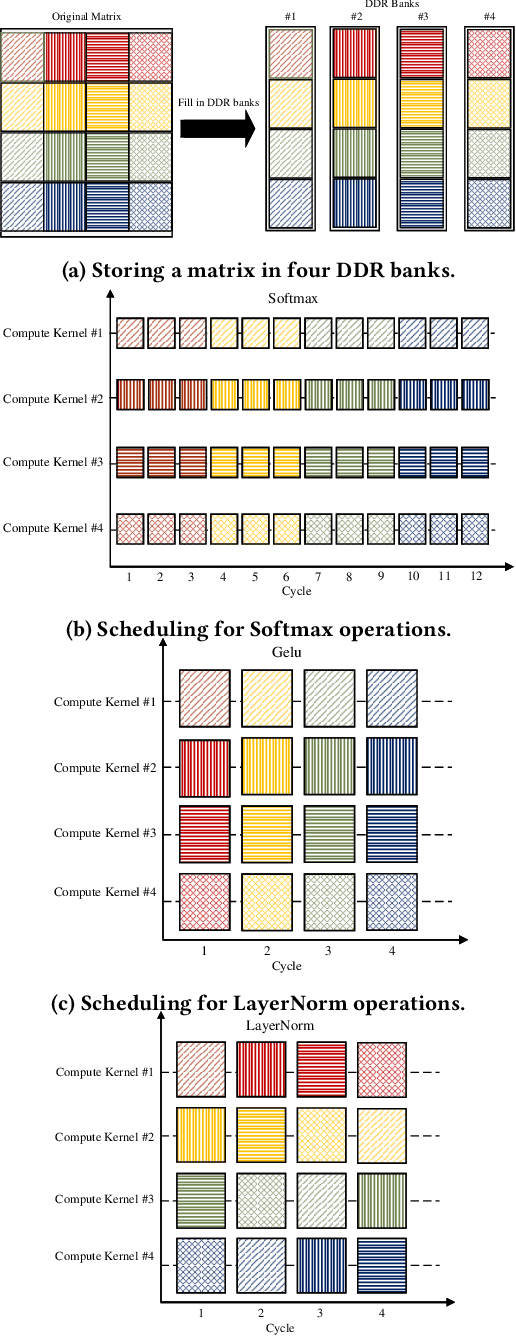

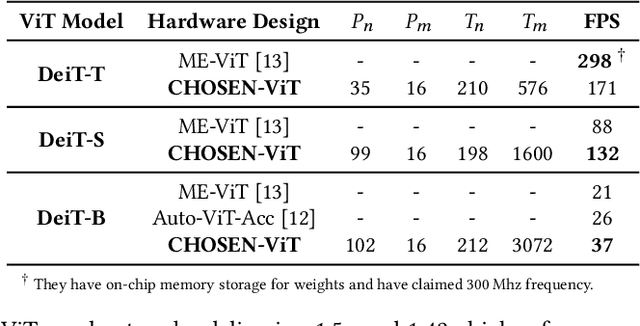

Abstract: Vision Transformers (ViTs) represent a groundbreaking shift in machine learning approaches to computer vision. Unlike traditional approaches, ViTs employ the self-attention mechanism, which has been widely used in natural language processing, to analyze image patches. Despite their advantages in modeling visual tasks, deploying ViTs on hardware platforms, notably Field-Programmable Gate Arrays (FPGAs), introduces considerable challenges. These challenges stem primarily from the non-linear calculations and high computational and memory demands of ViTs. This paper introduces CHOSEN, a software-hardware co-design framework that addresses these challenges and automates ViT deployment on FPGAs in order to maximize performance. Our framework is built upon three fundamental contributions: (1) a multi-kernel design that maximizes bandwidth, mainly by exploiting multiple DDR memory banks; (2) approximate non-linear functions that exhibit minimal accuracy degradation, together with efficient use of the available logic blocks on the FPGA; and (3) an efficient compiler that maximizes the performance and memory efficiency of the computing kernels through a novel design space exploration algorithm, which finds the hardware configuration with optimal throughput and latency. Compared to the state-of-the-art ViT accelerators, CHOSEN achieves a 1.5x and 1.42x improvement in throughput on the DeiT-S and DeiT-B models, respectively.

ARCO: Adaptive Multi-Agent Reinforcement Learning-Based Hardware/Software Co-Optimization Compiler for Improved Performance in DNN Accelerator Design

Jul 11, 2024

Abstract: This paper presents ARCO, an adaptive Multi-Agent Reinforcement Learning (MARL)-based co-optimizing compilation framework designed to enhance the efficiency of mapping machine learning (ML) models, such as Deep Neural Networks (DNNs), onto diverse hardware platforms. The framework incorporates three specialized actor-critic agents within MARL, each dedicated to a distinct aspect of compilation/optimization at an abstract level: one agent focuses on hardware, while two agents focus on software optimizations. This integration results in a collaborative hardware/software co-optimization strategy that improves the precision and speed of DNN deployments. Concentrating on high-confidence configurations simplifies the search space and delivers superior performance compared to current optimization methods. The ARCO framework surpasses existing leading frameworks, achieving a throughput increase of up to 37.95% while reducing the optimization time by up to 42.2% across various DNNs.