Sizhe Zhang

MMMR: Benchmarking Massive Multi-Modal Reasoning Tasks

May 22, 2025

Abstract: Recent advances in Multi-Modal Large Language Models (MLLMs) have enabled unified processing of language, vision, and structured inputs, opening the door to complex tasks such as logical deduction, spatial reasoning, and scientific analysis. Despite their promise, the reasoning capabilities of MLLMs, particularly those augmented with intermediate thinking traces (MLLMs-T), remain poorly understood and lack standardized evaluation benchmarks. Existing work focuses primarily on perception or final answer correctness, offering limited insight into how models reason or fail across modalities. To address this gap, we introduce the MMMR, a new benchmark designed to rigorously evaluate multi-modal reasoning with explicit thinking. The MMMR comprises 1) a high-difficulty dataset of 1,083 questions spanning six diverse reasoning types with symbolic depth and multi-hop demands and 2) a modular Reasoning Trace Evaluation Pipeline (RTEP) for assessing reasoning quality beyond accuracy through metrics like relevance, consistency, and structured error annotations. Empirical results show that MLLMs-T overall outperform non-thinking counterparts, but even top models like Claude-3.7-Sonnet and Gemini-2.5 Pro suffer from reasoning pathologies such as inconsistency and overthinking. This benchmark reveals persistent gaps between accuracy and reasoning quality and provides an actionable evaluation pipeline for future model development. Overall, the MMMR offers a scalable foundation for evaluating, comparing, and improving the next generation of multi-modal reasoning systems.

Integrating Active Learning in Causal Inference with Interference: A Novel Approach in Online Experiments

Feb 20, 2024

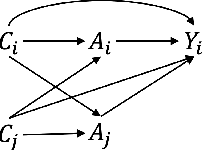

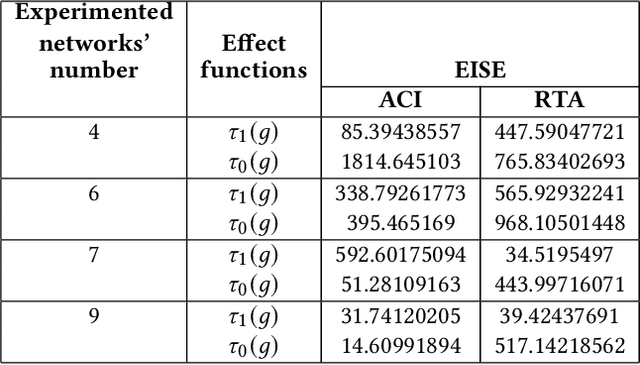

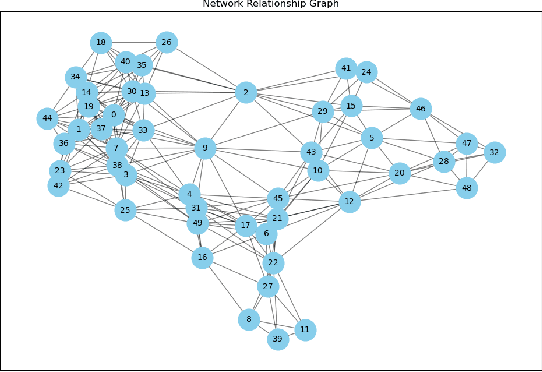

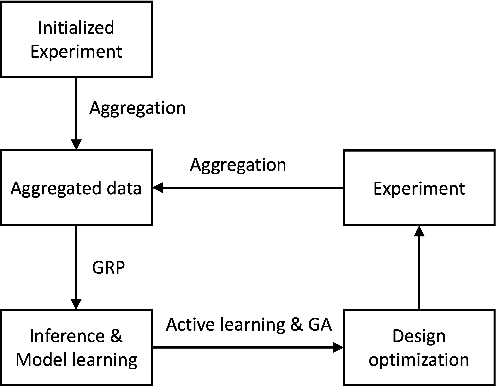

Abstract: In the domain of causal inference research, the prevalent potential outcomes framework, notably the Rubin Causal Model (RCM), often overlooks individual interference and assumes independent treatment effects. This assumption, however, is frequently misaligned with the intricate realities of real-world scenarios, where interference is not merely a possibility but a common occurrence. Our research endeavors to address this discrepancy by focusing on the estimation of direct and spillover treatment effects under two assumptions: (1) network-based interference, where treatments on neighbors within connected networks affect one's outcomes, and (2) non-random treatment assignments influenced by confounders. To improve the efficiency of estimating potentially complex effect functions, we introduce a novel active learning approach: Active Learning in Causal Inference with Interference (ACI). This approach uses a Gaussian process to flexibly model the direct and spillover treatment effects as a function of a continuous measure of neighbors' treatment assignment. The ACI framework sequentially identifies the experimental settings that demand further data. It further optimizes the treatment assignments under the network interference structure using genetic algorithms to achieve efficient learning outcomes. By applying our method to simulation data and a Tencent game dataset, we demonstrate its feasibility in achieving accurate effect estimates with reduced data requirements. This ACI approach marks a significant advancement in the realm of data efficiency for causal inference, offering a robust and efficient alternative to traditional methodologies, particularly in scenarios characterized by complex interference patterns.

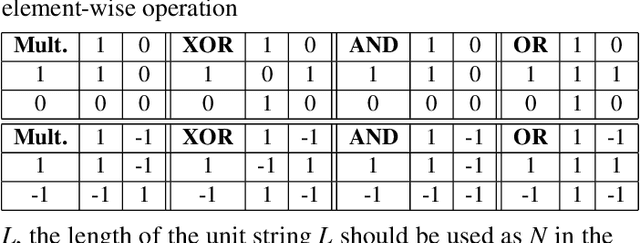

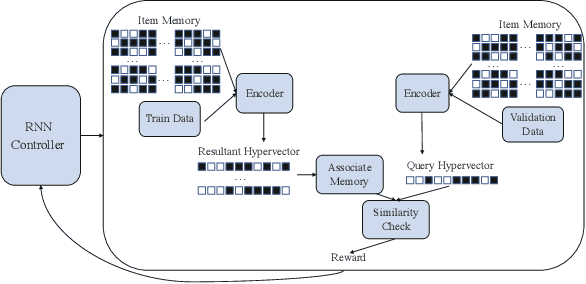

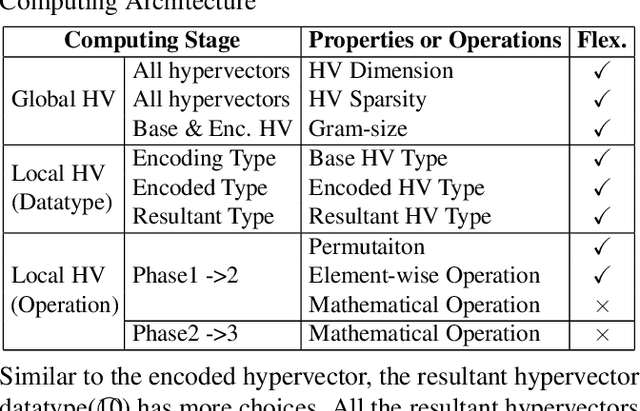

Automated Architecture Search for Brain-inspired Hyperdimensional Computing

Feb 11, 2022

Abstract: This paper represents the first effort to explore an automated architecture search for hyperdimensional computing (HDC), a type of brain-inspired neural network. Currently, HDC design is largely carried out in an application-specific, ad hoc manner, which significantly limits its application. Furthermore, the approach leads to inferior accuracy and efficiency, which suggests that HDC cannot perform competitively against deep neural networks. Herein, we present a thorough study to formulate an HDC architecture search space. On top of the search space, we apply reinforcement learning to automatically explore the HDC architectures. The searched HDC architectures show competitive performance on case studies involving a drug discovery dataset and a language recognition task. On the Clintox dataset, which tries to learn features from developed drugs that passed/failed clinical trials for toxicity reasons, the searched HDC architecture obtains state-of-the-art ROC-AUC scores, which are 0.80% higher than the manually designed HDC and 9.75% higher than conventional neural networks. Similar results are achieved on the language recognition task, with 1.27% higher performance than conventional methods.

HDCoin: A Proof-of-Useful-Work Based Blockchain for Hyperdimensional Computing

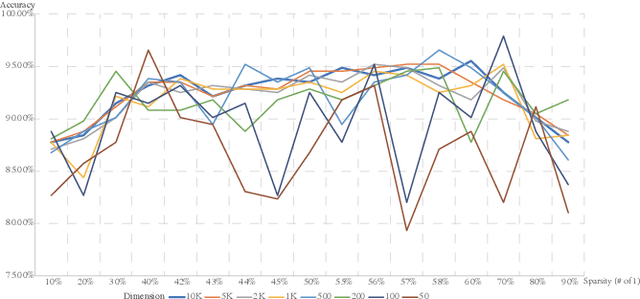

Feb 07, 2022

Abstract: Various blockchain systems and schemes have been proposed since Bitcoin was first introduced by Nakamoto Satoshi as a distributed ledger. However, blockchains are often criticized, particularly on environmental grounds, because their "proof-of-work" based mining process usually consumes a considerable amount of energy while making hardly any useful contribution to the real world. Therefore, the concept of "proof-of-useful-work" (PoUW) has been proposed to connect blockchain with practical application domain problems so that the computation power consumed in the mining process can be spent on useful activities, such as solving optimization problems or training machine learning models. This paper introduces HDCoin, a blockchain-based framework for an emerging machine learning scheme: the brain-inspired hyperdimensional computing (HDC). We formulate the model development of HDC as a problem that can be used in blockchain mining. Specifically, we define the PoUW under the HDC scenario and develop the entire mining process of HDCoin. During mining, miners compete to obtain the highest test accuracy on a given dataset. The winner's model is recorded in the blockchain and made available to the public as a trustworthy HDC model. In addition, we quantitatively examine the performance of mining under different HDC configurations to illustrate the adaptive mining difficulty.
