Siyuan Dai

for the Alzheimer's Disease Neuroimaging Initiative

Deep Models, Shallow Alignment: Uncovering the Granularity Mismatch in Neural Decoding

Jan 29, 2026

Abstract: Neural visual decoding is a central problem in brain computer interface research, aiming to reconstruct human visual perception and to elucidate the structure of neural representations. However, existing approaches overlook a fundamental granularity mismatch between human and machine vision, where deep vision models emphasize semantic invariance by suppressing local texture information, whereas neural signals preserve an intricate mixture of low-level visual attributes and high-level semantic content. To address this mismatch, we propose Shallow Alignment, a novel contrastive learning strategy that aligns neural signals with intermediate representations of visual encoders rather than their final outputs, thereby striking a better balance between low-level texture details and high-level semantic features. Extensive experiments across multiple benchmarks demonstrate that Shallow Alignment significantly outperforms standard final-layer alignment, with performance gains ranging from 22% to 58% across diverse vision backbones. Notably, our approach effectively unlocks the scaling law in neural visual decoding, enabling decoding performance to scale predictably with the capacity of pre-trained vision backbones. We further conduct systematic empirical analyses to shed light on the mechanisms underlying the observed performance gains.
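As a rough illustration of the kind of contrastive objective the abstract above refers to, the sketch below computes a symmetric InfoNCE loss between a batch of neural-signal embeddings and a batch of visual features. The abstract does not give the loss, the temperature, or the layer used, so all of these are assumptions: `info_nce`, `temperature=0.07`, and the toy vectors are hypothetical, and `intermediate_feats` simply stands in for features taken from some intermediate encoder layer instead of the final output.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def info_nce(neural, visual, temperature=0.07):
    """Symmetric InfoNCE loss over paired embeddings (row i matches row i).

    Assumption: this is a generic contrastive loss, not necessarily the
    exact objective used in the paper.
    """
    n = [l2_normalize(v) for v in neural]
    z = [l2_normalize(v) for v in visual]
    # logits[i][j] = cosine similarity of neural i and visual j, scaled
    logits = [
        [sum(a * b for a, b in zip(ni, zj)) / temperature for zj in z]
        for ni in n
    ]

    def cross_entropy_on_diagonal(rows):
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_partition = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_partition - row[i]
        return total / len(rows)

    columns = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_on_diagonal(logits)
                  + cross_entropy_on_diagonal(columns))


# Toy data: stand-ins for intermediate-layer features of a vision encoder.
intermediate_feats = [[1.0, 0.0], [0.0, 1.0]]
neural_aligned = [[0.9, 0.1], [0.1, 0.9]]   # each row matches its partner
neural_swapped = [[0.1, 0.9], [0.9, 0.1]]   # rows paired with the wrong image

loss_aligned = info_nce(neural_aligned, intermediate_feats)
loss_swapped = info_nce(neural_swapped, intermediate_feats)
```

In this toy setup the correctly paired batch yields the lower loss. Under the same assumptions, switching between final-layer and intermediate-layer alignment would only change which features are passed as `visual`.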

Aligning Findings with Diagnosis: A Self-Consistent Reinforcement Learning Framework for Trustworthy Radiology Reporting

Jan 06, 2026

Abstract: Multimodal Large Language Models (MLLMs) have shown strong potential for radiology report generation, yet their clinical translation is hindered by architectural heterogeneity and the prevalence of factual hallucinations. Standard supervised fine-tuning often fails to strictly align linguistic outputs with visual evidence, while existing reinforcement learning approaches struggle with either prohibitive computational costs or limited exploration. To address these challenges, we propose a comprehensive framework for self-consistent radiology report generation. First, we conduct a systematic evaluation to identify optimal vision encoder and LLM backbone configurations for medical imaging. Building on this foundation, we introduce a novel "Reason-then-Summarize" architecture optimized via Group Relative Policy Optimization (GRPO). This framework restructures generation into two distinct components: a think block for detailed findings and an answer block for structured disease labels. By utilizing a multi-dimensional composite reward function, we explicitly penalize logical discrepancies between the generated narrative and the final diagnosis. Extensive experiments on the MIMIC-CXR benchmark demonstrate that our method achieves state-of-the-art performance in clinical efficacy metrics and significantly reduces hallucinations compared to strong supervised baselines.
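To make the idea of penalizing disagreement between the findings and the final labels more concrete, here is a minimal sketch of one possible consistency term. It is not the paper's reward: the abstract does not specify the reward components, so the `<think>`/`<answer>` tag syntax, the comma-separated label format, the `consistency_reward` function, and the Jaccard-overlap score are all assumptions made for illustration. The keyword matching is deliberately naive and does not handle negation such as "no effusion".

```python
import re


def parse_blocks(text):
    """Return (findings, answer) from hypothetical <think>/<answer> tags."""
    think = re.search(r"<think>(.*?)</think>", text, re.S)
    answer = re.search(r"<answer>(.*?)</answer>", text, re.S)
    return (think.group(1) if think else "",
            answer.group(1) if answer else "")


def consistency_reward(text, vocabulary):
    """Jaccard overlap between labels claimed in the answer block and
    labels mentioned in the findings block (1.0 = fully consistent)."""
    findings, answer = parse_blocks(text)
    findings = findings.lower()
    claimed = {lab.strip().lower() for lab in answer.split(",") if lab.strip()}
    mentioned = {lab for lab in vocabulary if lab in findings}
    union = claimed | mentioned
    if not union:
        return 1.0  # nothing claimed and nothing mentioned
    return len(claimed & mentioned) / len(union)


# Hypothetical label vocabulary (lowercase) and two toy generations.
labels = ["pleural effusion", "cardiomegaly", "pneumonia"]
consistent = ("<think>There is a left pleural effusion and cardiomegaly."
              "</think><answer>pleural effusion, cardiomegaly</answer>")
inconsistent = ("<think>There is a left pleural effusion.</think>"
                "<answer>pneumonia</answer>")

score_consistent = consistency_reward(consistent, labels)
score_inconsistent = consistency_reward(inconsistent, labels)
```

A term like this could be one component of a composite reward alongside accuracy or format terms. How the components are actually weighted is not stated in the abstract.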

R-GenIMA: Integrating Neuroimaging and Genetics with Interpretable Multimodal AI for Alzheimer's Disease Progression

Dec 22, 2025

Abstract: Early detection of Alzheimer's disease (AD) requires models capable of integrating macro-scale neuroanatomical alterations with micro-scale genetic susceptibility, yet existing multimodal approaches struggle to align these heterogeneous signals. We introduce R-GenIMA, an interpretable multimodal large language model that couples a novel ROI-wise vision transformer with genetic prompting to jointly model structural MRI and single nucleotide polymorphism (SNP) variations. By representing each anatomically parcellated brain region as a visual token and encoding SNP profiles as structured text, the framework enables cross-modal attention that links regional atrophy patterns to underlying genetic factors. Applied to the ADNI cohort, R-GenIMA achieves state-of-the-art performance in four-way classification across normal cognition (NC), subjective memory concerns (SMC), mild cognitive impairment (MCI), and AD. Beyond predictive accuracy, the model yields biologically meaningful explanations by identifying stage-specific brain regions and gene signatures, as well as coherent ROI-Gene association patterns across the disease continuum. Attention-based attribution revealed genes consistently enriched for established GWAS-supported AD risk loci, including APOE, BIN1, CLU, and RBFOX1. Stage-resolved neuroanatomical signatures identified shared vulnerability hubs across disease stages alongside stage-specific patterns: striatal involvement in subjective decline, frontotemporal engagement during prodromal impairment, and consolidated multimodal network disruption in AD. These results demonstrate that interpretable multimodal AI can synthesize imaging and genetics to reveal mechanistic insights, providing a foundation for clinically deployable tools that enable earlier risk stratification and inform precision therapeutic strategies in Alzheimer's disease.

Why Text Prevails: Vision May Undermine Multimodal Medical Decision Making

Dec 15, 2025

Abstract: With the rapid progress of large language models (LLMs), advanced multimodal large language models (MLLMs) have demonstrated impressive zero-shot capabilities on vision-language tasks. In the biomedical domain, however, even state-of-the-art MLLMs struggle with basic Medical Decision Making (MDM) tasks. We investigate this limitation using two challenging datasets: (1) three-stage Alzheimer's disease (AD) classification (normal, mild cognitive impairment, dementia), where category differences are visually subtle, and (2) MIMIC-CXR chest radiograph classification with 14 non-mutually exclusive conditions. Our empirical study shows that text-only reasoning consistently outperforms vision-only or vision-text settings, with multimodal inputs often performing worse than text alone. To mitigate this, we explore three strategies: (1) in-context learning with reason-annotated exemplars, (2) vision captioning followed by text-only inference, and (3) few-shot fine-tuning of the vision tower with classification supervision. These findings reveal that current MLLMs lack grounded visual understanding and point to promising directions for improving multimodal decision making in healthcare.

DRE: An Effective Dual-Refined Method for Integrating Small and Large Language Models in Open-Domain Dialogue Evaluation

Jun 04, 2025

Abstract: Large Language Models (LLMs) excel at many tasks but struggle with ambiguous scenarios where multiple valid responses exist, often yielding unreliable results. Conversely, Small Language Models (SLMs) demonstrate robustness in such scenarios but are susceptible to misleading or adversarial inputs. We observed that LLMs handle negative examples effectively, while SLMs excel with positive examples. To leverage their complementary strengths, we introduce SLIDE (Small and Large Integrated for Dialogue Evaluation), a method integrating SLMs and LLMs via adaptive weighting. Building on SLIDE, we further propose a Dual-Refinement Evaluation (DRE) method to enhance SLM-LLM integration: (1) SLM-generated insights guide the LLM to produce initial evaluations; (2) SLM-derived adjustments refine the LLM's scores for improved accuracy. Experiments demonstrate that DRE outperforms existing methods, showing stronger alignment with human judgment across diverse benchmarks. This work illustrates how combining small and large models can yield more reliable evaluation tools, particularly for open-ended tasks such as dialogue evaluation.

Zeus: Zero-shot LLM Instruction for Union Segmentation in Multimodal Medical Imaging

Apr 09, 2025

Abstract: Medical image segmentation has achieved remarkable success through the continuous advancement of UNet-based and Transformer-based foundation backbones. However, clinical diagnosis in the real world often requires integrating domain knowledge, especially textual information. Multimodal learning over visual and text modalities has been shown to be a solution, but collecting paired vision-language datasets is expensive and time-consuming, posing significant challenges. Inspired by the strong performance of Large Language Models (LLMs) on numerous cross-modal tasks, we propose a novel Vision-LLM union framework to address these issues. Specifically, we introduce frozen LLMs for zero-shot instruction generation based on the corresponding medical images, imitating the radiology scanning and report generation process. To better approximate real-world diagnostic processes, we generate more precise text instructions from multimodal radiology images (e.g., T1-w or T2-w MRI and CT), drawing on the semantic understanding and rich knowledge of LLMs. This process emphasizes extracting special features from the different modalities and reuniting the information for the final clinical diagnosis. With the generated text instructions, our proposed union segmentation framework can handle multimodal segmentation without previously collected vision-language datasets. To evaluate our method, we conduct comprehensive experiments against influential baselines; the statistical results and the visualized case study demonstrate the superiority of our method.

A Heterogeneous Graph Neural Network Fusing Functional and Structural Connectivity for MCI Diagnosis

Nov 13, 2024

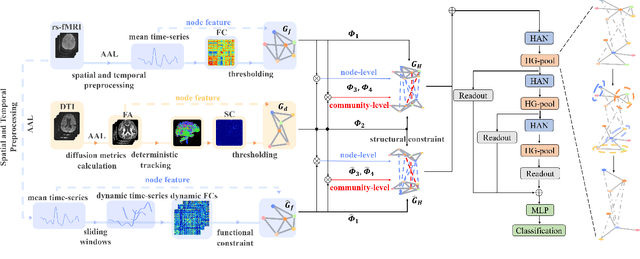

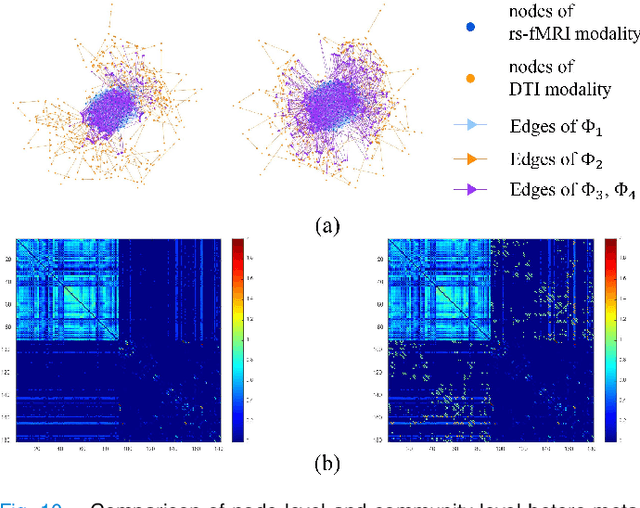

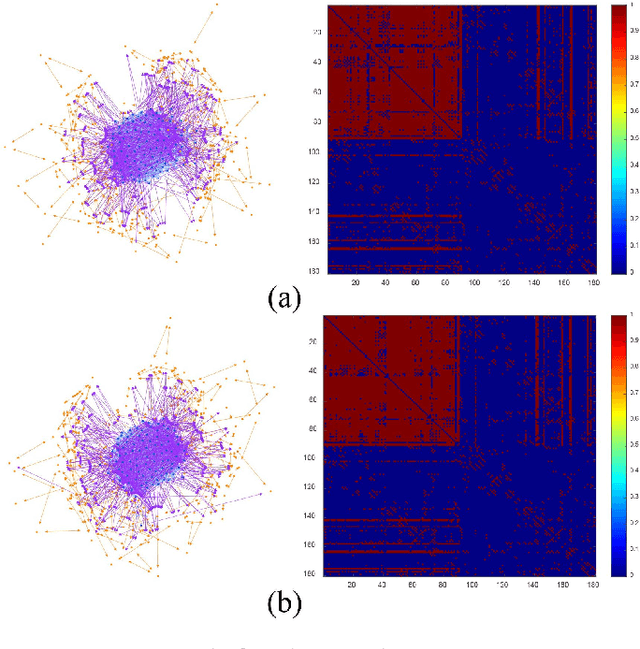

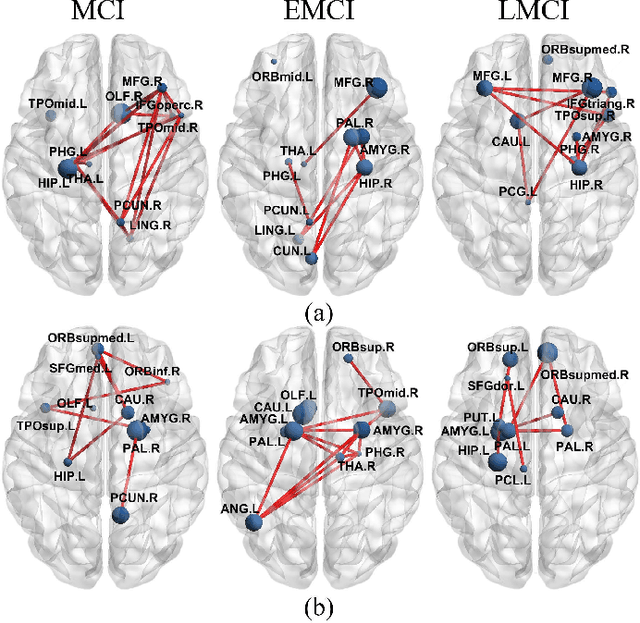

Abstract: Brain connectivity alterations associated with brain disorders have been widely reported in resting-state functional magnetic resonance imaging (rs-fMRI) and diffusion tensor imaging (DTI). While many dual-modal fusion methods based on graph neural networks (GNNs) have been proposed, they generally follow homogeneous fusion schemes that ignore the rich heterogeneity of dual-modal information. To address this issue, we propose a novel method that integrates functional and structural connectivity based on heterogeneous graph neural networks (HGNNs) to better leverage the rich heterogeneity in dual-modal images. First, we use the blood-oxygen-level-dependent (BOLD) signal and white matter structure information provided by rs-fMRI and DTI to establish homo-meta-paths, capturing node relationships within the same modality. At the same time, we establish hetero-meta-paths based on structure-function coupling and brain community searching to capture relations among cross-modal nodes. Second, we introduce a heterogeneous graph pooling strategy that automatically balances homo- and hetero-meta-paths, effectively leveraging heterogeneous information and preventing feature confusion after pooling. Third, exploiting the flexibility of heterogeneous graphs, we propose a heterogeneous graph data augmentation approach that can conveniently address the sample imbalance issue commonly seen in clinical diagnosis. We evaluate our method on the ADNI-3 dataset for mild cognitive impairment (MCI) diagnosis. Experimental results indicate that the proposed method is effective and superior to other algorithms, with a mean classification accuracy of 93.3%.

Interpretable Spatio-Temporal Embedding for Brain Structural-Effective Network with Ordinary Differential Equation

May 21, 2024

Abstract: The MRI-derived brain network serves as a pivotal instrument in elucidating both the structural and functional aspects of the brain, encompassing the ramifications of diseases and developmental processes. However, prevailing methodologies, which often focus on synchronous BOLD signals from functional MRI (fMRI), may not capture directional influences among brain regions and rarely tackle temporal functional dynamics. In this study, we first construct the brain-effective network via the dynamic causal model. Subsequently, we introduce an interpretable graph learning framework termed Spatio-Temporal Embedding ODE (STE-ODE). This framework incorporates specifically designed directed node embedding layers that aim to capture the dynamic interplay between structural and effective networks via an ordinary differential equation (ODE) model, which characterizes spatial-temporal brain dynamics. Our framework is validated on several clinical phenotype prediction tasks using two independent publicly available datasets (HCP and OASIS). The experimental results clearly demonstrate the advantages of our model compared to several state-of-the-art methods.

Constrained Multiview Representation for Self-supervised Contrastive Learning

Feb 05, 2024

Abstract: Representation learning constitutes a pivotal cornerstone in contemporary deep learning paradigms, offering a conduit to elucidate distinctive features within the latent space and interpret the deep models. Nevertheless, the inherent complexity of anatomical patterns and the random nature of lesion distribution in medical image segmentation pose significant challenges to the disentanglement of representations and the understanding of salient features. Methods guided by the maximization of mutual information, particularly within the framework of contrastive learning, have demonstrated remarkable success and superiority in decoupling densely intertwined representations. However, the effectiveness of contrastive learning depends heavily on the quality of the positive and negative sample pairs: averaging mutual information over unselected views can obstruct learning, so the selection of views is vital. In this work, we introduce a novel approach predicated on representation distance-based mutual information (MI) maximization for measuring the significance of different views, aiming at more efficient contrastive learning and representation disentanglement. Additionally, we introduce an MI re-ranking strategy for representation selection, benefiting both continuous MI estimation and the measurement of representation significance distance. Specifically, we harness multi-view representations extracted from the frequency domain, re-evaluating their significance based on mutual information across varying frequencies, thereby facilitating a multifaceted contrastive learning approach to bolster semantic comprehension. The statistical results under five metrics demonstrate that our proposed framework proficiently constrains the MI maximization-driven representation selection and steers the multi-view contrastive learning process.
