Siwen Quan

SPU-IMR: Self-supervised Arbitrary-scale Point Cloud Upsampling via Iterative Mask-recovery Network

Feb 26, 2025

Abstract:Point cloud upsampling aims to generate dense and uniformly distributed point sets from sparse point clouds. Existing point cloud upsampling methods typically approach the task as an interpolation problem. They achieve upsampling by performing local interpolation between point clouds or in the feature space, then regressing the interpolated points to appropriate positions. By contrast, our proposed method treats point cloud upsampling as a global shape completion problem. Specifically, our method first divides the point cloud into multiple patches. Then, a masking operation is applied to remove some patches, leaving visible point cloud patches. Finally, our custom-designed neural network iteratively completes the missing sections of the point cloud from the visible parts. During testing, by selecting different mask sequences, we can restore various complete patches. A sufficiently dense upsampled point cloud can be obtained by merging all the completed patches. We demonstrate the superior performance of our method through both quantitative and qualitative experiments, showing overall superiority over both existing self-supervised and supervised methods.
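The patch-and-mask step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the use of farthest point sampling for patch centers, nearest-neighbor grouping, random masking, and every function name and parameter below are assumptions made for illustration, and the completion network itself is not shown.

```python
import numpy as np

def farthest_point_sampling(points, n_centers, seed=0):
    """Pick n_centers well-spread point indices (assumed patch-center strategy)."""
    rng = np.random.default_rng(seed)
    n = points.shape[0]
    chosen = np.empty(n_centers, dtype=int)
    chosen[0] = rng.integers(n)
    dist = np.full(n, np.inf)  # squared distance to the nearest chosen center
    for i in range(1, n_centers):
        diff = points - points[chosen[i - 1]]
        dist = np.minimum(dist, np.einsum("ij,ij->i", diff, diff))
        chosen[i] = int(np.argmax(dist))
    return chosen

def make_patches(points, n_patches, patch_size, seed=0):
    """Group the patch_size nearest points around each center into a patch."""
    centers = farthest_point_sampling(points, n_patches, seed)
    d2 = ((points[centers][:, None, :] - points[None, :, :]) ** 2).sum(-1)
    return np.argsort(d2, axis=1)[:, :patch_size]  # shape (n_patches, patch_size)

def split_visible_masked(patch_idx, mask_ratio, seed=0):
    """Randomly hide a fraction of the patches; return (visible, masked)."""
    rng = np.random.default_rng(seed)
    n_patches = patch_idx.shape[0]
    n_masked = int(round(mask_ratio * n_patches))
    order = rng.permutation(n_patches)
    return patch_idx[order[n_masked:]], patch_idx[order[:n_masked]]

# Hypothetical sparse input: 256 random points in the unit cube.
points = np.random.default_rng(1).random((256, 3))
patches = make_patches(points, n_patches=16, patch_size=32)
visible, masked = split_visible_masked(patches, mask_ratio=0.5)
```

Under these assumptions, a completion network would receive `points[visible]` and be asked to predict the masked patches. Repeating the split with different seeds corresponds to choosing different mask sequences at test time.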

Deep Learning for 3D Point Cloud Enhancement: A Survey

Oct 30, 2024

Abstract:Point cloud data are now a popular data representation in a number of three-dimensional (3D) vision research realms. However, due to the limited performance of sensors and sensing noise, the raw data usually suffer from sparsity, noise, and incompleteness. This poses great challenges to downstream point cloud processing tasks. In recent years, deep-learning-based point cloud enhancement methods, which aim to achieve dense, clean, and complete point clouds from low-quality raw point clouds using deep neural networks, have been gaining tremendous research attention. This paper, for the first time to our knowledge, presents a comprehensive survey of deep-learning-based point cloud enhancement methods. It covers three main perspectives for point cloud enhancement, i.e., (1) denoising to achieve clean data; (2) completion to recover unseen data; (3) upsampling to obtain dense data. Our survey presents a new taxonomy for recent state-of-the-art methods and systematic experimental results on standard benchmarks. In addition, we share our observations, thoughts, and future research directions for point cloud enhancement with deep learning.

On Efficient and Robust Metrics for RANSAC Hypotheses and 3D Rigid Registration

Nov 10, 2020

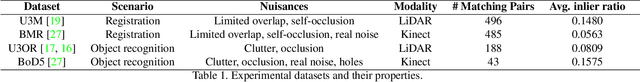

Abstract:This paper focuses on developing efficient and robust evaluation metrics for RANSAC hypotheses to achieve accurate 3D rigid registration. Estimating six-degree-of-freedom (6-DoF) pose from feature correspondences remains a popular approach to 3D rigid registration, where random sample consensus (RANSAC) is the de facto choice for this problem. However, existing metrics for RANSAC hypotheses are either time-consuming or sensitive to common nuisances, parameter variations, and different application scenarios, resulting in performance deterioration in overall registration accuracy and speed. We alleviate this problem by first analyzing the contributions of inliers and outliers, and then proposing several efficient and robust metrics with different design motivations for RANSAC hypotheses. Comparative experiments on four standard datasets with different nuisances and application scenarios verify that the proposed metrics can significantly improve the registration performance and are more robust than several state-of-the-art competitors, making them well suited to practical applications. This work also draws an interesting conclusion, i.e., not all inliers are equal while all outliers should be equal, which may shed new light on this research problem.
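To make the idea of a hypothesis metric concrete, the sketch below scores a candidate rigid transform in two ways: a plain inlier count, and a truncated quadratic score in the style of the well-known MSAC metric. The truncated score is a stand-in chosen for illustration, not one of the metrics proposed in the paper, and all names and values are hypothetical. It does reflect the abstract's closing remark in a simple form: inliers contribute according to their residual, while every outlier contributes the same amount (zero).

```python
import numpy as np

def residuals(src, dst, R, t):
    """Distance between each transformed source point and its matched target."""
    return np.linalg.norm(src @ R.T + t - dst, axis=1)

def inlier_count(res, threshold):
    """Classic RANSAC metric: every inlier counts the same."""
    return int((res < threshold).sum())

def truncated_score(res, threshold):
    """MSAC-style metric: closer inliers score higher, each outlier scores 0."""
    return float(np.clip(1.0 - (res / threshold) ** 2, 0.0, None).sum())

# Hypothetical correspondences: three exact matches and two gross outliers.
src = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]], dtype=float)
dst = src.copy()
dst[3] += [1.0, 0.0, 0.0]
dst[4] += [5.0, 0.0, 0.0]

R = np.eye(3)
threshold = 0.1
res_a = residuals(src, dst, R, np.zeros(3))                 # exact hypothesis
res_b = residuals(src, dst, R, np.array([0.05, 0.0, 0.0]))  # slightly shifted hypothesis

count_a, count_b = inlier_count(res_a, threshold), inlier_count(res_b, threshold)
score_a, score_b = truncated_score(res_a, threshold), truncated_score(res_b, threshold)
```

Both hypotheses have the same inlier count here, so the count alone cannot rank them; the residual-weighted score prefers the exact one.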

3D Correspondence Grouping with Compatibility Features

Jul 21, 2020

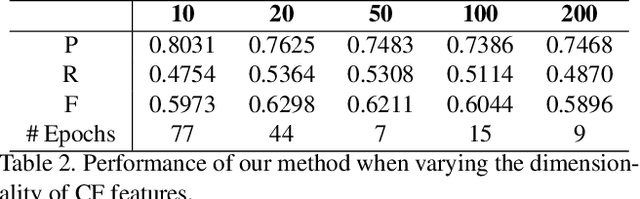

Abstract:We present a simple yet effective method for 3D correspondence grouping. The objective is to accurately classify initial correspondences obtained by matching local geometric descriptors into inliers and outliers. Although the spatial distribution of correspondences is irregular, inliers are expected to be geometrically compatible with each other. Based on this observation, we propose a novel representation for 3D correspondences, dubbed compatibility feature (CF), to describe the consistencies within inliers and inconsistencies within outliers. CF consists of top-ranked compatibility scores of a candidate to other correspondences, and relies purely on robust and rotation-invariant geometric constraints. We then formulate the grouping problem as a classification problem for CFs, which is accomplished via a simple multilayer perceptron (MLP) network. Comparisons with nine state-of-the-art methods on four benchmarks demonstrate that: 1) CF is distinctive, robust, and rotation-invariant; 2) our CF-based method achieves the best overall performance and holds good generalization ability.
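A compatibility feature of this kind can be sketched as follows. The abstract does not specify the constraint or the scoring function, so this example assumes a pairwise distance-preservation constraint with a Gaussian kernel; `sigma`, `k`, and all names are hypothetical, and the MLP classifier is omitted.

```python
import numpy as np

def compatibility_features(src, dst, k, sigma):
    """For each correspondence (src[i], dst[i]), return its k highest
    compatibility scores with the other correspondences, in descending order."""
    d_src = np.linalg.norm(src[:, None] - src[None], axis=-1)
    d_dst = np.linalg.norm(dst[:, None] - dst[None], axis=-1)
    # A rigid motion preserves pairwise distances, so two inliers should agree.
    scores = np.exp(-((d_src - d_dst) ** 2) / (2.0 * sigma ** 2))
    np.fill_diagonal(scores, -np.inf)  # ignore a correspondence's score with itself
    return -np.sort(-scores, axis=1)[:, :k]

# Hypothetical data: 6 inliers under a rigid motion plus 2 random outliers.
rng = np.random.default_rng(0)
src = rng.random((8, 3))
angle = np.deg2rad(30.0)
R = np.array([[np.cos(angle), -np.sin(angle), 0.0],
              [np.sin(angle),  np.cos(angle), 0.0],
              [0.0,            0.0,           1.0]])
dst = src @ R.T + np.array([0.5, -0.2, 1.0])
dst[6:] = rng.random((2, 3)) * 10.0  # break the last two correspondences

features = compatibility_features(src, dst, k=3, sigma=0.05)
```

Each row of `features` is a fixed-length vector that a classifier could consume. Because it is built only from pairwise distances, it does not change when either point set is rotated.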

Evaluating Local Geometric Feature Representations for 3D Rigid Data Matching

Jun 29, 2019

Abstract:Local geometric descriptors remain an essential component for 3D rigid data matching and fusion. The design of a rotation-invariant local geometric descriptor usually consists of two steps: local reference frame (LRF) construction and feature representation. Existing evaluation efforts have mainly focused on the LRF or the overall descriptor, yet the quantitative comparison of feature representations remains unexplored. This paper fills this gap by comprehensively evaluating nine state-of-the-art local geometric feature representations. Our evaluation leverages ground-truth LRFs, so that the ranking of the tested feature representations is more convincing than in existing studies. The experiments are conducted on six standard datasets with various application scenarios (shape retrieval, point cloud registration, and object recognition) and data modalities (LiDAR, Kinect, and Space Time) as well as perturbations including Gaussian noise, shot noise, data decimation, clutter, occlusion, and limited overlap. The evaluation criteria cover the major concerns for a feature representation, e.g., distinctiveness, robustness, compactness, and efficiency. The outcomes present interesting findings that may shed new light on this community and provide complementary perspectives to existing evaluations on the topic of local geometric feature description. A summary of the evaluated methods and their characteristics is also presented to guide real-world applications and the design of new descriptors.
