Sinem Coleri

Large AI Model-Enabled Secure Communications in Low-Altitude Wireless Networks: Concepts, Perspectives and Case Study

Aug 01, 2025

Abstract:Low-altitude wireless networks (LAWNs) have the potential to revolutionize communications by supporting a range of applications, including urban parcel delivery, aerial inspections and air taxis. However, compared with traditional wireless networks, LAWNs face unique security challenges due to low-altitude operations, frequent mobility and reliance on unlicensed spectrum, making them more vulnerable to malicious attacks. In this paper, we investigate large artificial intelligence model (LAM)-enabled solutions for secure communications in LAWNs. Specifically, we first explore the amplified security risks and important limitations of traditional AI methods in LAWNs. Then, we introduce the basic concepts of LAMs and delve into the role of LAMs in addressing these challenges. To demonstrate the practical benefits of LAMs for secure communications in LAWNs, we propose a novel LAM-based optimization framework that leverages large language models (LLMs) to generate enhanced state features on top of handcrafted representations, and to design intrinsic rewards accordingly, thereby improving reinforcement learning performance for secure communication tasks. Through a typical case study, simulation results validate the effectiveness of the proposed framework. Finally, we outline future directions for integrating LAMs into secure LAWN applications.

From Ground to Sky: Architectures, Applications, and Challenges Shaping Low-Altitude Wireless Networks

Jun 14, 2025

Abstract:In this article, we introduce a novel low-altitude wireless network (LAWN), which is a reconfigurable, three-dimensional (3D) layered architecture. In particular, the LAWN integrates connectivity, sensing, control, and computing across aerial and terrestrial nodes that enable seamless operation in complex, dynamic, and mission-critical environments. Unlike conventional aerial communication systems, LAWN's distinctive feature is its tight integration of functional planes in which multiple functionalities continually reshape themselves to operate safely and efficiently in the low-altitude sky. With the LAWN, we discuss several enabling technologies, such as integrated sensing and communication (ISAC), semantic communication, and fully-actuated control systems. Finally, we identify potential applications and key cross-layer challenges. This article offers a comprehensive roadmap for future research and development in the low-altitude airspace.

SNR and Resource Adaptive Deep JSCC for Distributed IoT Image Classification

Jun 12, 2025

Abstract:Sensor-based local inference at IoT devices faces severe computational limitations, often requiring data transmission over noisy wireless channels for server-side processing. To address this, split-network Deep Neural Network (DNN) based Joint Source-Channel Coding (JSCC) schemes are used to extract and transmit relevant features instead of raw data. However, most existing methods rely on fixed network splits and static configurations, lacking adaptability to varying computational budgets and channel conditions. In this paper, we propose a novel SNR- and computation-adaptive distributed CNN framework for wireless image classification across IoT devices and edge servers. We introduce a learning-assisted intelligent Genetic Algorithm (LAIGA) that efficiently explores the CNN hyperparameter space to optimize the network configuration under given FLOPs constraints and a given SNR. LAIGA discards the infeasible network configurations that exceed the computational budget at the IoT device. It also benefits from Random Forests based learning assistance to avoid a thorough exploration of the hyperparameter space and to induce application-specific bias in candidate optimal configurations. Experimental results demonstrate that the proposed framework outperforms fixed-split architectures and existing SNR-adaptive methods, especially under low SNR and limited computational resources. We achieve a 10% increase in classification accuracy compared to the existing JSCC based SNR-adaptive multilayer framework at an SNR as low as -10 dB across a range of available computational budgets (1M to 70M FLOPs) at the IoT device.

Secure Cluster-Based Hierarchical Federated Learning in Vehicular Networks

May 02, 2025

Abstract:Hierarchical Federated Learning (HFL) has recently emerged as a promising solution for intelligent decision-making in vehicular networks, helping to address challenges such as limited communication resources, high vehicle mobility, and data heterogeneity. However, HFL remains vulnerable to adversarial and unreliable vehicles, whose misleading updates can significantly compromise the integrity and convergence of the global model. To address these challenges, we propose a novel defense framework that integrates dynamic vehicle selection with robust anomaly detection within a cluster-based HFL architecture, specifically designed to counter Gaussian noise and gradient ascent attacks. The framework performs a comprehensive reliability assessment for each vehicle by evaluating historical accuracy, contribution frequency, and anomaly records. Anomaly detection combines Z-score and cosine similarity analyses of model updates to identify both statistical outliers and directional deviations. To further refine detection, an adaptive thresholding mechanism is incorporated into the cosine similarity metric, dynamically adjusting the threshold based on the historical accuracy of each vehicle to enforce stricter standards for consistently high-performing vehicles. In addition, a weighted gradient averaging mechanism is implemented, which assigns higher weights to gradient updates from more trustworthy vehicles. To defend against coordinated attacks, a cross-cluster consistency check is applied to identify collaborative attacks in which multiple compromised clusters coordinate misleading updates. Together, these mechanisms form a multi-level defense strategy to filter out malicious contributions effectively. Simulation results show that the proposed algorithm significantly reduces convergence time compared to benchmark methods across both 1-hop and 3-hop topologies.
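
The combination of Z-score and cosine similarity checks described in this abstract can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the Z-score is applied to update norms, the reference direction is the coordinate-wise median of all updates, and the names `flag_anomalies`, `z_max`, `base_thr` and `gain` are hypothetical.

```python
import math
from statistics import mean, median, pstdev


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def flag_anomalies(updates, hist_acc, z_max=2.0, base_thr=0.2, gain=0.5):
    """Return indices of updates flagged as anomalous.

    updates  : list of equal-length lists of floats (one model update per vehicle)
    hist_acc : historical accuracy of each vehicle, in [0, 1]
    Assumed rule (not from the paper): flag an update if its norm is a
    statistical outlier OR its direction deviates from the reference.
    """
    norms = [math.sqrt(sum(x * x for x in u)) for u in updates]
    mu, sigma = mean(norms), pstdev(norms)
    dim = len(updates[0])
    # Coordinate-wise median: a reference direction that a few attackers
    # cannot easily drag away from the honest majority.
    ref = [median(u[d] for u in updates) for d in range(dim)]

    flagged = []
    for i, u in enumerate(updates):
        z = abs(norms[i] - mu) / sigma if sigma > 0 else 0.0
        # Adaptive threshold: vehicles with higher historical accuracy
        # are held to a stricter (higher) similarity requirement.
        thr = base_thr + gain * hist_acc[i]
        if z > z_max or cosine_similarity(u, ref) < thr:
            flagged.append(i)
    return flagged
```

In this sketch a sign-flipped update (as a gradient ascent attack would produce) fails the cosine check, while a heavily scaled update fails the Z-score check even though its direction looks normal.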

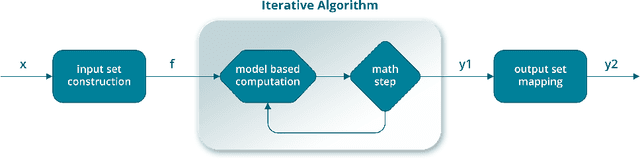

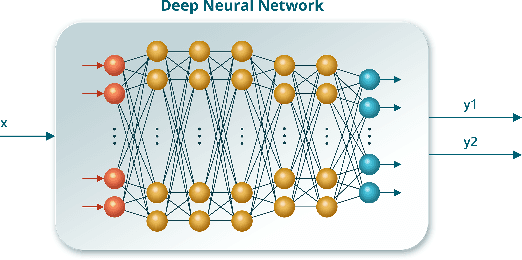

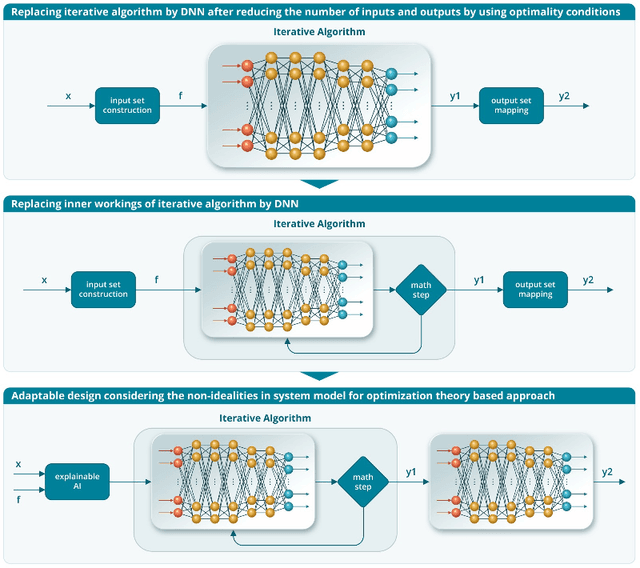

Integrating Optimization Theory with Deep Learning for Wireless Network Design

Dec 11, 2024

Abstract:Traditional wireless network design relies on optimization algorithms derived from domain-specific mathematical models, which are often inefficient and unsuitable for dynamic, real-time applications due to high complexity. Deep learning has emerged as a promising alternative to overcome complexity and adaptability concerns, but it faces challenges such as accuracy issues, delays, and limited interpretability due to its inherent black-box nature. This paper introduces a novel approach that integrates optimization theory with deep learning methodologies to address these issues. The methodology starts by constructing the block diagram of the optimization theory-based solution, identifying key building blocks corresponding to optimality conditions and iterative solutions. Selected building blocks are then replaced with deep neural networks, enhancing the adaptability and interpretability of the system. Extensive simulations show that this hybrid approach not only reduces runtime compared to optimization theory based approaches but also significantly improves accuracy and convergence rates, outperforming pure deep learning models.

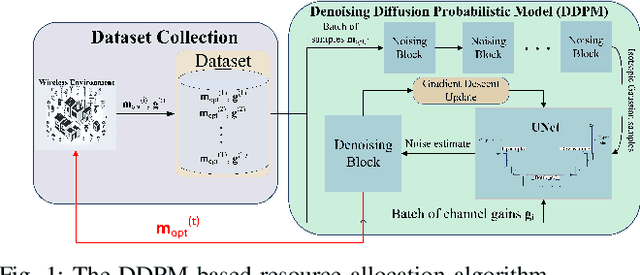

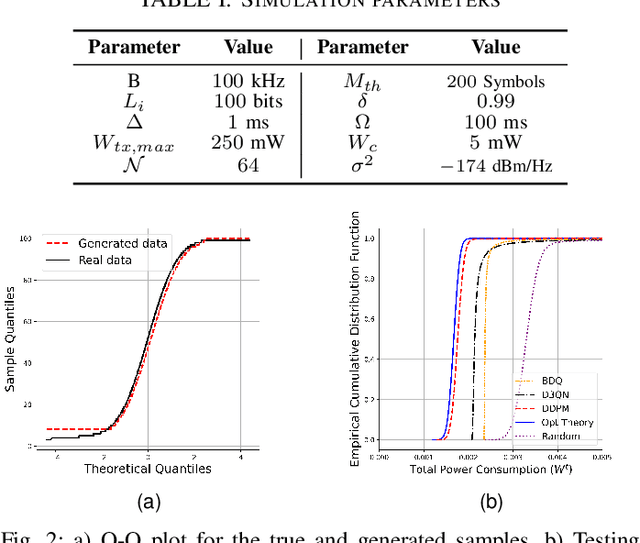

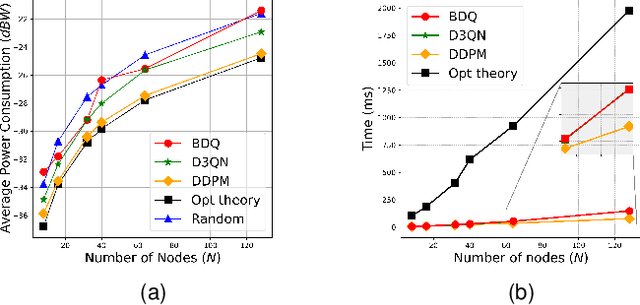

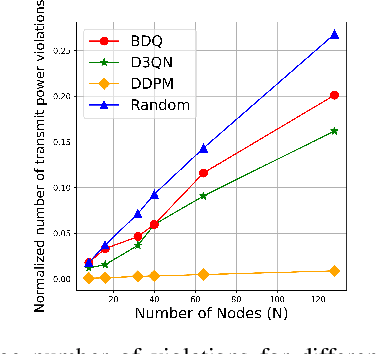

Diffusion Model Based Resource Allocation Strategy in Ultra-Reliable Wireless Networked Control Systems

Jul 22, 2024

Abstract:Diffusion models are widely used in generative AI, leveraging their capability to capture complex data distributions. However, their potential remains largely unexplored in the field of resource allocation in wireless networks. This paper introduces a novel diffusion model-based resource allocation strategy for Wireless Networked Control Systems (WNCSs) with the objective of minimizing total power consumption through the optimization of the sampling period in the control system, and blocklength and packet error probability in the finite blocklength regime of the communication system. The problem is first reduced to the optimization of blocklength only, based on the derivation of the optimality conditions. Then, the optimization theory solution is used to collect a dataset of channel gains and corresponding optimal blocklengths. Finally, the Denoising Diffusion Probabilistic Model (DDPM) uses this collected dataset to train the resource allocation algorithm that generates optimal blocklength values conditioned on the channel state information (CSI). Via extensive simulations, the proposed approach is shown to outperform previously proposed Deep Reinforcement Learning (DRL) based approaches with close to optimal performance regarding total power consumption. Moreover, an improvement of up to eighteen-fold in the reduction of critical constraint violations is observed, further underscoring the accuracy of the solution.

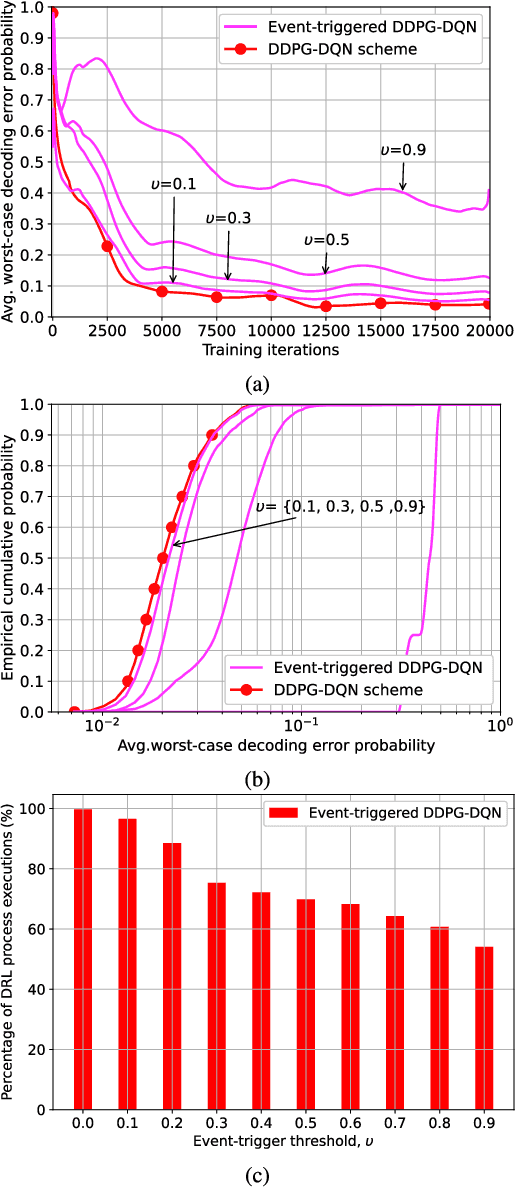

Event-Triggered Reinforcement Learning Based Joint Resource Allocation for Ultra-Reliable Low-Latency V2X Communications

Jul 18, 2024

Abstract:Future 6G-enabled vehicular networks face the challenge of ensuring ultra-reliable low-latency communication (URLLC) for delivering safety-critical information in a timely manner. Existing resource allocation schemes for vehicle-to-everything (V2X) communication systems primarily rely on traditional optimization-based algorithms. However, these methods often fail to guarantee the strict reliability and latency requirements of URLLC applications in dynamic vehicular environments due to the high complexity and communication overhead of the solution methodologies. This paper proposes a novel deep reinforcement learning (DRL) based framework for the joint power and block length allocation to minimize the worst-case decoding-error probability in the finite block length (FBL) regime for a URLLC-based downlink V2X communication system. The problem is formulated as a non-convex mixed-integer nonlinear programming problem (MINLP). Initially, an algorithm grounded in optimization theory is developed based on deriving the joint convexity of the decoding error probability in the block length and transmit power variables within the region of interest. Subsequently, an efficient event-triggered DRL-based algorithm is proposed to solve the joint optimization problem. Incorporating event-triggered learning into the DRL framework enables assessing whether to initiate the DRL process, thereby reducing the number of DRL process executions while maintaining reasonable reliability performance. Simulation results demonstrate that the proposed event-triggered DRL scheme can achieve 95% of the performance of the joint optimization scheme while reducing the DRL executions by up to 24% for different network settings.
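
To make the dependence of the decoding-error probability on block length and transmit power concrete, the sketch below evaluates the widely used normal approximation for an AWGN channel in the finite block length regime. Using this particular expression is an assumption: the abstract does not state which formula the paper adopts, and the function and parameter names (`decoding_error_probability`, `snr`, `blocklength`, `info_bits`) are hypothetical.

```python
import math


def q_function(x):
    """Gaussian tail probability Q(x) = P(Z > x) for standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def decoding_error_probability(snr, blocklength, info_bits):
    """Normal approximation of the decoding error probability.

    snr         : received signal-to-noise ratio (linear scale, > 0)
    blocklength : number of channel uses n
    info_bits   : number of information bits k
    Assumed model: epsilon ~ Q( sqrt(n / V) * (C - k / n) ), with
    C = log2(1 + snr) and V = (1 - (1 + snr)^-2) * (log2 e)^2.
    """
    capacity = math.log2(1.0 + snr)
    dispersion = (1.0 - (1.0 + snr) ** -2) * math.log2(math.e) ** 2
    rate = info_bits / blocklength
    return q_function(math.sqrt(blocklength / dispersion) * (capacity - rate))


def worst_case_error(snrs, blocklengths, info_bits):
    """Largest error probability across links: the quantity a
    worst-case (min-max) allocation would try to reduce."""
    return max(
        decoding_error_probability(s, n, info_bits)
        for s, n in zip(snrs, blocklengths)
    )
```

Under this approximation the error probability falls as either the SNR (and hence transmit power) or the block length grows, which is why the two resources can be traded against each other in a joint allocation.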

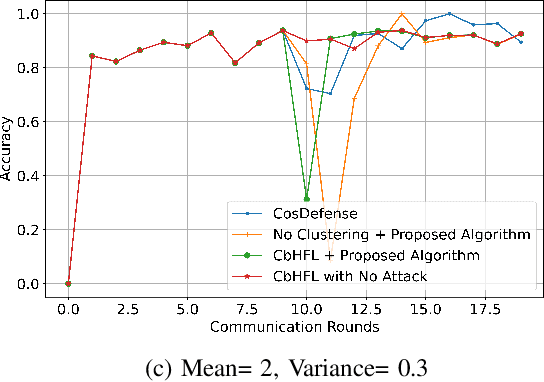

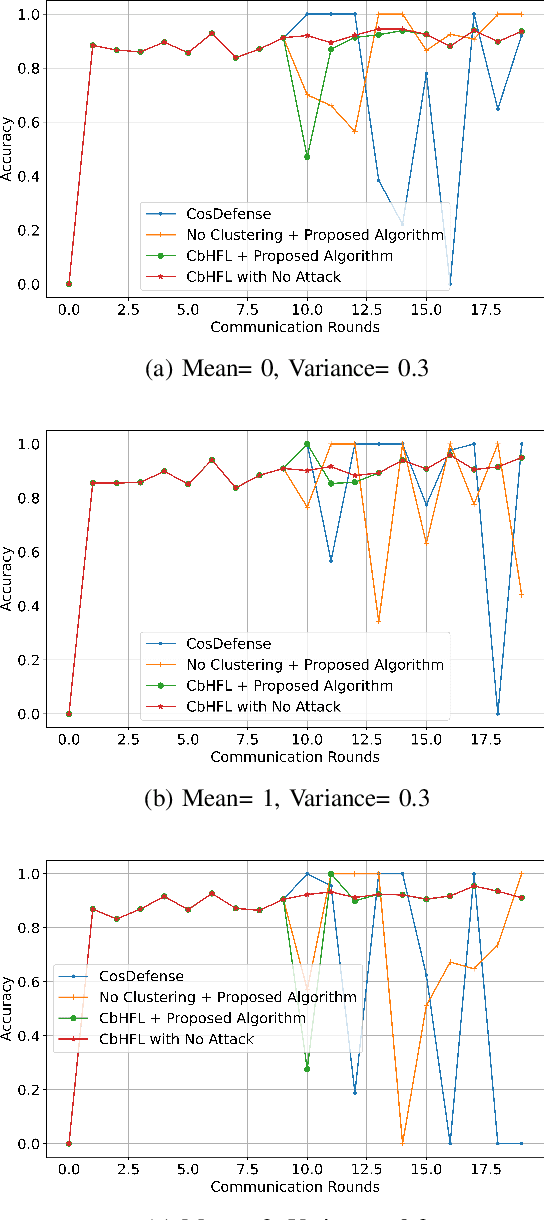

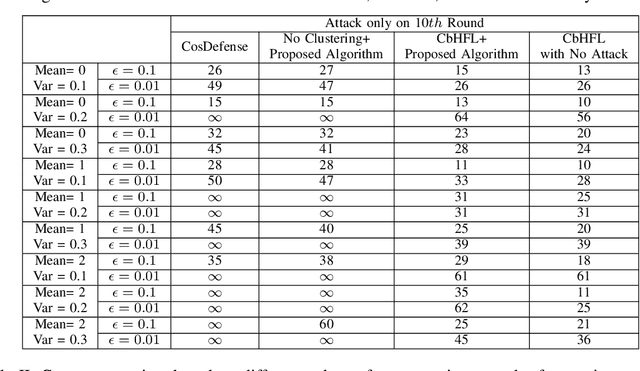

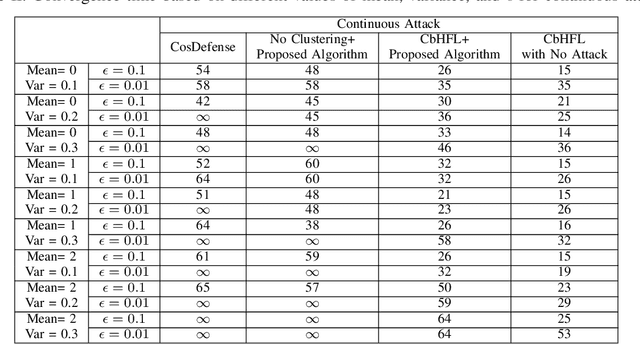

Secure Hierarchical Federated Learning in Vehicular Networks Using Dynamic Client Selection and Anomaly Detection

May 25, 2024

Abstract:Hierarchical Federated Learning (HFL) faces the significant challenge of adversarial or unreliable vehicles in vehicular networks, which can compromise the model's integrity through misleading updates. Addressing this, our study introduces a novel framework that integrates dynamic vehicle selection and robust anomaly detection mechanisms, aiming to optimize participant selection and mitigate risks associated with malicious contributions. Our approach involves a comprehensive vehicle reliability assessment, considering historical accuracy, contribution frequency, and anomaly records. An anomaly detection algorithm is utilized to identify anomalous behavior by analyzing the cosine similarity of local or model parameters during the federated learning (FL) process. These anomaly records are then registered and combined with past accuracy and contribution frequency to identify the most suitable vehicles for each learning round. Dynamic client selection and anomaly detection algorithms are deployed at different levels, including cluster heads (CHs), cluster members (CMs), and the Evolved Packet Core (EPC), to detect and filter out spurious updates. Through simulation-based performance evaluation, our proposed algorithm demonstrates remarkable resilience even under intense attack conditions. Even in the worst-case scenarios, it achieves convergence times 63% as effective as those in scenarios without any attacks. Conversely, in scenarios without utilizing our proposed algorithm, there is a high likelihood of non-convergence in the FL process.

Teacher-Student Learning based Low Complexity Relay Selection in Wireless Powered Communications

Feb 03, 2024

Abstract:Radio Frequency Energy Harvesting (RF-EH) networks are key enablers of the massive Internet of Things by providing controllable and long-distance energy transfer to energy-limited devices. Relays, helping either energy or information transfer, have been demonstrated to significantly improve the performance of these networks. This paper studies the joint relay selection, scheduling, and power control problem in multiple-source-multiple-relay RF-EH networks under nonlinear EH conditions. We first obtain the optimal solution to the scheduling and power control problem for the given relay selection. Then, the relay selection problem is formulated as a classification problem, for which two convolutional neural network (CNN) based architectures are proposed. While the first architecture employs conventional 2D convolution blocks and benefits from skip connections between layers, the second architecture replaces them with inception blocks to decrease trainable parameter size without sacrificing accuracy for memory-constrained applications. To decrease the runtime complexity further, teacher-student learning is employed such that the teacher network is larger, and the student is a smaller CNN-based architecture distilling the teacher's knowledge. A novel dichotomous search-based algorithm is employed to determine the best architecture for the student network. Our simulation results demonstrate that the proposed solutions provide lower complexity than the state-of-the-art iterative approaches without compromising optimality.
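
The teacher-student step can be illustrated with a standard knowledge-distillation loss for a classifier. This is only a sketch under assumptions: the abstract does not specify the loss, so the temperature-softened KL term, the mixing weight `alpha`, and the function names below are hypothetical choices following the common distillation recipe rather than the paper's formulation.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target term and a hard-label term.

    soft: KL divergence between temperature-softened teacher and
          student distributions, scaled by temperature**2.
    hard: cross-entropy of the student against the true class index
          (here, the index of the correct relay selection).
    Assumes moderate logit magnitudes so no probability underflows to 0.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * temperature ** 2 * soft + (1.0 - alpha) * hard
```

A student whose logits match the teacher's incurs no soft-target penalty, so with `alpha=1.0` the loss is zero; any disagreement with the teacher raises it.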

Hierarchical Federated Learning in Multi-hop Cluster-Based VANETs

Jan 18, 2024

Abstract:The use of federated learning (FL) in Vehicular Ad hoc Networks (VANETs) has garnered significant research interest due to the advantages of reducing transmission overhead and protecting user privacy by communicating local dataset gradients instead of raw data. However, implementing FL in VANETs faces challenges, including limited communication resources, high vehicle mobility, and the statistical diversity of data distributions. In order to tackle these issues, this paper introduces a novel framework for hierarchical federated learning (HFL) over multi-hop clustering-based VANET. The proposed method utilizes a weighted combination of the average relative speed and cosine similarity of FL model parameters as a clustering metric to consider both data diversity and high vehicle mobility. This metric ensures convergence with minimum changes in cluster heads while tackling the complexities associated with non-independent and identically distributed (non-IID) data scenarios. Additionally, the framework includes a novel mechanism to manage seamless transitions of cluster heads (CHs), followed by transferring the most recent FL model parameter to the designated CH. Furthermore, the proposed approach considers the option of merging CHs, aiming to reduce their count and, consequently, mitigate associated overhead. Through extensive simulations, the proposed hierarchical federated learning over clustered VANET has been demonstrated to improve accuracy and convergence time significantly while maintaining an acceptable level of packet overhead compared to previously proposed clustering algorithms and non-clustered VANET.
