Marco di Renzo

Secrecy Rate Maximization for 6G Reconfigurable Holographic Surfaces Assisted Systems

Mar 03, 2025

Abstract: Reconfigurable holographic surfaces (RHS) have emerged as a transformative material technology, enabling dynamic control of electromagnetic waves to generate versatile holographic beam patterns. This paper addresses the problem of secrecy rate maximization for RHS-assisted systems by jointly designing the digital beamforming, the artificial noise (AN), and the analog holographic beamforming. This problem is non-convex and challenging. To solve it, a novel alternating optimization algorithm based on the majorization-maximization (MM) framework for RHS-assisted systems is proposed, which relies on surrogate functions to facilitate efficient and reliable optimization. In the proposed approach, the digital beamforming design directs signal power toward the legitimate user while minimizing leakage to the unintended receiver. The AN generation method projects noise into the null space of the legitimate user channel, aligning it with the unintended receiver channel to degrade its signal quality. Finally, the holographic beamforming weights are optimized to refine the wavefronts for enhanced secrecy rate performance. Simulation results validate the effectiveness of the proposed framework, demonstrating significant improvements in secrecy rate compared to the benchmark method.
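The null-space idea mentioned in the abstract can be illustrated with a minimal NumPy sketch. This is not the paper's algorithm: it assumes a single-antenna legitimate user and a single-antenna unintended receiver, a received-signal model of the form y = h^H x, and randomly drawn channels. The names `null_space_projector`, `artificial_noise_direction`, `h_user`, and `h_eve` are hypothetical and chosen only for illustration.

```python
import numpy as np

def null_space_projector(h):
    """Return P = I - h h^H / ||h||^2, so that h^H P = 0 (assumed model: y = h^H x)."""
    h = h.reshape(-1, 1)
    n = h.shape[0]
    return np.eye(n, dtype=complex) - (h @ h.conj().T) / np.vdot(h, h).real

def artificial_noise_direction(h_user, h_eve):
    """Unit-norm AN direction: orthogonal to h_user, aligned with h_eve as far as possible."""
    P = null_space_projector(h_user)
    v = P @ h_eve  # part of the unintended receiver's channel orthogonal to h_user
    return v / np.linalg.norm(v)

# Hypothetical random channels for an 8-element transmitter (illustration only).
rng = np.random.default_rng(0)
n = 8
h_user = rng.standard_normal(n) + 1j * rng.standard_normal(n)
h_eve = rng.standard_normal(n) + 1j * rng.standard_normal(n)

v = artificial_noise_direction(h_user, h_eve)

# np.vdot conjugates its first argument, so these are |h^H v|.
leak_user = abs(np.vdot(h_user, v))  # AN seen by the legitimate user (numerically zero)
leak_eve = abs(np.vdot(h_eve, v))    # AN seen by the unintended receiver (nonzero)
```

Under these assumptions, `leak_user` vanishes up to floating-point error while `leak_eve` stays nonzero, which is the qualitative behavior the abstract describes. The joint MM-based optimization of the digital and holographic beamformers is outside the scope of this sketch.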

Integrating Optimization Theory with Deep Learning for Wireless Network Design

Dec 11, 2024

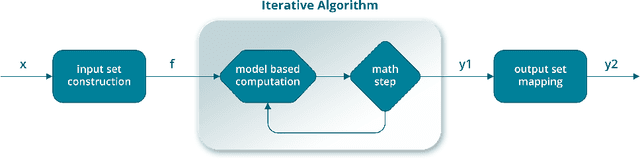

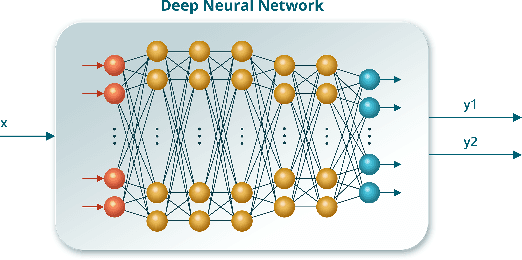

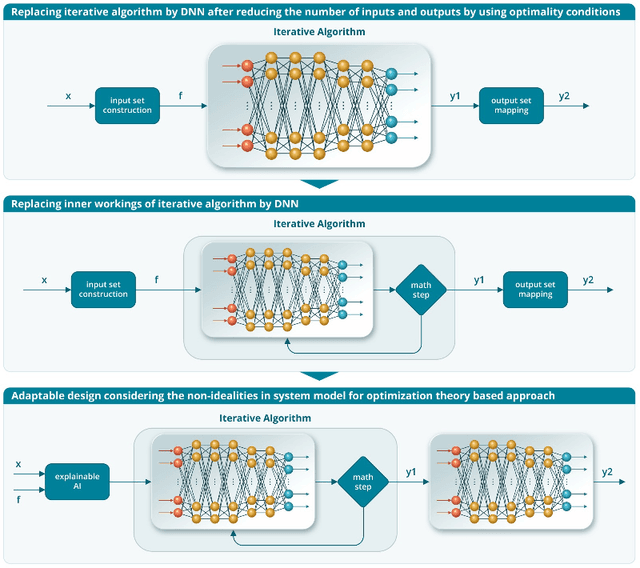

Abstract: Traditional wireless network design relies on optimization algorithms derived from domain-specific mathematical models, which are often inefficient and unsuitable for dynamic, real-time applications due to high complexity. Deep learning has emerged as a promising alternative to overcome complexity and adaptability concerns, but it faces challenges such as accuracy issues, delays, and limited interpretability due to its inherent black-box nature. This paper introduces a novel approach that integrates optimization theory with deep learning methodologies to address these issues. The methodology starts by constructing the block diagram of the optimization-theory-based solution, identifying key building blocks corresponding to optimality conditions and iterative solutions. Selected building blocks are then replaced with deep neural networks, enhancing the adaptability and interpretability of the system. Extensive simulations show that this hybrid approach not only reduces runtime compared to optimization-theory-based approaches but also significantly improves accuracy and convergence rates, outperforming pure deep learning models.
