Sina Shahhosseini

Edge-centric Optimization of Multi-modal ML-driven eHealth Applications

Aug 04, 2022

Abstract: Smart eHealth applications deliver personalized and preventive digital healthcare services to clients through remote sensing, continuous monitoring, and data analytics. These applications sense input data from multiple modalities, transmit the data to edge and/or cloud nodes, and process the data with compute-intensive machine learning (ML) algorithms. Run-time variations in the continuous stream of noisy input data, unreliable network connections, the computational requirements of ML algorithms, and the choice of compute placement across the sensor-edge-cloud layers all affect the efficiency of ML-driven eHealth applications. In this chapter, we present edge-centric techniques for optimized compute placement, exploration of accuracy-performance trade-offs, and cross-layered sense-compute co-optimization for ML-driven eHealth applications. We demonstrate practical use cases of smart eHealth applications in everyday settings through a sensor-edge-cloud framework for an objective pain assessment case study.

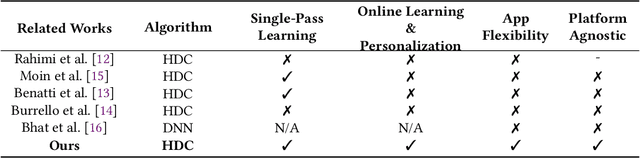

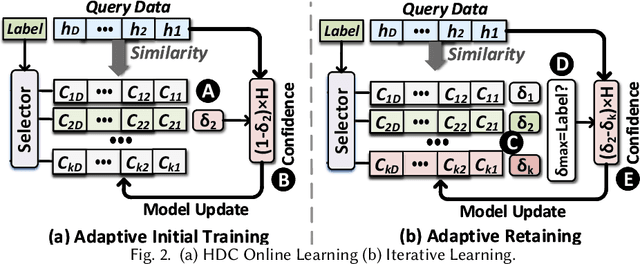

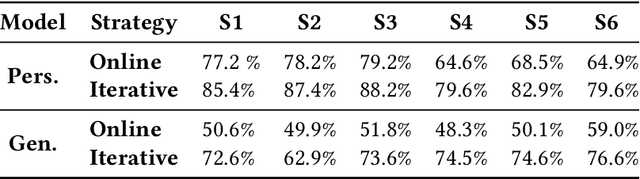

Efficient Personalized Learning for Wearable Health Applications using HyperDimensional Computing

Aug 01, 2022

Abstract: Health monitoring applications increasingly rely on machine learning techniques to learn end-user physiological and behavioral patterns in everyday settings. Given the significant role of wearable devices in monitoring human body parameters, on-device learning can be used to build personalized models of behavioral and physiological patterns while also preserving user data privacy. However, the resource constraints of most wearable devices prevent them from performing online learning. Addressing this issue requires rethinking machine learning models at the algorithmic level so that they are suitable to run on wearable devices. Hyperdimensional computing (HDC) offers a well-suited on-device learning solution for resource-constrained devices and supports privacy-preserving personalization. Our HDC-based method offers flexibility, high efficiency, resilience, and performance while enabling on-device personalization and privacy protection. We evaluate the efficacy of our approach using three case studies and show that our system improves the energy efficiency of training by up to $45.8\times$ compared with state-of-the-art Deep Neural Network (DNN) algorithms while offering comparable accuracy.
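To make the HDC idea above concrete, here is a minimal sketch of a generic hyperdimensional classifier in plain Python. It is not the paper's implementation: the dimensionality `D`, the ID/level encoding, the class name `HDClassifier`, and the toy "rest"/"active" samples are all assumptions chosen for illustration, and features are assumed to be normalized to [0, 1].

```python
import random

D = 2000  # hypervector dimensionality (assumed value, not from the paper)

def random_hv(rng):
    """Random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def cosine(a, b):
    """Cosine similarity between two integer vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = (sum(x * x for x in a) * sum(y * y for y in b)) ** 0.5
    return dot / norm if norm else 0.0

class HDClassifier:
    """Hypothetical toy classifier; features are assumed to lie in [0, 1]."""

    def __init__(self, n_features, n_levels=4, seed=0):
        rng = random.Random(seed)
        self.id_hvs = [random_hv(rng) for _ in range(n_features)]
        self.level_hvs = [random_hv(rng) for _ in range(n_levels)]
        self.prototypes = {}  # label -> accumulated class hypervector

    def encode(self, sample):
        """Bind each feature ID with its quantized level, then bundle by summing."""
        acc = [0] * D
        n_levels = len(self.level_hvs)
        for id_hv, value in zip(self.id_hvs, sample):
            level = self.level_hvs[min(int(value * n_levels), n_levels - 1)]
            for i in range(D):
                acc[i] += id_hv[i] * level[i]
        return acc

    def update(self, sample, label):
        """Single-pass training: add the encoded sample to its class prototype."""
        hv = self.encode(sample)
        proto = self.prototypes.setdefault(label, [0] * D)
        for i in range(D):
            proto[i] += hv[i]

    def predict(self, sample):
        """Return the label whose prototype is most similar to the sample."""
        hv = self.encode(sample)
        return max(self.prototypes, key=lambda c: cosine(hv, self.prototypes[c]))

clf = HDClassifier(n_features=3)
clf.update([0.1, 0.1, 0.1], "rest")      # toy, made-up training samples
clf.update([0.9, 0.9, 0.9], "active")
print(clf.predict([0.15, 0.05, 0.2]))
```

Training here is a single additive pass with no gradients or backpropagation, which is the property that makes this style of model attractive for on-device personalization: a new user sample only requires one encode and one vector addition.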

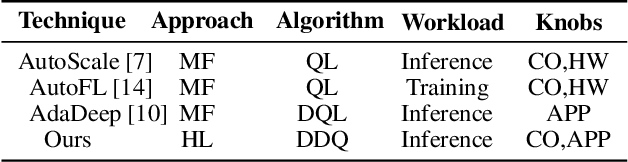

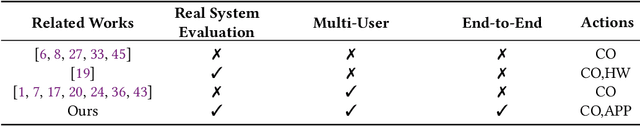

Hybrid Learning for Orchestrating Deep Learning Inference in Multi-user Edge-cloud Networks

Feb 21, 2022

Abstract: Deep-learning-based intelligent services have become prevalent in cyber-physical applications including smart cities and health-care. Collaborative end-edge-cloud computing for deep learning provides a range of performance and efficiency that can address application requirements through computation offloading. The decision to offload computation is a communication-computation co-optimization problem that varies with both system parameters (e.g., network condition) and workload characteristics (e.g., inputs). Identifying the optimal orchestration, given the cross-layer opportunities and requirements and the varying system dynamics, is a challenging multi-dimensional problem. While Reinforcement Learning (RL) approaches have been proposed earlier, they require a large number of trial-and-error interactions during the learning process, resulting in excessive time and resource consumption. We present a Hybrid Learning (HL) orchestration framework that reduces the number of interactions with the system environment by combining model-based and model-free reinforcement learning. Our Deep Learning inference orchestration strategy employs reinforcement learning to find the optimal orchestration policy, and HL accelerates the RL learning process by reducing the number of direct samplings. We evaluate our HL strategy experimentally against state-of-the-art RL-based inference orchestration and show that it accelerates the learning process by up to $166.6\times$.
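The abstract does not specify how model-based and model-free learning are combined. As one well-known illustration of the general idea, the sketch below uses Dyna-Q style planning: every real interaction is stored in a learned model and then replayed several times, so fewer real samples are needed. The toy environment (a network state that simply alternates between "weak" and "strong"), the latency table, and all hyperparameters are hypothetical and not taken from the paper.

```python
import random

ACTIONS = ("local", "edge", "cloud")
STATES = ("weak", "strong")

# Hypothetical latencies in ms per (network state, placement); not from the paper.
LATENCY_MS = {
    ("weak", "local"): 50, ("weak", "edge"): 80, ("weak", "cloud"): 120,
    ("strong", "local"): 50, ("strong", "edge"): 30, ("strong", "cloud"): 20,
}

def real_step(state, action):
    """Toy 'real' environment: reward is negative latency; the network toggles."""
    reward = -LATENCY_MS[(state, action)]
    next_state = "strong" if state == "weak" else "weak"
    return reward, next_state

def q_update(q, s, a, r, s2, alpha, gamma):
    """Standard one-step Q-learning update."""
    best_next = max(q[(s2, b)] for b in ACTIONS)
    q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])

def train(real_steps=200, planning_steps=10, alpha=0.2, gamma=0.5,
          epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    model = {}  # learned model: (state, action) -> (reward, next_state)
    state = "weak"
    for _ in range(real_steps):
        # Model-free part: epsilon-greedy action on the real system.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        reward, next_state = real_step(state, action)
        q_update(q, state, action, reward, next_state, alpha, gamma)
        model[(state, action)] = (reward, next_state)
        # Model-based part: replay simulated transitions from the learned model.
        for _ in range(planning_steps):
            ps, pa = rng.choice(list(model))
            pr, ps2 = model[(ps, pa)]
            q_update(q, ps, pa, pr, ps2, alpha, gamma)
        state = next_state
    policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
    return policy, q

policy, q = train()
print(policy)
```

With `planning_steps=10`, each real interaction drives eleven value updates, which is the mechanism by which this family of methods trades cheap simulated experience for expensive real sampling.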

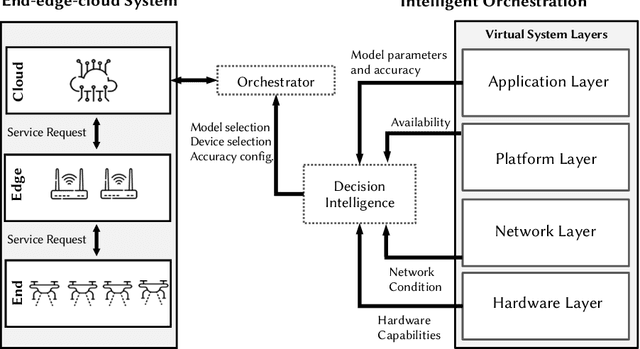

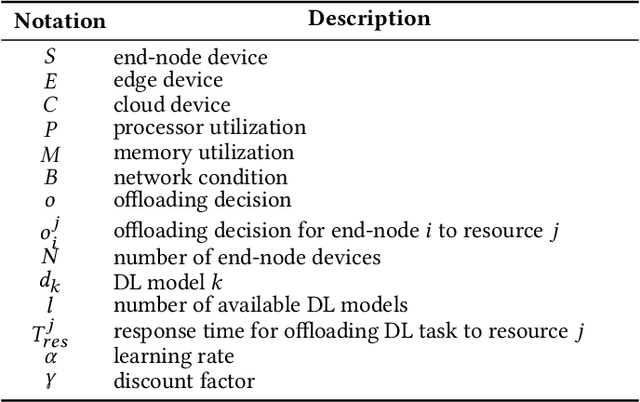

Online Learning for Orchestration of Inference in Multi-User End-Edge-Cloud Networks

Feb 21, 2022

Abstract: Deep-learning-based intelligent services have become prevalent in cyber-physical applications including smart cities and health-care. Deploying deep-learning-based intelligence near the end-user enhances privacy protection, responsiveness, and reliability. Resource-constrained end-devices must be carefully managed in order to meet the latency and energy requirements of computationally intensive deep learning services. Collaborative end-edge-cloud computing for deep learning provides a range of performance and efficiency that can address application requirements through computation offloading. The decision to offload computation is a communication-computation co-optimization problem that varies with both system parameters (e.g., network condition) and workload characteristics (e.g., inputs). In addition, deep learning model optimization provides another source of trade-off between latency and model accuracy. An end-to-end decision-making solution that considers this computation-communication problem is required to jointly find the optimal offloading policy and model for deep learning services. To this end, we propose a reinforcement-learning-based computation offloading solution that learns the optimal offloading policy while considering deep learning model selection techniques, in order to minimize response time while providing sufficient accuracy. We demonstrate the effectiveness of our solution for edge devices in an end-edge-cloud system and evaluate it with a real-setup implementation using multiple AWS and ARM core configurations. Our solution provides a 35% speedup in the average response time compared to the state-of-the-art with less than 0.9% accuracy reduction, demonstrating the promise of our online learning framework for orchestrating DL inference in end-edge-cloud systems.
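The joint decision described above (where to run, and which model variant to run) can be illustrated with a small brute-force search. This is only a sketch of the decision space, not the paper's reinforcement-learning method: the response-time model (compute time plus transfer time plus round-trip time), the model variants, the tier capacities, and every number below are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Model:
    name: str
    accuracy: float   # fraction in [0, 1]
    gflops: float     # compute cost per inference

@dataclass(frozen=True)
class Tier:
    name: str
    gflops_per_s: float  # sustained throughput of the node
    rtt_ms: float        # fixed round-trip overhead
    remote: bool         # True if the input must be uploaded

# All values below are made up for illustration.
MODELS = [Model("small", 0.70, 0.5), Model("large", 0.76, 4.0)]
TIERS = [
    Tier("device", 5.0, 0.0, False),
    Tier("edge", 50.0, 5.0, True),
    Tier("cloud", 500.0, 40.0, True),
]

def response_ms(model, tier, input_mbit, uplink_mbps):
    """Assumed response time: compute + (upload if remote) + round trip."""
    compute = model.gflops / tier.gflops_per_s * 1000.0
    transfer = input_mbit / uplink_mbps * 1000.0 if tier.remote else 0.0
    return compute + transfer + tier.rtt_ms

def best_choice(input_mbit, uplink_mbps, min_accuracy):
    """Fastest (latency_ms, tier, model) meeting the accuracy floor, or None."""
    candidates = [
        (response_ms(m, t, input_mbit, uplink_mbps), t.name, m.name)
        for m in MODELS if m.accuracy >= min_accuracy
        for t in TIERS
    ]
    return min(candidates) if candidates else None

print(best_choice(input_mbit=1.0, uplink_mbps=20.0, min_accuracy=0.75))
print(best_choice(input_mbit=1.0, uplink_mbps=1.0, min_accuracy=0.60))
```

Under these assumed numbers, a fast uplink favors offloading the larger model, while a slow uplink pushes the smaller model back onto the device. An online learner has to discover this kind of dependence from observed response times rather than from a known cost model.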

AMSER: Adaptive Multi-modal Sensing for Energy Efficient and Resilient eHealth Systems

Dec 13, 2021

Abstract: eHealth systems deliver critical digital healthcare and wellness services for users by continuously monitoring physiological and contextual data. eHealth applications use multi-modal machine learning kernels to analyze data from different sensor modalities and automate decision-making. Noisy inputs and motion artifacts during sensory data acquisition affect i) the prediction accuracy and resilience of eHealth services and ii) energy efficiency, as energy is wasted processing garbage data. Monitoring raw sensory inputs to identify and drop data and features from noisy modalities can improve prediction accuracy and energy efficiency. We propose AMSER, a closed-loop monitoring and control framework for multi-modal eHealth applications that mitigates garbage-in garbage-out by i) monitoring input modalities, ii) analyzing raw input to selectively drop noisy data and features, and iii) choosing appropriate machine learning models that fit the configured data and feature vector, thereby improving prediction accuracy and energy efficiency. We evaluate our AMSER approach using multi-modal eHealth applications of pain assessment and stress monitoring over different levels and types of noisy components incurred via different sensor modalities. Our approach achieves up to 22% improvement in prediction accuracy and 5.6$\times$ energy consumption reduction in the sensing phase against the state-of-the-art multi-modal monitoring application.
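The three steps above (monitor modalities, drop noisy ones, pick a model that matches the remaining features) can be sketched as a simple gating loop. This is an illustrative assumption rather than AMSER's actual logic: the modality names, the valid ranges, the in-range quality metric, the 0.8 threshold, and the model naming scheme are all hypothetical.

```python
# Hypothetical plausible ranges per modality (units are illustrative only).
VALID_RANGE = {"ecg": (-2.0, 2.0), "eda": (0.0, 30.0), "ppg": (0.0, 5.0)}

def quality(samples, lo, hi):
    """Fraction of samples inside the plausible range (a stand-in noise metric)."""
    if not samples:
        return 0.0
    return sum(lo <= x <= hi for x in samples) / len(samples)

def select_modalities(window, min_quality=0.8):
    """Step i and ii: monitor each modality and keep only the clean ones."""
    return frozenset(
        name for name, samples in window.items()
        if quality(samples, *VALID_RANGE[name]) >= min_quality
    )

def model_for(kept):
    """Step iii: name of a model trained on exactly the kept modalities."""
    return "clf[" + "+".join(sorted(kept)) + "]" if kept else None

def control_step(window):
    """One closed-loop iteration: returns kept modalities, dropped ones, model."""
    kept = select_modalities(window)
    dropped = frozenset(window) - kept  # candidates for reduced sensing
    return kept, dropped, model_for(kept)

window = {
    "ecg": [0.1, -0.3, 0.5, 0.2],
    "eda": [5.0, 5.1, 5.2, 5.0],
    "ppg": [1.0, 9.0, 12.0, 0.5],  # half the samples are out of range
}
kept, dropped, model = control_step(window)
print(sorted(kept), sorted(dropped), model)
```

Keeping one model per modality subset means the classifier never sees placeholder or corrupted features, and the `dropped` set gives the controller a list of sensors whose sampling could be reduced to save energy.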
