Simin Zheng

Performance Evaluation of Large Language Models in Statistical Programming

Feb 18, 2025

Abstract: The programming capabilities of large language models (LLMs) have revolutionized automatic code generation and opened new avenues for automatic statistical analysis. However, the validity and quality of the generated code need to be systematically evaluated before it can be widely adopted. Despite the growing prominence of LLMs, comprehensive evaluations of the statistical code they generate remain scarce in the literature. In this paper, we assess the performance of LLMs, including two versions of ChatGPT and one version of Llama, in the domain of SAS programming for statistical analysis. Our study utilizes a set of statistical analysis tasks encompassing diverse statistical topics and datasets. Each task includes a problem description, dataset information, and human-verified SAS code. We conduct a comprehensive assessment of the quality of SAS code generated by LLMs through human expert evaluation based on correctness, effectiveness, readability, executability, and the accuracy of output results. The analysis of rating scores reveals that while LLMs demonstrate usefulness in generating syntactically correct code, they struggle with tasks requiring deep domain understanding and may produce redundant or incorrect results. This study offers valuable insights into the capabilities and limitations of LLMs in statistical programming, providing guidance for future advancements in AI-assisted coding systems for statistical analysis.

Bridging the Data Gap in AI Reliability Research and Establishing DR-AIR, a Comprehensive Data Repository for AI Reliability

Feb 17, 2025

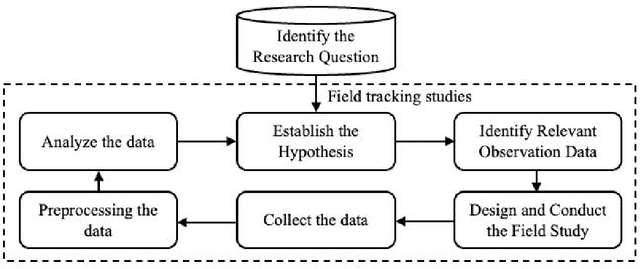

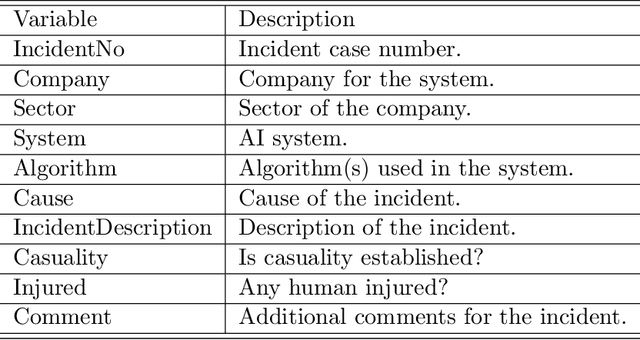

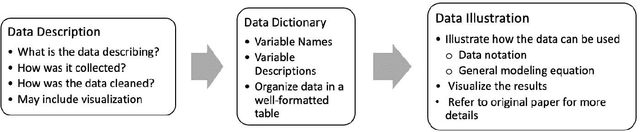

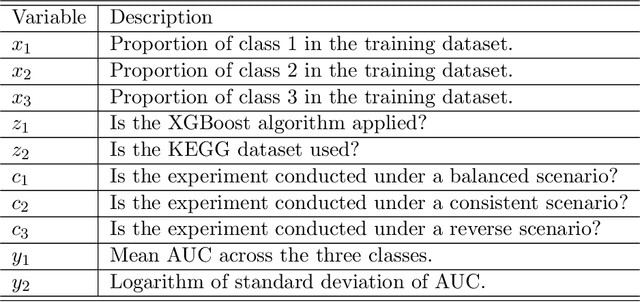

Abstract: Artificial intelligence (AI) technology and systems have been advancing rapidly. However, ensuring the reliability of these systems is crucial for fostering public confidence in their use. This necessitates the modeling and analysis of reliability data specific to AI systems. A major challenge in AI reliability research, particularly for those in academia, is the lack of readily available AI reliability data. To address this gap, this paper focuses on conducting a comprehensive review of available AI reliability data and establishing DR-AIR: a data repository for AI reliability. Specifically, we introduce key measurements and data types for assessing AI reliability, along with the methodologies used to collect these data. We also provide a detailed description of the currently available datasets with illustrative examples. Furthermore, we outline the setup of the DR-AIR repository and demonstrate its practical applications. This repository provides easy access to datasets specifically curated for AI reliability research. We believe these efforts will significantly benefit the AI research community by facilitating access to valuable reliability data and promoting collaboration across various academic domains within AI. We conclude our paper with a call to action, encouraging the research community to contribute and share AI reliability data to further advance this critical field of study.

Planning Reliability Assurance Tests for Autonomous Vehicles

Nov 30, 2023

Abstract: Artificial intelligence (AI) technology has become increasingly prevalent and is transforming everyday life. One important application of AI technology is the development of autonomous vehicles (AVs). However, the reliability of an AV needs to be carefully demonstrated via an assurance test so that the product can be used with confidence in the field. To plan an assurance test, one needs to determine how many AVs need to be tested, for how many miles, and the standard for passing the test. Existing research has made great efforts in developing reliability demonstration tests for product development and assessment in other application fields. However, statistical methods have not been utilized in AV test planning. This paper aims to fill this gap by developing statistical methods for planning AV reliability assurance tests based on recurrent events data. We explore the relationship between multiple criteria of interest in the context of planning AV reliability assurance tests. Specifically, we develop two test planning strategies based on homogeneous and non-homogeneous Poisson processes while balancing multiple objectives with the Pareto front approach. We also offer recommendations for practical use. Disengagement event data from the California Department of Motor Vehicles AV testing program are used to illustrate the proposed assurance test planning methods.
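To give a sense of the kind of calculation behind the question of how many miles need to be tested, here is a minimal sketch of a textbook reliability demonstration test under a homogeneous Poisson process. It is not the paper's method: it ignores the non-homogeneous case and the Pareto front trade-offs, and it assumes that mileage can be pooled across vehicles. The function names `poisson_cdf` and `required_miles`, and the rate and confidence values in the usage note, are hypothetical and chosen only for illustration.

```python
import math


def poisson_cdf(c, mean):
    """Return P(N <= c) for N ~ Poisson(mean)."""
    term = math.exp(-mean)  # P(N = 0)
    total = term
    for k in range(1, c + 1):
        term *= mean / k  # P(N = k) from P(N = k - 1)
        total += term
    return total


def required_miles(target_rate, max_events, confidence):
    """Smallest pooled test mileage T such that passing the test
    (observing at most max_events events) demonstrates an event rate
    no larger than target_rate (events per mile) at the given confidence.

    Assumption: events follow a homogeneous Poisson process, so the
    event count over T miles is Poisson(target_rate * T). T solves
    P(N <= max_events) = 1 - confidence, found here by bisection.
    """
    alpha = 1.0 - confidence
    lo, hi = 0.0, 1.0 / target_rate
    # Grow the upper bracket until the pass probability drops below alpha.
    while poisson_cdf(max_events, target_rate * hi) > alpha:
        hi *= 2.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if poisson_cdf(max_events, target_rate * mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi
```

With zero allowed events the result reduces to the closed form `-ln(1 - confidence) / target_rate`, so `required_miles(1e-4, 0, 0.9)` is about 23,026 miles. Allowing one event raises the required mileage, which illustrates the trade-off between test length and the passing standard that the abstract alludes to.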
