Sigang Yang

Sophisticated deep learning with on-chip optical diffractive tensor processing

Dec 20, 2022

Abstract: Ever-growing deep learning technologies are making revolutionary changes to modern life. However, conventional computing architectures are designed to process sequential and digital programs, and they are heavily burdened when performing massively parallel and adaptive deep learning applications. Photonic integrated circuits provide an efficient approach to mitigating the bandwidth limitations and power wall of their electronic counterparts, showing great potential in ultrafast and energy-free high-performance computing. Here, we propose an optical computing architecture enabled by on-chip diffraction to implement convolutional acceleration, termed the optical convolution unit (OCU). We demonstrate that any real-valued convolution kernel can be exploited by the OCU, with a prominent boost in computational throughput, via the concept of structural re-parameterization. With the OCU as the fundamental unit, we build an optical convolutional neural network (oCNN) to implement two popular deep learning tasks: classification and regression. For classification, the Fashion-MNIST and CIFAR-4 datasets are tested with accuracies of 91.63% and 86.25%, respectively. For regression, we build an optical denoising convolutional neural network (oDnCNN) to handle Gaussian noise in grayscale images with noise levels σ = 10, 15, and 20, resulting in clean images with average PSNRs of 31.70 dB, 29.39 dB, and 27.72 dB, respectively. The proposed OCU offers low energy consumption and high information density owing to its fully passive nature and compact footprint, providing a highly parallel yet lightweight solution for future computing architectures that handle high-dimensional tensors in deep learning.
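For context on the denoising figures, the sketch below computes PSNR in the usual way, 10·log10(peak² / MSE). It assumes 8-bit images (peak value 255) and that the noise levels σ are given on that same 0 to 255 scale; neither is stated in the abstract. The function names `psnr` and `expected_noisy_psnr` are illustrative and are not taken from the paper.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """PSNR in dB between two images given as flat lists of pixel values."""
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def expected_noisy_psnr(sigma, peak=255.0):
    """PSNR of an image corrupted by zero-mean Gaussian noise of std sigma.

    Assumes the mean squared error equals sigma**2, i.e. clipping to the
    valid pixel range is ignored.
    """
    return 20.0 * math.log10(peak / sigma)


if __name__ == "__main__":
    for sigma in (10, 15, 20):
        print(sigma, round(expected_noisy_psnr(sigma), 2))
```

Under these assumptions the noisy inputs sit at roughly 28.13 dB, 24.61 dB, and 22.11 dB for σ = 10, 15, and 20, which gives a baseline against which the reported 31.70 dB, 29.39 dB, and 27.72 dB can be read.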

Key frames assisted hybrid encoding for photorealistic compressive video sensing

Jul 26, 2022

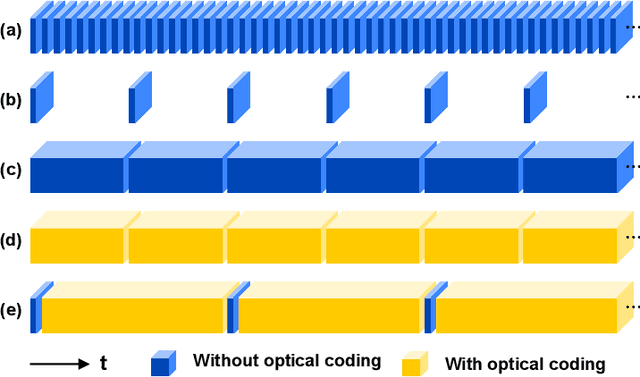

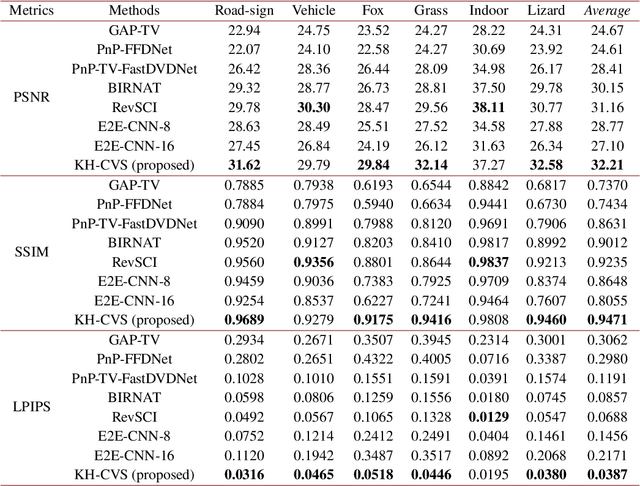

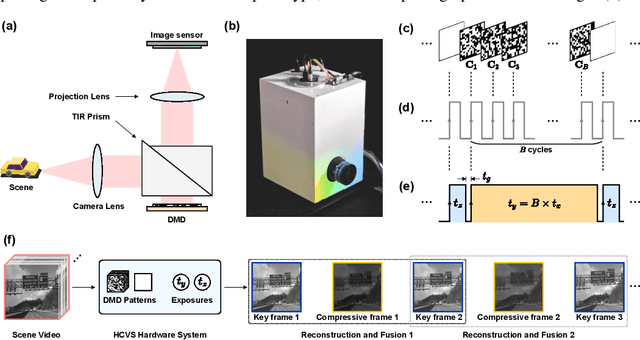

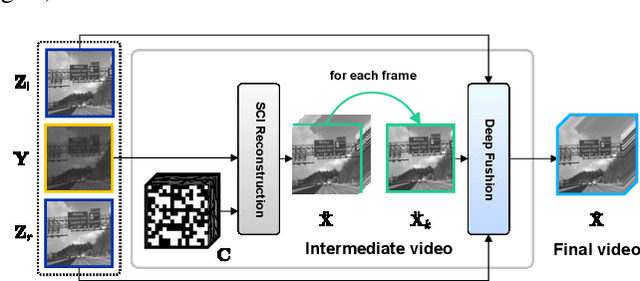

Abstract: Snapshot compressive imaging (SCI) encodes high-speed scene video into a snapshot measurement and then computationally reconstructs it, allowing for efficient high-dimensional data acquisition. Numerous algorithms, ranging from regularization-based optimization to deep learning, are being investigated to improve reconstruction quality, but they are still limited by the ill-posed and information-deficient nature of the standard SCI paradigm. To overcome these drawbacks, we propose a new key-frame-assisted hybrid encoding paradigm for compressive video sensing, termed KH-CVS, that alternately captures short-exposure key frames without coding and long-exposure encoded compressive frames to jointly reconstruct photorealistic video. Using optical flow and spatial warping, a deep convolutional neural network framework is constructed to integrate the benefits of these two types of frames. Extensive experiments on both simulations and real data from the prototype we developed verify the superiority of the proposed method.
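The acquisition side of this idea can be sketched with the standard SCI forward model, in which a snapshot is the pixel-wise sum of mask-modulated frames: Y = Σ_t C_t ⊙ X_t. The code below is a minimal illustration under assumptions: the schedule in `hybrid_capture` (one uncoded key frame followed by one coded snapshot per group) and both function names are hypothetical, and the abstract does not specify the actual frame ordering, exposure ratio, or masks of KH-CVS.

```python
def sci_measurement(frames, masks):
    """Standard SCI forward model: Y[i][j] = sum_t masks[t][i][j] * frames[t][i][j]."""
    if len(frames) != len(masks):
        raise ValueError("need exactly one mask per frame")
    height, width = len(frames[0]), len(frames[0][0])
    snapshot = [[0.0] * width for _ in range(height)]
    for frame, mask in zip(frames, masks):
        for i in range(height):
            for j in range(width):
                snapshot[i][j] += mask[i][j] * frame[i][j]
    return snapshot


def hybrid_capture(video, masks):
    """Hypothetical schedule (an assumption, not the paper's): each group is
    one uncoded key frame followed by len(masks) frames folded into a
    single coded snapshot."""
    group = len(masks) + 1
    captures = []
    for start in range(0, len(video) - group + 1, group):
        key_frame = video[start]
        coded = sci_measurement(video[start + 1:start + group], masks)
        captures.append((key_frame, coded))
    return captures


# Toy data: 2x2 frames and complementary binary masks.
frames = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
masks = [[[1, 0], [0, 1]], [[0, 1], [1, 0]]]
video = [[[9, 9], [9, 9]]] + frames

if __name__ == "__main__":
    print(sci_measurement(frames, masks))
    print(hybrid_capture(video, masks))
```

The reconstruction network, optical flow, and warping steps are beyond what this sketch covers; it only shows why a coded snapshot alone is information-deficient (several frames collapse into one array) and what an uncoded key frame adds.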

Principle-driven Fiber Transmission Model based on PINN Neural Network

Aug 24, 2021

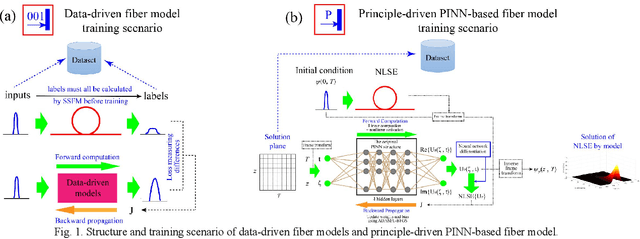

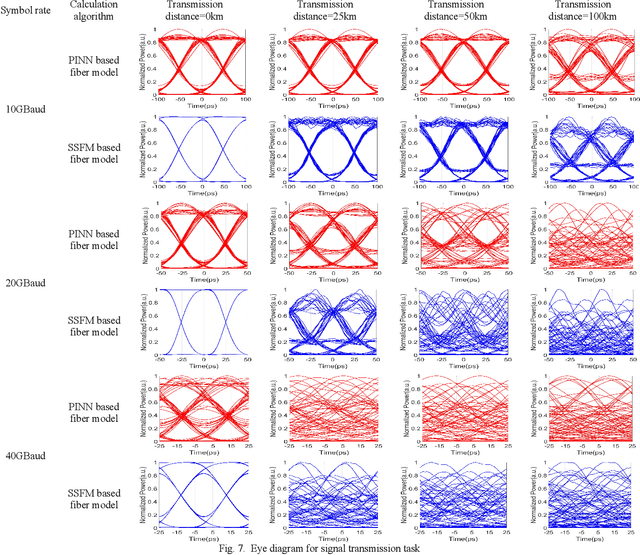

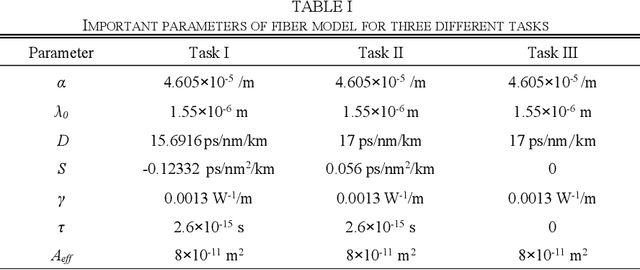

Abstract: In this paper, a novel principle-driven fiber transmission model based on a physical induced neural network (PINN) is proposed. Unlike data-driven models, which treat the fiber transmission problem as a data regression task, this model views it as an equation-solving problem. Instead of using input and output signals pre-calculated by the split-step Fourier method (SSFM) before training, the principle-driven PINN-based fiber model takes frames of time and distance as its inputs and the corresponding real and imaginary parts of the solutions to the nonlinear Schrödinger equation (NLSE) as its outputs. By incorporating the pulses and signals before transmission as initial conditions, and the physical principles of the fiber, expressed as the NLSE, into the design of the loss functions, the model progressively learns the transmission rules. It can therefore be trained effectively without data labels, which in data-driven models are the pre-calculated signals after transmission. Because of this advantage, the SSFM is no longer needed before training the principle-driven fiber model, which saves considerable time. Numerical results show that the principle-driven PINN-based fiber model can handle the prediction of pulse evolution, signal transmission, and fiber birefringence for different transmission parameters in fiber telecommunications.
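To make the idea of an equation-based loss concrete, the sketch below evaluates the residual of the NLSE in its common textbook form, ∂A/∂z + (α/2)A + i(β₂/2)∂²A/∂t² − iγ|A|²A = 0, at sampled (z, t) points. Several things here are assumptions rather than the paper's method: the sign convention, the use of central finite differences in place of the automatic differentiation a PINN would use, the normalized parameter values, and all function names. A fundamental soliton, which solves the lossless equation exactly, stands in for a trained network.

```python
import cmath
import math


def soliton(z, t, p0=1.0, t0=1.0, gamma=1.0):
    """Fundamental soliton; exact solution for alpha=0, beta2=-gamma*p0*t0**2."""
    return math.sqrt(p0) / math.cosh(t / t0) * cmath.exp(0.5j * gamma * p0 * z)


def nlse_residual(field, z, t, alpha, beta2, gamma, h=1e-3):
    """NLSE residual at (z, t), with derivatives from central finite differences."""
    a = field(z, t)
    a_z = (field(z + h, t) - field(z - h, t)) / (2 * h)
    a_tt = (field(z, t + h) - 2 * a + field(z, t - h)) / (h * h)
    return a_z + 0.5 * alpha * a + 0.5j * beta2 * a_tt - 1j * gamma * abs(a) ** 2 * a


def pde_loss(field, points, alpha, beta2, gamma):
    """Mean squared residual over collocation points (real and imaginary parts)."""
    total = 0.0
    for z, t in points:
        r = nlse_residual(field, z, t, alpha, beta2, gamma)
        total += r.real ** 2 + r.imag ** 2
    return total / len(points)


# Collocation points on a small (distance, time) grid, in normalized units.
points = [(0.1 * i, -2.0 + 0.5 * j) for i in range(5) for j in range(9)]

if __name__ == "__main__":
    print(pde_loss(soliton, points, alpha=0.0, beta2=-1.0, gamma=1.0))  # near zero
    print(pde_loss(soliton, points, alpha=0.0, beta2=-1.0, gamma=2.0))  # clearly nonzero
```

In a PINN, `field` would be the network output and this term would be minimized together with an initial-condition term at z = 0, so no post-transmission labels are needed. Handling the real and imaginary parts as separate outputs, as the abstract describes, is equivalent to the complex arithmetic used here.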

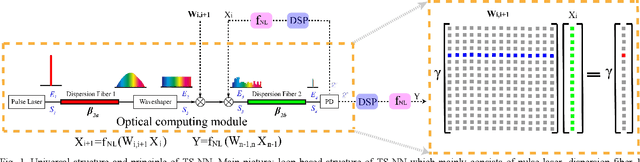

Electro-optical Neural Networks based on Time-stretch Method

Sep 13, 2019

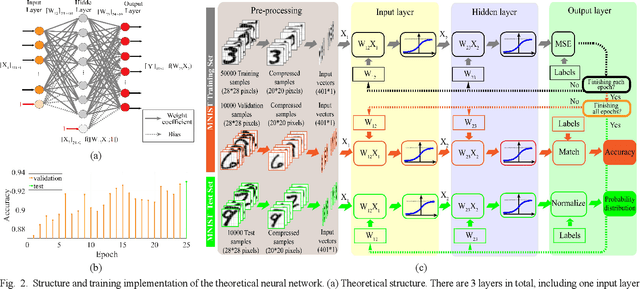

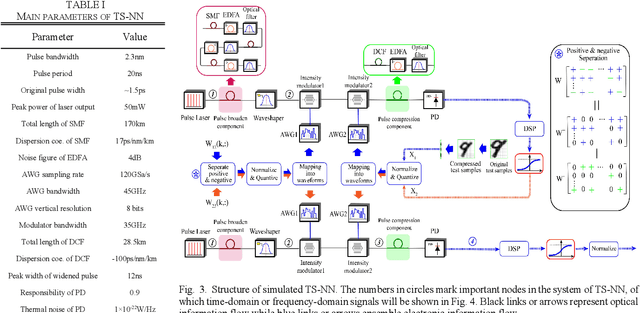

Abstract: In this paper, a novel architecture for electro-optical neural networks based on the time-stretch method is proposed and numerically simulated. By stretching ultrashort pulses in the time domain, multiplications of large-scale weight matrices and vectors can be implemented on light, and multiple layers of feedforward neural network operations can be easily implemented with fiber loops. In simulation, the performance of a three-layer electro-optical neural network is tested on a handwritten digit recognition task, and the accuracy reaches 88% under considerable noise.

High-speed real-time single-pixel microscopy based on Fourier sampling

Jun 15, 2016

Abstract: Single-pixel cameras based on the concepts of compressed sensing (CS) leverage the inherent structure of images to retrieve them with far fewer measurements and operate efficiently over a significantly broader spectral range than conventional silicon-based cameras. Recently, the photonic time-stretch (PTS) technique has facilitated the emergence of high-speed single-pixel cameras. A significant breakthrough in the imaging speed of single-pixel cameras enables observation of fast dynamic phenomena. However, according to CS theory, image reconstruction is an iterative process that consumes enormous amounts of computational time and cannot be performed in real time. To address this challenge, we propose a novel single-pixel imaging technique that can produce high-quality images through rapid acquisition of their effective spatial Fourier spectrum. We employ phase-shifting sinusoidal structured illumination instead of random illumination for spectrum acquisition and apply an inverse Fourier transform to the obtained spectrum for image restoration. We evaluate the performance of our prototype system by recognizing quick response (QR) codes and by flow cytometric screening of cells. A frame rate of 625 kHz and a compression ratio of 10% are experimentally demonstrated in accordance with the recognition rate of the QR code. An imaging flow cytometer enabling high-content screening with an unprecedented throughput of 100,000 cells/s is also demonstrated. For real-time imaging applications, the proposed single-pixel microscope can reduce the time required for image reconstruction by two orders of magnitude, and it can be widely applied in industrial quality control and label-free biomedical imaging.
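The acquisition principle described here can be illustrated with a small simulation. The sketch assumes the common four-step phase-shifting variant (phases 0, π/2, π, 3π/2), in which each Fourier coefficient follows from four single-pixel readings as F(u, v) ∝ (D₀ − D_π) + i(D_{π/2} − D_{3π/2}). The abstract does not state how many phase steps the authors use, so the step count, the pattern offsets `a` and `b`, and every function name are assumptions.

```python
import cmath
import math


def pattern(h, w, u, v, phase, a=0.5, b=0.5):
    """Sinusoidal illumination pattern a + b*cos(2*pi*(u*x/w + v*y/h) + phase)."""
    return [[a + b * math.cos(2 * math.pi * (u * x / w + v * y / h) + phase)
             for x in range(w)] for y in range(h)]


def single_pixel(image, pat):
    """Bucket-detector reading: total light after pattern modulation."""
    return sum(i * p for row_i, row_p in zip(image, pat) for i, p in zip(row_i, row_p))


def acquire_spectrum(image, b=0.5):
    """Four-step phase shifting: one complex Fourier coefficient per (u, v)."""
    h, w = len(image), len(image[0])
    spectrum = [[0j] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            d = [single_pixel(image, pattern(h, w, u, v, k * math.pi / 2, b=b))
                 for k in range(4)]
            spectrum[v][u] = ((d[0] - d[2]) + 1j * (d[1] - d[3])) / (2 * b)
    return spectrum


def inverse_dft2(spectrum):
    """Direct (non-iterative) reconstruction by inverse 2D DFT."""
    h, w = len(spectrum), len(spectrum[0])
    image = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0j
            for v in range(h):
                for u in range(w):
                    acc += spectrum[v][u] * cmath.exp(2j * math.pi * (u * x / w + v * y / h))
            image[y][x] = acc.real / (h * w)
    return image


scene = [[0, 1, 2, 3], [4, 5, 6, 7], [7, 5, 3, 1], [2, 0, 2, 0]]
recovered = inverse_dft2(acquire_spectrum(scene))

if __name__ == "__main__":
    for row in recovered:
        print([round(value, 6) for value in row])
```

This toy version samples the full spectrum, so the scene is recovered up to floating-point error. Compression of the kind the abstract reports would correspond to measuring only a subset of the (u, v) coefficients, for example the low frequencies, and setting the rest to zero before the inverse transform.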
