Sichao Li

FaithAct: Faithfulness Planning and Acting in MLLMs

Nov 11, 2025

Abstract:Unfaithfulness remains a persistent challenge for large language models (LLMs), which often produce plausible yet ungrounded reasoning chains that diverge from perceptual evidence or final conclusions. We distinguish between behavioral faithfulness (alignment between reasoning and output) and perceptual faithfulness (alignment between reasoning and input), and introduce FaithEval for quantifying step-level and chain-level faithfulness by evaluating whether each claimed object is visually supported by the image. Building on these insights, we propose FaithAct, a faithfulness-first planning and acting framework that enforces evidential grounding at every reasoning step. Experiments across multiple reasoning benchmarks demonstrate that FaithAct improves perceptual faithfulness by up to 26% without degrading task accuracy compared to prompt-based and tool-augmented baselines. Our analysis shows that treating faithfulness as a guiding principle not only mitigates hallucination but also leads to more stable reasoning trajectories. This work thereby establishes a unified framework for both evaluating and enforcing faithfulness in multimodal reasoning.

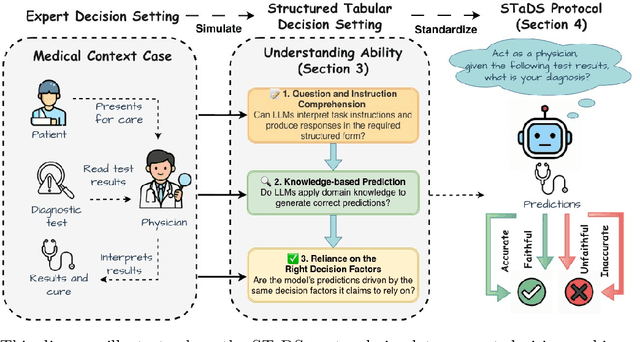

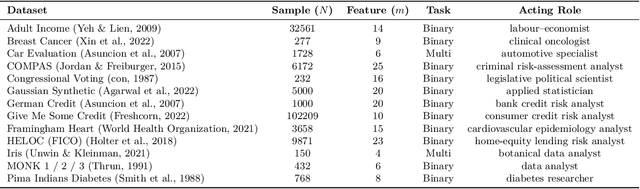

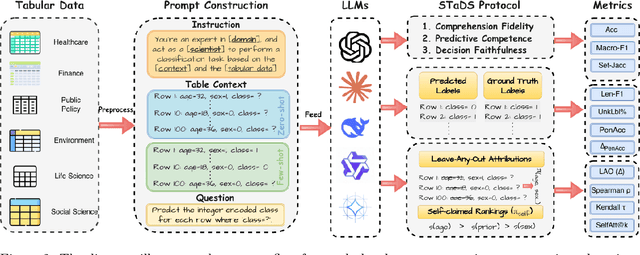

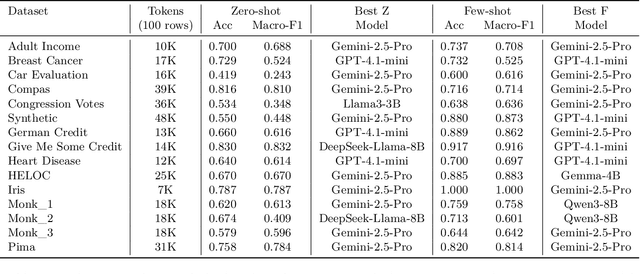

Evaluating LLM Understanding via Structured Tabular Decision Simulations

Nov 07, 2025

Abstract:Large language models (LLMs) often achieve impressive predictive accuracy, yet correctness alone does not imply genuine understanding. True LLM understanding, analogous to human expertise, requires making consistent, well-founded decisions across multiple instances and diverse domains, relying on relevant and domain-grounded decision factors. We introduce Structured Tabular Decision Simulations (STaDS), a suite of expert-like decision settings that evaluate LLMs as if they were professionals undertaking structured decision "exams". In this context, understanding is defined as the ability to identify and rely on the correct decision factors, features that determine outcomes within a domain. STaDS jointly assesses understanding through: (i) question and instruction comprehension, (ii) knowledge-based prediction, and (iii) reliance on relevant decision factors. By analyzing 9 frontier LLMs across 15 diverse decision settings, we find that (a) most models struggle to achieve consistently strong accuracy across diverse domains; (b) models can be accurate yet globally unfaithful, and there are frequent mismatches between stated rationales and factors driving predictions. Our findings highlight the need for global-level understanding evaluation protocols and advocate for novel frameworks that go beyond accuracy to enhance LLMs' understanding ability.

EXAGREE: Towards Explanation Agreement in Explainable Machine Learning

Nov 04, 2024

Abstract:Explanations in machine learning are critical for trust, transparency, and fairness. Yet, complex disagreements among these explanations limit the reliability and applicability of machine learning models, especially in high-stakes environments. We formalize four fundamental ranking-based explanation disagreement problems and introduce a novel framework, EXplanation AGREEment (EXAGREE), to bridge diverse interpretations in explainable machine learning, particularly from stakeholder-centered perspectives. Our approach leverages a Rashomon set for attribution predictions and then optimizes within this set to identify Stakeholder-Aligned Explanation Models (SAEMs) that minimize disagreement with diverse stakeholder needs while maintaining predictive performance. Rigorous empirical analysis on synthetic and real-world datasets demonstrates that EXAGREE reduces explanation disagreement and improves fairness across subgroups in various domains. EXAGREE not only provides researchers with a new direction for studying explanation disagreement problems but also offers data scientists a tool for making better-informed decisions in practical applications.

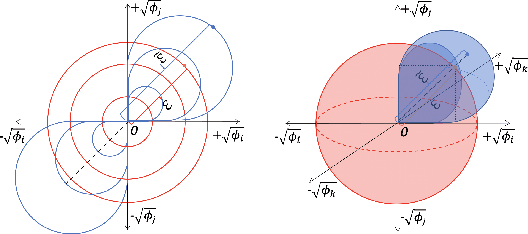

Practical Attribution Guidance for Rashomon Sets

Jul 26, 2024

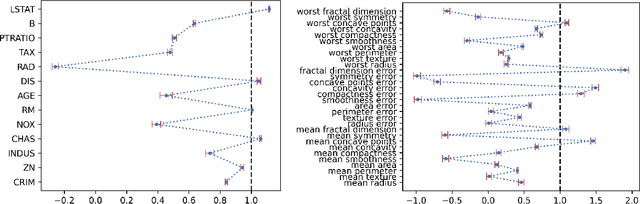

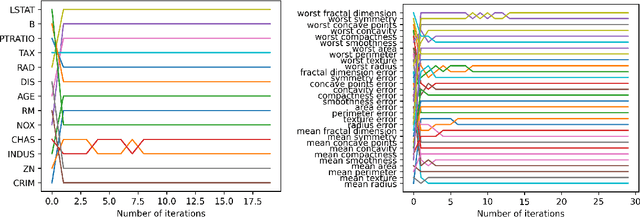

Abstract:Different prediction models might perform equally well (Rashomon set) in the same task, but offer conflicting interpretations and conclusions about the data. The Rashomon effect in the context of Explainable AI (XAI) has been recognized as a critical factor. Although the Rashomon set has been introduced and studied in various contexts, its practical application is in its infancy and lacks adequate guidance and evaluation. We study the problem of Rashomon set sampling from a practical viewpoint and identify two fundamental axioms, generalizability and implementation sparsity, that exploration methods ought to satisfy in practical usage. These two axioms are not satisfied by most known attribution methods, which we consider to be a fundamental weakness. We use the norms to guide the design of an $\epsilon$-subgradient-based sampling method. We apply this method to a fundamental mathematical problem as a proof of concept and to a set of practical datasets to demonstrate its ability compared with existing sampling methods.

Automated architectural space layout planning using a physics-inspired generative design framework

Jun 21, 2024

Abstract:The determination of space layout is one of the primary activities in the schematic design stage of an architectural project. The initial layout planning defines the shape, dimension, and circulation pattern of internal spaces, which can also affect the performance and cost of the construction. When carried out manually, space layout planning can be complicated, repetitive and time-consuming. In this work, a generative design framework for the automatic generation of spatial architectural layout has been developed. The proposed approach integrates a novel physics-inspired parametric model for space layout planning and an evolutionary optimisation metaheuristic. Results revealed that such a generative design framework can generate a wide variety of design suggestions at the schematic design stage, applicable to complex design problems.

Diverse Explanations from Data-driven and Domain-driven Perspectives for Machine Learning Models

Feb 01, 2024

Abstract:Explanations of machine learning models are important, especially in scientific areas such as chemistry, biology, and physics, where they guide future laboratory experiments and resource requirements. These explanations can be derived from well-trained machine learning models (data-driven perspective) or specific domain knowledge (domain-driven perspective). However, there exist inconsistencies between these perspectives due to accurate yet misleading machine learning models and various stakeholders with specific needs, wants, or aims. This paper calls attention to these inconsistencies and suggests a way to find an accurate model with expected explanations that reinforce physical laws and meet stakeholders' requirements from a set of equally-good models, also known as Rashomon sets. Our goal is to foster a comprehensive understanding of these inconsistencies and ultimately contribute to the integration of eXplainable Artificial Intelligence (XAI) into scientific domains.

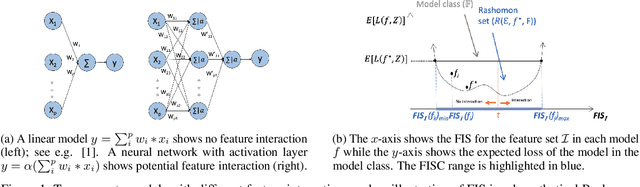

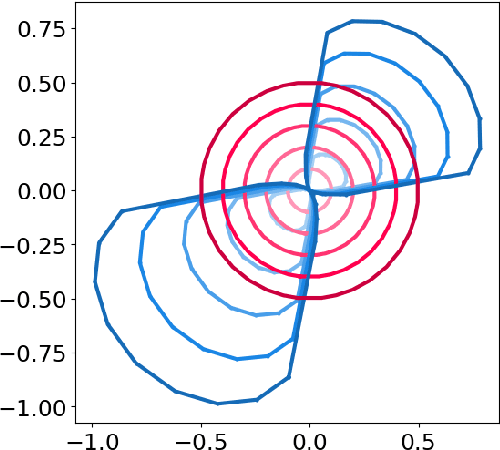

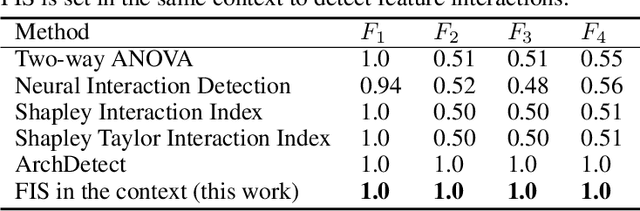

Exploring the cloud of feature interaction scores in a Rashomon set

May 17, 2023

Abstract:Interactions among features are central to understanding the behavior of machine learning models. Recent research has made significant strides in detecting and quantifying feature interactions in single predictive models. However, we argue that the feature interactions extracted from a single pre-specified model may not be trustworthy since: a well-trained predictive model may not preserve the true feature interactions and there exist multiple well-performing predictive models that differ in feature interaction strengths. Thus, we recommend exploring feature interaction strengths in a model class of approximately equally accurate predictive models. In this work, we introduce the feature interaction score (FIS) in the context of a Rashomon set, representing a collection of models that achieve similar accuracy on a given task. We propose a general and practical algorithm to calculate the FIS in the model class. We demonstrate the properties of the FIS via synthetic data and draw connections to other areas of statistics. Additionally, we introduce a Halo plot for visualizing the feature interaction variance in high-dimensional space and a swarm plot for analyzing FIS in a Rashomon set. Experiments with recidivism prediction and image classification illustrate how feature interactions can vary dramatically in importance for similarly accurate predictive models. Our results suggest that the proposed FIS can provide valuable insights into the nature of feature interactions in machine learning models.

Simultaneous prediction of hand gestures, handedness, and hand keypoints using thermal images

Mar 02, 2023

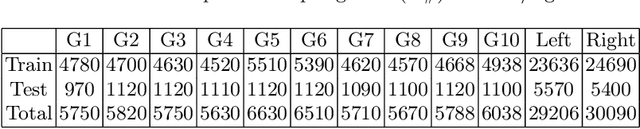

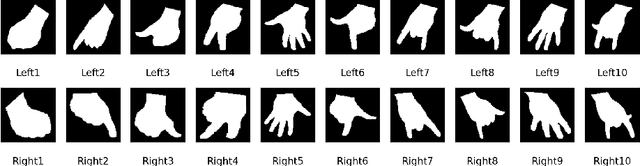

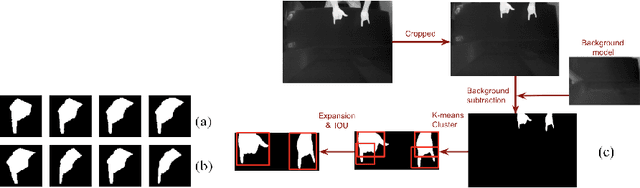

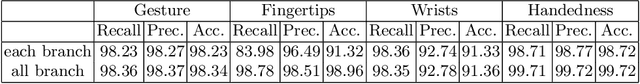

Abstract:Hand gesture detection is a well-explored area in computer vision with applications in various forms of Human-Computer Interactions. In this work, we propose a technique for simultaneous hand gesture classification, handedness detection, and hand keypoints localization using thermal data captured by an infrared camera. Our method uses a novel deep multi-task learning architecture that includes shared encoder-decoder layers followed by three branches, one dedicated to each of these tasks. We performed extensive experimental validation of our model on an in-house dataset consisting of data from 24 users. The results confirm higher than 98 percent accuracy for gesture classification, handedness detection, and fingertips localization, and more than 91 percent accuracy for wrist points localization.

Variance Tolerance Factors For Interpreting Neural Networks

Sep 28, 2022

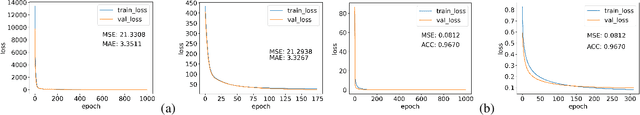

Abstract:Black box models only provide results for deep learning tasks and lack informative details about how these results were obtained. In this paper, we propose a general theory that defines a variance tolerance factor (VTF) to interpret the neural networks by ranking the importance of features and constructing a novel architecture consisting of a base model and feature model to demonstrate its utility. Two feature importance ranking methods and a feature selection method based on the VTF are created. A thorough evaluation on synthetic, benchmark, and real datasets is provided.
