Amanda Barnard

Diverse Explanations from Data-driven and Domain-driven Perspectives for Machine Learning Models

Feb 01, 2024

Abstract: Explanations of machine learning models are important, especially in scientific areas such as chemistry, biology, and physics, where they guide future laboratory experiments and resource requirements. These explanations can be derived from well-trained machine learning models (data-driven perspective) or specific domain knowledge (domain-driven perspective). However, there exist inconsistencies between these perspectives due to accurate yet misleading machine learning models and various stakeholders with specific needs, wants, or aims. This paper calls attention to these inconsistencies and suggests a way to find an accurate model with expected explanations that reinforce physical laws and meet stakeholders' requirements from a set of equally-good models, also known as Rashomon sets. Our goal is to foster a comprehensive understanding of these inconsistencies and ultimately contribute to the integration of eXplainable Artificial Intelligence (XAI) into scientific domains.

Shapley Based Residual Decomposition for Instance Analysis

May 30, 2023

Abstract: In this paper, we introduce the idea of decomposing the residuals of regression with respect to the data instances instead of features. This allows us to determine the effects of each individual instance on the model and on each other, which yields a model-agnostic method of identifying instances of interest. It also lets us determine the appropriateness of the model and data in the wider context of a given study. The paper focuses on the possible applications that such a framework brings to the relatively unexplored field of instance analysis in the context of Explainable AI tasks.

Exploring the cloud of feature interaction scores in a Rashomon set

May 17, 2023

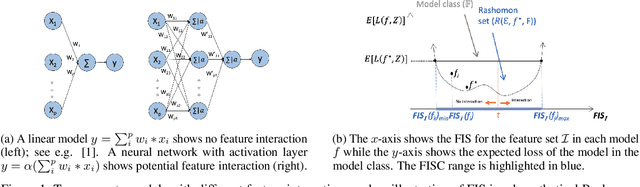

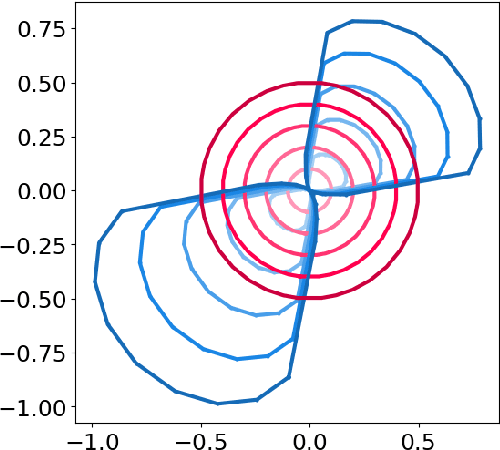

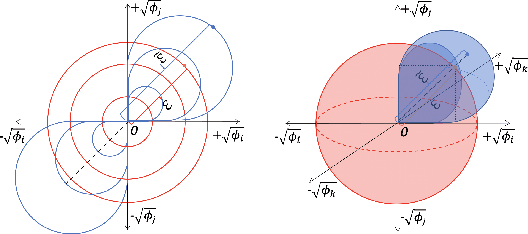

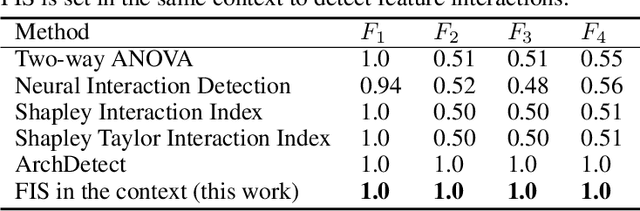

Abstract: Interactions among features are central to understanding the behavior of machine learning models. Recent research has made significant strides in detecting and quantifying feature interactions in single predictive models. However, we argue that the feature interactions extracted from a single pre-specified model may not be trustworthy for two reasons: a well-trained predictive model may not preserve the true feature interactions, and there exist multiple well-performing predictive models that differ in feature interaction strengths. Thus, we recommend exploring feature interaction strengths in a model class of approximately equally accurate predictive models. In this work, we introduce the feature interaction score (FIS) in the context of a Rashomon set, representing a collection of models that achieve similar accuracy on a given task. We propose a general and practical algorithm to calculate the FIS in the model class. We demonstrate the properties of the FIS via synthetic data and draw connections to other areas of statistics. Additionally, we introduce a Halo plot for visualizing the feature interaction variance in high-dimensional space and a swarm plot for analyzing FIS in a Rashomon set. Experiments with recidivism prediction and image classification illustrate how feature interactions can vary dramatically in importance for similarly accurate predictive models. Our results suggest that the proposed FIS can provide valuable insights into the nature of feature interactions in machine learning models.
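As a rough illustration of the Rashomon-set idea described in this abstract, the sketch below keeps every candidate model whose loss lies within a tolerance of the best loss and then reports how widely an interaction score ranges across that set. It is not the paper's FIS algorithm: the function name `rashomon_set`, the multiplicative tolerance `(1 + epsilon)`, and all loss and score values are hypothetical and chosen only for illustration.

```python
import numpy as np

def rashomon_set(losses, epsilon):
    """Return indices of models whose loss is at most (1 + epsilon) times the best loss.

    Assumption: a multiplicative tolerance is used here; an additive
    tolerance (best loss + epsilon) is an equally common convention.
    """
    losses = np.asarray(losses, dtype=float)
    threshold = (1.0 + epsilon) * losses.min()
    return [i for i, loss in enumerate(losses) if loss <= threshold]

# Hypothetical validation losses for five candidate models.
losses = [0.200, 0.204, 0.250, 0.209, 0.300]

# Hypothetical interaction scores for one feature pair, one value per model.
interaction_scores = np.array([0.10, 0.45, 0.02, 0.30, 0.60])

members = rashomon_set(losses, epsilon=0.05)
scores_in_set = interaction_scores[members]

print("models in the set:", members)
print("interaction score range:", scores_in_set.min(), "to", scores_in_set.max())
```

With these made-up numbers, three models are nearly indistinguishable by loss, yet their interaction scores for the same feature pair differ several-fold, which is the kind of variation the abstract argues a single model would hide.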

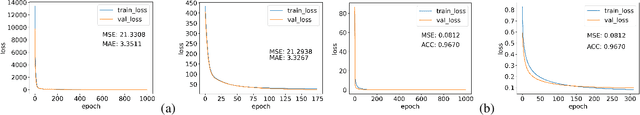

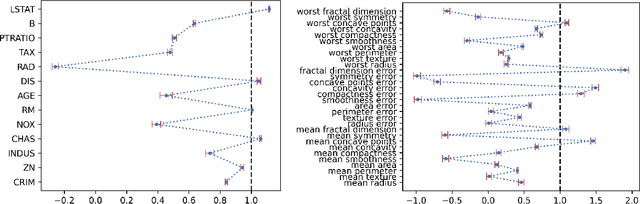

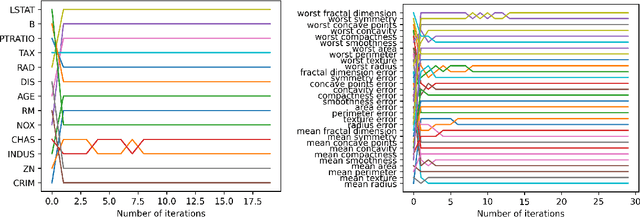

Variance Tolerance Factors For Interpreting Neural Networks

Sep 28, 2022

Abstract: Black box models only provide results for deep learning tasks and lack informative details about how these results were obtained. In this paper, we propose a general theory that defines a variance tolerance factor (VTF) to interpret neural networks by ranking the importance of features, and we construct a novel architecture consisting of a base model and a feature model to demonstrate its utility. Two feature importance ranking methods and a feature selection method based on the VTF are created. A thorough evaluation on synthetic, benchmark, and real datasets is provided.
