Simultaneous prediction of hand gestures, handedness, and hand keypoints using thermal images


Mar 02, 2023

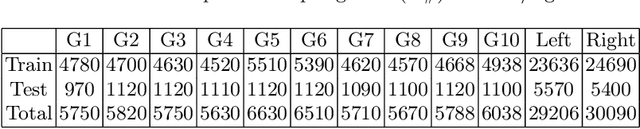

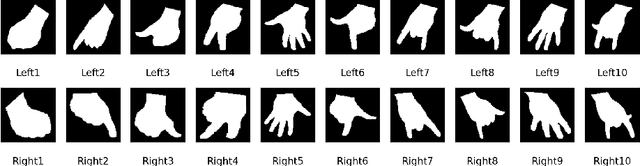

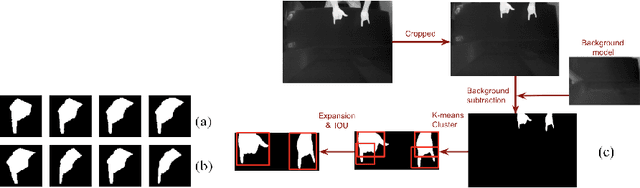

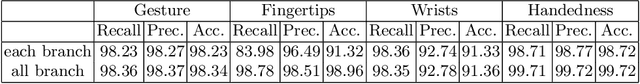

Hand gesture detection is a well-explored area of computer vision with applications in many forms of human-computer interaction. In this work, we propose a technique for simultaneous hand gesture classification, handedness detection, and hand keypoint localization using thermal data captured by an infrared camera. Our method uses a novel deep multi-task learning architecture consisting of shared encoder-decoder layers followed by three branches, one dedicated to each of these tasks. We performed extensive experimental validation of our model on an in-house dataset containing data from 24 users. The results show accuracy above 98 percent for gesture classification, handedness detection, and fingertip localization, and above 91 percent for wrist point localization.
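To make the "shared layers followed by three branches" idea concrete, here is a minimal sketch in plain Python. It is not the authors' network: the layer types, the sizes (`NUM_GESTURES`, `NUM_KEYPOINTS`, `FEATURE_DIM`), the flattened 8x8 input, and the class name `MultiTaskHandModel` are all hypothetical, and the weights are random rather than trained. It only illustrates how one shared representation can feed a gesture head, a handedness head, and a keypoint head in a single forward pass.

```python
import math
import random

# All sizes below are illustrative assumptions, not values from the paper.
NUM_GESTURES = 10   # hypothetical number of gesture classes
NUM_KEYPOINTS = 7   # hypothetical, e.g. 5 fingertips + 2 wrist points
FEATURE_DIM = 16    # hypothetical width of the shared representation


def make_linear(n_in, n_out, rng):
    """Return a randomly initialised (weights, bias) pair for a dense layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def linear(layer, x):
    """Apply a dense layer: one dot product plus bias per output unit."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    """Numerically stable softmax over a list of scores."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


class MultiTaskHandModel:
    """Toy stand-in: a shared trunk feeding three task-specific heads."""

    def __init__(self, input_dim, seed=0):
        rng = random.Random(seed)
        # Shared trunk: two dense layers standing in for the shared
        # encoder-decoder layers (the real ones are presumably convolutional).
        self.encoder = make_linear(input_dim, FEATURE_DIM, rng)
        self.decoder = make_linear(FEATURE_DIM, FEATURE_DIM, rng)
        # One branch per task, all reading the same shared features.
        self.gesture_head = make_linear(FEATURE_DIM, NUM_GESTURES, rng)
        self.handedness_head = make_linear(FEATURE_DIM, 2, rng)
        self.keypoint_head = make_linear(FEATURE_DIM, 2 * NUM_KEYPOINTS, rng)

    def forward(self, pixels):
        # The shared features are computed once and reused by every head.
        shared = relu(linear(self.decoder, relu(linear(self.encoder, pixels))))
        gesture_probs = softmax(linear(self.gesture_head, shared))
        handedness_probs = softmax(linear(self.handedness_head, shared))
        flat = linear(self.keypoint_head, shared)
        # Regroup the flat regression output into (x, y) pairs.
        keypoints = [(flat[2 * i], flat[2 * i + 1]) for i in range(NUM_KEYPOINTS)]
        return gesture_probs, handedness_probs, keypoints


# Usage: a random flattened 8x8 array stands in for a thermal frame.
frame_rng = random.Random(1)
image = [frame_rng.random() for _ in range(8 * 8)]
model = MultiTaskHandModel(input_dim=len(image))
gesture_probs, handedness_probs, keypoints = model.forward(image)
```

The point of the design is that the expensive shared computation runs once per frame, while each head stays small and produces output shaped for its own task: class probabilities for gestures, a two-way probability for handedness, and coordinate pairs for keypoints.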
