Sibo Zhu

Kernel-Based Enhanced Oversampling Method for Imbalanced Classification

Apr 12, 2025

Abstract: This paper introduces a novel oversampling technique designed to improve classification performance on imbalanced datasets. The proposed method enhances the traditional SMOTE algorithm by incorporating convex combination and kernel-based weighting to generate synthetic samples that better represent the minority class. Through experiments on multiple real-world datasets, we demonstrate that the new technique outperforms existing methods in terms of F1-score, G-mean, and AUC, providing a robust solution for handling imbalanced datasets in classification tasks.
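The abstract does not spell out the sampling rule, so the following is only a minimal sketch of what "convex combination with kernel-based weighting" could look like. The Gaussian kernel, the Euclidean distance, the random perturbation of the weights, and the names `kernel_convex_oversample`, `k`, and `bandwidth` are all assumptions made for illustration; they are not taken from the paper.

```python
import math
import random


def kernel_convex_oversample(minority, n_new, k=3, bandwidth=1.0, seed=0):
    """Create n_new synthetic minority points (hypothetical sketch).

    Each synthetic point is a convex combination of a randomly chosen
    seed point and its k nearest minority neighbours. The combination
    weights come from a Gaussian kernel on the distance to the seed
    (an assumption, not the paper's stated kernel).
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The seed is at distance 0 from itself, so it is always among
        # the k + 1 closest points selected here.
        neighbours = sorted(minority, key=lambda p: math.dist(base, p))[:k + 1]
        # Kernel weight times a random factor in (0, 1], so repeated
        # draws from the same seed give different points.
        raw = [
            (1.0 - rng.random())
            * math.exp(-math.dist(base, p) ** 2 / (2 * bandwidth ** 2))
            for p in neighbours
        ]
        total = sum(raw)
        weights = [w / total for w in raw]  # non-negative, sum to 1
        point = tuple(
            sum(w * p[d] for w, p in zip(weights, neighbours))
            for d in range(len(base))
        )
        synthetic.append(point)
    return synthetic


if __name__ == "__main__":
    minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    for point in kernel_convex_oversample(minority, n_new=3, k=2):
        print(point)
```

Because the weights are non-negative and sum to one, every synthetic point stays inside the convex hull of the chosen neighbours, whereas classic SMOTE interpolates along a segment between two points.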

iPOF: An Extremely and Excitingly Simple Outlier Detection Booster via Infinite Propagation

Aug 01, 2021

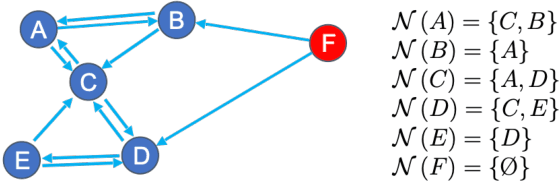

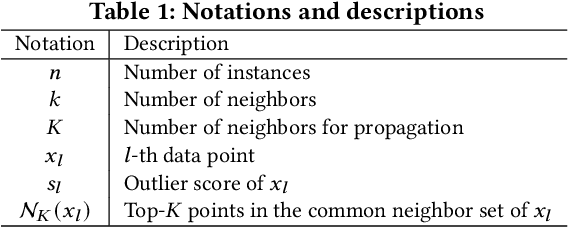

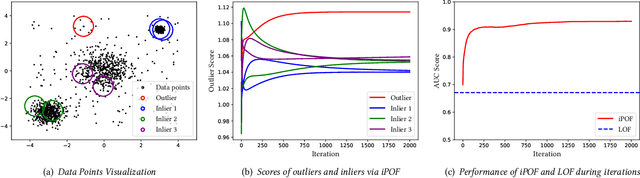

Abstract: Outlier detection is one of the most popular and fastest-growing topics in data mining, owing to its academic value and extensive industrial applications. Among the different settings, unsupervised outlier detection is the most challenging and practical, and it has attracted tremendous effort from diverse perspectives. In this paper, we consider score-based outlier detection and point out that the performance of current outlier detection algorithms might be further boosted by score propagation. Specifically, we propose the Infinite Propagation of Outlier Factor (iPOF) algorithm, an extremely and excitingly simple outlier detection booster via infinite propagation. Using score-based outlier detectors for initialization, iPOF updates each data point's outlier score by averaging the outlier factors of its nearest common neighbors. Extensive experiments on numerous datasets from various domains demonstrate that iPOF is significantly more effective and efficient than several classical and recent state-of-the-art methods. We also provide a parameter analysis of the number of neighbors, the only parameter in iPOF, and of different initial outlier detectors for general validation. It is worth noting that iPOF brings average improvements ranging from 2% to 46%, and in some cases boosts performance by more than 3000% over the original outlier detection algorithm.
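The propagation idea can be sketched roughly as follows. This is not the authors' exact update rule: it uses plain k-nearest neighbours rather than "nearest common neighbors", runs a fixed number of iterations instead of an infinite propagation, and adds a hypothetical damping factor `alpha` that keeps part of the initial score. The function names and the toy data are likewise assumptions.

```python
import math


def knn_indices(points, k):
    """Indices of each point's k nearest neighbours (Euclidean), excluding itself."""
    result = []
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]
        others.sort(key=lambda j: math.dist(p, points[j]))
        result.append(others[:k])
    return result


def propagate_scores(points, init_scores, k=2, n_iter=20, alpha=0.5):
    """Smooth outlier scores by repeatedly averaging over neighbours.

    init_scores would come from any score-based detector. alpha is a
    hypothetical damping factor (not from the abstract) that mixes each
    point's initial score with the mean score of its neighbours.
    """
    neighbours = knn_indices(points, k)
    scores = list(init_scores)
    for _ in range(n_iter):
        scores = [
            (1 - alpha) * init_scores[i]
            + alpha * sum(scores[j] for j in neighbours[i]) / len(neighbours[i])
            for i in range(len(points))
        ]
    return scores


if __name__ == "__main__":
    # Four clustered points plus one isolated point; the second point
    # starts with a spuriously high score from the initial detector.
    points = [(0.0,), (0.1,), (0.2,), (0.3,), (5.0,)]
    init = [0.1, 0.9, 0.1, 0.1, 1.0]
    print(propagate_scores(points, init))
```

In this toy run, the inlier with the inflated initial score is pulled down by its low-scoring neighbours, while the isolated point keeps the highest score.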

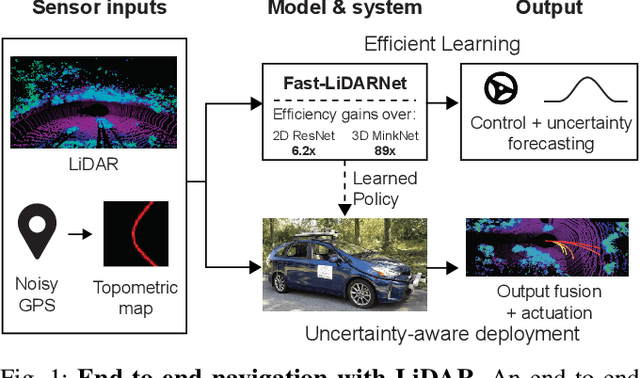

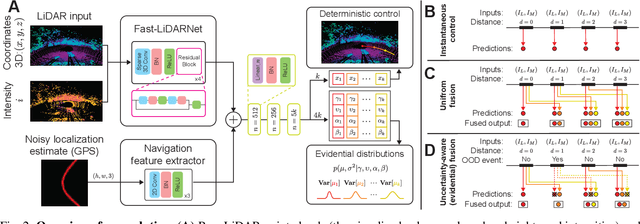

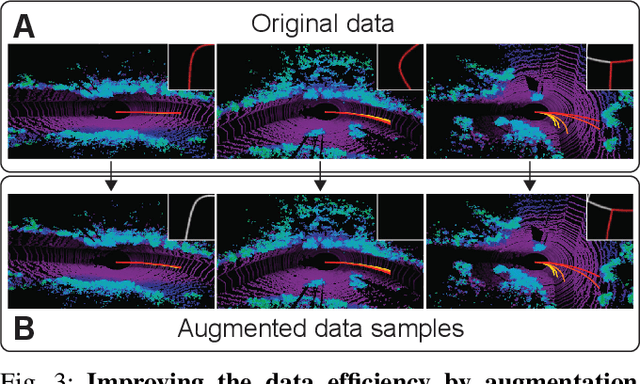

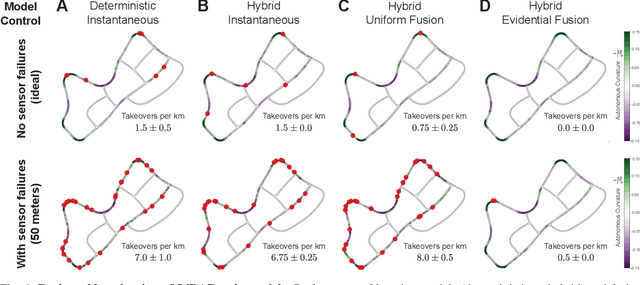

Efficient and Robust LiDAR-Based End-to-End Navigation

May 20, 2021

Abstract: Deep learning has been used to demonstrate end-to-end neural network learning for autonomous vehicle control from raw sensory input. While LiDAR sensors provide reliably accurate information, existing end-to-end driving solutions are mainly based on cameras, since processing 3D data requires a large memory footprint and high computation cost. Increasing the robustness of these systems is also critical; however, even estimating the model's uncertainty is very challenging due to the cost of sampling-based methods. In this paper, we present an efficient and robust LiDAR-based end-to-end navigation framework. We first introduce Fast-LiDARNet, which is based on sparse convolution kernel optimization and hardware-aware model design. We then propose Hybrid Evidential Fusion, which directly estimates the uncertainty of the prediction from only a single forward pass and then fuses the control predictions intelligently. We evaluate our system on a full-scale vehicle and demonstrate both lane-stable and navigation capabilities. In the presence of out-of-distribution events (e.g., sensor failures), our system significantly improves robustness and reduces the number of takeovers in the real world.
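To illustrate why a per-prediction uncertainty estimate is useful for fusion, here is a generic inverse-variance weighting sketch. It is a stand-in technique, not the paper's Hybrid Evidential Fusion, and the function name, the (value, variance) input format, and the example numbers are assumptions.

```python
def fuse_predictions(predictions):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Generic stand-in for uncertainty-aware fusion (not the paper's
    method): predictions with lower variance get proportionally more
    weight. Every variance must be strictly positive.
    """
    precisions = [1.0 / var for _, var in predictions]
    total = sum(precisions)
    value = sum(p * v for p, (v, _) in zip(precisions, predictions)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Two hypothetical steering predictions: a confident one and a very
    # uncertain one, for example from a degraded sensor stream.
    print(fuse_predictions([(0.10, 0.01), (0.80, 4.0)]))
```

With weights of this kind, a prediction flagged as highly uncertain contributes little to the fused control value.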

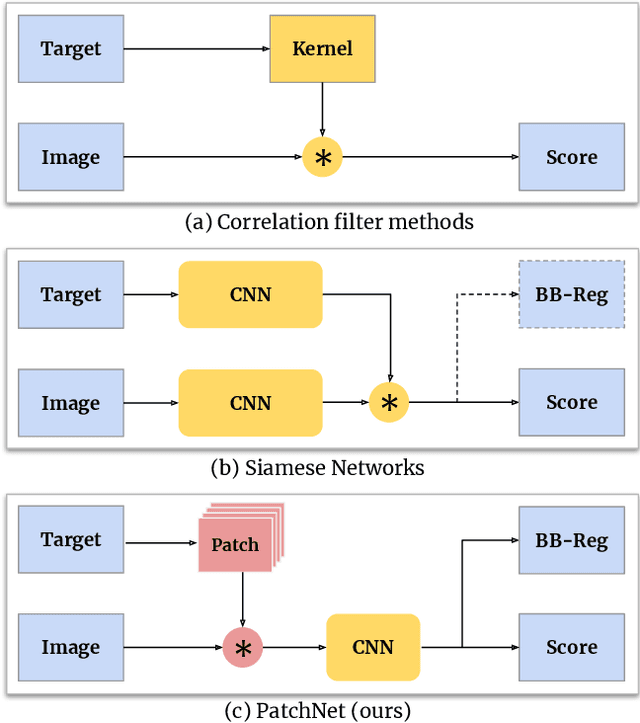

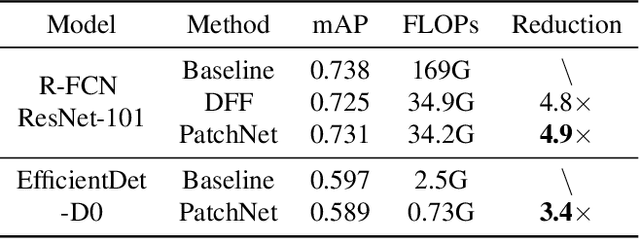

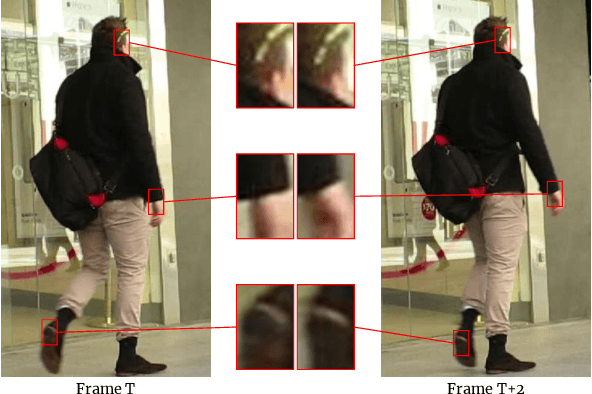

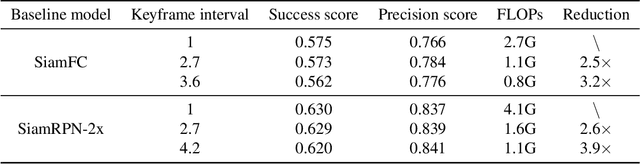

PatchNet -- Short-range Template Matching for Efficient Video Processing

Mar 10, 2021

Abstract: Object recognition is a fundamental problem in many video processing tasks, and accurately locating previously seen objects at low computation cost paves the way for on-device video recognition. We propose PatchNet, an efficient convolutional neural network that matches objects in adjacent video frames. It learns patchwise correlation features instead of pixel features. PatchNet is very compact, running at just 58 MFLOPs, $5\times$ simpler than MobileNetV2. We demonstrate its application on two tasks: video object detection and visual object tracking. On ImageNet VID, PatchNet reduces the FLOPs of R-FCN ResNet-101 by $5\times$ and of EfficientDet-D0 by $3.4\times$ with less than 1% mAP loss. On OTB2015, PatchNet reduces the FLOPs of SiamFC and SiamRPN by $2.5\times$ with no accuracy loss. Experiments on Jetson Nano further demonstrate $2.8\times$ to $4.3\times$ speed-ups associated with the FLOPs reduction. Code is open sourced at https://github.com/RalphMao/PatchNet.

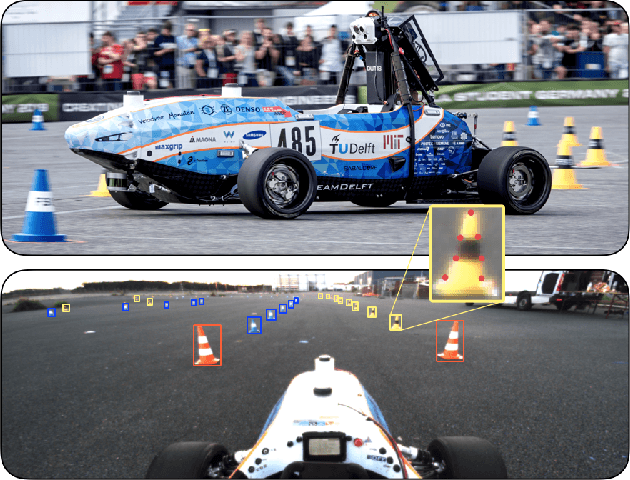

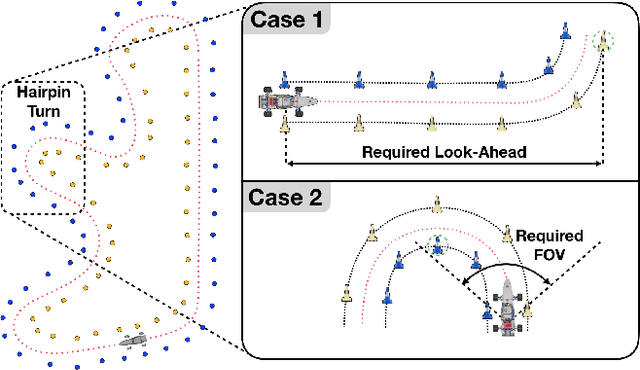

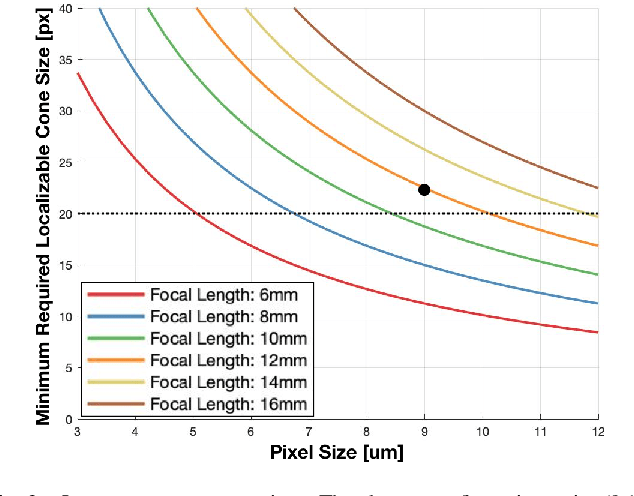

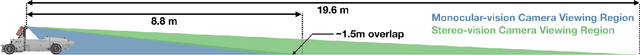

Accurate, Low-Latency Visual Perception for Autonomous Racing: Challenges, Mechanisms, and Practical Solutions

Jul 28, 2020

Abstract: Autonomous racing provides the opportunity to test safety-critical perception pipelines at their limit. This paper describes the practical challenges of, and solutions to, applying state-of-the-art computer vision algorithms to build a low-latency, high-accuracy perception system for DUT18 Driverless (DUT18D), a 4WD electric race car with podium finishes at all Formula Driverless competitions in which it raced. The key components of DUT18D include YOLOv3-based object detection, pose estimation, and time synchronization on its dual stereovision/monovision camera setup. We highlight the modifications required to adapt perception CNNs to racing domains, improvements to the loss functions used for pose estimation, and methodologies for sub-microsecond camera synchronization, among other improvements. We perform a thorough experimental evaluation of the system, demonstrating its accuracy and low latency in real-world racing scenarios.
