Shunli Ren

Interruption-Aware Cooperative Perception for V2X Communication-Aided Autonomous Driving

Apr 24, 2023

Abstract: Cooperative perception enabled by V2X communication technologies can significantly improve the perception performance of autonomous vehicles beyond the limited perception ability of individual vehicles, thereby improving the safety and efficiency of autonomous driving in intelligent transportation systems. However, to fully reap the benefits of cooperative perception in practice, the impacts of imperfect V2X communication, i.e., communication errors and disruptions, need to be understood, and effective remedies need to be developed to alleviate their adverse effects. Motivated by this need, we propose a novel INterruption-aware robust COoperative Perception (V2X-INCOP) solution for V2X communication-aided autonomous driving, which leverages historical information to recover information missing due to interruption. To achieve comprehensive recovery, we design a communication-adaptive multi-scale spatial-temporal prediction model that extracts multi-scale spatial-temporal features based on V2X communication conditions and captures the most significant information for predicting the missing information. To further improve recovery performance, we adopt a knowledge distillation framework to give direct supervision to the prediction model and a curriculum learning strategy to stabilize its training. Our experiments on three public cooperative perception datasets demonstrate that the proposed method is effective in alleviating the impacts of communication interruption on cooperative perception.

Collaborative Perception for Autonomous Driving: Current Status and Future Trend

Aug 22, 2022

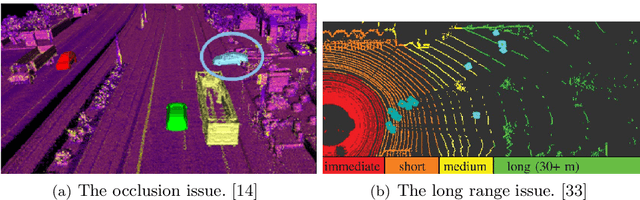

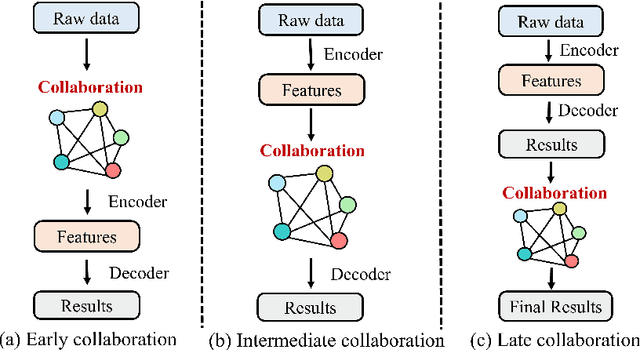

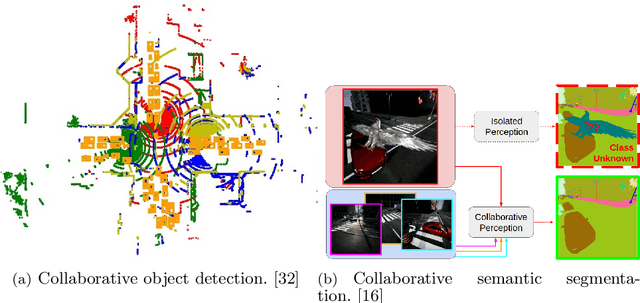

Abstract: Perception is one of the crucial modules of an autonomous driving system and has made great progress recently. However, the limited ability of individual vehicles creates a bottleneck for further improvement in perception performance. To break through the limits of individual perception, collaborative perception has been proposed, which enables vehicles to share information and perceive the environment beyond line-of-sight and field-of-view. In this paper, we review related work on this promising technology: we introduce the fundamental concepts, generalize the collaboration modes, and summarize the key ingredients and applications of collaborative perception. Finally, we discuss the open challenges and issues of this research area and suggest some potential future directions.

Latency-Aware Collaborative Perception

Jul 25, 2022

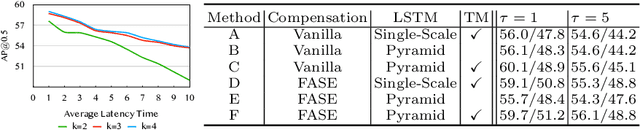

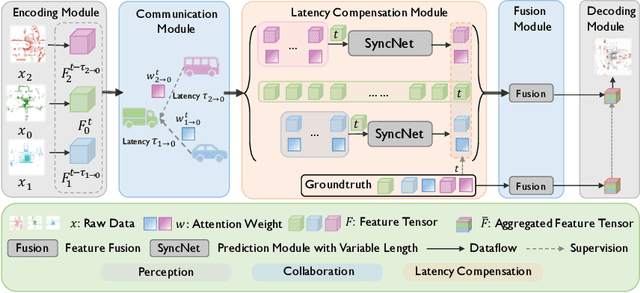

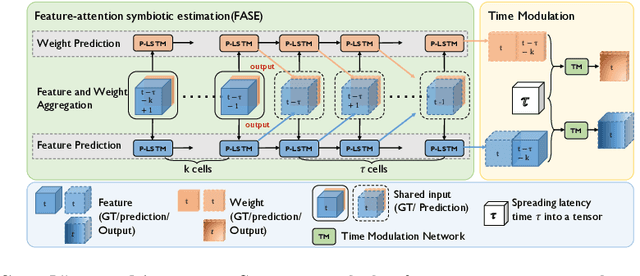

Abstract: Collaborative perception has recently shown great potential to improve perception capabilities over single-agent perception. Existing collaborative perception methods usually assume an ideal communication environment. In practice, however, the communication system inevitably suffers from latency, causing potential performance degradation and high risks in safety-critical applications such as autonomous driving. To mitigate the effect of this inevitable latency from a machine learning perspective, we present the first latency-aware collaborative perception system, which actively adapts asynchronous perceptual features from multiple agents to the same timestamp, promoting the robustness and effectiveness of collaboration. To achieve such feature-level synchronization, we propose a novel latency compensation module, called SyncNet, which leverages feature-attention symbiotic estimation and time modulation techniques. Experimental results show that the proposed latency-aware collaborative perception system with SyncNet outperforms the state-of-the-art collaborative perception method by 15.6% in the communication latency scenario and keeps collaborative perception superior to single-agent perception under severe latency.

Learning Distilled Collaboration Graph for Multi-Agent Perception

Nov 01, 2021

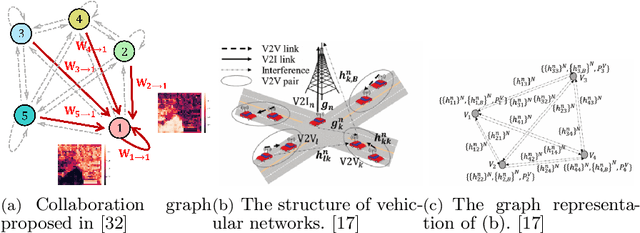

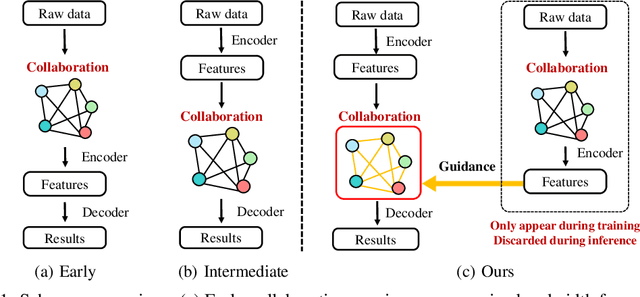

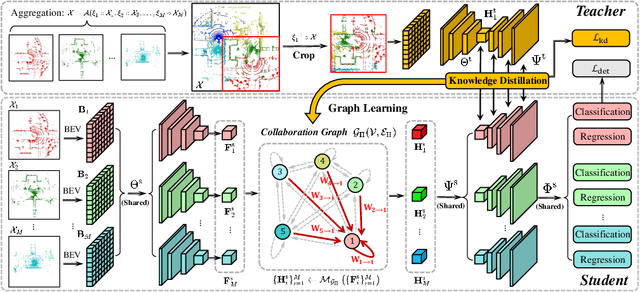

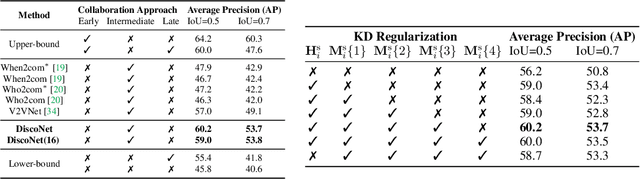

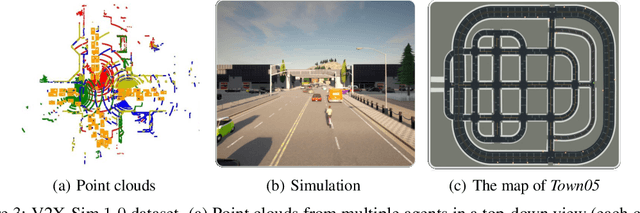

Abstract: To promote a better performance-bandwidth trade-off for multi-agent perception, we propose a novel distilled collaboration graph (DiscoGraph) to model trainable, pose-aware, and adaptive collaboration among agents. Our key novelties lie in two aspects. First, we propose a teacher-student framework to train DiscoGraph via knowledge distillation. The teacher model employs early collaboration with holistic-view inputs; the student model is based on intermediate collaboration with single-view inputs. Our framework trains DiscoGraph by constraining post-collaboration feature maps in the student model to match their correspondences in the teacher model. Second, we propose a matrix-valued edge weight in DiscoGraph. In such a matrix, each element reflects the inter-agent attention at a specific spatial region, allowing an agent to adaptively highlight the informative regions. During inference, we only need to use the student model, named the distilled collaboration network (DiscoNet). Thanks to the teacher-student framework, multiple agents with the shared DiscoNet can collaboratively approach the performance of a hypothetical teacher model with a holistic view. Our approach is validated on V2X-Sim 1.0, a large-scale multi-agent perception dataset that we synthesized using CARLA and SUMO co-simulation. Our quantitative and qualitative experiments in multi-agent 3D object detection show that DiscoNet not only achieves a better performance-bandwidth trade-off than state-of-the-art collaborative perception methods, but also offers a more straightforward design rationale. Our code is available at https://github.com/ai4ce/DiscoNet.
