Shucheng You

Deep learning based cloud detection for remote sensing images by the fusion of multi-scale convolutional features

Oct 13, 2018

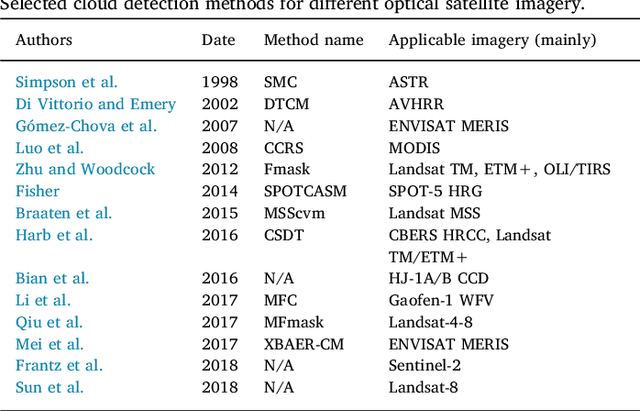

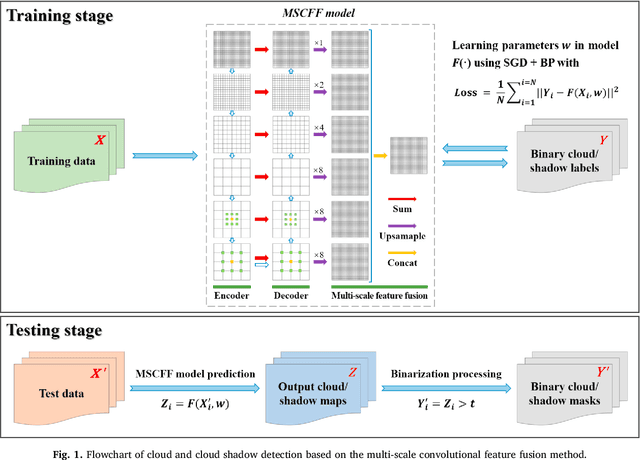

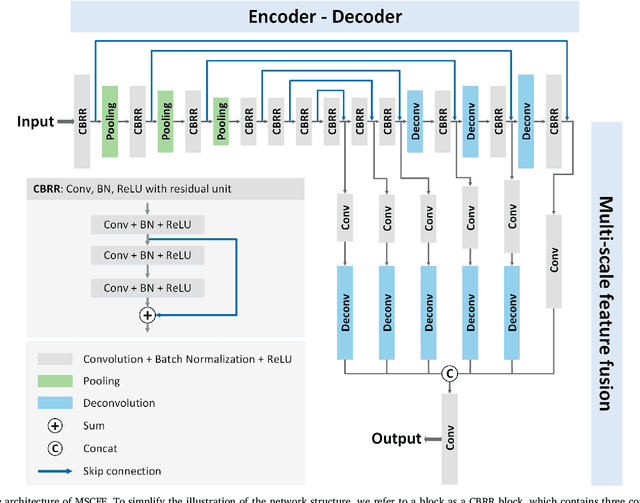

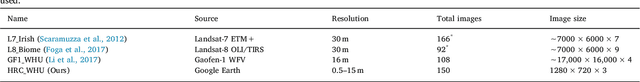

Abstract: Cloud detection is an important preprocessing step for the precise application of optical satellite imagery. In this paper, we propose a deep convolutional neural network based cloud detection method for remote sensing images, named multi-scale convolutional feature fusion (MSCFF). In the MSCFF network architecture, encoder modules and their corresponding decoder modules, which provide both local and global context by densifying feature maps with trainable filter banks, are used to extract multi-scale, high-level spatial features. The feature maps of multiple scales are then up-sampled and concatenated, and a novel MSCFF module fuses the features of different scales to produce the output. The output feature maps of the network are treated as probability maps and fed to a binary classifier for the final pixel-wise cloud and cloud shadow segmentation. The MSCFF method was validated on hundreds of globally distributed optical satellite images with spatial resolutions ranging from 0.5 to 50 m, including Landsat-5/7/8, Gaofen-1/2/4, Sentinel-2, Ziyuan-3, CBERS-04, and Huanjing-1 images, as well as high-resolution images exported from Google Earth. The experimental results indicate that MSCFF is more accurate than both traditional rule-based cloud detection methods and state-of-the-art deep learning models, especially in areas covered by bright surfaces. This effectiveness suggests that MSCFF holds great promise for practical cloud detection across multiple types of satellite imagery. Our global high-resolution cloud detection validation dataset has been made available online.
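The up-sample, concatenate, and fuse step described in the abstract can be illustrated with a minimal NumPy sketch. This is not the MSCFF module from the paper: the function names `upsample_nearest` and `fuse_multiscale`, the nearest-neighbour up-sampling, the single 1x1 fusion convolution, and the 0.5 threshold are all assumptions chosen to keep the example small.

```python
import numpy as np

def upsample_nearest(feat, factor):
    """Nearest-neighbour up-sampling of a (C, H, W) feature map by an integer factor."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)

def fuse_multiscale(features, weights, bias=0.0, threshold=0.5):
    """Fuse feature maps of several scales into one probability map.

    features: list of (C_i, H_i, W_i) arrays; the largest H and W are assumed
              to be integer multiples (by the same factor) of every H_i and W_i.
    weights:  (sum of C_i,) vector acting as a hypothetical 1x1 convolution
              that maps the concatenated channels to a single output channel.
    """
    target_h = max(f.shape[1] for f in features)
    # Bring every scale to the finest resolution.
    upsampled = [upsample_nearest(f, target_h // f.shape[1]) for f in features]
    # Concatenate along the channel axis: (sum C_i, H, W).
    stacked = np.concatenate(upsampled, axis=0)
    # 1x1 convolution = weighted sum over channels at each pixel.
    logits = np.tensordot(weights, stacked, axes=([0], [0])) + bias
    prob = 1.0 / (1.0 + np.exp(-logits))  # sigmoid -> probability map
    return prob, prob >= threshold        # binary mask by simple thresholding

# Three scales with 2, 3, and 1 channels.
features = [np.ones((2, 4, 4)), np.ones((3, 2, 2)), np.ones((1, 1, 1))]
prob, mask = fuse_multiscale(features, weights=np.zeros(6))
```

In a trained network the fusion weights would be learned and the up-sampling would typically be bilinear or a transposed convolution; the sketch only shows how coarse and fine feature maps end up on one grid before a per-pixel decision.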

Learning Transferable Deep Models for Land-Use Classification with High-Resolution Remote Sensing Images

Jul 16, 2018

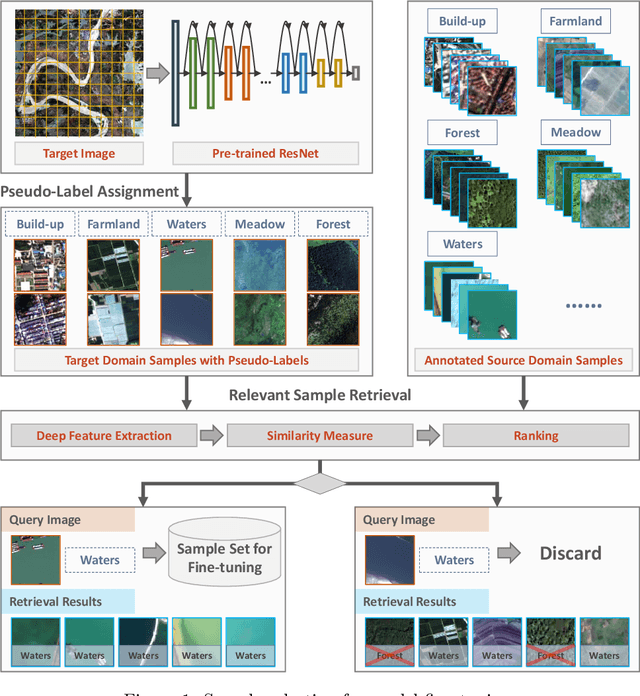

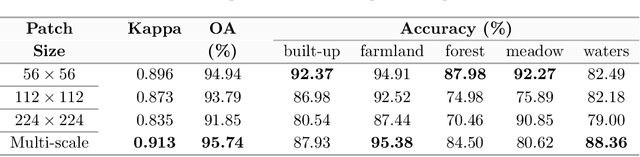

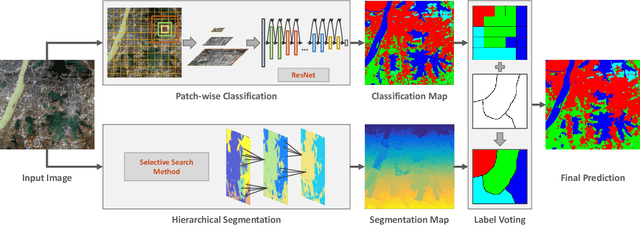

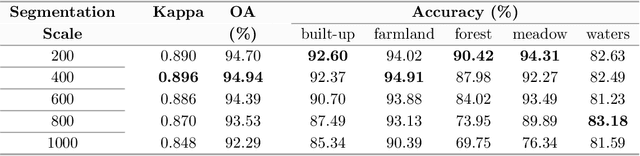

Abstract: In recent years, a large number of high spatial-resolution remote sensing (HRRS) images have become available for land-use mapping. However, due to the complex information brought by the increased spatial resolution and the data disturbances caused by differing image acquisition conditions, it is often difficult to find an efficient method for accurate land-use classification with heterogeneous, high-resolution remote sensing images. In this paper, we propose a scheme to learn transferable deep models for land-use classification with HRRS images. The main idea is to rely on deep neural networks to represent the semantic information contained in different land-use types, and to propose a pseudo-labeling and sample selection scheme that improves the transferability of deep models. More precisely, a deep convolutional neural network (CNN) is first pre-trained on a well-annotated land-use dataset, referred to as the source data. Then, given a target image with no labels, the pre-trained CNN is used to classify the image in a patch-wise manner. Patches with high classification probability are assigned pseudo-labels and used as queries to retrieve related samples from the source data. The pseudo-labels confirmed by the retrieved results are treated as supervised information for fine-tuning the pre-trained deep model. To obtain a pixel-wise land-use classification of the target image, we rely on the fine-tuned CNN and develop a hybrid classification that combines patch-wise classification and hierarchical segmentation. In addition, we create a large-scale land-use dataset containing $150$ Gaofen-2 satellite images for CNN pre-training. Experiments on multi-source HRRS images, including Gaofen-2, Gaofen-1, Jilin-1, Ziyuan-3, and Google Earth images, show encouraging results and demonstrate the efficiency of the proposed scheme.
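The pseudo-labeling and sample selection idea can be sketched in a few lines of NumPy. The abstract does not specify the confidence threshold, the retrieval metric, or the confirmation rule, so the function name `select_pseudo_labels`, the 0.9 threshold, Euclidean nearest-neighbour retrieval, and majority voting over `k` retrieved source samples are all assumptions made for illustration.

```python
import numpy as np

def select_pseudo_labels(probs, target_feats, source_feats, source_labels,
                         conf_thresh=0.9, k=3):
    """Return (patch_index, pseudo_label) pairs confirmed by source retrieval.

    probs:         (N, num_classes) class probabilities for target patches.
    target_feats:  (N, D) feature vectors of the target patches.
    source_feats:  (M, D) feature vectors of labeled source samples.
    source_labels: (M,) integer labels of the source samples.
    """
    pseudo = probs.argmax(axis=1)
    # Step 1: keep only patches the pre-trained model classifies confidently.
    confident = np.flatnonzero(probs.max(axis=1) >= conf_thresh)
    kept = []
    for i in confident:
        # Step 2: use the patch as a query and retrieve the k closest source samples.
        dists = np.linalg.norm(source_feats - target_feats[i], axis=1)
        nearest = np.argsort(dists)[:k]
        # Step 3: confirm the pseudo-label only if the retrieved labels agree with it.
        votes = np.bincount(source_labels[nearest], minlength=probs.shape[1])
        if votes.argmax() == pseudo[i]:
            kept.append((int(i), int(pseudo[i])))
    return kept

probs = np.array([[0.95, 0.05], [0.60, 0.40], [0.05, 0.95]])
target_feats = np.zeros((3, 2))
source_feats = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.0, 5.1]])
source_labels = np.array([0, 0, 1, 1])
confirmed = select_pseudo_labels(probs, target_feats, source_feats,
                                 source_labels, k=2)
```

Here patch 1 is dropped for low confidence and patch 2 is dropped because its retrieved source samples disagree with its pseudo-label; only the confirmed pairs would be passed on as supervision for fine-tuning.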
