Shreshth A. Malik

BatchGFN: Generative Flow Networks for Batch Active Learning

Jun 26, 2023

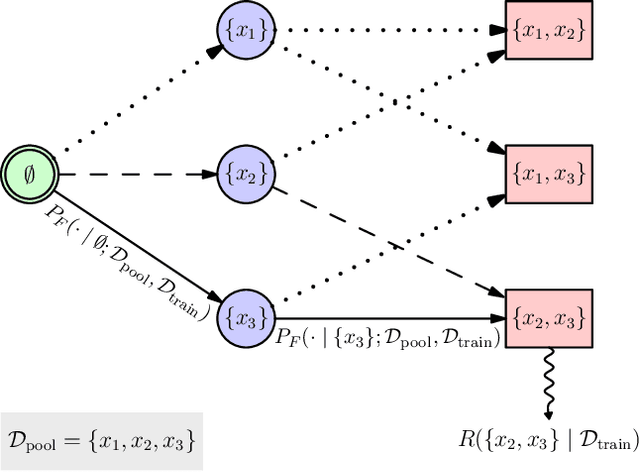

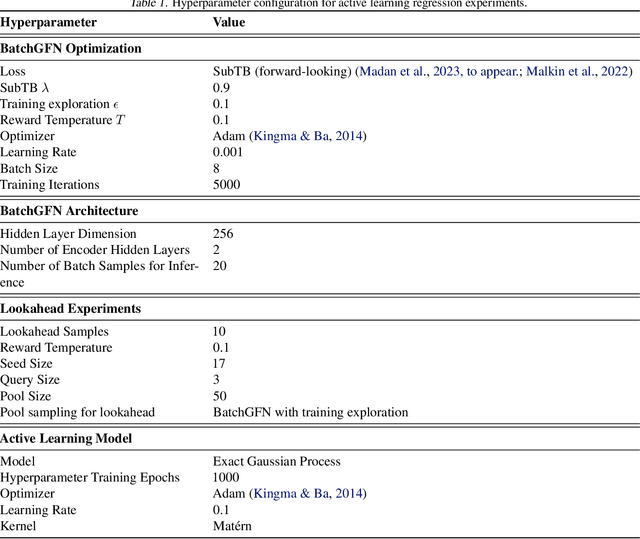

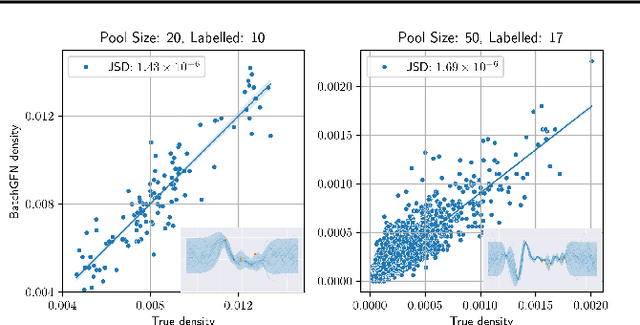

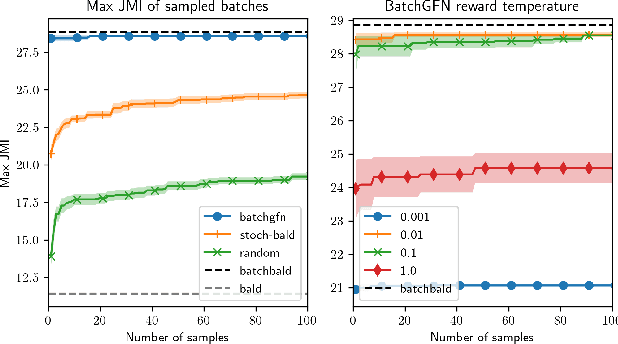

Abstract: We introduce BatchGFN, a novel approach for pool-based active learning that uses generative flow networks to sample sets of data points with probability proportional to a batch reward. With an appropriate reward function to quantify the utility of acquiring a batch, such as the joint mutual information between the batch and the model parameters, BatchGFN is able to construct highly informative batches for active learning in a principled way. We show that, in toy regression problems, our approach enables sampling near-optimal utility batches at inference time with a single forward pass per point in the batch. This alleviates the computational complexity of batch-aware algorithms and removes the need for greedy approximations to find maximizers for the batch reward. We also present early results for amortizing training across acquisition steps, which will enable scaling to real-world tasks.
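To make "sampling batches proportional to a batch reward" concrete, the sketch below enumerates every batch in a tiny pool and samples from the exact reward-proportional distribution. This is not a GFlowNet and not the authors' code: it only shows the target distribution that such a sampler would be trained to approximate when enumeration is infeasible. The reward `toy_batch_reward`, its `penalty` parameter, and the per-point `scores` are hypothetical stand-ins for a real batch utility such as joint mutual information.

```python
import itertools
import math
import random


def toy_batch_reward(batch, scores, penalty=0.5):
    """Hypothetical batch reward: exp(sum of point scores - redundancy penalty)."""
    total = sum(scores[i] for i in batch)
    # Treat neighbouring pool indices as redundant (a crude, assumed proxy).
    redundant_pairs = sum(
        1 for a, b in itertools.combinations(sorted(batch), 2) if b - a == 1
    )
    return math.exp(total - penalty * redundant_pairs)


def batch_distribution(scores, batch_size, penalty=0.5):
    """Return all batches of the given size and their reward-proportional probabilities."""
    batches = list(itertools.combinations(range(len(scores)), batch_size))
    rewards = [toy_batch_reward(b, scores, penalty) for b in batches]
    normalizer = sum(rewards)
    return batches, [r / normalizer for r in rewards]


def sample_batch(scores, batch_size, rng, penalty=0.5):
    """Draw one batch with probability proportional to its reward."""
    batches, probs = batch_distribution(scores, batch_size, penalty)
    return rng.choices(batches, weights=probs, k=1)[0]


scores = [0.2, 1.0, 0.9, 0.1, 0.8]  # assumed per-point informativeness
batches, probs = batch_distribution(scores, batch_size=2)
best = max(zip(probs, batches))[1]  # highest-probability batch
sampled = sample_batch(scores, 2, random.Random(0))
```

Exact enumeration scales combinatorially with pool and batch size, which is the cost that motivates a learned sampler that builds the batch one point at a time.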

Discovering Long-period Exoplanets using Deep Learning with Citizen Science Labels

Nov 13, 2022

Abstract: Automated planetary transit detection has become vital for prioritizing candidates for expert analysis given the scale of modern telescopic surveys. While current methods for short-period exoplanet detection work effectively due to periodicity in the light curves, a robust approach for detecting single-transit events is lacking. However, volunteer-labelled transits recently collected by the Planet Hunters TESS (PHT) project now provide an unprecedented opportunity to investigate a data-driven approach to long-period exoplanet detection. In this work, we train a 1-D convolutional neural network to classify planetary transits using PHT volunteer scores as training data. We find that using volunteer scores significantly improves performance over synthetic data and enables the recovery of known planets at a precision and rate matching that of the volunteers. Importantly, the model also recovers transits found by volunteers but missed by current automated methods.

Multi-Modal Fusion by Meta-Initialization

Oct 10, 2022

Abstract: When experience is scarce, models may have insufficient information to adapt to a new task. In this case, auxiliary information, such as a textual description of the task, can enable improved task inference and adaptation. In this work, we propose an extension to the Model-Agnostic Meta-Learning algorithm (MAML), which allows the model to adapt using auxiliary information as well as task experience. Our method, Fusion by Meta-Initialization (FuMI), conditions the model initialization on auxiliary information using a hypernetwork, rather than learning a single, task-agnostic initialization. Furthermore, motivated by the shortcomings of existing multi-modal few-shot learning benchmarks, we constructed iNat-Anim, a large-scale image classification dataset with succinct and visually pertinent textual class descriptions. On iNat-Anim, FuMI significantly outperforms uni-modal baselines such as MAML in the few-shot regime. The code for this project and a dataset exploration tool for iNat-Anim are publicly available at https://github.com/s-a-malik/multi-few.
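The idea of conditioning the initialization on auxiliary information can be illustrated with a very small sketch. It assumes a linear model, a linear "hypernetwork" given by a fixed matrix `H`, and a squared-error loss, none of which come from the paper. It shows only the forward path (auxiliary vector to initial weights, then a MAML-style inner gradient step on the support set), and omits the outer loop that would meta-train `H`. All names here are hypothetical and unrelated to the released code.

```python
def hypernet_init(aux, H):
    """Map an auxiliary embedding to initial weights: w0 = H @ aux (assumed linear)."""
    return [sum(h * a for h, a in zip(row, aux)) for row in H]


def predict(w, x):
    """Linear model prediction."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, xs, ys):
    """Mean squared error over a set of examples."""
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def inner_adapt(w, xs, ys, lr=0.1, steps=1):
    """Inner-loop adaptation: plain gradient descent on the support-set MSE."""
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            err = predict(w, x) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(xs)
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


# Assumed toy task: a 2-d auxiliary embedding and two support examples.
H = [[1.0, 0.0], [0.0, 1.0]]          # hypothetical hypernetwork weights
aux = [1.0, 0.0]                      # e.g. an embedding of a class description
support_x = [[1.0, 0.0], [0.0, 1.0]]
support_y = [2.0, 1.0]

w0 = hypernet_init(aux, H)            # task-conditioned initialization
loss_before = mse(w0, support_x, support_y)
w1 = inner_adapt(w0, support_x, support_y)
loss_after = mse(w1, support_x, support_y)
```

By contrast, standard MAML would start every task from the same learned `w0`, regardless of `aux`.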
