Chris J. Lintott

What We Don't C: Representations for scientific discovery beyond VAEs

Nov 12, 2025

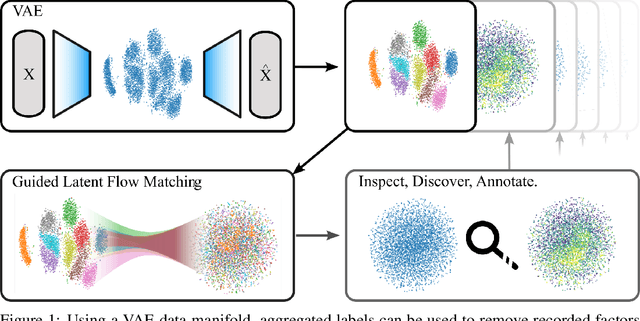

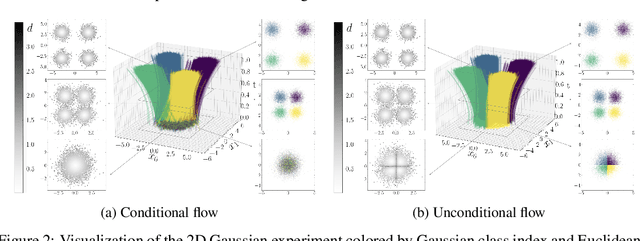

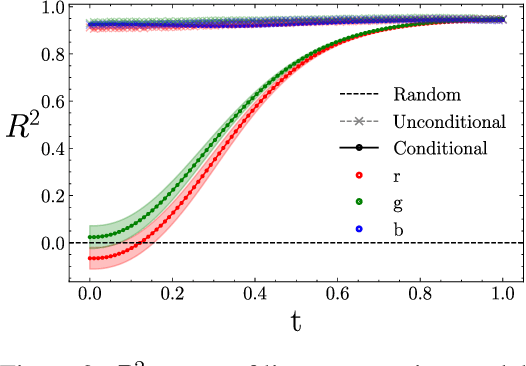

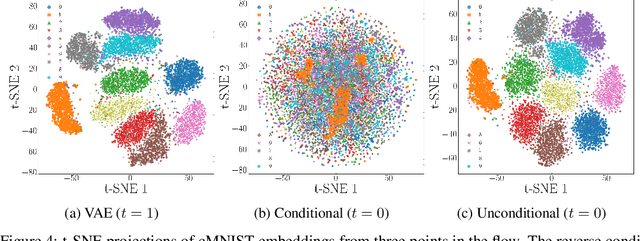

Abstract: Accessing information in learned representations is critical for scientific discovery in high-dimensional domains. We introduce a novel method based on latent flow matching with classifier-free guidance that disentangles latent subspaces by explicitly separating information included in conditioning from information that remains in the residual representation. Across three experiments (a synthetic 2D Gaussian toy problem, colored MNIST, and the Galaxy10 astronomy dataset) we show that our method enables access to meaningful features of high-dimensional data. Our results highlight a simple yet powerful mechanism for analyzing, controlling, and repurposing latent representations, providing a pathway toward using generative models for scientific exploration of what we don't capture, consider, or catalog.

Scaling Laws for Galaxy Images

Apr 03, 2024

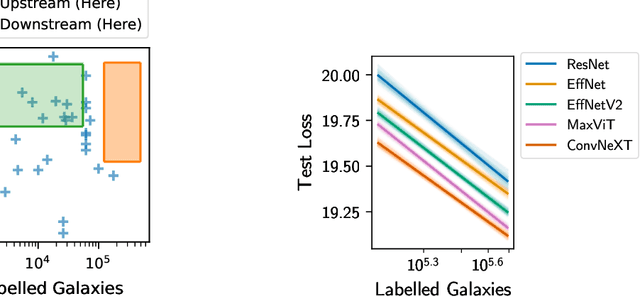

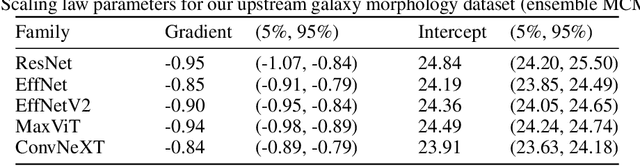

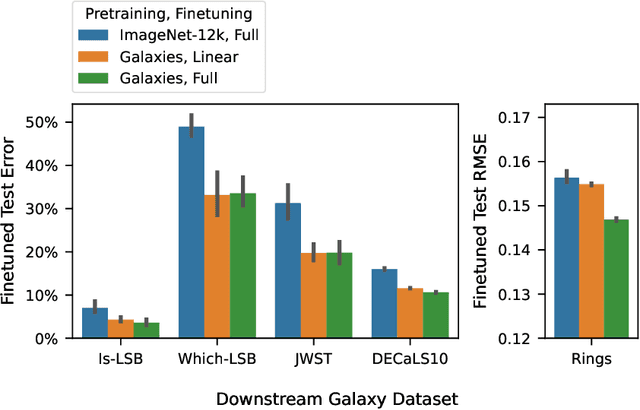

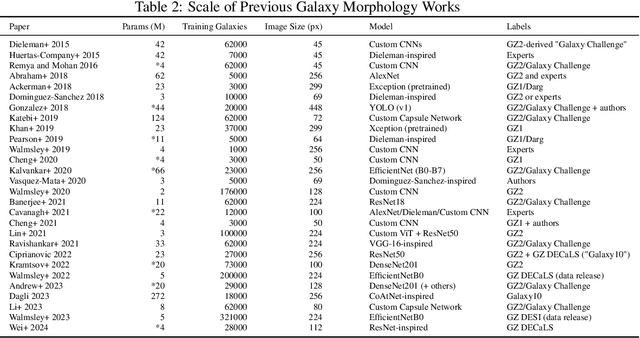

Abstract: We present the first systematic investigation of supervised scaling laws outside of an ImageNet-like context, on images of galaxies. We use 840k galaxy images and over 100M annotations by Galaxy Zoo volunteers, comparable in scale to ImageNet-1K. We find that adding annotated galaxy images provides a power-law improvement in performance across all architectures and all tasks, while adding trainable parameters is effective only for some (typically more subjectively challenging) tasks. We then compare the downstream performance of finetuned models pretrained either on ImageNet-12k alone or additionally on our galaxy images. We achieve an average relative error rate reduction of 31% across 5 downstream tasks of scientific interest. Our finetuned models are more label-efficient and, unlike their ImageNet-12k-pretrained equivalents, often achieve linear transfer performance equal to that of end-to-end finetuning. We find relatively modest additional downstream benefits from scaling model size, implying that scaling alone is not sufficient to address our domain gap, and suggest that practitioners with qualitatively different images might benefit more from in-domain adaptation followed by targeted downstream labelling.

The weird and the wonderful in our Solar System: Searching for serendipity in the Legacy Survey of Space and Time

Jan 16, 2024

Abstract: We present a novel method for anomaly detection in Solar System object data, in preparation for the Legacy Survey of Space and Time. We train a deep autoencoder for anomaly detection and use the learned latent space to search for other interesting objects. We demonstrate the efficacy of the autoencoder approach by finding interesting examples, such as interstellar objects, and show that using the autoencoder, further examples of interesting classes can be found. We also investigate the limits of classic unsupervised approaches to anomaly detection through the generation of synthetic anomalies and evaluate the feasibility of using a supervised learning approach. Future work should consider expanding the feature space to increase the variety of anomalies that can be uncovered during the survey using an autoencoder.

Discovering Long-period Exoplanets using Deep Learning with Citizen Science Labels

Nov 13, 2022

Abstract: Automated planetary transit detection has become vital to prioritize candidates for expert analysis given the scale of modern telescopic surveys. While current methods for short-period exoplanet detection work effectively due to periodicity in the light curves, a robust approach for detecting single-transit events is lacking. However, volunteer-labelled transits recently collected by the Planet Hunters TESS (PHT) project now provide an unprecedented opportunity to investigate a data-driven approach to long-period exoplanet detection. In this work, we train a 1-D convolutional neural network to classify planetary transits using PHT volunteer scores as training data. We find that using volunteer scores significantly improves performance over synthetic data, and enables the recovery of known planets at a precision and rate matching that of the volunteers. Importantly, the model also recovers transits found by volunteers but missed by current automated methods.
