Micah Bowles

What We Don't C: Representations for scientific discovery beyond VAEs

Nov 12, 2025

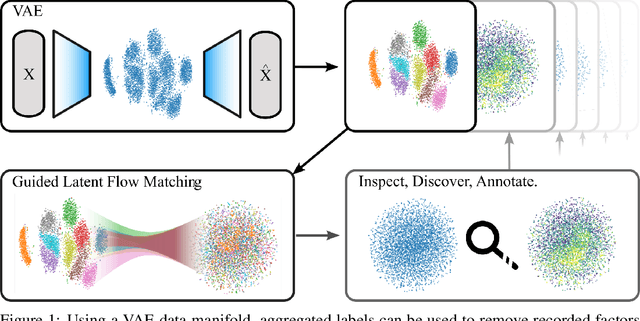

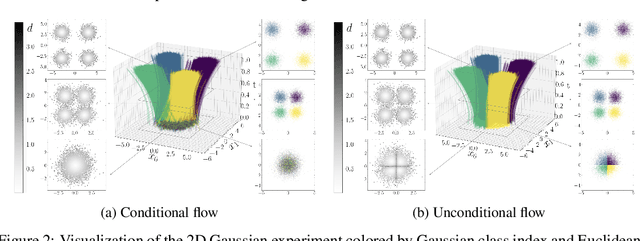

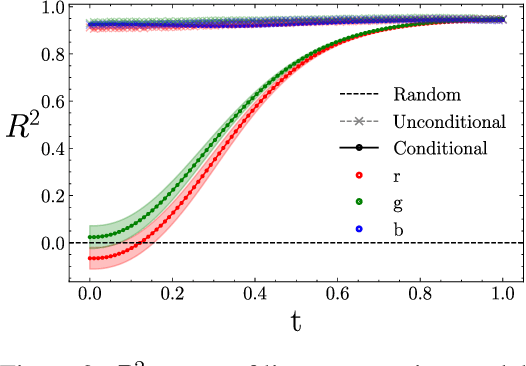

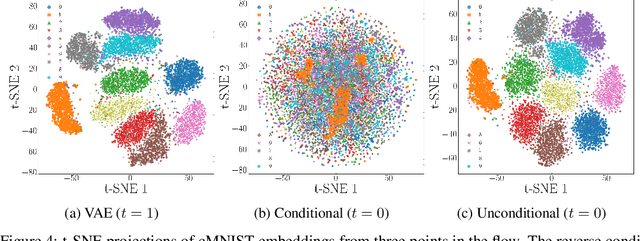

Abstract: Accessing information in learned representations is critical for scientific discovery in high-dimensional domains. We introduce a novel method based on latent flow matching with classifier-free guidance that disentangles latent subspaces by explicitly separating information included in conditioning from information that remains in the residual representation. Across three experiments -- a synthetic 2D Gaussian toy problem, colored MNIST, and the Galaxy10 astronomy dataset -- we show that our method enables access to meaningful features of high-dimensional data. Our results highlight a simple yet powerful mechanism for analyzing, controlling, and repurposing latent representations, providing a pathway toward using generative models for scientific exploration of what we don't capture, consider, or catalog.

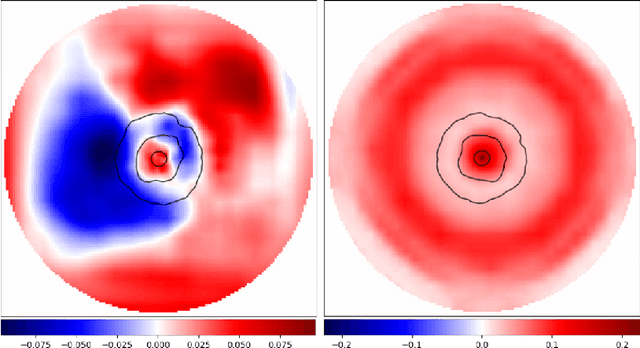

IRIS: A Bayesian Approach for Image Reconstruction in Radio Interferometry with expressive Score-Based priors

Jan 05, 2025

Abstract: Inferring sky surface brightness distributions from noisy interferometric data in a principled statistical framework has been a key challenge in radio astronomy. In this work, we introduce Imaging for Radio Interferometry with Score-based models (IRIS). We use score-based models trained on optical images of galaxies as an expressive prior in combination with a Gaussian likelihood in the uv-space to infer images of protoplanetary disks from visibility data of the DSHARP survey conducted by ALMA. We demonstrate the advantages of this framework compared with traditional radio interferometry imaging algorithms, showing that it produces plausible posterior samples despite the use of a misspecified galaxy prior. Through coverage testing on simulations, we empirically evaluate the accuracy of this approach to generate calibrated posterior samples.

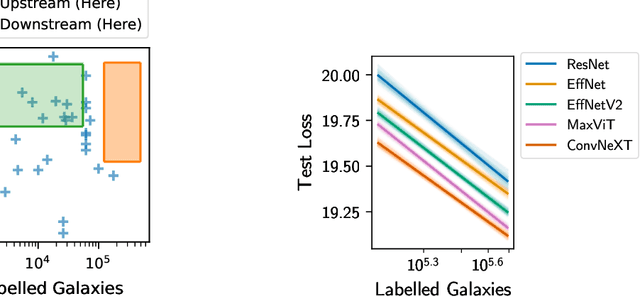

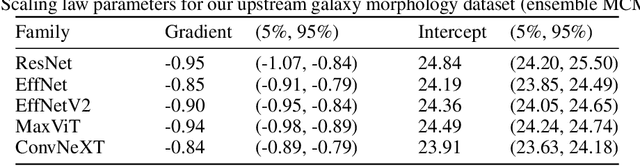

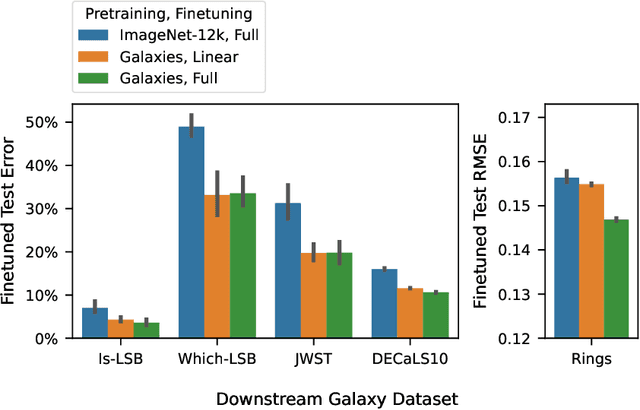

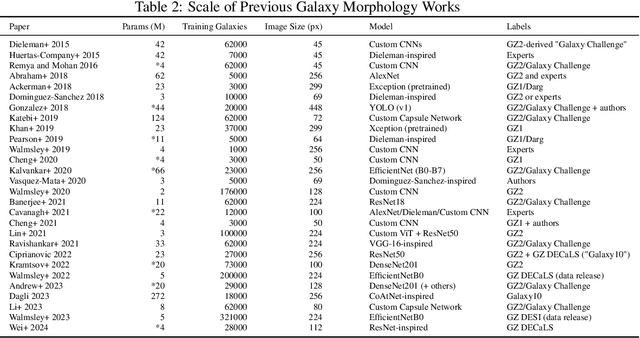

Scaling Laws for Galaxy Images

Apr 03, 2024

Abstract: We present the first systematic investigation of supervised scaling laws outside of an ImageNet-like context - on images of galaxies. We use 840k galaxy images and over 100M annotations by Galaxy Zoo volunteers, comparable in scale to ImageNet-1K. We find that adding annotated galaxy images provides a power law improvement in performance across all architectures and all tasks, while adding trainable parameters is effective only for some (typically more subjectively challenging) tasks. We then compare the downstream performance of finetuned models pretrained on either ImageNet-12k alone vs. additionally pretrained on our galaxy images. We achieve an average relative error rate reduction of 31% across 5 downstream tasks of scientific interest. Our finetuned models are more label-efficient and, unlike their ImageNet-12k-pretrained equivalents, often achieve linear transfer performance equal to that of end-to-end finetuning. We find relatively modest additional downstream benefits from scaling model size, implying that scaling alone is not sufficient to address our domain gap, and suggest that practitioners with qualitatively different images might benefit more from in-domain adaptation followed by targeted downstream labelling.
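As a rough illustration of the two quantities this abstract mentions, the sketch below fits a power law to error versus training-set size and computes a relative error rate reduction. The function names and all numbers are hypothetical and synthetic; they are not taken from the paper, and the paper's own fitting procedure may differ.

```python
import numpy as np

def fit_power_law(n_samples, error):
    """Fit error ~ a * n_samples**(-b) by least squares in log-log space.

    Returns (a, b). This is a generic fit, assumed here for illustration.
    """
    slope, intercept = np.polyfit(np.log(n_samples), np.log(error), deg=1)
    return float(np.exp(intercept)), float(-slope)

def relative_error_reduction(baseline_error, new_error):
    """Fraction of the baseline error rate removed by the new model."""
    return (baseline_error - new_error) / baseline_error

# Synthetic, made-up points lying exactly on error = 2.0 * n**(-0.25).
n = np.array([1e3, 1e4, 1e5, 1e6])
err = 2.0 * n ** -0.25
a, b = fit_power_law(n, err)
```

On a log-log plot a power law is a straight line, which is why a degree-1 polynomial fit to the logarithms recovers the prefactor and exponent.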

Bayesian Imaging for Radio Interferometry with Score-Based Priors

Nov 29, 2023

Abstract: The inverse imaging task in radio interferometry is a key limiting factor to retrieving Bayesian uncertainties in radio astronomy in a computationally effective manner. We use a score-based prior derived from optical images of galaxies to recover images of protoplanetary disks from the DSHARP survey. We demonstrate that our method produces plausible posterior samples despite the misspecified galaxy prior. We show that our approach produces results which are competitive with existing radio interferometry imaging algorithms.

A New Task: Deriving Semantic Class Targets for the Physical Sciences

Oct 27, 2022

Abstract: We define deriving semantic class targets as a novel multi-modal task. By doing so, we aim to improve classification schemes in the physical sciences which can be severely abstracted and obfuscating. We address this task for upcoming radio astronomy surveys and present the derived semantic radio galaxy morphology class targets.

Towards Galaxy Foundation Models with Hybrid Contrastive Learning

Jun 23, 2022

Abstract: New astronomical tasks are often related to earlier tasks for which labels have already been collected. We adapt the contrastive framework BYOL to leverage those labels as a pretraining task while also enforcing augmentation invariance. For large-scale pretraining, we introduce GZ-Evo v0.1, a set of 96.5M volunteer responses for 552k galaxy images plus a further 1.34M comparable unlabelled galaxies. Most of the 206 GZ-Evo answers are unknown for any given galaxy, and so our pretraining task uses a Dirichlet loss that naturally handles unknown answers. GZ-Evo pretraining, with or without hybrid learning, improves on direct training even with plentiful downstream labels (+4% accuracy with 44k labels). Our hybrid pretraining/contrastive method further improves downstream accuracy vs. pretraining or contrastive learning, especially in the low-label transfer regime (+6% accuracy with 750 labels).

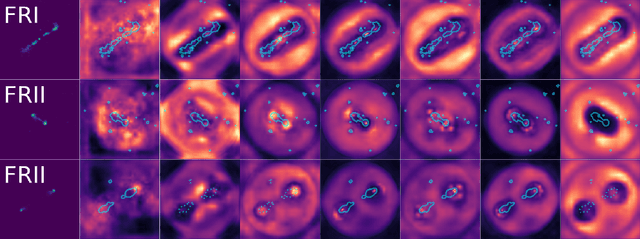

Radio Galaxy Zoo: Using semi-supervised learning to leverage large unlabelled data-sets for radio galaxy classification under data-set shift

Apr 21, 2022

Abstract: In this work we examine the classification accuracy and robustness of a state-of-the-art semi-supervised learning (SSL) algorithm applied to the morphological classification of radio galaxies. We test if SSL with fewer labels can achieve test accuracies comparable to the supervised state-of-the-art and whether this holds when incorporating previously unseen data. We find that for the radio galaxy classification problem considered, SSL provides additional regularisation and outperforms the baseline test accuracy. However, in contrast to model performance metrics reported on computer science benchmarking data-sets, we find that improvement is limited to a narrow range of label volumes, with performance falling off rapidly at low label volumes. Additionally, we show that SSL does not improve model calibration, regardless of whether classification is improved. Moreover, we find that when different underlying catalogues drawn from the same radio survey are used to provide the labelled and unlabelled data-sets required for SSL, a significant drop in classification performance is observed, highlighting the difficulty of applying SSL techniques under data-set shift. We show that a class-imbalanced unlabelled data pool negatively affects performance through prior probability shift, which we suggest may explain this performance drop, and that using the Frechet Distance between labelled and unlabelled data-sets as a measure of data-set shift can provide a prediction of model performance, but that for typical radio galaxy data-sets with labelled sample volumes of O(1000), the sample variance associated with this technique is high and the technique is in general not sufficiently robust to replace a train-test cycle.
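The abstract does not say how the Frechet Distance is computed. A minimal sketch, assuming the common approach of fitting a Gaussian to feature vectors from each data-set (as in FID-style metrics), could look like this. The function name, the feature arrays, and their dimensions are hypothetical.

```python
import numpy as np

def frechet_distance(x, y):
    """Frechet distance between Gaussians fitted to two feature sets.

    x, y: arrays of shape (n_samples, n_features).
    d^2 = |mu_x - mu_y|^2 + Tr(C_x + C_y - 2 (C_x C_y)^(1/2))
    """
    mu_x, mu_y = x.mean(axis=0), y.mean(axis=0)
    cov_x = np.cov(x, rowvar=False)
    cov_y = np.cov(y, rowvar=False)
    # Tr((C_x C_y)^(1/2)) equals the sum of square roots of the eigenvalues
    # of C_x C_y, which are real and non-negative for covariance matrices.
    eigvals = np.linalg.eigvals(cov_x @ cov_y)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_x - mu_y) ** 2)
    return float(mean_term + np.trace(cov_x) + np.trace(cov_y) - 2.0 * tr_sqrt)

# Synthetic stand-ins for features of labelled and unlabelled samples.
rng = np.random.default_rng(0)
labelled = rng.normal(size=(500, 8))
unlabelled = rng.normal(loc=0.5, size=(500, 8))
shift = frechet_distance(labelled, unlabelled)
```

With only around a thousand labelled samples, the estimated means and covariances are themselves noisy, which is consistent with the high sample variance the abstract reports for this measure.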

Quantifying Uncertainty in Deep Learning Approaches to Radio Galaxy Classification

Jan 24, 2022

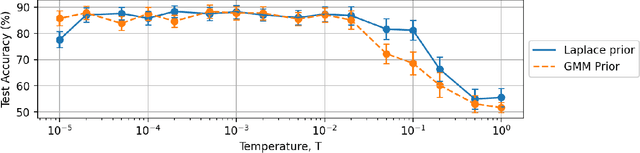

Abstract: In this work we use variational inference to quantify the degree of uncertainty in deep learning model predictions of radio galaxy classification. We show that the level of model posterior variance for individual test samples is correlated with human uncertainty when labelling radio galaxies. We explore the model performance and uncertainty calibration for different weight priors and suggest that a sparse prior produces better-calibrated uncertainty estimates. Using the posterior distributions for individual weights, we demonstrate that we can prune 30% of the fully-connected layer weights without significant loss of performance by removing the weights with the lowest signal-to-noise ratio. A larger degree of pruning can be achieved using a Fisher information based ranking, but both pruning methods affect the uncertainty calibration for Fanaroff-Riley type I and type II radio galaxies differently. Like other work in this field, we experience a cold posterior effect, whereby the posterior must be down-weighted to achieve good predictive performance. We examine whether adapting the cost function to accommodate model misspecification can compensate for this effect, but find that it does not make a significant difference. We also examine the effect of principled data augmentation and find that this improves upon the baseline but also does not compensate for the observed effect. We interpret this as the cold posterior effect being due to the overly effective curation of our training sample leading to likelihood misspecification, and raise this as a potential issue for Bayesian deep learning approaches to radio galaxy classification in future.
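The signal-to-noise pruning described in this abstract can be sketched as follows, assuming a mean-field Gaussian posterior in which each weight has a mean `mu` and standard deviation `sigma`, and taking the signal-to-noise ratio to be |mu| / sigma. The function name, array shapes, and random values are hypothetical; the paper's exact definition may differ.

```python
import numpy as np

def snr_prune_mask(mu, sigma, fraction):
    """Boolean mask that is False for the `fraction` of weights with lowest SNR.

    mu, sigma: posterior mean and standard deviation per weight (same shape).
    """
    snr = np.abs(mu) / sigma
    n_prune = int(np.floor(fraction * snr.size))
    mask = np.ones(snr.size, dtype=bool)
    if n_prune > 0:
        # Indices (into the flattened array) of the lowest-SNR weights.
        lowest = np.argsort(snr, axis=None)[:n_prune]
        mask[lowest] = False
    return mask.reshape(mu.shape)

# Synthetic posterior parameters for a 10x10 fully-connected layer.
rng = np.random.default_rng(0)
mu = rng.normal(size=(10, 10))
sigma = rng.uniform(0.1, 1.0, size=(10, 10))

mask = snr_prune_mask(mu, sigma, fraction=0.3)
pruned_mu = np.where(mask, mu, 0.0)  # pruned weights are set to zero
```

A weight with a small mean relative to its posterior spread is poorly determined by the data, so removing it first is the least likely to change predictions.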

E(2) Equivariant Self-Attention for Radio Astronomy

Nov 30, 2021

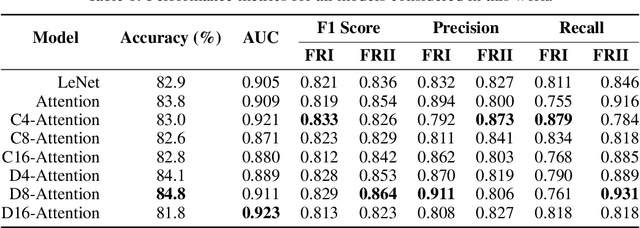

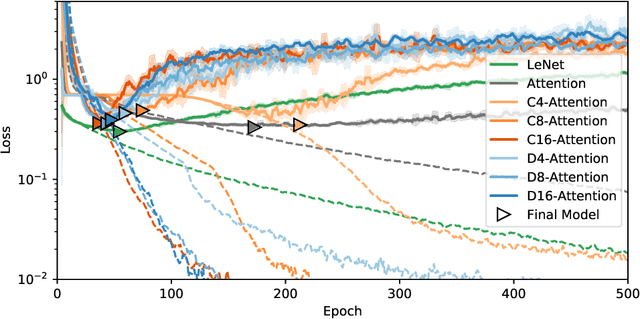

Abstract: In this work we introduce group-equivariant self-attention models to address the problem of explainable radio galaxy classification in astronomy. We evaluate various orders of both cyclic and dihedral equivariance, and show that including equivariance as a prior both reduces the number of epochs required to fit the data and results in improved performance. We highlight the benefits of equivariance when using self-attention as an explainable model and illustrate how equivariant models statistically attend to the same features in their classifications as human astronomers.

Attention-gating for improved radio galaxy classification

Dec 02, 2020

Abstract: In this work we introduce attention as a state-of-the-art mechanism for classification of radio galaxies using convolutional neural networks. We present an attention-based model that performs on par with previous classifiers while using more than 50% fewer parameters than the next smallest classic CNN application in this field. We demonstrate quantitatively how the selection of normalisation and aggregation methods used in attention-gating can affect the output of individual models, and show that the resulting attention maps can be used to interpret the classification choices made by the model. We observe that the salient regions identified by our model align well with the regions an expert human classifier would attend to when making equivalent classifications. We show that while the selection of normalisation and aggregation may only minimally affect the performance of individual models, it can significantly affect the interpretability of the respective attention maps, and that by selecting a model which aligns well with how astronomers classify radio sources by eye, a user can employ the model in a more effective manner.
