Shiva Pentyala

Machine Learning Explanations to Prevent Overtrust in Fake News Detection

Jul 27, 2020

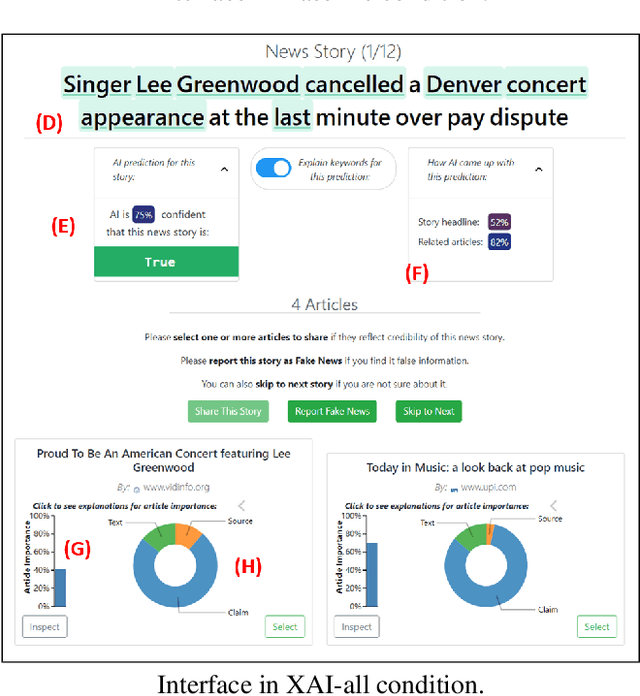

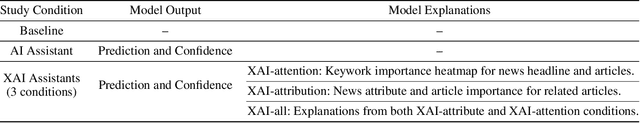

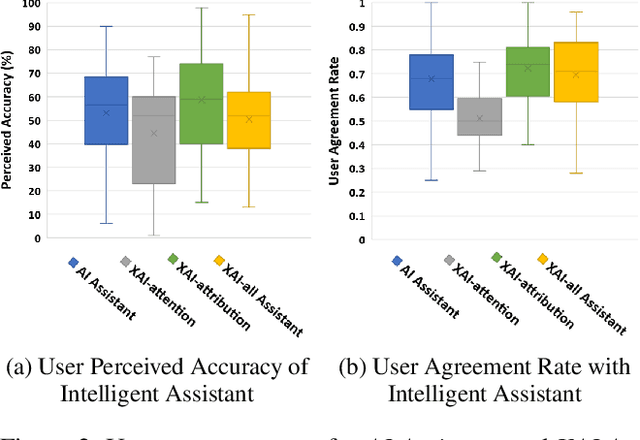

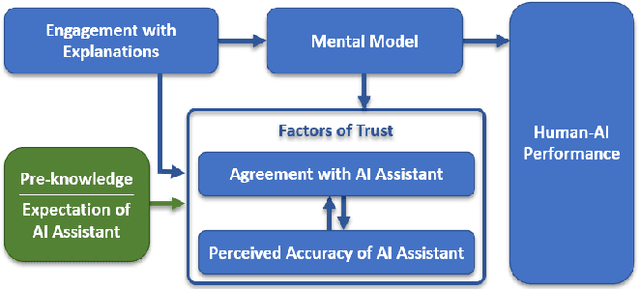

Abstract: Combating fake news and the propagation of misinformation is a challenging task in the post-truth era. News feed and search algorithms can unintentionally propagate false and fabricated information at large scale by exposing users to algorithmically selected false content. Our research investigates the effects of an Explainable AI assistant embedded in news review platforms for combating the propagation of fake news. We design a news reviewing and sharing interface, create a dataset of news stories, and train four interpretable fake news detection algorithms to study the effects of algorithmic transparency on end-users. We present evaluation results and analysis from multiple controlled crowdsourced studies. For a deeper understanding of Explainable AI systems, we discuss interactions between user engagement, mental model, trust, and performance measures in the process of explaining. The study results indicate that explanations helped participants build appropriate mental models of the intelligent assistants in different conditions and adjust their trust to account for model limitations.

Towards Generalizable Forgery Detection with Locality-aware AutoEncoder

Sep 13, 2019

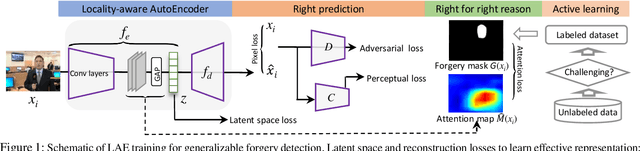

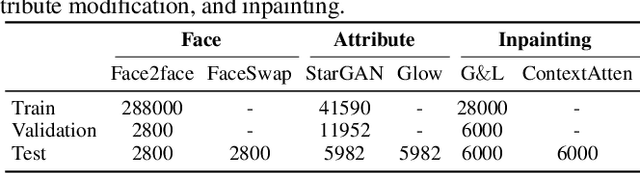

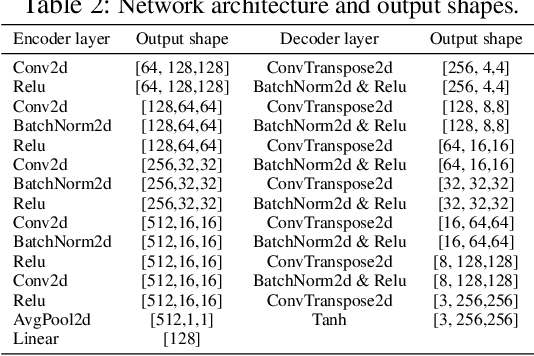

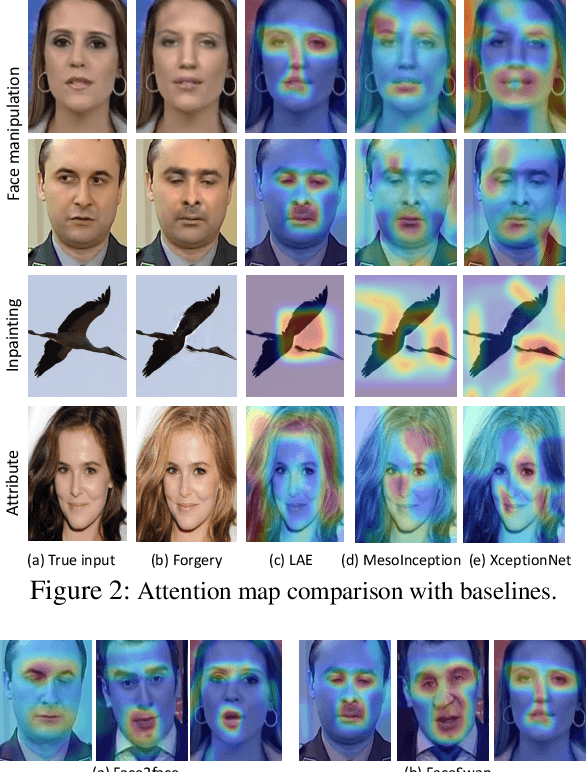

Abstract: With advances in deep learning techniques, it is now possible to generate super-realistic fake images and videos. These manipulated forgeries could reach a mass audience and have adverse impacts on society. Although considerable effort has been devoted to detecting forgeries, detector performance drops significantly on previously unseen but related manipulations, and generalization remains a problem. To bridge this gap, we propose the Locality-aware AutoEncoder (LAE), which combines fine-grained representation learning and locality enforcement in a unified framework. During training, we use a pixel-wise mask to regularize the local interpretation of LAE, forcing the model to learn intrinsic representations from the forgery region instead of capturing artifacts in the training set and learning spurious correlations to perform detection. We further propose an active learning framework that selects challenging candidates for labeling, reducing the annotation effort needed to regularize interpretations. Experimental results indicate that LAE does focus on the forgery regions to make decisions. The results further show that LAE achieves superior generalization performance compared to state-of-the-art methods on forgeries generated by alternative manipulation methods.
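The abstract does not give the exact form of the mask regularizer, so the following is only an illustrative sketch of the general idea: a classification loss plus a penalty that grows when the model's attention falls outside an annotated forgery region. The function names, the mean-squared-error penalty, and the `weight` value are all assumptions made for illustration and are not taken from the paper.

```python
def attention_mask_penalty(attention, mask):
    """Mean squared difference between an attention map and a binary forgery mask.

    Both arguments are 2D lists of equal shape. This penalty form is a
    hypothetical stand-in, not the regularizer used in the paper.
    """
    total = 0.0
    count = 0
    for attention_row, mask_row in zip(attention, mask):
        for a, m in zip(attention_row, mask_row):
            total += (a - m) ** 2
            count += 1
    return total / count


def total_loss(classification_loss, attention, mask, weight=0.5):
    """Combine a classification loss with the locality penalty (weight is assumed)."""
    return classification_loss + weight * attention_mask_penalty(attention, mask)


# Toy 3x3 example: the forged region is the lower-right 2x2 block.
mask = [[0, 0, 0],
        [0, 1, 1],
        [0, 1, 1]]

# Attention concentrated on the forged region.
focused = [[0.0, 0.1, 0.0],
           [0.1, 0.9, 0.8],
           [0.0, 0.9, 1.0]]

# Attention spread over the untouched background instead.
scattered = [[0.9, 0.8, 0.7],
             [0.6, 0.1, 0.2],
             [0.9, 0.0, 0.1]]

focused_penalty = attention_mask_penalty(focused, mask)
scattered_penalty = attention_mask_penalty(scattered, mask)
print(focused_penalty < scattered_penalty)
```

With the same classification loss, the model that attends to the background pays a larger total loss, which is the pressure toward the forgery region that the abstract describes.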

Multi-Task Networks With Universe, Group, and Task Feature Learning

Jul 03, 2019

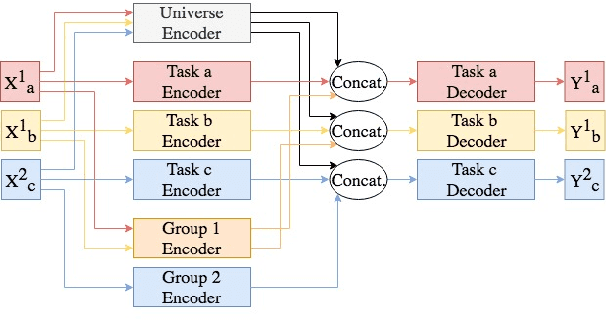

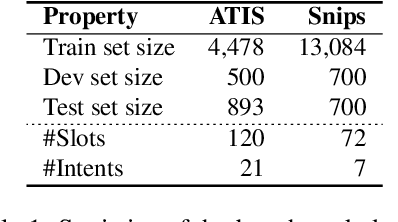

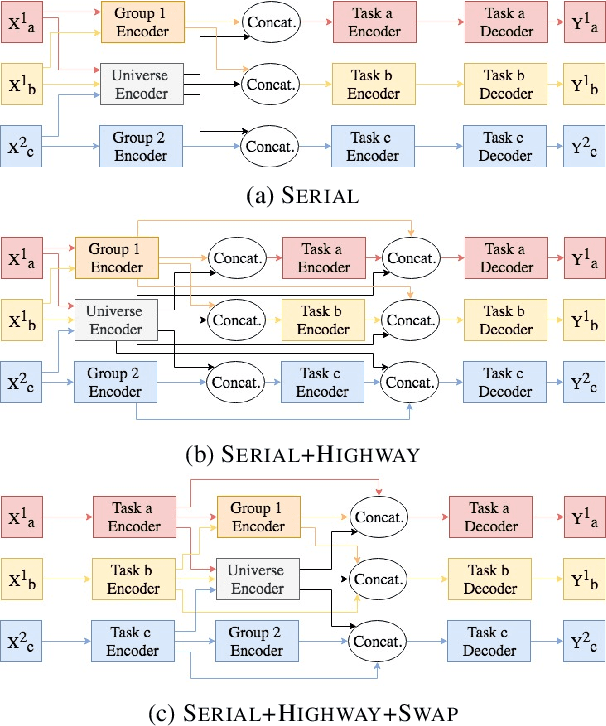

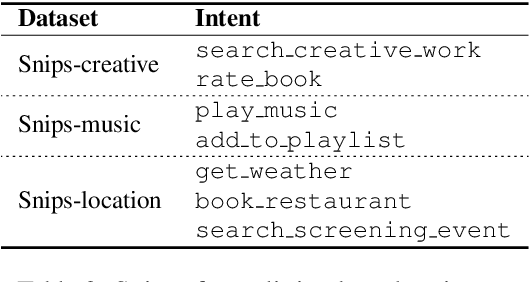

Abstract: We present methods for multi-task learning that take advantage of natural groupings of related tasks. Task groups may be defined along known properties of the tasks, such as task domain or language. Such task groups represent supervised information at the inter-task level and can be encoded into the model. We investigate two variants of neural network architectures that accomplish this, learning different feature spaces at the levels of individual tasks, task groups, and the universe of all tasks: (1) parallel architectures encode each input simultaneously into feature spaces at different levels; (2) serial architectures encode each input successively into feature spaces at different levels in the task hierarchy. We demonstrate the methods on natural language understanding (NLU) tasks, where a grouping of tasks into different task domains leads to improved performance on ATIS, Snips, and a large in-house dataset.
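The difference between the parallel and serial variants can be sketched with toy encoders. This is a minimal illustration under stated assumptions, not the paper's architecture: each "encoder" is a single random tanh layer, the dimensions are arbitrary, the parallel outputs are simply concatenated, and the serial order (universe, then group, then task) is one plausible reading of "successively". All function names are hypothetical.

```python
import math
import random


def make_linear(in_dim, out_dim, rng):
    """Random weight matrix with out_dim rows and in_dim columns."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(out_dim)]


def encode(weights, x):
    """One toy encoder layer: tanh(W x)."""
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]


def parallel_features(x, enc_universe, enc_group, enc_task):
    """Parallel variant: every level encodes the same input; outputs are concatenated."""
    return encode(enc_universe, x) + encode(enc_group, x) + encode(enc_task, x)


def serial_features(x, enc_universe, enc_group, enc_task):
    """Serial variant: each level encodes the output of the level before it."""
    universe_out = encode(enc_universe, x)
    group_out = encode(enc_group, universe_out)
    return encode(enc_task, group_out)


rng = random.Random(0)
IN_DIM, HIDDEN = 6, 4
x = [rng.uniform(-1.0, 1.0) for _ in range(IN_DIM)]

# Parallel: all three encoders read the raw input.
par = parallel_features(
    x,
    make_linear(IN_DIM, HIDDEN, rng),
    make_linear(IN_DIM, HIDDEN, rng),
    make_linear(IN_DIM, HIDDEN, rng),
)

# Serial: only the universe encoder reads the raw input.
ser = serial_features(
    x,
    make_linear(IN_DIM, HIDDEN, rng),
    make_linear(HIDDEN, HIDDEN, rng),
    make_linear(HIDDEN, HIDDEN, rng),
)

print(len(par), len(ser))  # 12 4
```

In a full model, the group and task encoders would be chosen according to the task an input belongs to, while the universe encoder would be shared by all tasks; the sketch uses one encoder per level to keep the data flow visible.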
