Nic Lupfer

How Could AI Support Design Education? A Study Across Fields Fuels Situating Analytics

Apr 26, 2024

Abstract: We use the process and findings from a case study of design educators' practices of assessment and feedback to fuel theorizing about how to make AI useful in service of human experience. We build on Suchman's theory of situated actions. We perform a qualitative study of 11 educators in 5 fields, who teach design processes situated in project-based learning contexts. Through qualitative data gathering and analysis, we derive codes: design process; assessment and feedback challenges; and computational support. We twice invoke creative cognition's family resemblance principle: first, to explain how design instructors already use assessment rubrics, and second, to explain the analogous role for design creativity analytics. No particular trait is necessary or sufficient; each only tends to indicate good design work. Human teachers remain essential. We develop a set of situated design creativity analytics--Fluency, Flexibility, Visual Consistency, Multiscale Organization, and Legible Contrast--to support instructors' efforts by providing on-demand, learning objectives-based assessment and feedback to students. We theorize a methodology, which we call situating analytics, firstly because making AI support living human activity depends on aligning what analytics measure with situated practices. Further, we realize that analytics can become most significant to users by situating them through interfaces that integrate them into the material contexts of their use. Here, this means situating design creativity analytics into actual design environments. Through the case study, we identify situating analytics as a methodology for explaining analytics to users, because the iterative process of alignment with practice has the potential to enable data scientists to derive analytics that make sense as part of, and support, situated human experiences.

Indexing Analytics to Instances: How Integrating a Dashboard can Support Design Education

Apr 08, 2024

Abstract: We investigate how to use AI-based analytics to support design education. The analytics at hand measure multiscale design, that is, students' use of space and scale to visually and conceptually organize their design work. With the goal of making the analytics intelligible to instructors, we developed a research artifact integrating a design analytics dashboard with design instances and the design environment that students use to create them. We theorize about how Suchman's notion of mutual intelligibility requires contextualized investigation of AI in order to develop findings about how analytics work for people. We studied the research artifact in 5 situated course contexts, in 3 departments. A total of 236 students used the multiscale design environment. The 9 instructors who taught those students experienced the analytics via the new research artifact. We derive findings from a qualitative analysis of interviews with instructors regarding their experiences. Instructors reflected on how the analytics and their presentation in the dashboard have the potential to affect design education. We develop research implications addressing: (1) how indexing design analytics in the dashboard to actual design work instances helps design instructors reflect on what they mean and, more broadly, is a technique for how AI-based design analytics can support instructors' assessment and feedback experiences in situated course contexts; and (2) how multiscale design analytics, in particular, have the potential to support design education. By indexing, we mean linking that provides context: here, connecting the numbers of the analytics with visually annotated design work instances.

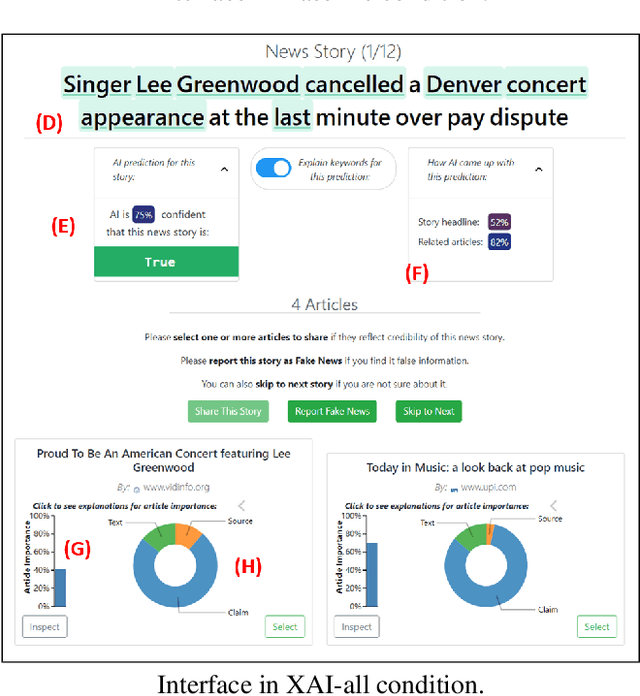

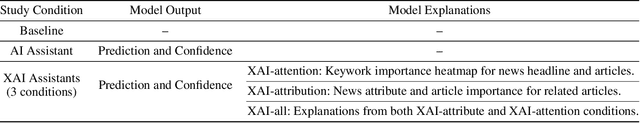

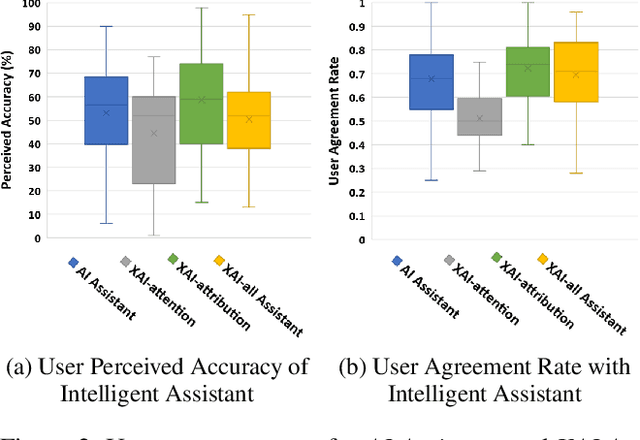

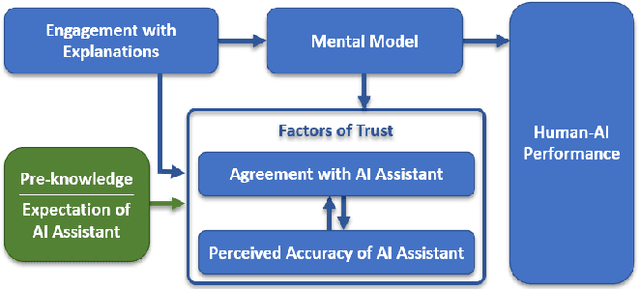

Machine Learning Explanations to Prevent Overtrust in Fake News Detection

Jul 27, 2020

Abstract: Combating fake news and misinformation propagation is a challenging task in the post-truth era. News feed and search algorithms could potentially lead to unintentional large-scale propagation of false and fabricated information, with users being exposed to algorithmically selected false content. Our research investigates the effects of an Explainable AI assistant embedded in news review platforms for combating the propagation of fake news. We design a news reviewing and sharing interface, create a dataset of news stories, and train four interpretable fake news detection algorithms to study the effects of algorithmic transparency on end-users. We present evaluation results and analysis from multiple controlled crowdsourced studies. For a deeper understanding of Explainable AI systems, we discuss interactions between user engagement, mental model, trust, and performance measures in the process of explaining. The study results indicate that explanations helped participants build appropriate mental models of the intelligent assistants in different conditions and adjust their trust to account for model limitations.
