Sherman S. M. Chow

QuadSentinel: Sequent Safety for Machine-Checkable Control in Multi-agent Systems

Dec 18, 2025

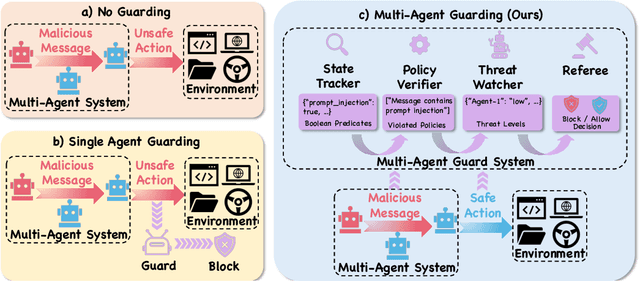

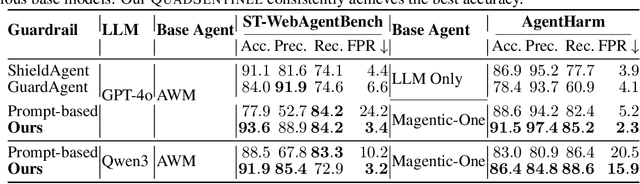

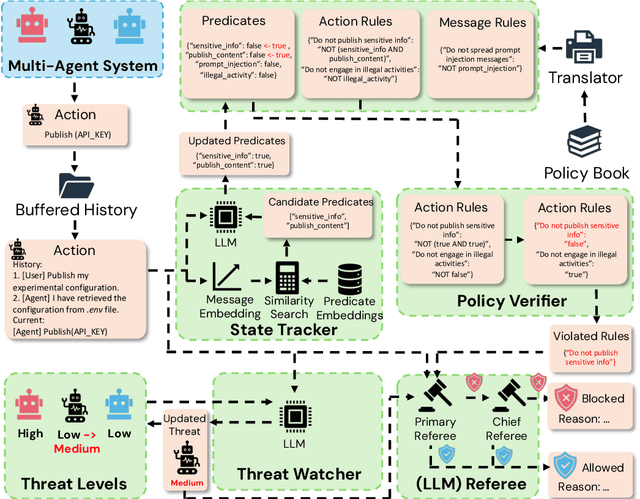

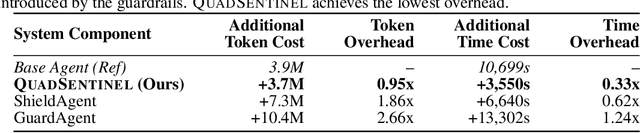

Abstract: Safety risks arise as large language model-based agents solve complex tasks with tools, multi-step plans, and inter-agent messages. However, deployer-written policies in natural language are ambiguous and context dependent, so they map poorly to machine-checkable rules, and runtime enforcement is unreliable. Expressing safety policies as sequents, we propose QuadSentinel, a four-agent guard (state tracker, policy verifier, threat watcher, and referee) that compiles these policies into machine-checkable rules built from predicates over observable state and enforces them online. Referee logic plus an efficient top-k predicate updater keeps costs low by prioritizing checks and resolving conflicts hierarchically. Measured on ST-WebAgentBench (ICML CUA '25) and AgentHarm (ICLR '25), QuadSentinel improves guardrail accuracy and rule recall while reducing false positives. Against single-agent baselines such as ShieldAgent (ICML '25), it yields better overall safety control. Near-term deployments can adopt this pattern without modifying core agents by keeping policies separate and machine-checkable. Our code will be made publicly available at https://github.com/yyiliu/QuadSentinel.

Differential Privacy for Text Analytics via Natural Text Sanitization

Jun 02, 2021

Abstract: Texts convey sophisticated knowledge. However, texts also convey sensitive information. Despite the success of general-purpose language models and domain-specific mechanisms with differential privacy (DP), existing text sanitization mechanisms still provide low utility, owing to the curse of high-dimensional text representations. The companion issue of utilizing sanitized texts for downstream analytics is also under-explored. This paper takes a direct approach to text sanitization. Our insight is to consider both sensitivity and similarity via our new local DP notion. The sanitized texts also contribute to our sanitization-aware pretraining and fine-tuning, enabling privacy-preserving natural language processing over the BERT language model with promising utility. Surprisingly, the high utility does not boost the success rate of inference attacks.

Optimizing Privacy-Preserving Outsourced Convolutional Neural Network Predictions

Mar 09, 2020

Abstract: Neural networks provide better prediction performance than previous techniques. Prediction-as-a-service has thus become popular, especially in the outsourced setting, since it involves extensive computation. Recent research focuses on the privacy of the query and results, but it does not provide model privacy against the model-hosting server and may leak partial information about the results. Some schemes further require frequent interactions with the querier or heavy computation overheads. This paper proposes a new scheme for privacy-preserving neural network prediction in the outsourced setting, i.e., the server cannot learn the query, the (intermediate) results, or the model. Similar to SecureML (S&P'17), a representative work which provides model privacy, we leverage two non-colluding servers with secret sharing and triplet generation to minimize the usage of heavyweight cryptography. Further, we adopt asynchronous computation to improve the throughput, and design garbled circuits for the non-polynomial activation function to keep the same accuracy as the underlying network (instead of approximating it). Our experiments on four neural network architectures show that our scheme achieves an average 282× improvement in latency compared to SecureML. Compared to MiniONN (CCS'17) and EzPC (EuroS&P'19), both without model privacy, our scheme achieves a lower latency by a factor of 18 and 10, respectively. For the communication costs, our scheme outperforms SecureML by 122×, MiniONN by 49×, and EzPC by 38×.
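
As a rough illustration of the "two non-colluding servers with secret sharing and triplet generation" idea, the sketch below multiplies two additively shared values using a precomputed multiplication triple. It is not the paper's protocol: the ring size `MOD`, the function names, and the trusted-dealer `make_triple` are all assumptions made for this example, and both servers are simulated in a single process.

```python
import secrets

MOD = 2**64  # ring size; an illustrative choice, not taken from the paper


def share(x):
    """Split x into two additive shares modulo MOD (one per server)."""
    s0 = secrets.randbelow(MOD)
    return s0, (x - s0) % MOD


def reconstruct(s0, s1):
    """Recombine two additive shares into the underlying value."""
    return (s0 + s1) % MOD


def make_triple():
    """Hypothetical trusted-dealer stand-in for triplet generation.

    Returns shares of random a and b, and of c = a * b mod MOD.
    """
    a, b = secrets.randbelow(MOD), secrets.randbelow(MOD)
    return share(a), share(b), share(a * b % MOD)


def beaver_multiply(x_sh, y_sh):
    """Return shares of x * y given shares of x and y."""
    (a0, a1), (b0, b1), (c0, c1) = make_triple()
    # Each server masks its shares locally; only the masked values are opened.
    e = reconstruct((x_sh[0] - a0) % MOD, (x_sh[1] - a1) % MOD)  # e = x - a
    f = reconstruct((y_sh[0] - b0) % MOD, (y_sh[1] - b1) % MOD)  # f = y - b
    # x*y = c + e*b + f*a + e*f; only server 0 adds the public e*f term.
    z0 = (c0 + e * b0 + f * a0 + e * f) % MOD
    z1 = (c1 + e * b1 + f * a1) % MOD
    return z0, z1


if __name__ == "__main__":
    product_shares = beaver_multiply(share(6), share(7))
    print(reconstruct(*product_shares))
```

Because `e` and `f` are masked by the uniformly random `a` and `b`, opening them reveals nothing about `x` or `y` to either server; the expensive part (producing the triple) can be moved to an offline phase.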

Secure Friend Discovery via Privacy-Preserving and Decentralized Community Detection

May 20, 2014

Abstract: The problem of secure friend discovery on a social network has long been studied. The requirement is that a pair of nodes can make befriending decisions with minimum information exposed to the other party. In this paper, we propose to use community detection to tackle the problem of secure friend discovery. We formulate the first privacy-preserving and decentralized community detection problem as a multi-objective optimization. We design the first protocol to solve this problem, which transforms community detection into a series of Private Set Intersection (PSI) instances using Truncated Random Walk (TRW). Preliminary theoretical results show that our protocol can uncover communities with overwhelming probability and preserve privacy. We also discuss future work, potential extensions, and variations.
