Shaochu Wang

Depth-induced Saliency Comparison Network for Diagnosis of Alzheimer's Disease via Jointly Analysis of Visual Stimuli and Eye Movements

Mar 15, 2024

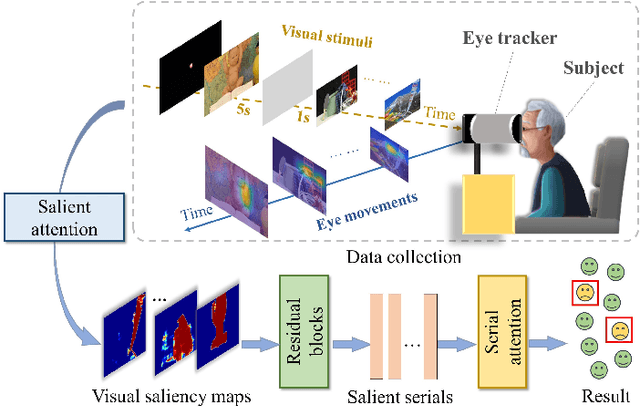

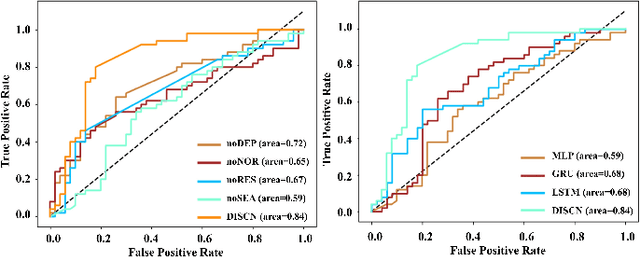

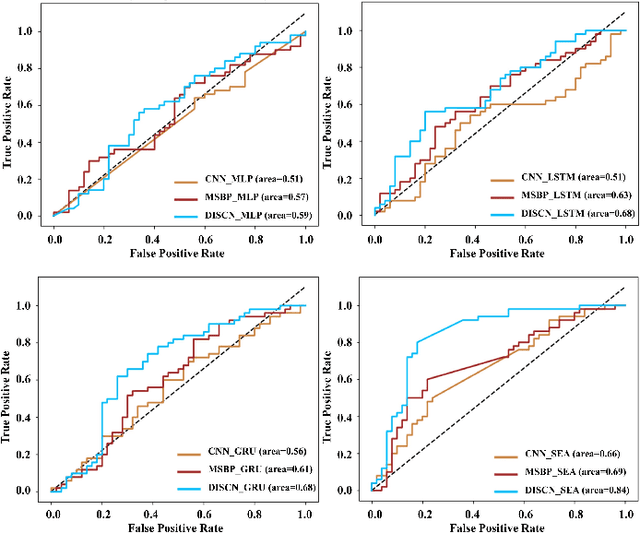

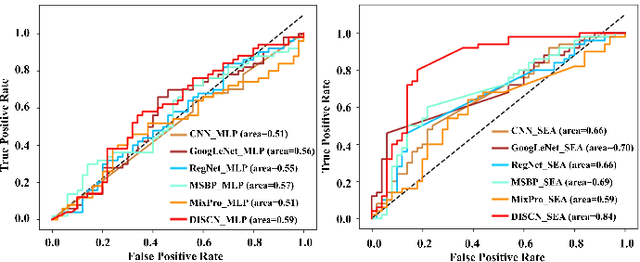

Abstract: Early diagnosis of Alzheimer's Disease (AD) is very important for subsequent medical treatment, and eye movements under special visual stimuli may serve as a potential non-invasive biomarker for detecting cognitive abnormalities in AD patients. In this paper, we propose a depth-induced saliency comparison network (DISCN) for eye movement analysis, which may be used for diagnosing Alzheimer's disease. In DISCN, a salient attention module fuses normal eye movements with RGB and depth maps of visual stimuli using hierarchical salient attention (SAA) to evaluate comprehensive saliency maps, which contain information from both visual stimuli and normal eye movement behaviors. In addition, we introduce a serial attention module (SEA) to emphasize the most abnormal eye movement behaviors, reducing personal bias for a more robust result. According to our experiments, DISCN achieves consistent validity in classifying the eye movements of AD patients and normal controls.

Deep Learning-based Inertial Odometry for Pedestrian Tracking using Attention Mechanism and Res2Net Module

May 20, 2022

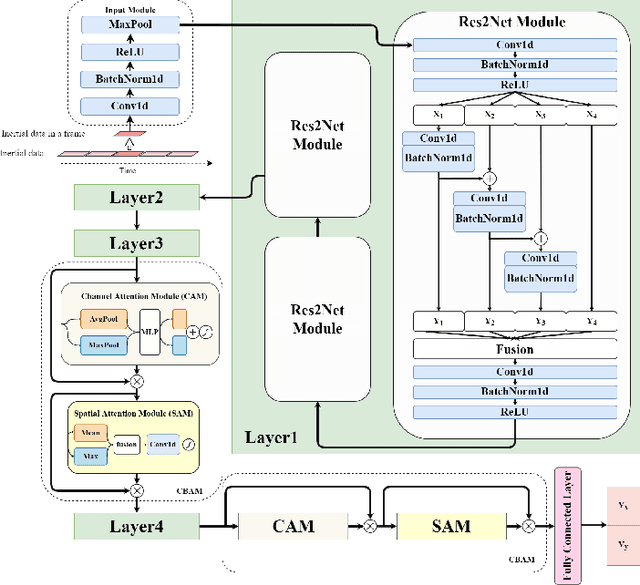

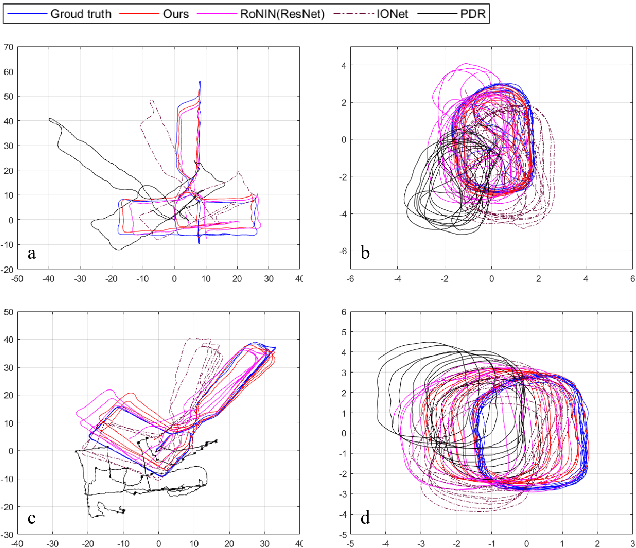

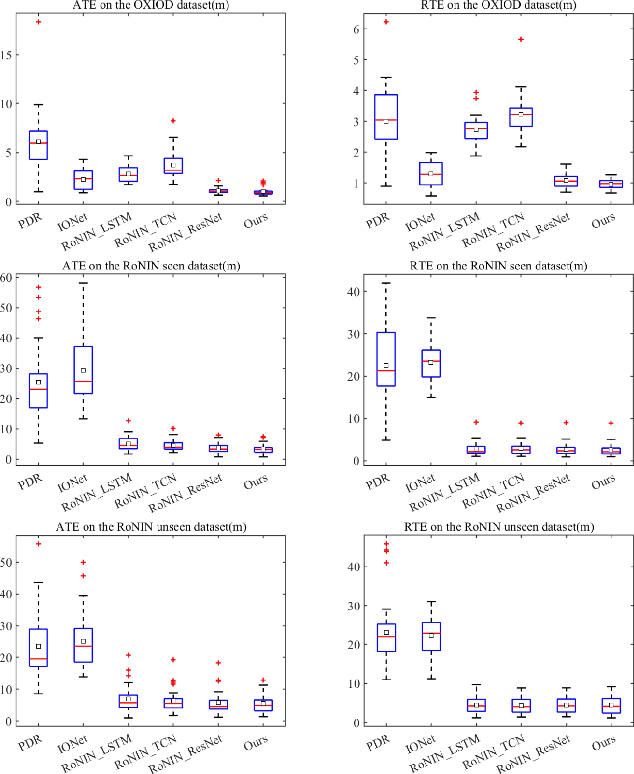

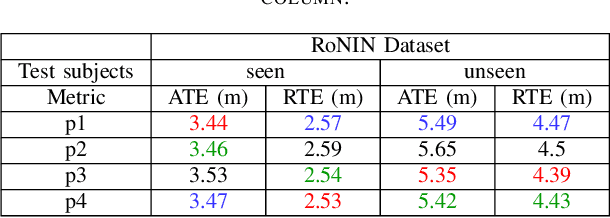

Abstract: Pedestrian dead reckoning is a challenging task because errors from low-cost inertial sensors accumulate over time. Recent research has shown that deep learning methods can achieve impressive performance in handling this issue. In this letter, we propose inertial odometry using a deep learning-based velocity estimation method. A deep neural network based on Res2Net modules and two convolutional block attention modules is leveraged to recover the potential connection between the horizontal velocity vector and raw inertial data from a smartphone. Our network is trained using only fifty percent of the data in the public inertial odometry dataset (RoNIN). It is then validated on the RoNIN testing dataset and another public inertial odometry dataset (OXIOD). Compared with the traditional step-length and heading system-based algorithm, our approach decreases the absolute translation error (ATE) by 76%-86%. In addition, compared with the state-of-the-art deep learning method (RoNIN), our method improves the ATE by 6%-31.4%.
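As a rough illustration of how predicted horizontal velocities relate to a trajectory and to an ATE figure, the sketch below integrates 2D velocity samples into positions and scores them against a reference path. It assumes a fixed sampling interval and takes ATE to be the root-mean-square of point-wise position errors; the function names `integrate_velocity` and `absolute_translation_error` are hypothetical and are not taken from the paper or from the RoNIN code.

```python
import math


def integrate_velocity(velocities, dt, start=(0.0, 0.0)):
    """Accumulate 2D velocity samples (m/s) into positions (m).

    Assumes a constant time step `dt` in seconds (an illustrative assumption).
    Returns len(velocities) + 1 positions, beginning with `start`.
    """
    x, y = start
    positions = [(x, y)]
    for vx, vy in velocities:
        x += vx * dt
        y += vy * dt
        positions.append((x, y))
    return positions


def absolute_translation_error(estimated, reference):
    """RMSE of point-wise Euclidean distances between two trajectories.

    Both trajectories must be non-empty and have the same number of points.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    squared = [
        (ex - rx) ** 2 + (ey - ry) ** 2
        for (ex, ey), (rx, ry) in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    predicted = integrate_velocity([(1.0, 0.0), (1.0, 0.0)], dt=0.5)
    ground_truth = [(0.0, 0.0), (0.5, 0.1), (1.0, 0.2)]
    print(absolute_translation_error(predicted, ground_truth))
```

Because each position is a running sum of velocity estimates, a small bias in velocity grows into a large position error over a long walk, which is why a trajectory-level metric is reported rather than per-sample velocity error.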

A Novel Unified Stereo Stimuli based Binocular Eye-Tracking System for Accurate 3D Gaze Estimation

Apr 25, 2021

Abstract: In addition to the high cost and complex setup, the main reason for the limitation of the three-dimensional (3D) display is the problem of accurately estimating the user's current point-of-gaze (PoG) in 3D space. In this paper, we present a novel noncontact technique for PoG estimation in a stereoscopic environment, which integrates a 3D stereoscopic display system and an eye-tracking system. The 3D stereoscopic display system can provide users with a friendly and immersive high-definition viewing experience without wearing any equipment. To accurately locate the user's 3D PoG in the field of view, we build a regression-based 3D eye-tracking model with the eye movement data and stereo stimulus videos as input. In addition, to train an optimal regression model, we design and annotate a dataset that contains 30 users' eye-tracking data corresponding to two designed stereo test scenes. This dataset introduces feature vectors between eye region landmarks for gaze vector estimation and a combined feature set for gaze depth estimation. Moreover, five traditional regression models are trained and evaluated on this dataset. Experimental results show that the average errors of the 3D PoG are about 0.90 cm on the X-axis, 0.83 cm on the Y-axis, and 1.48 cm and 0.12 m along the Z-axis for scene-depth ranges of 75 cm and 8 m, respectively.
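Per-axis average errors like those reported above can be computed as in the following minimal sketch. It assumes the error is the mean absolute difference between predicted and ground-truth PoG coordinates on each axis, which the abstract does not state explicitly; the function name `mean_abs_error_per_axis` is hypothetical.

```python
def mean_abs_error_per_axis(predicted, actual):
    """Mean absolute error on X, Y, and Z between paired 3D points.

    `predicted` and `actual` are equal-length sequences of (x, y, z) tuples
    expressed in the same unit. Returns a tuple (err_x, err_y, err_z).
    """
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("point lists must be non-empty and equally long")
    sums = [0.0, 0.0, 0.0]
    for p, a in zip(predicted, actual):
        for axis in range(3):
            sums[axis] += abs(p[axis] - a[axis])
    count = len(predicted)
    return tuple(total / count for total in sums)


if __name__ == "__main__":
    # Made-up coordinates purely for illustration, not data from the paper.
    estimates = [(1.0, 2.0, 3.0), (3.0, 2.0, 1.0)]
    targets = [(0.0, 2.0, 5.0), (2.0, 4.0, 1.0)]
    print(mean_abs_error_per_axis(estimates, targets))
```

Reporting each axis separately matters here because depth (Z) error is measured over a much larger range than the on-screen X and Y errors, so a single combined figure would be dominated by depth.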
