Shalom Lappin

University of Gothenburg, Queen Mary University of London, and King's College London

Coreference as an indicator of context scope in multimodal narrative

Mar 07, 2025

Abstract: We demonstrate that large multimodal language models differ substantially from humans in the distribution of coreferential expressions in a visual storytelling task. We introduce a number of metrics to quantify the characteristics of coreferential patterns in both human- and machine-written texts. Humans distribute coreferential expressions in a way that maintains consistency across texts and images, interleaving references to different entities in a highly varied way. Machines are less able to track mixed references, despite achieving perceived improvements in generation quality.

Assessing the Unitary RNN as an End-to-End Compositional Model of Syntax

Aug 11, 2022

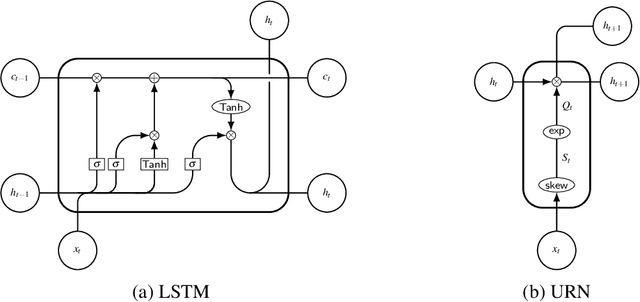

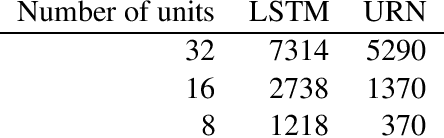

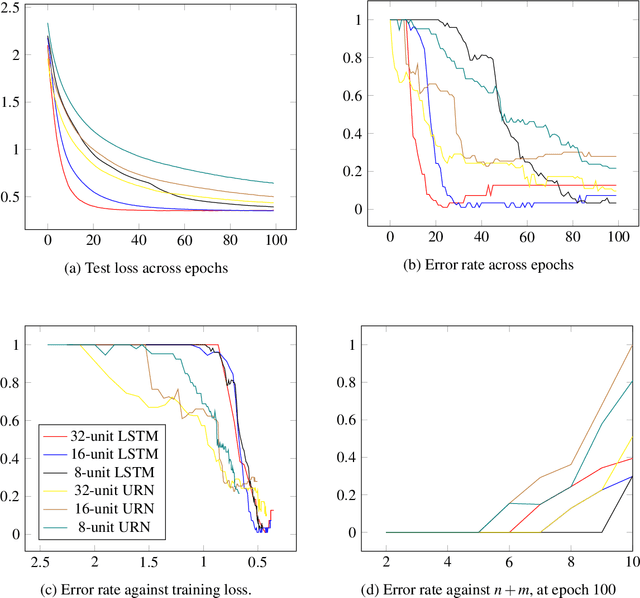

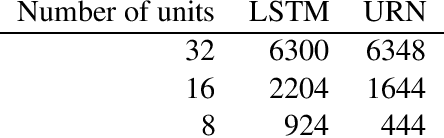

Abstract: We show that both an LSTM and a unitary-evolution recurrent neural network (URN) can achieve encouraging accuracy on two types of syntactic patterns: context-free long-distance agreement, and mildly context-sensitive cross-serial dependencies. This work extends recent experiments on deeply nested context-free long-distance dependencies, with similar results. URNs differ from LSTMs in that they avoid non-linear activation functions, and they apply matrix multiplication to word embeddings encoded as unitary matrices. This permits them to retain all information in the processing of an input string over arbitrary distances. It also causes them to satisfy strict compositionality. URNs constitute a significant advance in the search for explainable models in deep learning applied to NLP.

* In Proceedings E2ECOMPVEC, arXiv:2208.05313

How Furiously Can Colourless Green Ideas Sleep? Sentence Acceptability in Context

Apr 02, 2020

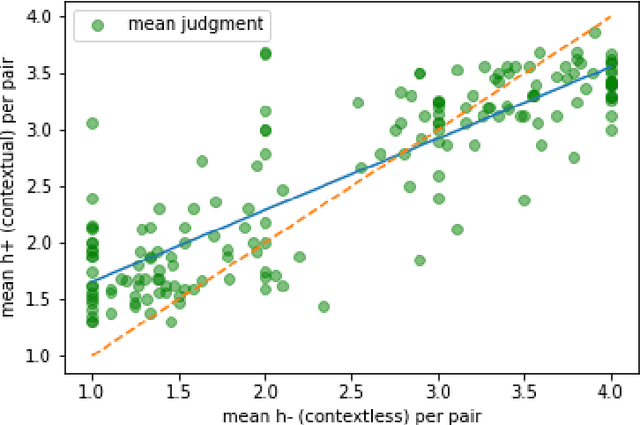

Abstract: We study the influence of context on sentence acceptability. First we compare the acceptability ratings of sentences judged in isolation, with a relevant context, and with an irrelevant context. Our results show that context induces a cognitive load for humans, which compresses the distribution of ratings. Moreover, in relevant contexts we observe a discourse coherence effect which uniformly raises acceptability. Next, we test unidirectional and bidirectional language models in their ability to predict acceptability ratings. The bidirectional models show very promising results, with the best model achieving a new state-of-the-art for unsupervised acceptability prediction. The two sets of experiments provide insights into the cognitive aspects of sentence processing and central issues in the computational modelling of text and discourse.

The Effect of Context on Metaphor Paraphrase Aptness Judgments

Sep 04, 2018

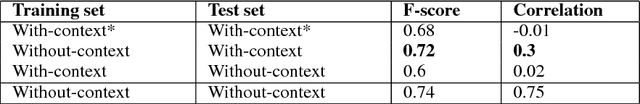

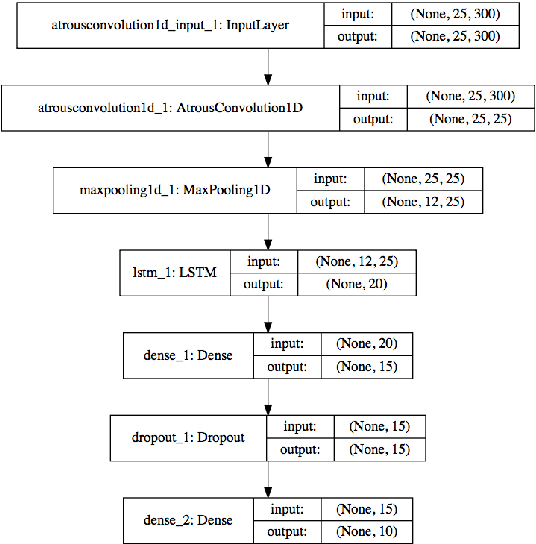

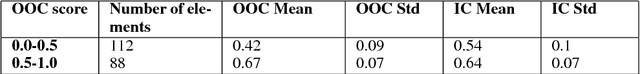

Abstract: We conduct two experiments to study the effect of context on metaphor paraphrase aptness judgments. The first is an AMT crowdsourcing task in which speakers rank metaphor paraphrase candidate sentence pairs in short document contexts for paraphrase aptness. In the second we train a composite DNN to predict these human judgments, first in binary classifier mode, and then as gradient ratings. We found that for both mean human judgments and our DNN's predictions, adding document context compresses the aptness scores towards the center of the scale, raising low out-of-context ratings and decreasing high out-of-context scores. We offer a provisional explanation for this compression effect.

Compositionality, Synonymy, and the Systematic Representation of Meaning

Jan 09, 2000

Abstract: In a recent issue of Linguistics and Philosophy Kazmi and Pelletier (1998) (K&P), and Westerstahl (1998) criticize Zadrozny's (1994) argument that any semantics can be represented compositionally. The argument is based upon Zadrozny's theorem that every meaning function m can be encoded by a function \mu such that (i) for any expression E of a specified language L, m(E) can be recovered from \mu(E), and (ii) \mu is a homomorphism from the syntactic structures of L to interpretations of L. In both cases, the primary motivation for the objections brought against Zadrozny's argument is the view that his encoding of the original meaning function does not properly reflect the synonymy relations posited for the language. In this paper, we argue that these technical criticisms do not go through. In particular, we prove that \mu properly encodes synonymy relations, i.e. if two expressions are synonymous, then their compositional meanings are identical. This corrects some misconceptions about the function \mu, e.g. Janssen (1997). We suggest that the reason that semanticists have been anxious to preserve compositionality as a significant constraint on semantic theory is that it has been mistakenly regarded as a condition that must be satisfied by any theory that sustains a systematic connection between the meaning of an expression and the meanings of its parts. Recent developments in formal and computational semantics show that systematic theories of meanings need not be compositional.
