Shahab Nikkhoo

University of California, Riverside

PIMbot: Policy and Incentive Manipulation for Multi-Robot Reinforcement Learning in Social Dilemmas

Jul 29, 2023

Abstract: Recent research has demonstrated the potential of reinforcement learning (RL) in enabling effective multi-robot collaboration, particularly in social dilemmas where robots face a trade-off between self-interests and collective benefits. However, environmental factors such as miscommunication and adversarial robots can impact cooperation, making it crucial to explore how multi-robot communication can be manipulated to achieve different outcomes. This paper presents a novel approach, namely PIMbot, to manipulating the reward function in multi-robot collaboration through two distinct forms of manipulation: policy and incentive manipulation. Our work introduces a new angle for manipulation in recent multi-agent RL social dilemmas that utilize a unique reward function for incentivization. By utilizing our proposed PIMbot mechanisms, a robot is able to manipulate the social dilemma environment effectively. PIMbot has the potential for both positive and negative impacts on the task outcome, where positive impacts lead to faster convergence to the global optimum and maximized rewards for any chosen robot. Conversely, negative impacts can have a detrimental effect on the overall task performance. We present comprehensive experimental results that demonstrate the effectiveness of our proposed methods in the Gazebo-simulated multi-robot environment. Our work provides insights into how inter-robot communication can be manipulated and has implications for various robotic applications.

MIMONet: Multi-Input Multi-Output On-Device Deep Learning

Jul 22, 2023

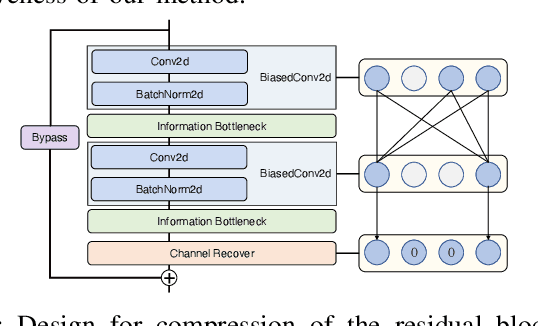

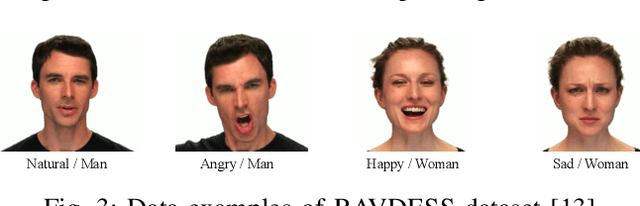

Abstract: Future intelligent robots are expected to process multiple inputs simultaneously (such as image and audio data) and generate multiple outputs accordingly (such as gender and emotion), similar to humans. Recent research has shown that multi-input single-output (MISO) deep neural networks (DNN) outperform traditional single-input single-output (SISO) models, representing a significant step towards this goal. In this paper, we propose MIMONet, a novel on-device multi-input multi-output (MIMO) DNN framework that achieves high accuracy and on-device efficiency in terms of critical performance metrics such as latency, energy, and memory usage. Leveraging existing SISO model compression techniques, MIMONet develops a new deep-compression method that is specifically tailored to MIMO models. This new method explores unique yet non-trivial properties of the MIMO model, resulting in boosted accuracy and on-device efficiency. Extensive experiments on three embedded platforms commonly used in robotic systems, as well as a case study using the TurtleBot3 robot, demonstrate that MIMONet achieves higher accuracy and superior on-device efficiency compared to state-of-the-art SISO and MISO models, as well as a baseline MIMO model we constructed. Our evaluation highlights the real-world applicability of MIMONet and its potential to significantly enhance the performance of intelligent robotic systems.

Pareto-Secure Machine Learning (PSML): Fingerprinting and Securing Inference Serving Systems

Jul 03, 2023

Abstract: With the emergence of large foundational models, model-serving systems are becoming popular. In such a system, users send queries to the server and specify the desired performance metrics (e.g., accuracy and latency). The server maintains a set of models (model zoo) in the back-end and serves the queries based on the specified metrics. This paper examines the security, specifically robustness against model extraction attacks, of such systems. Existing black-box attacks cannot be directly applied to extract a victim model, as models hide among the model zoo behind the inference serving interface, and attackers cannot identify which model is being used. An intermediate step is required to ensure that every input query gets the output from the victim model. To this end, we propose a query-efficient fingerprinting algorithm to enable the attacker to trigger any desired model consistently. We show that by using our fingerprinting algorithm, model extraction can have fidelity and accuracy scores within $1\%$ of the scores obtained if attacking in a single-model setting and up to $14.6\%$ gain in accuracy and up to $7.7\%$ gain in fidelity compared to the naive attack. Finally, we counter the proposed attack with a noise-based defense mechanism that thwarts fingerprinting by adding noise to the specified performance metrics. Our defense strategy reduces the attack's accuracy and fidelity by up to $9.8\%$ and $4.8\%$, respectively (on medium-sized model extraction). We show that the proposed defense induces a fundamental trade-off between the level of protection and system goodput, achieving configurable and significant victim model extraction protection while maintaining acceptable goodput ($>80\%$). We provide anonymous access to our code.
