Shahab Jalalvand

1SPU: 1-step Speech Processing Unit

Nov 10, 2023

Abstract:Recent studies have made some progress in refining end-to-end (E2E) speech recognition encoders by applying Connectionist Temporal Classification (CTC) loss to enhance named entity recognition within transcriptions. However, these methods have been constrained by their exclusive use of the ASCII character set, allowing only a limited array of semantic labels. We propose 1SPU, a 1-step Speech Processing Unit which can recognize speech events (e.g., speaker change) or NL events (intent, emotion) while also transcribing vocal content. It extends the E2E automatic speech recognition (ASR) system's vocabulary by adding a set of unused placeholder symbols, conceptually akin to the <pad> tokens used in sequence modeling. These placeholders are then assigned to represent semantic events (in the form of tags) and are integrated into the transcription process as distinct tokens. 1SPU demonstrates notable improvements on the SLUE benchmark and yields results that are on par with those for the SLURP dataset. Additionally, we provide a visual analysis of the system's proficiency in accurately pinpointing meaningful tokens over time, illustrating the enhancement in transcription quality through the utilization of supplementary semantic tags.

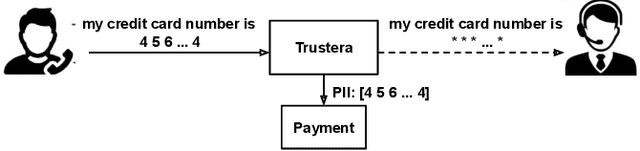

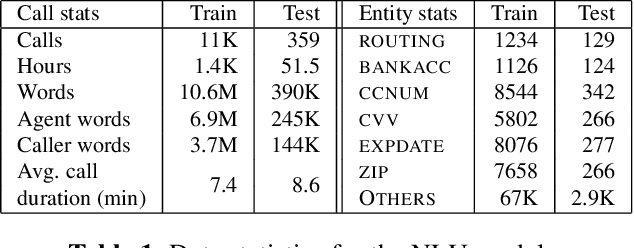

Trustera: A Live Conversation Redaction System

Mar 16, 2023

Abstract:We present Trustera, the first functional system that redacts personally identifiable information (PII) in real-time spoken conversations to remove agents' need to hear sensitive information while preserving the naturalness of live customer-agent conversations. As opposed to post-call redaction, audio masking starts as soon as the customer begins speaking a PII entity. This significantly reduces the risk of PII being intercepted or stored in insecure data storage. Trustera's architecture consists of a pipeline of automatic speech recognition, natural language understanding, and a live audio redactor module. The system's goal is three-fold: redact entities that are PII, mask the audio that goes to the agent, and at the same time capture the entity, so that the captured PII can be used for a payment transaction or caller identification. Trustera is currently being used by thousands of agents to secure customers' sensitive information.

E2E Spoken Entity Extraction for Virtual Agents

Mar 01, 2023

Abstract:This paper reimagines some aspects of speech processing using speech encoders, specifically about extracting entities directly from speech, with no intermediate textual representation. In human-computer conversations, extracting entities such as names, postal addresses and email addresses from speech is a challenging task. In this paper, we study the impact of fine-tuning pre-trained speech encoders on extracting spoken entities in human-readable form directly from speech without the need for text transcription. We illustrate that such a direct approach optimizes the encoder to transcribe only the entity relevant portions of speech, ignoring the superfluous portions such as carrier phrases and spellings of entities. In the context of dialogs from an enterprise virtual agent, we demonstrate that the 1-step approach outperforms the typical 2-step cascade of first generating lexical transcriptions followed by text-based entity extraction for identifying spoken entities.

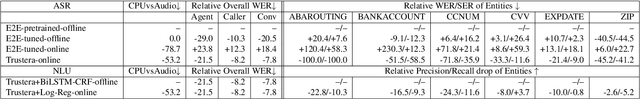

Seq-2-Seq based Refinement of ASR Output for Spoken Name Capture

Mar 29, 2022

Abstract:Person name capture from human speech is a difficult task in human-machine conversations. In this paper, we propose a novel approach to capture person names from caller utterances in response to the prompt "say and spell your first/last name". Inspired by work on spell correction, disfluency removal and text normalization, we propose a lightweight Seq-2-Seq system which generates a name spelling from varying user input. Our proposed method outperforms a strong baseline based on an LM-driven rule-based approach.

Unsupervised Spoken Utterance Classification

Jul 02, 2021

Abstract:An intelligent virtual assistant (IVA) enables effortless conversations in call routing through spoken utterance classification (SUC), which is a special form of spoken language understanding (SLU). Building a SUC system requires a large amount of supervised in-domain data that is not always available. In this paper, we introduce an unsupervised spoken utterance classification approach (USUC) that does not require any in-domain data except for the intent labels and a few paraphrases per intent. USUC consists of a KNN classifier (K=1) and a complex embedding model trained on a large unsupervised customer service corpus. Among all embedding models, we demonstrate that ELMo works best for USUC. However, an ELMo model is too slow to be used at run-time for call routing. To resolve this issue, we first compute the uni- and bi-gram embedding vectors offline and build a lookup table of n-grams and their corresponding embedding vectors. Then we use this table to compute sentence embedding vectors at run-time, along with back-off techniques for unseen n-grams. Experiments show that USUC outperforms the traditional utterance classification methods by reducing the classification error rate from 32.9% to 27.0% without requiring supervised data. Moreover, our lookup and back-off technique increases the processing speed from 16 utterances per second to 118 utterances per second.
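The lookup-and-back-off idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the table contents, the 2-dimensional toy vectors, the averaging scheme, the bigram-to-unigram back-off rule and the names `NGRAM_TABLE`, `sentence_embedding` and `classify` are all assumptions made for illustration. In the paper the vectors come from an ELMo model computed offline.

```python
import math

# Hypothetical offline lookup table: n-gram -> embedding vector.
# The toy 2-d values are made up; real vectors would be precomputed by an embedding model.
NGRAM_TABLE = {
    "pay bill": [0.9, 0.1],
    "pay": [0.8, 0.2],
    "bill": [0.7, 0.3],
    "cancel": [0.1, 0.9],
    "service": [0.2, 0.8],
}
DIM = 2

def sentence_embedding(text, table=NGRAM_TABLE):
    """Average bigram vectors, backing off to unigram vectors for unseen bigrams."""
    tokens = text.lower().split()
    vectors = []
    if len(tokens) == 1 and tokens[0] in table:
        vectors.append(table[tokens[0]])
    for left, right in zip(tokens, tokens[1:]):
        bigram = f"{left} {right}"
        if bigram in table:
            vectors.append(table[bigram])
        else:
            # Back-off (assumed rule): use whichever of the two unigrams is known.
            vectors.extend(table[t] for t in (left, right) if t in table)
    if not vectors:
        return [0.0] * DIM  # nothing known about this utterance
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(DIM)]

def cosine(a, b):
    """Cosine similarity; returns 0.0 when either vector is all zeros."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

def classify(utterance, paraphrases):
    """1-nearest-neighbour: return the intent of the most similar paraphrase."""
    query = sentence_embedding(utterance)
    best = max(paraphrases, key=lambda p: cosine(query, sentence_embedding(p[0])))
    return best[1]

# A few paraphrases per intent are the only labelled resource (toy examples).
PARAPHRASES = [("pay bill", "billing"), ("cancel service", "cancellation")]
```

Calling `classify("i want to pay my bill", PARAPHRASES)` never touches the slow embedding model at run-time; every vector is a dictionary lookup, which is where the reported speed-up would come from.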

Automatic Data Expansion for Customer-care Spoken Language Understanding

Sep 27, 2018

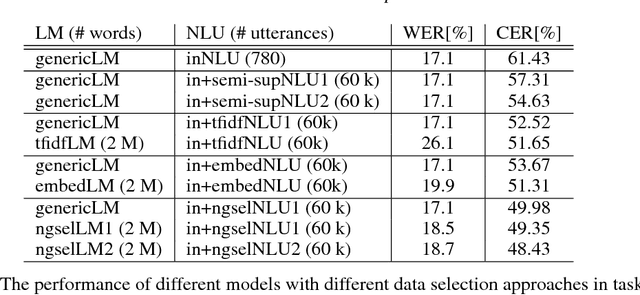

Abstract:Spoken language understanding (SLU) systems are widely used in handling customer-care calls. A traditional SLU system consists of an acoustic model (AM) and a language model (LM) that are used to decode the utterance and a natural language understanding (NLU) model that predicts the intent. While the AM can be shared across different domains, LM and NLU models need to be trained specifically for every new task. However, preparing enough data to train these models is prohibitively expensive. In this paper, we introduce an efficient method to expand the limited in-domain data. The process starts with training a preliminary NLU model based on logistic regression on the in-domain data. Since the features are based on n-grams with n = 1, 2, we can detect the most informative n-grams for each intent class. Using these n-grams, we find the samples in the out-of-domain corpus that 1) contain the desired n-gram and/or 2) have a similar intent label. The ones which meet the first constraint are used to train a new LM and the ones that meet both constraints are used to train a new NLU model. Our results on two divergent experimental setups show that the proposed approach reduces the absolute classification error rate (CER) by 30% compared to the preliminary models and that it significantly outperforms traditional data expansion algorithms such as the ones based on semi-supervised learning, TF-IDF and embedding vectors.
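The two selection constraints described in this abstract can be sketched as a simple filter. This is an illustrative sketch only: the function name `expand_data`, the input shapes, and the use of exact label equality as a stand-in for "similar intent label" are assumptions, and the informative n-grams are taken as given rather than derived from a logistic regression model.

```python
def expand_data(informative_ngrams, out_of_domain):
    """Select out-of-domain samples for LM and NLU training.

    informative_ngrams: dict mapping intent -> set of uni-/bi-gram strings
        (assumed to come from the preliminary NLU model).
    out_of_domain: list of (text, label) pairs.
    Returns (lm_data, nlu_data).
    """
    lm_data, nlu_data = [], []
    for text, label in out_of_domain:
        tokens = text.lower().split()
        # All unigrams and bigrams present in this sample.
        grams = set(tokens) | {" ".join(pair) for pair in zip(tokens, tokens[1:])}
        # Intents whose informative n-grams occur in the sample (constraint 1).
        matched = {intent for intent, ngrams in informative_ngrams.items()
                   if grams & ngrams}
        if not matched:
            continue
        lm_data.append(text)  # constraint 1 only: usable for the LM
        if label in matched:  # constraints 1 and 2: usable for the NLU model
            nlu_data.append((text, label))
    return lm_data, nlu_data
```

For example, with `{"billing": {"pay", "my bill"}}` as the informative n-grams, a sample such as `("I want to pay now", "billing")` would land in both sets, while the same text with an unrelated label would only feed the LM.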

Automatic Quality Estimation for ASR System Combination

Jun 22, 2017

Abstract:Recognizer Output Voting Error Reduction (ROVER) has been widely used for system combination in automatic speech recognition (ASR). In order to select the most appropriate words to insert at each position in the output transcriptions, some ROVER extensions rely on critical information such as confidence scores and other ASR decoder features. This information, which is not always available, highly depends on the decoding process and sometimes tends to overestimate the real quality of the recognized words. In this paper we propose a novel variant of ROVER that takes advantage of ASR quality estimation (QE) for ranking the transcriptions at "segment level" instead of: i) relying on confidence scores, or ii) feeding ROVER with randomly ordered hypotheses. We first introduce an effective set of features to compensate for the absence of ASR decoder information. Then, we apply QE techniques to perform accurate hypothesis ranking at segment-level before starting the fusion process. The evaluation is carried out on two different tasks, in which we respectively combine hypotheses coming from independent ASR systems and multi-microphone recordings. In both tasks, it is assumed that the ASR decoder information is not available. The proposed approach significantly outperforms standard ROVER and it is competitive with two strong oracles that exploit prior knowledge about the real quality of the hypotheses to be combined. Compared to standard ROVER, the absolute WER improvements in the two evaluation scenarios range from 0.5% to 7.3%.

DNN adaptation by automatic quality estimation of ASR hypotheses

Feb 06, 2017

Abstract:In this paper we propose to exploit the automatic Quality Estimation (QE) of ASR hypotheses to perform the unsupervised adaptation of a deep neural network modeling acoustic probabilities. Our hypothesis is that significant improvements can be achieved by: i) automatically transcribing the evaluation data we are currently trying to recognise, and ii) selecting from it a subset of "good quality" instances based on the word error rate (WER) scores predicted by a QE component. To validate this hypothesis, we run several experiments on the evaluation data sets released for the CHiME-3 challenge. First, we operate in oracle conditions in which manual transcriptions of the evaluation data are available, thus allowing us to compute the "true" sentence WER. In this scenario, we perform the adaptation with variable amounts of data, which are characterised by different levels of quality. Then, we move to realistic conditions in which the manual transcriptions of the evaluation data are not available. In this case, the adaptation is performed on data selected according to the WER scores "predicted" by a QE component. Our results indicate that: i) QE predictions allow us to closely approximate the adaptation results obtained in oracle conditions, and ii) the overall ASR performance based on the proposed QE-driven adaptation method is significantly better than the strong, most recent, CHiME-3 baseline.
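The data selection step in this abstract, keeping only "good quality" automatic transcriptions according to predicted WER, might look like the following sketch. The function name `select_adaptation_data`, the threshold value and the input format are assumptions for illustration; the abstract does not specify how the subset is cut.

```python
def select_adaptation_data(hypotheses, predicted_wer, max_wer=0.2):
    """Keep hypotheses whose QE-predicted WER is at most max_wer, best first.

    hypotheses: list of automatic transcriptions (strings).
    predicted_wer: list of predicted sentence-level WER scores, same length.
    max_wer: hypothetical quality threshold (an assumption, not from the paper).
    """
    if len(hypotheses) != len(predicted_wer):
        raise ValueError("hypotheses and predicted_wer must have the same length")
    # Pair each hypothesis with its score, filter by threshold, sort by quality.
    kept = [(wer, hyp) for hyp, wer in zip(hypotheses, predicted_wer) if wer <= max_wer]
    kept.sort(key=lambda pair: pair[0])
    return [hyp for _, hyp in kept]
```

In the oracle condition described above, the same function could be called with true sentence WER values in place of the predicted ones, which makes the two settings directly comparable.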
