Sergii Skakun

Delineate Anything Flow: Fast, Country-Level Field Boundary Detection from Any Source

Nov 17, 2025

Abstract: Accurate delineation of agricultural field boundaries from satellite imagery is essential for land management and crop monitoring, yet existing methods often produce incomplete boundaries, merge adjacent fields, and struggle to scale. We present the Delineate Anything Flow (DelAnyFlow) methodology, a resolution-agnostic approach for large-scale field boundary mapping. DelAnyFlow combines the DelAny instance segmentation model, based on a YOLOv11 backbone and trained on the large-scale Field Boundary Instance Segmentation-22M (FBIS 22M) dataset, with a structured post-processing, merging, and vectorization sequence to generate topologically consistent vector boundaries. FBIS 22M, the largest dataset of its kind, contains 672,909 multi-resolution image patches (0.25-10 m) and 22.9 million validated field instances. The DelAny model delivers state-of-the-art accuracy with over 100% higher mAP and 400x faster inference than SAM2. DelAny demonstrates strong zero-shot generalization and supports national-scale applications: using Sentinel-2 data for 2024, DelAnyFlow generated a complete field boundary layer for Ukraine (603,000 km²) in under six hours on a single workstation. DelAnyFlow outputs significantly improve boundary completeness relative to operational products from Sinergise Solutions and NASA Harvest, particularly in smallholder and fragmented systems (0.25-1 ha). For Ukraine, DelAnyFlow delineated 3.75M fields at 5 m and 5.15M at 2.5 m, compared to 2.66M detected by Sinergise Solutions and 1.69M by NASA Harvest. This work delivers a scalable, cost-effective methodology for field delineation in regions lacking digital cadastral data. A project landing page with links to model weights, code, national-scale vector outputs, and dataset is available at https://lavreniuk.github.io/Delineate-Anything/.

When are Foundation Models Effective? Understanding the Suitability for Pixel-Level Classification Using Multispectral Imagery

Apr 17, 2024

Abstract: Foundation models, i.e., very large deep learning models, have demonstrated impressive performance in various language and vision tasks that is otherwise difficult to reach using smaller models. The major success of GPT-type language models is particularly exciting and raises expectations about the potential of foundation models in other domains, including satellite remote sensing. In this context, great efforts have been made to build foundation models and test their capabilities in broader applications; examples include Prithvi by NASA-IBM, Segment-Anything-Model, ViT, etc. This leads to an important question: are foundation models always a suitable choice for different remote sensing tasks, and if not, when are they suitable? This work aims to enhance the understanding of the status and suitability of foundation models for pixel-level classification using multispectral imagery at moderate resolution, through comparisons with traditional machine learning (ML) and regular-size deep learning models. Interestingly, the results reveal that in many scenarios traditional ML models still have similar or better performance compared to foundation models, especially for tasks where texture is less useful for classification. On the other hand, deep learning models did show more promising results for tasks where labels partially depend on texture (e.g., burn scar), while the difference in performance between foundation models and deep learning models is not obvious. The results are consistent with our analysis: the suitability of foundation models depends on the alignment between the self-supervised learning tasks and the real downstream tasks, and the typical masked autoencoder paradigm is not necessarily suitable for many remote sensing problems.

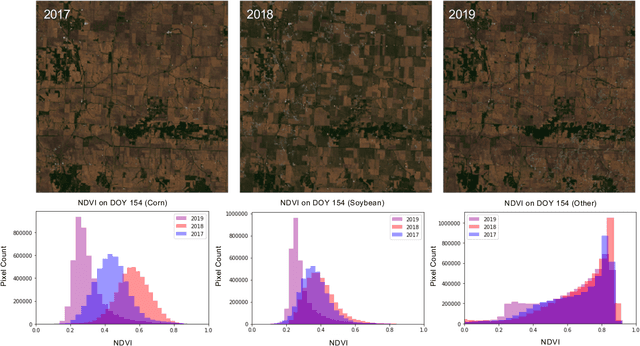

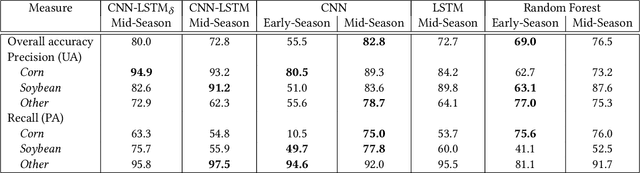

Resilient In-Season Crop Type Classification in Multispectral Satellite Observations using Growth Stage Normalization

Sep 21, 2020

Abstract: Crop type classification using satellite observations is an important tool for providing insights about planted area and enabling estimates of crop condition and yield, especially within the growing season when uncertainties around these quantities are highest. As the climate changes and extreme weather events become more frequent, these methods must be resilient to domain shifts that may occur, for example, due to shifts in planting timelines. In this work, we present an approach for within-season crop type classification using moderate spatial resolution (30 m) satellite data that addresses domain shift related to planting timelines by normalizing inputs by crop growth stage. We use a neural network leveraging both convolutional and recurrent layers to predict whether a pixel contains corn, soybeans, or another crop or land cover type. We evaluated this method for the 2019 growing season in the midwestern US, during which planting was delayed by as much as 1-2 months due to extreme weather that caused record flooding. We show that our approach using growth stage-normalized time series outperforms fixed-date time series, and achieves overall classification accuracy of 85.4% prior to harvest (September-November) and 82.8% by mid-season (July-September).
