Scott Davidoff

Opening the Black Box of 3D Reconstruction Error Analysis with VECTOR

Aug 07, 2024

Abstract: Reconstruction of 3D scenes from 2D images is a technical challenge that impacts domains from Earth and planetary sciences and space exploration to augmented and virtual reality. Typically, reconstruction algorithms first identify common features across images and then minimize reconstruction errors after estimating the shape of the terrain. This bundle adjustment (BA) step optimizes around a single, simplifying scalar value that obfuscates many possible causes of reconstruction errors (e.g., initial estimate of the position and orientation of the camera, lighting conditions, ease of feature detection in the terrain). Reconstruction errors can lead to inaccurate scientific inferences or endanger a spacecraft exploring a remote environment. To address this challenge, we present VECTOR, a visual analysis tool that improves error inspection for stereo reconstruction BA. VECTOR provides analysts with previously unavailable visibility into feature locations, camera pose, and computed 3D points. VECTOR was developed in partnership with the Perseverance Mars Rover and Ingenuity Mars Helicopter terrain reconstruction team at the NASA Jet Propulsion Laboratory. We report on how this tool was used to debug and improve terrain reconstruction for the Mars 2020 mission.
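To make the "single scalar" point concrete, here is a minimal sketch, not VECTOR's implementation, of how a summed reprojection cost can hide which camera is responsible for the error. The pinhole model without rotation, the function names, and the toy data are all assumptions made for illustration.

```python
from collections import defaultdict
from math import hypot


def project(point, cam_pos, focal=1.0):
    """Pinhole projection for a camera at cam_pos looking down +Z.

    Assumption: no rotation and no lens distortion, to keep the sketch short.
    """
    x, y, z = (p - c for p, c in zip(point, cam_pos))
    return (focal * x / z, focal * y / z)


def residuals(observations, points, cameras):
    """Return a list of (camera_id, point_id, reprojection_error)."""
    out = []
    for cam_id, pt_id, (u, v) in observations:
        pu, pv = project(points[pt_id], cameras[cam_id])
        out.append((cam_id, pt_id, hypot(u - pu, v - pv)))
    return out


def total_cost(res):
    """The single scalar a BA solver typically minimizes: sum of squared errors."""
    return sum(err * err for _, _, err in res)


def cost_by_camera(res):
    """The same cost, broken down per camera so the source of error is visible."""
    by_cam = defaultdict(float)
    for cam_id, _, err in res:
        by_cam[cam_id] += err * err
    return dict(by_cam)


# Hypothetical toy scene: two 3D points seen by two cameras.
points = {"p0": (0.0, 0.0, 5.0), "p1": (1.0, 0.0, 5.0)}
cameras = {"left": (0.0, 0.0, 0.0), "right": (1.0, 0.0, 0.0)}
observations = [
    ("left", "p0", (0.0, 0.0)),    # matches the projection exactly
    ("left", "p1", (0.2, 0.0)),    # matches the projection exactly
    ("right", "p0", (-0.1, 0.0)),  # shifted by 0.1 in u
    ("right", "p1", (0.1, 0.0)),   # shifted by 0.1 in u
]

res = residuals(observations, points, cameras)
print("total cost:", total_cost(res))
print("cost by camera:", cost_by_camera(res))
```

The total alone says only that some error exists. The per-camera breakdown shows that the right camera carries essentially all of it, which is the kind of visibility the abstract describes as missing from a scalar-only view.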

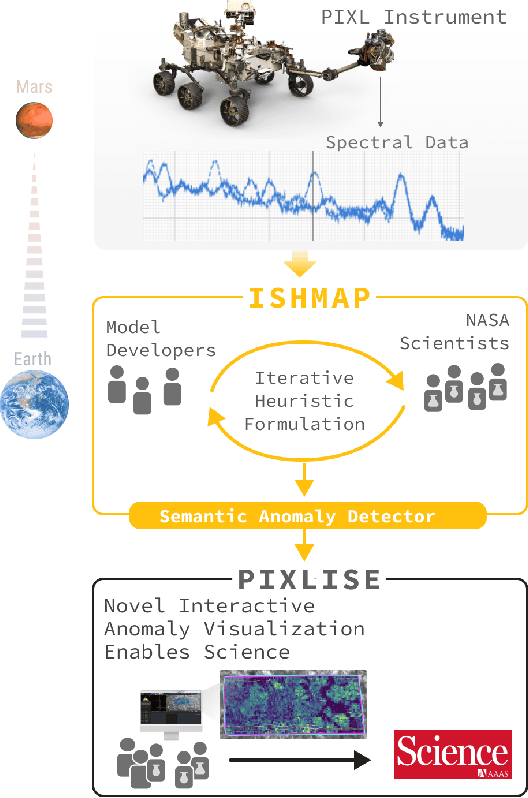

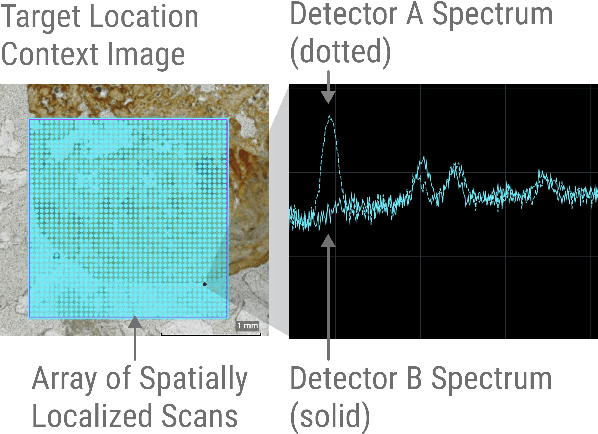

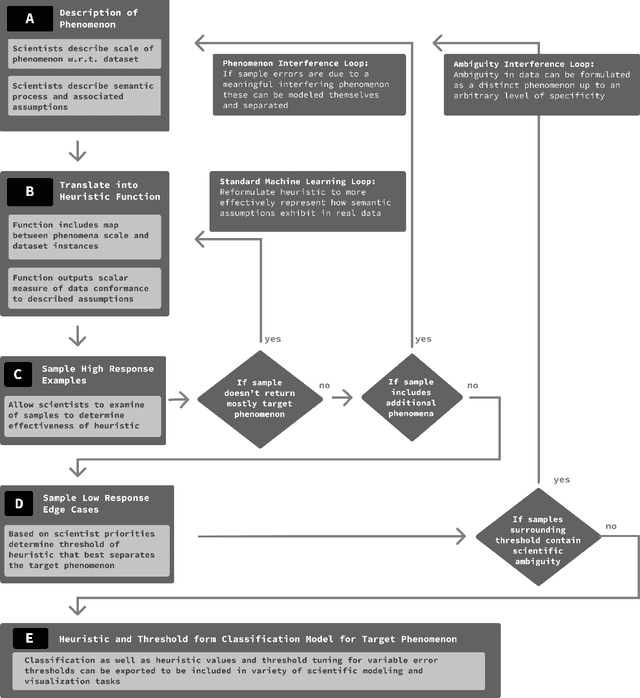

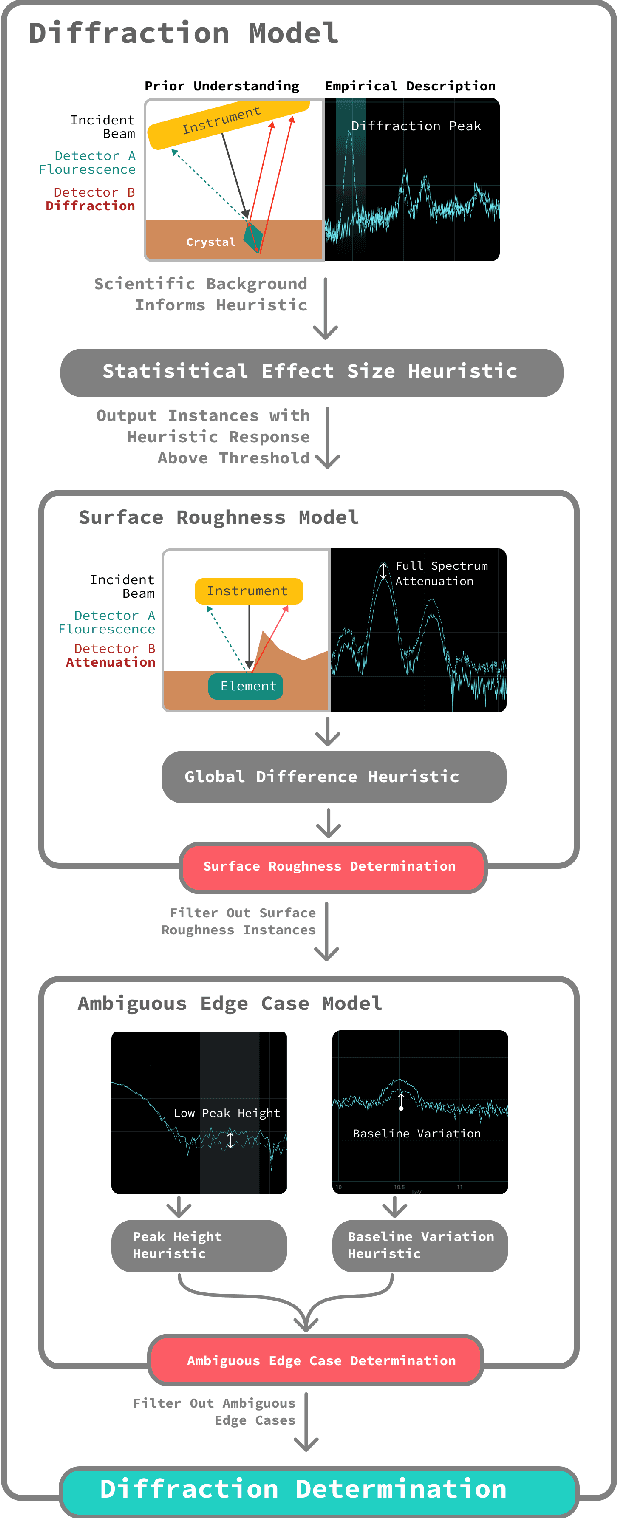

Lessons from the Development of an Anomaly Detection Interface on the Mars Perseverance Rover using the ISHMAP Framework

Feb 14, 2023

Abstract: While anomaly detection stands among the most important and valuable problems across many scientific domains, anomaly detection research often focuses on AI methods that can lack the nuance and interpretability so critical to conducting scientific inquiry. In this application paper, we present the results of an alternative approach that situates the mathematical framing of machine-learning-based anomaly detection within a participatory design framework. In a collaboration with NASA scientists working with the PIXL instrument studying Martian planetary geochemistry as part of the search for extraterrestrial life, we report on over 18 months of in-context user research and co-design to define the key problems NASA scientists face when looking to detect and interpret spectral anomalies. We address these problems and develop a novel spectral anomaly detection toolkit for PIXL scientists that is highly accurate while maintaining strong transparency to scientific interpretation. We also describe outcomes from a yearlong field deployment of the algorithm and associated interface. Finally, we introduce a new design framework for co-creating anomaly detection algorithms, which we developed over the course of this collaboration: Iterative Semantic Heuristic Modeling of Anomalous Phenomena (ISHMAP), which provides a process for scientists and researchers to produce natively interpretable anomaly detection models. This work showcases an example of successfully bridging methodologies from AI and HCI within a scientific domain, and provides a resource in ISHMAP that may be used by other researchers and practitioners looking to partner with scientific teams to achieve better science through more effective and interpretable anomaly detection tools.

Operations for Autonomous Spacecraft

Nov 22, 2021

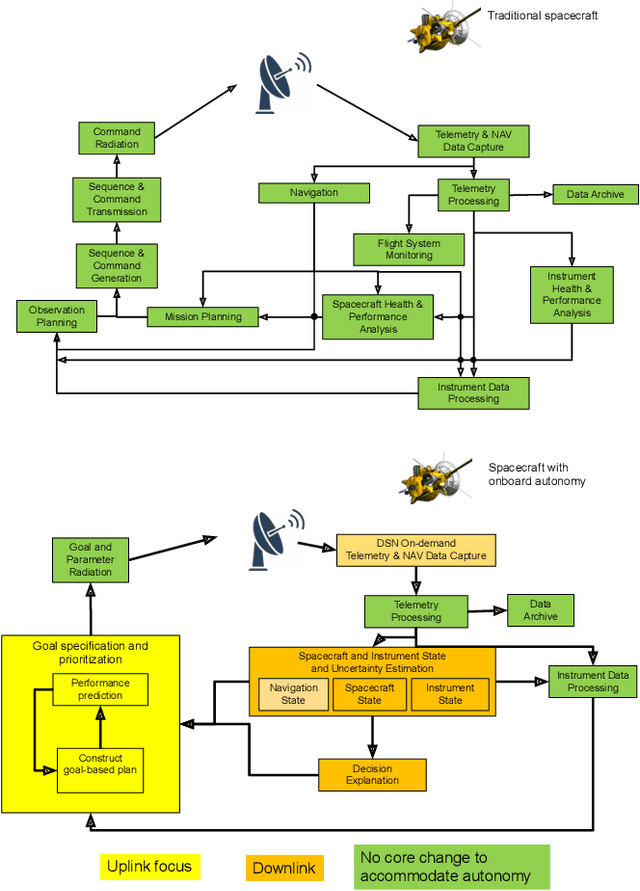

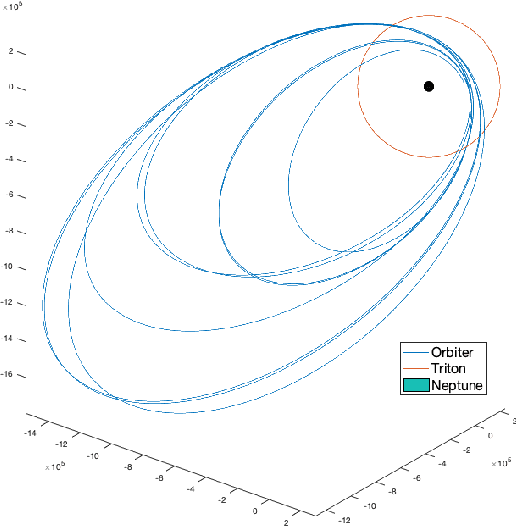

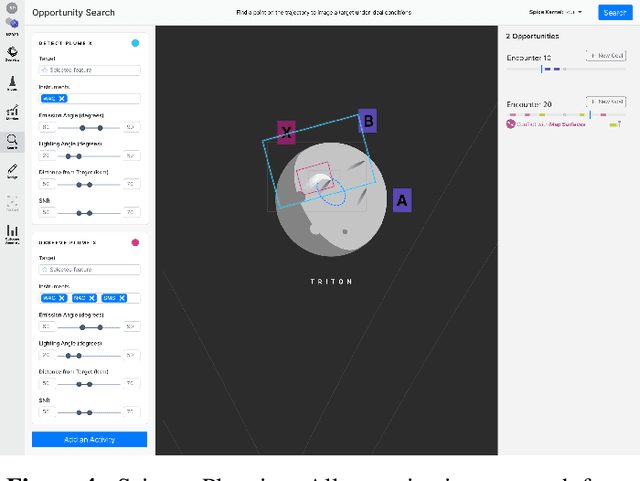

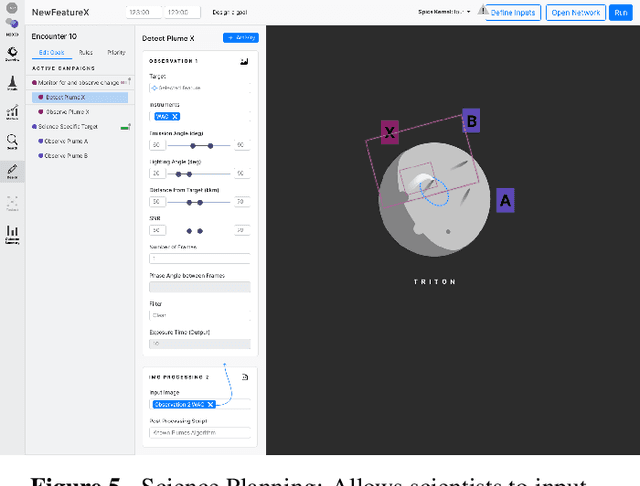

Abstract: Onboard autonomy technologies, such as planning and scheduling, identification of scientific targets, and content-based data summarization, will lead to exciting new space science missions. However, the challenge of operating missions with such onboard autonomous capabilities has not been studied to a level of detail sufficient for consideration in mission concepts. These autonomy capabilities will require changes to current operations processes, practices, and tools. We have developed a case study to assess the changes needed to enable operators and scientists to operate an autonomous spacecraft by facilitating a common model between the ground personnel and the onboard algorithms. We assess the new operations tools and workflows necessary to enable operators and scientists to convey their desired intent to the spacecraft, and to be able to reconstruct and explain the decisions made onboard and the state of the spacecraft. Mock-ups of these tools were used in a user study to understand the effectiveness of the processes and tools in enabling a shared framework of understanding, and in the ability of the operators and scientists to effectively achieve mission science objectives.

Human-Computer Interaction Glow Up: Examining Operational Trust and Intention Towards Mars Autonomous Systems

Oct 28, 2021

Abstract: Tactful coordination on Earth between hundreds of operators from diverse disciplines and backgrounds is needed to ensure that Martian rovers have a high likelihood of achieving their science goals while enduring the harsh environment of the red planet. The operations team includes many individuals, each with independent and overlapping objectives, working to decide what to execute on the Mars surface during the next planning period. The team must work together to understand each other's objectives and constraints within a fixed time period, often requiring frequent revision. This study examines the challenges faced during Mars surface operations, from high-level science objectives to formulating a valid, safe, and optimal activity plan that is ready to be radiated to the rover. Through this examination, we aim to illuminate how planning intent can be formulated and effectively communicated to future spacecraft that will become more and more autonomous. Our findings reveal the intricate nature of human-to-human interactions that require a large array of soft skills and core competencies to communicate concurrently with science and engineering teams during plan formulation. Additionally, our findings expose significant challenges in eliciting planning intent from operators, which will intensify in the future as operators on the ground asynchronously co-operate the rover with the onboard autonomy. Building a marvellous robot and landing it on the Mars surface are remarkable feats; however, ensuring that scientists can get the best out of the mission is an ongoing challenge and will not cease to be a difficult task with increased autonomy.

A Visual Analytics Approach to Debugging Cooperative, Autonomous Multi-Robot Systems' Worldviews

Sep 03, 2020

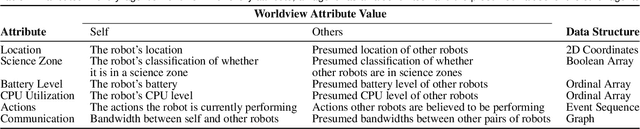

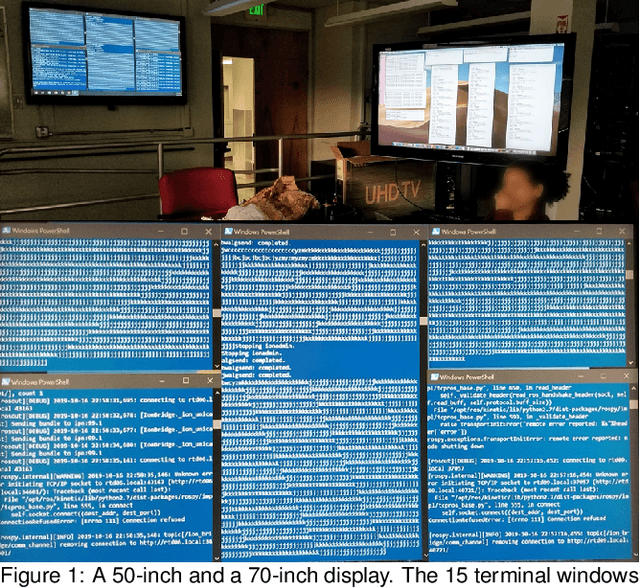

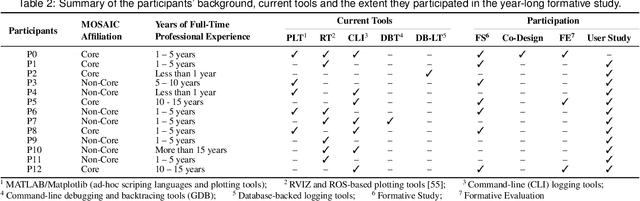

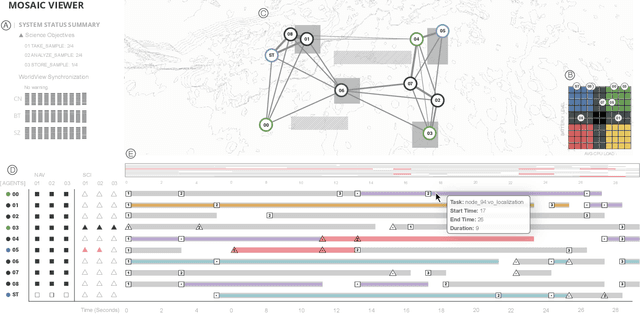

Abstract: Autonomous multi-robot systems, where a team of robots shares information to perform tasks that are beyond an individual robot's abilities, hold great promise for a number of applications, such as planetary exploration missions. Each robot in a multi-robot system that uses the shared-world coordination paradigm autonomously schedules which robot should perform a given task, and when, using its worldview--the robot's internal representation of its belief about both its own state and other robots' states. A key problem for operators is that robots' worldviews can fall out of sync (often due to weak communication links), leading to desynchronization of the robots' scheduling decisions and inconsistent emergent behavior (e.g., tasks not performed, or performed by multiple robots). Operators face the time-consuming and difficult task of making sense of the robots' scheduling decisions, detecting desynchronizations, and pinpointing the cause by comparing every robot's worldview. To address these challenges, we introduce MOSAIC Viewer, a visual analytics system that helps operators (i) make sense of the robots' schedules and (ii) detect and conduct a root cause analysis of the robots' desynchronized worldviews. Over a year-long partnership with roboticists at the NASA Jet Propulsion Laboratory, we conduct a formative study to identify the necessary system design requirements and a qualitative evaluation with 12 roboticists. We find that MOSAIC Viewer is faster and easier to use than the users' current approaches, and it allows them to stitch low-level details to formulate a high-level understanding of the robots' schedules and detect and pinpoint the cause of the desynchronized worldviews.
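The manual comparison of worldviews described in the abstract can be sketched as a simple diff. This is an illustrative toy, not MOSAIC Viewer's method: the dictionary layout, the function name `find_desyncs`, and the sample robot states are all hypothetical.

```python
def find_desyncs(worldviews):
    """Report every subject robot whose state the observers disagree on.

    worldviews: {observer: {subject: believed_state}} (hypothetical layout).
    Returns {subject: {observer: believed_state}} for disagreements only.
    A missing belief is treated as None and counts as a disagreement.
    """
    subjects = set()
    for view in worldviews.values():
        subjects.update(view)

    desyncs = {}
    for subject in sorted(subjects):
        beliefs = {obs: view.get(subject) for obs, view in worldviews.items()}
        if len(set(beliefs.values())) > 1:
            desyncs[subject] = beliefs
    return desyncs


# Hypothetical example: rover_b missed an update about rover_c's task.
worldviews = {
    "rover_a": {"rover_a": "idle", "rover_b": "driving", "rover_c": "sampling"},
    "rover_b": {"rover_a": "idle", "rover_b": "driving", "rover_c": "idle"},
    "rover_c": {"rover_a": "idle", "rover_b": "driving", "rover_c": "sampling"},
}

for subject, beliefs in find_desyncs(worldviews).items():
    print(f"disagreement about {subject}: {beliefs}")
```

Even in this small case, the diff points to the observer holding the outlying belief, which is the starting point for a root cause analysis such as checking that robot's communication link.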
