S. Y. Kung

3D-FM GAN: Towards 3D-Controllable Face Manipulation

Aug 24, 2022

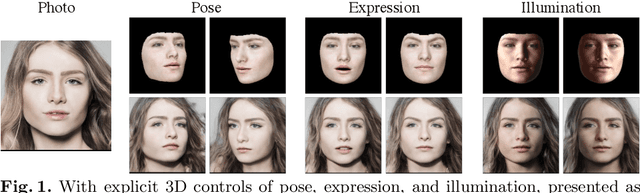

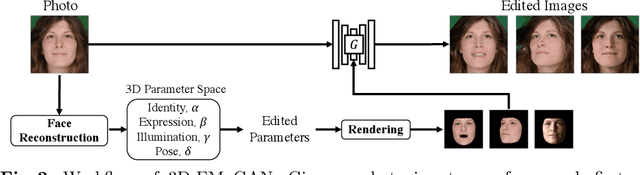

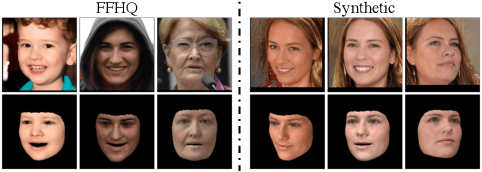

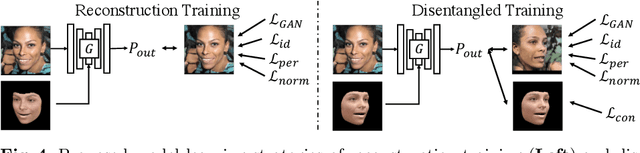

Abstract: 3D-controllable portrait synthesis has significantly advanced, thanks to breakthroughs in generative adversarial networks (GANs). However, it is still challenging to manipulate existing face images with precise 3D control. While concatenating GAN inversion and a 3D-aware, noise-to-image GAN is a straightforward solution, it is inefficient and may lead to a noticeable drop in editing quality. To fill this gap, we propose 3D-FM GAN, a novel conditional GAN framework that is designed specifically for 3D-controllable face manipulation and requires no tuning after the end-to-end learning phase. By carefully encoding both the input face image and a physically-based rendering of 3D edits into StyleGAN's latent spaces, our image generator provides high-quality, identity-preserved, 3D-controllable face manipulation. To effectively learn such a novel framework, we develop two essential training strategies and a novel multiplicative co-modulation architecture that improves significantly upon naive schemes. With extensive evaluations, we show that our method outperforms the prior art on various tasks, with better editability, stronger identity preservation, and higher photo-realism. In addition, we demonstrate better generalizability of our design on large pose editing and out-of-domain images.

Evolving Transferable Pruning Functions

Oct 21, 2021

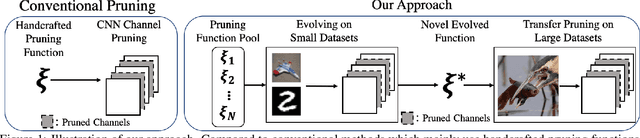

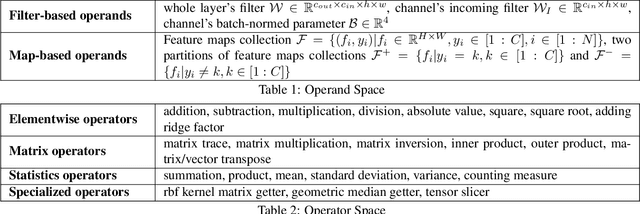

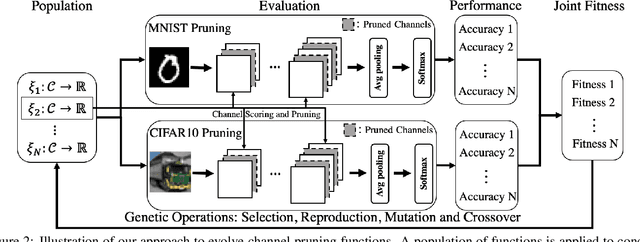

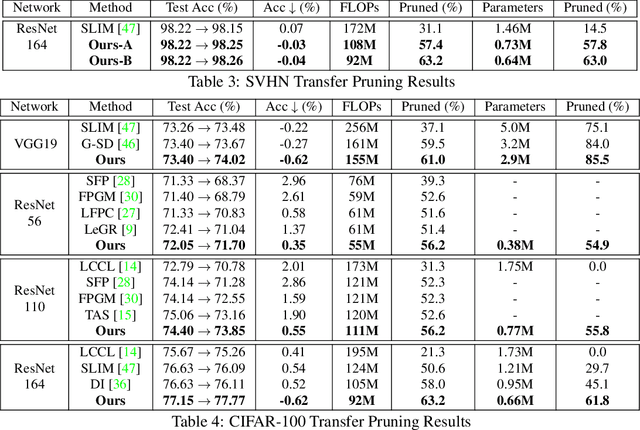

Abstract: Channel pruning has made major headway in the design of efficient deep learning models. Conventional approaches adopt human-made pruning functions to score channels' importance for channel pruning, which requires domain knowledge and could be sub-optimal. In this work, we propose an end-to-end framework to automatically discover strong pruning metrics. Specifically, we craft a novel design space for expressing pruning functions and leverage an evolution strategy, genetic programming, to evolve high-quality and transferable pruning functions. Unlike prior methods, our approach provides not only compact pruned networks for efficient inference but also novel closed-form pruning metrics that are mathematically explainable and thus generalizable to different pruning tasks. The evolution is conducted on small datasets, while the learned functions are transferable to larger datasets without any manual modification. Compared to direct evolution on a large dataset, our strategy is more cost-effective. When applied to more challenging datasets that differ from those used in the evolution process, e.g., ILSVRC-2012, an evolved function achieves state-of-the-art pruning results.

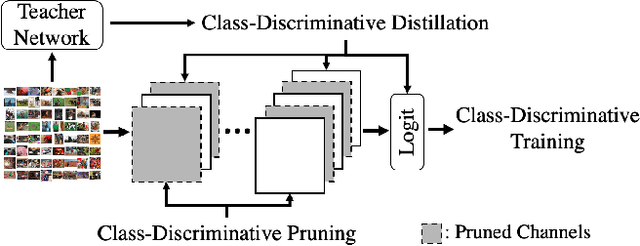

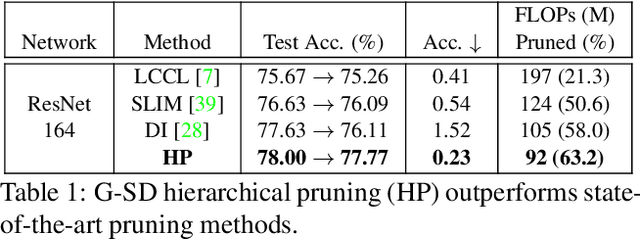

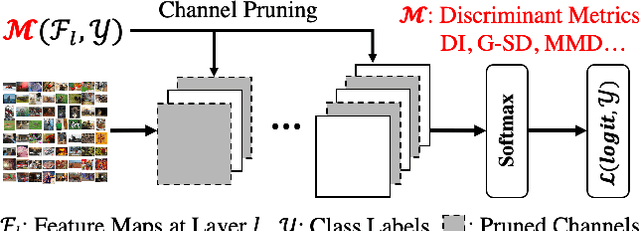

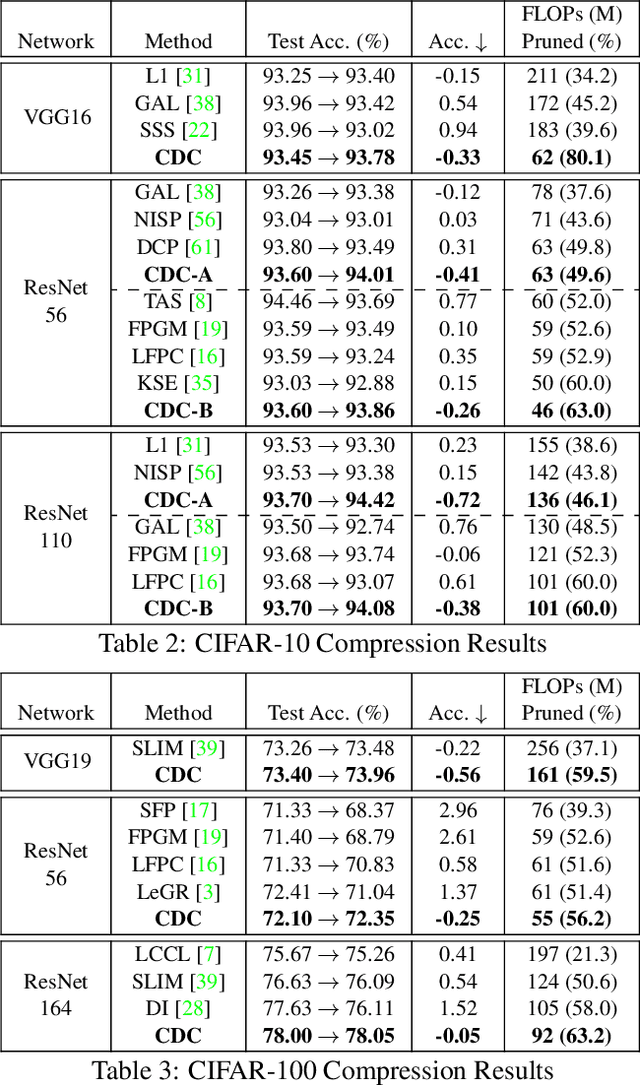

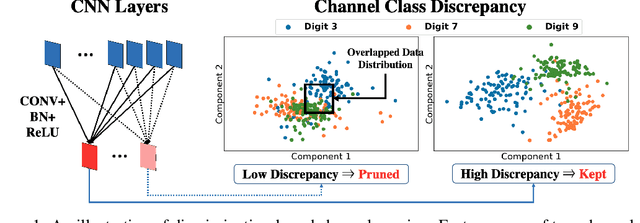

Class-Discriminative CNN Compression

Oct 21, 2021

Abstract: Compressing convolutional neural networks (CNNs) by pruning and distillation has received ever-increasing focus in the community. In particular, a class-discrimination based approach is desirable, as it fits seamlessly with the CNN training objective. In this paper, we propose class-discriminative compression (CDC), which injects class discrimination into both pruning and distillation to facilitate the CNN training goal. We first study the effectiveness of a group of discriminant functions for channel pruning, where we include well-known single-variate binary-class statistics like Student's T-Test in our study via an intuitive generalization. We then propose a novel layer-adaptive hierarchical pruning approach, where we use a coarse class discrimination scheme for early layers and a fine one for later layers. This method naturally accords with the fact that CNNs process coarse semantics in the early layers and extract fine concepts in the later ones. Moreover, we leverage discriminant component analysis (DCA) to distill knowledge of intermediate representations in a subspace with rich discriminative information, which enhances the hidden layers' linear separability and the classification accuracy of the student. Combining pruning and distillation, CDC is evaluated on CIFAR and ILSVRC 2012, where we consistently outperform the state-of-the-art results.

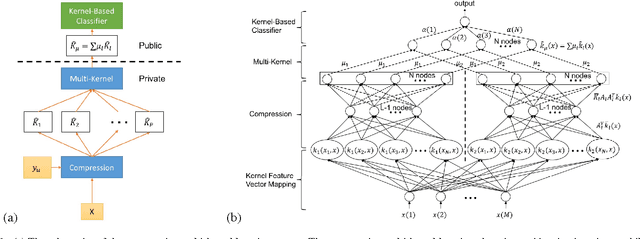

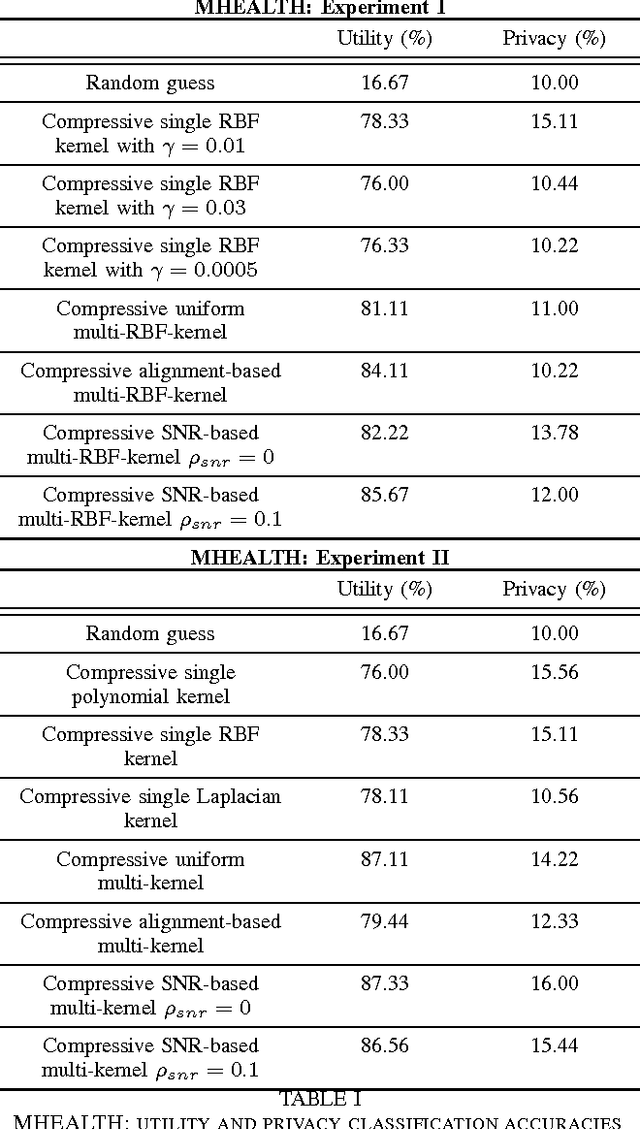

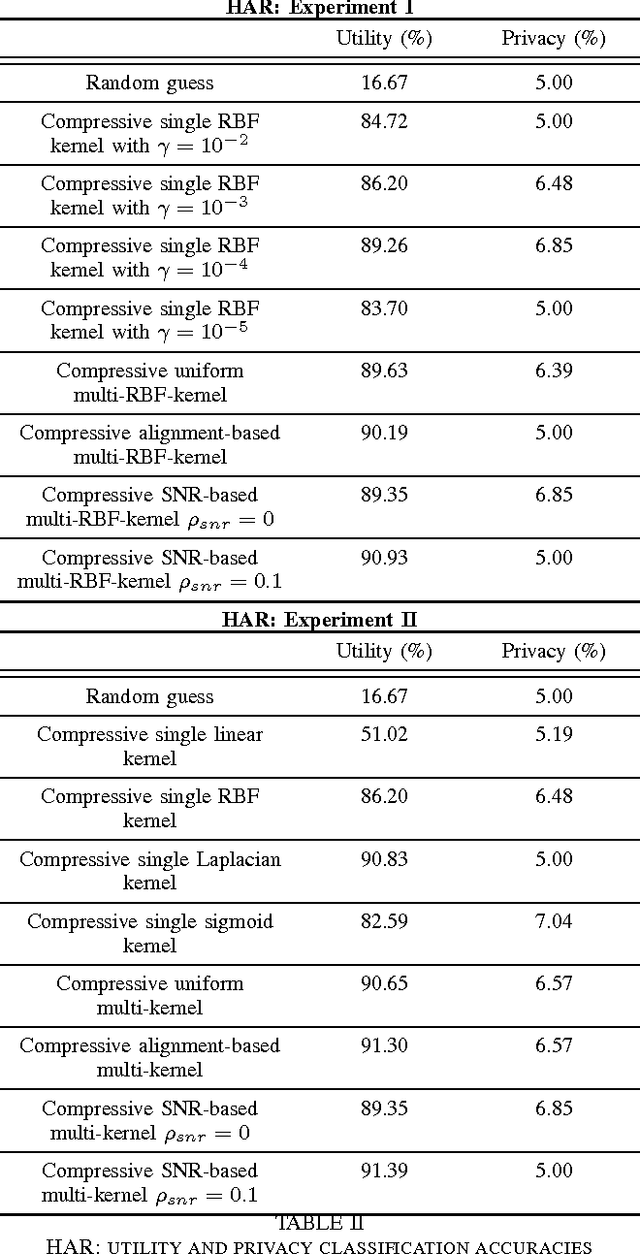

A compressive multi-kernel method for privacy-preserving machine learning

Jun 20, 2021

Abstract: As analytic tools become more powerful and more data are generated on a daily basis, the issue of data privacy arises. This leads to the study of the design of privacy-preserving machine learning algorithms. Given two objectives, namely, utility maximization and privacy-loss minimization, this work is based on two previously non-intersecting regimes -- Compressive Privacy and the multi-kernel method. Compressive Privacy is a privacy framework that employs a utility-preserving lossy-encoding scheme to protect the privacy of the data, while the multi-kernel method is a kernel-based machine learning regime that explores the idea of using multiple kernels for building better predictors. The proposed compressive multi-kernel method consists of two stages -- the compression stage and the multi-kernel stage. The compression stage follows the Compressive Privacy paradigm to provide the desired privacy protection. Each kernel matrix is compressed with a lossy projection matrix derived from Discriminant Component Analysis (DCA). The multi-kernel stage uses the signal-to-noise ratio (SNR) score of each kernel to non-uniformly combine multiple compressive kernels. The proposed method is evaluated on two mobile-sensing datasets -- MHEALTH and HAR -- where activity recognition is defined as utility and person identification is defined as privacy. The results show that the compression regime is successful in privacy preservation, as the privacy classification accuracies are almost at the random-guess level in all experiments. On the other hand, the novel SNR-based multi-kernel method improves utility classification accuracy over the state of the art on both datasets. These results indicate a promising direction for research in privacy-preserving machine learning.
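The non-uniform combination in the multi-kernel stage can be pictured with a small sketch. It is only an illustration under stated assumptions: the abstract does not say how SNR scores are computed or normalized, so the function name `combine_kernels`, the score values, and the choice of weights proportional to SNR (normalized to sum to one) are hypothetical.

```python
def combine_kernels(kernels, snr_scores):
    """Weighted sum of kernel matrices (nested lists of equal shape).

    Assumption for illustration: each weight is the kernel's SNR score
    divided by the sum of all scores. The paper may weight differently.
    """
    if len(kernels) != len(snr_scores):
        raise ValueError("need exactly one SNR score per kernel")
    total = sum(snr_scores)
    if total <= 0:
        raise ValueError("SNR scores must have a positive sum")
    weights = [s / total for s in snr_scores]

    rows, cols = len(kernels[0]), len(kernels[0][0])
    combined = [
        [sum(w * k[i][j] for w, k in zip(weights, kernels)) for j in range(cols)]
        for i in range(rows)
    ]
    return combined, weights


# Two toy 2x2 kernel matrices; the first is given three times the weight.
k_a = [[1.0, 0.0], [0.0, 1.0]]
k_b = [[1.0, 1.0], [1.0, 1.0]]
combined, weights = combine_kernels([k_a, k_b], snr_scores=[3.0, 1.0])
```

With these toy inputs, the kernel with the higher score dominates the combined matrix, which is the intent of a non-uniform combination.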

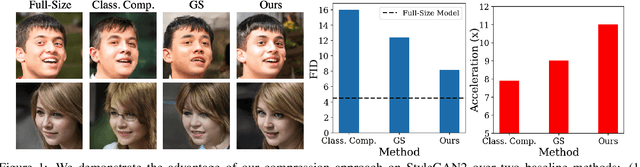

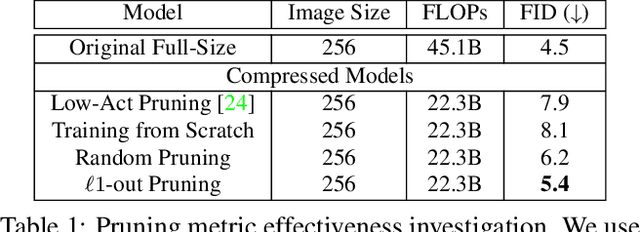

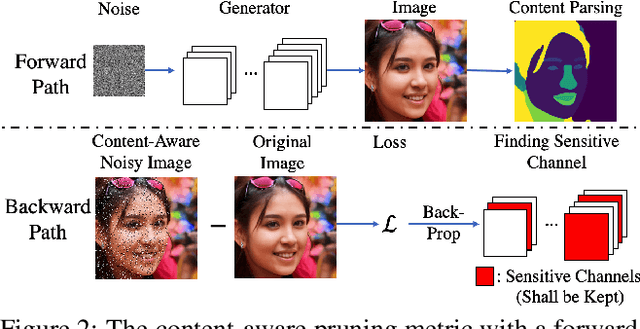

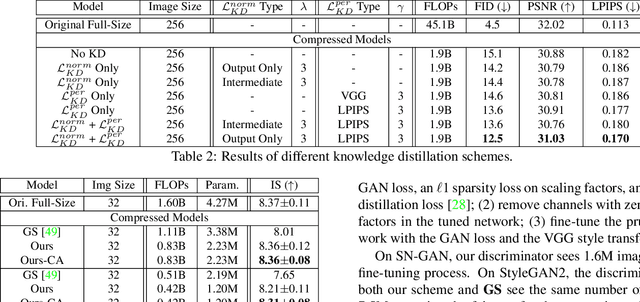

Content-Aware GAN Compression

Apr 06, 2021

Abstract: Generative adversarial networks (GANs), e.g., StyleGAN2, play a vital role in various image generation and synthesis tasks, yet their notoriously high computational cost hinders their efficient deployment on edge devices. Directly applying generic compression approaches yields poor results on GANs, which motivates a number of recent GAN compression works. While prior works mainly accelerate conditional GANs, e.g., pix2pix and CycleGAN, compressing state-of-the-art unconditional GANs has rarely been explored and is more challenging. In this paper, we propose novel approaches for unconditional GAN compression. We first introduce effective channel pruning and knowledge distillation schemes specialized for unconditional GANs. We then propose a novel content-aware method to guide the processes of both pruning and distillation. With content-awareness, we can effectively prune channels that are unimportant to the contents of interest, e.g., human faces, and focus our distillation on these regions, which significantly enhances the distillation quality. On StyleGAN2 and SN-GAN, we achieve a substantial improvement over the state-of-the-art compression method. Notably, we reduce the FLOPs of StyleGAN2 by 11x with visually negligible image quality loss compared to the full-size model. More interestingly, when applied to various image manipulation tasks, our compressed model forms a smoother and better disentangled latent manifold, making it more effective for image editing.

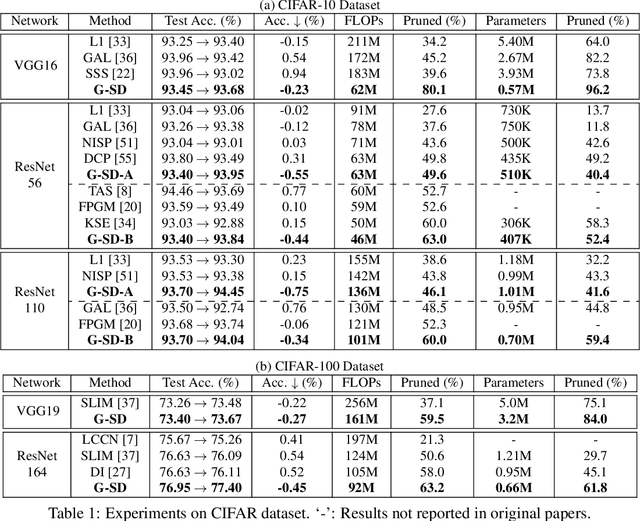

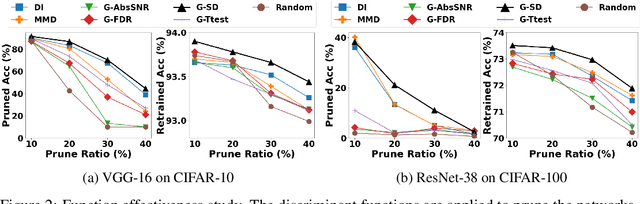

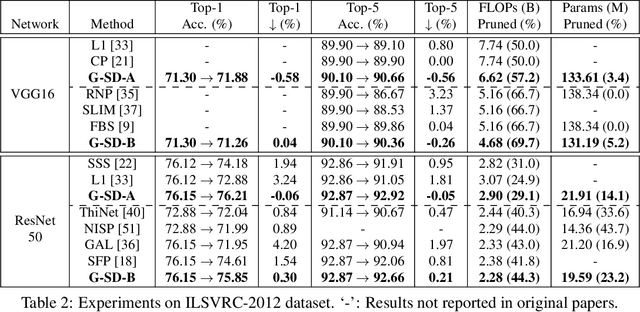

Rethinking Class-Discrimination Based CNN Channel Pruning

Apr 29, 2020

Abstract: Channel pruning has received ever-increasing focus in network compression. In particular, class-discrimination based channel pruning has made major headway, as it fits seamlessly with the classification objective of CNNs and provides good explainability. Prior works each propose and evaluate their own discriminant functions, while further study on the effectiveness of the adopted metrics is absent. To this end, we initiate the first study on the effectiveness of a broad range of discriminant functions on channel pruning. Conventional single-variate binary-class statistics like Student's T-Test are also included in our study via an intuitive generalization. The winning metric of our study has a greater ability to select informative channels than other state-of-the-art methods, which is substantiated by our qualitative and quantitative analysis. Moreover, we develop a FLOP-normalized sensitivity analysis scheme to automate the structural pruning procedure. On CIFAR-10, CIFAR-100, and ILSVRC-2012 datasets, our pruned models achieve higher accuracy with less inference cost compared to state-of-the-art results. For example, on ILSVRC-2012, our 44.3% FLOPs-pruned ResNet-50 has only a 0.3% top-1 accuracy drop, which significantly outperforms the state of the art.
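To make the idea of scoring channels with a binary-class statistic concrete, here is a minimal sketch. The abstract does not specify its "intuitive generalization", so the one used here (averaging the absolute Welch t-statistic over all class pairs) is an assumption for illustration only, and the names `welch_t`, `channel_score`, and `select_channels` are hypothetical.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Absolute Welch t-statistic between two samples of scalar activations."""
    denom = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return abs(mean(a) - mean(b)) / denom if denom > 0 else 0.0


def channel_score(activations, labels):
    """Assumed multi-class generalization: mean pairwise |t| across classes.

    `activations` holds one scalar per sample (e.g. a pooled feature map);
    every class needs at least two samples for the variance estimate.
    """
    by_class = {}
    for value, label in zip(activations, labels):
        by_class.setdefault(label, []).append(value)
    pairs = combinations(sorted(by_class), 2)
    return mean([welch_t(by_class[p], by_class[q]) for p, q in pairs])


def select_channels(per_channel_activations, labels, keep):
    """Return the indices of the `keep` highest-scoring channels."""
    scores = [channel_score(acts, labels) for acts in per_channel_activations]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])


labels = [0, 0, 1, 1, 2, 2]
informative = [0.0, 0.1, 1.0, 1.1, 2.0, 2.1]    # separates the three classes
uninformative = [0.5, 0.6, 0.5, 0.6, 0.5, 0.6]  # identical across classes
kept = select_channels([informative, uninformative], labels, keep=1)
```

In this toy case the channel whose activations differ by class receives the higher score and is the one retained.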

Desensitized RDCA Subspaces for Compressive Privacy in Machine Learning

Jul 24, 2017

Abstract: The quest for better data analysis and artificial intelligence has led to more and more data being collected and stored. As a consequence, more data are exposed to malicious entities. This paper examines the problem of privacy in machine learning for classification. We utilize Ridge Discriminant Component Analysis (RDCA) to desensitize data with respect to a privacy label. Based on five experiments, we show that desensitization by RDCA can effectively protect privacy (i.e., low accuracy on the privacy label) with a small loss in utility. On the HAR and CMU Faces datasets, the use of desensitized data results in random-guess-level accuracies for privacy, at a cost of average drops of 5.14% and 0.04% in the utility accuracies. For the Semeion Handwritten Digit dataset, accuracies of the privacy-sensitive digits are almost zero, while the accuracies for the utility-relevant digits drop by 7.53% on average. This presents a promising solution to the problem of privacy in machine learning for classification.
