Rethinking Class-Discrimination Based CNN Channel Pruning

Paper and Code

Apr 29, 2020

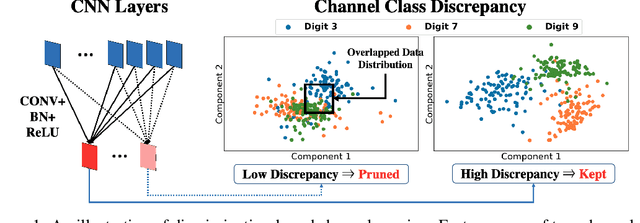

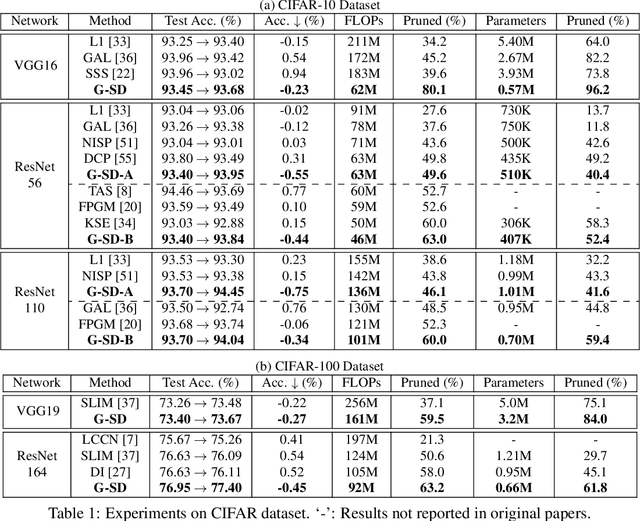

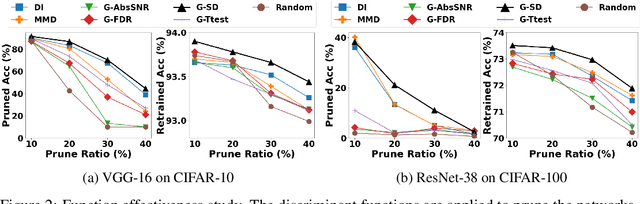

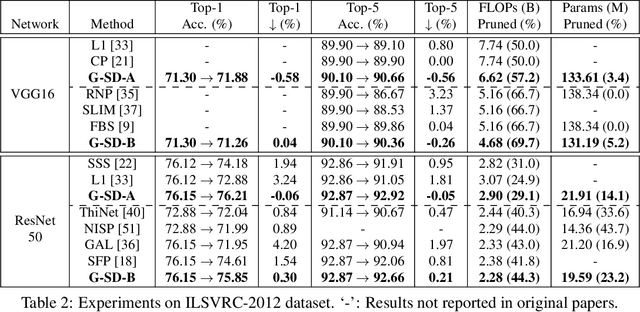

Channel pruning has received ever-increasing focus on network compression. In particular, class-discrimination based channel pruning has made major headway, as it fits seamlessly with the classification objective of CNNs and provides good explainability. Prior works singly propose and evaluate their discriminant functions, while further study on the effectiveness of the adopted metrics is absent. To this end, we initiate the first study on the effectiveness of a broad range of discriminant functions on channel pruning. Conventional single-variate binary-class statistics like Student's T-Test are also included in our study via an intuitive generalization. The winning metric of our study has a greater ability to select informative channels over other state-of-the-art methods, which is substantiated by our qualitative and quantitative analysis. Moreover, we develop a FLOP-normalized sensitivity analysis scheme to automate the structural pruning procedure. On CIFAR-10, CIFAR-100, and ILSVRC-2012 datasets, our pruned models achieve higher accuracy with less inference cost compared to state-of-the-art results. For example, on ILSVRC-2012, our 44.3% FLOPs-pruned ResNet-50 has only a 0.3% top-1 accuracy drop, which significantly outperforms the state of the art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge