Ryan Herbst

FPGA-Accelerated SpeckleNN with SNL for Real-time X-ray Single-Particle Imaging

Feb 27, 2025

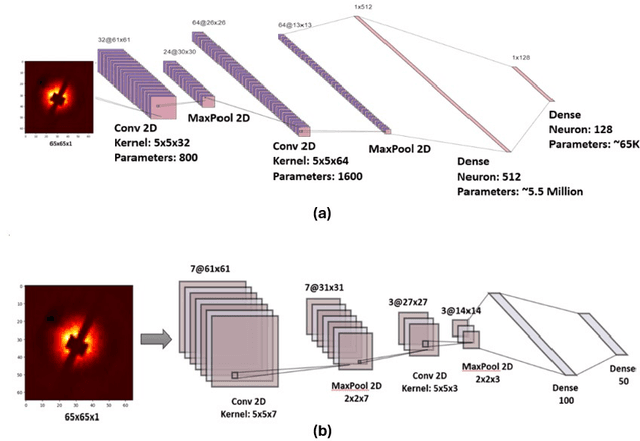

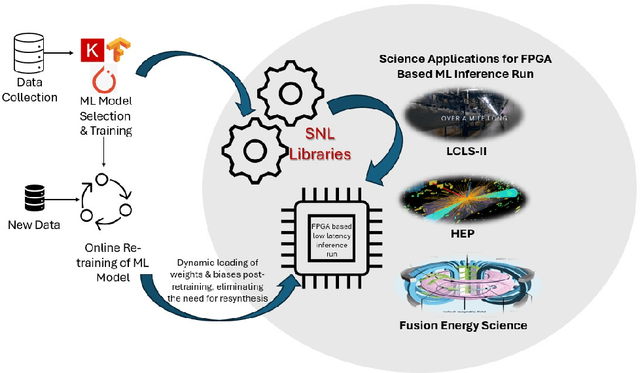

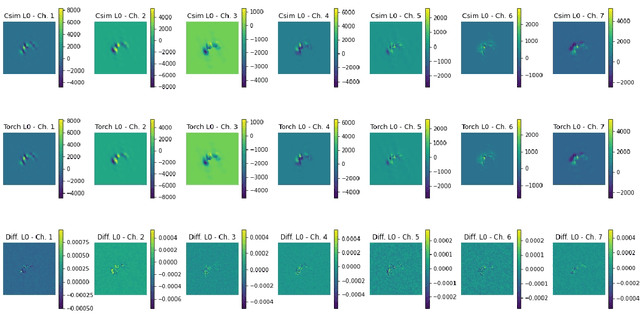

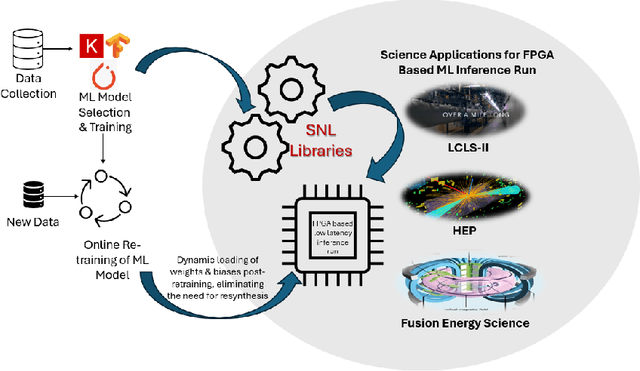

Abstract:We implement a specialized version of our SpeckleNN model for real-time speckle pattern classification in X-ray Single-Particle Imaging (SPI) using the SLAC Neural Network Library (SNL) on an FPGA. This hardware is optimized for inference near detectors in high-throughput X-ray free-electron laser (XFEL) facilities like the Linac Coherent Light Source (LCLS). To fit FPGA constraints, we optimized SpeckleNN, reducing parameters from 5.6M to 64.6K (98.8% reduction) with 90% accuracy. We also compressed the latent space from 128 to 50 dimensions. Deployed on a KCU1500 FPGA, the model used 71% of DSPs, 75% of LUTs, and 48% of FFs, with an average power consumption of 9.4W. The FPGA achieved 45.015us inference latency at 200 MHz. On an NVIDIA A100 GPU, the same inference consumed ~73W and had a 400us latency. Our FPGA version achieved an 8.9x speedup and 7.8x power reduction over the GPU. Key advancements include model specialization and dynamic weight loading through SNL, eliminating time-consuming FPGA re-synthesis for fast, continuous deployment of (re)trained models. These innovations enable real-time adaptive classification and efficient speckle pattern vetoing, making SpeckleNN ideal for XFEL facilities. This implementation accelerates SPI experiments and enhances adaptability to evolving conditions.

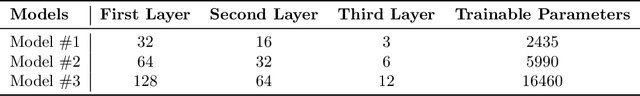

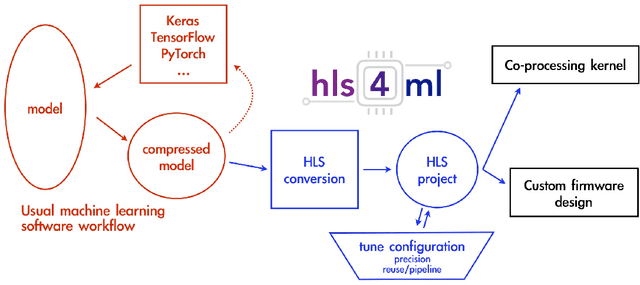

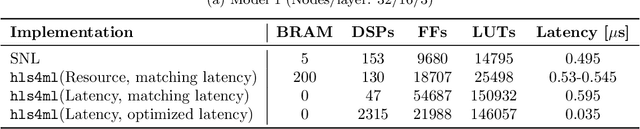

Analysis of Hardware Synthesis Strategies for Machine Learning in Collider Trigger and Data Acquisition

Nov 18, 2024

Abstract:To fully exploit the physics potential of current and future high energy particle colliders, machine learning (ML) can be implemented in detector electronics for intelligent data processing and acquisition. The implementation of ML in real-time at colliders requires very low latencies that are unachievable with a software-based approach, requiring optimization and synthesis of ML algorithms for deployment on hardware. An analysis of neural network inference efficiency is presented, focusing on the application of collider trigger algorithms in field programmable gate arrays (FPGAs). Trade-offs are evaluated between two frameworks, the SLAC Neural Network Library (SNL) and hls4ml, in terms of resources and latency for different model sizes. Results highlight the strengths and limitations of each approach, offering valuable insights for optimizing real-time neural network deployments at colliders. This work aims to guide researchers and engineers in selecting the most suitable hardware and software configurations for real-time, resource-constrained environments.

Embedded FPGA Developments in 130nm and 28nm CMOS for Machine Learning in Particle Detector Readout

Apr 26, 2024

Abstract:Embedded field programmable gate array (eFPGA) technology allows the implementation of reconfigurable logic within the design of an application-specific integrated circuit (ASIC). This approach offers the low power and efficiency of an ASIC along with the ease of FPGA configuration, particularly beneficial for the use case of machine learning in the data pipeline of next-generation collider experiments. An open-source framework called "FABulous" was used to design eFPGAs using 130 nm and 28 nm CMOS technology nodes, which were subsequently fabricated and verified through testing. The capability of an eFPGA to act as a front-end readout chip was tested using simulation of high energy particles passing through a silicon pixel sensor. A machine learning-based classifier, designed for reduction of sensor data at the source, was synthesized and configured onto the eFPGA. A successful proof-of-concept was demonstrated through reproduction of the expected algorithm result on the eFPGA with perfect accuracy. Further development of the eFPGA technology and its application to collider detector readout is discussed.

Implementation of a framework for deploying AI inference engines in FPGAs

May 30, 2023Abstract:The LCLS2 Free Electron Laser FEL will generate xray pulses to beamline experiments at up to 1Mhz These experimentals will require new ultrahigh rate UHR detectors that can operate at rates above 100 kHz and generate data throughputs upwards of 1 TBs a data velocity which requires prohibitively large investments in storage infrastructure Machine Learning has demonstrated the potential to digest large datasets to extract relevant insights however current implementations show latencies that are too high for realtime data reduction objectives SLAC has endeavored on the creation of a software framework which translates MLs structures for deployment on Field Programmable Gate Arrays FPGAs deployed at the Edge of the data chain close to the instrumentation This framework leverages Xilinxs HLS framework presenting an API modeled after the open source Keras interface to the TensorFlow library This SLAC Neural Network Library SNL framework is designed with a streaming data approach optimizing the data flow between layers while minimizing the buffer data buffering requirements The goal is to ensure the highest possible framerate while keeping the maximum latency constrained to the needs of the experiment Our framework is designed to ensure the RTL implementation of the network layers supporting full redeployment of weights and biases without requiring resynthesis after training The ability to reduce the precision of the implemented networks through quantization is necessary to optimize the use of both DSP and memory resources in the FPGA We currently have a preliminary version of the toolset and are experimenting with both general purpose example networks and networks being designed for specific LCLS2 experiments.

Bridge Data Center AI Systems with Edge Computing for Actionable Information Retrieval

May 28, 2021

Abstract:Extremely high data rates at modern synchrotron and X-ray free-electron lasers (XFELs) light source beamlines motivate the use of machine learning methods for data reduction, feature detection, and other purposes. Regardless of the application, the basic concept is the same: data collected in early stages of an experiment, data from past similar experiments, and/or data simulated for the upcoming experiment are used to train machine learning models that, in effect, learn specific characteristics of those data; these models are then used to process subsequent data more efficiently than would general-purpose models that lack knowledge of the specific dataset or data class. Thus, a key challenge is to be able to train models with sufficient rapidity that they can be deployed and used within useful timescales. We describe here how specialized data center AI systems can be used for this purpose.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge