Ryan Coffee

Implementation of a framework for deploying AI inference engines in FPGAs

May 30, 2023

Abstract:The LCLS-II Free Electron Laser (FEL) will generate X-ray pulses for beamline experiments at up to 1 MHz. These experiments will require new ultra-high-rate (UHR) detectors that can operate at rates above 100 kHz and generate data throughputs upwards of 1 TB/s, a data velocity which requires prohibitively large investments in storage infrastructure. Machine learning has demonstrated the potential to digest large datasets to extract relevant insights; however, current implementations show latencies that are too high for real-time data reduction objectives. SLAC has undertaken the creation of a software framework which translates ML structures for deployment on Field Programmable Gate Arrays (FPGAs) deployed at the edge of the data chain, close to the instrumentation. This framework leverages Xilinx's HLS framework, presenting an API modeled after the open-source Keras interface to the TensorFlow library. This SLAC Neural Network Library (SNL) framework is designed with a streaming data approach, optimizing the data flow between layers while minimizing the data buffering requirements. The goal is to ensure the highest possible frame rate while keeping the maximum latency constrained to the needs of the experiment. Our framework is designed to ensure the RTL implementation of the network layers, supporting full redeployment of weights and biases without requiring resynthesis after training. The ability to reduce the precision of the implemented networks through quantization is necessary to optimize the use of both DSP and memory resources in the FPGA. We currently have a preliminary version of the toolset and are experimenting with both general-purpose example networks and networks being designed for specific LCLS-II experiments.
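The abstract names quantization as the way to save DSP and memory resources. As a rough illustration only, the sketch below rounds weights to a signed fixed-point grid and saturates values that fall outside the representable range. It is not SNL's API: the function name `quantize_fixed_point`, the 8-bit width, and the 4 fractional bits are all assumptions chosen for the example.

```python
def quantize_fixed_point(values, total_bits=8, frac_bits=4):
    """Round values to a signed fixed-point grid, saturating on overflow.

    Hypothetical helper for illustration; the bit widths are assumed,
    not taken from the SNL framework.
    """
    scale = 1 << frac_bits                  # integer codes per unit value
    q_min = -(1 << (total_bits - 1))        # most negative integer code
    q_max = (1 << (total_bits - 1)) - 1     # most positive integer code
    quantized = []
    for v in values:
        code = round(v * scale)             # nearest integer code
        code = max(q_min, min(q_max, code)) # saturate instead of wrapping
        quantized.append(code / scale)      # back to a real value
    return quantized


# Example weights: three within range, two that overflow and saturate.
weights = [0.1234, -0.5, 1.75, 9.0, -9.0]
q = quantize_fixed_point(weights)
print(q)
```

With 4 fractional bits the grid spacing is 1/16, so in-range values move by at most 1/32. Fewer bits per weight mean narrower multipliers and smaller on-chip memories, at the cost of this rounding error.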

fairDMS: Rapid Model Training by Data and Model Reuse

Apr 20, 2022

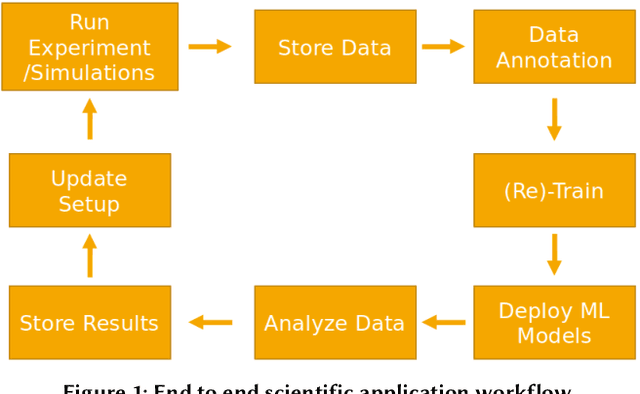

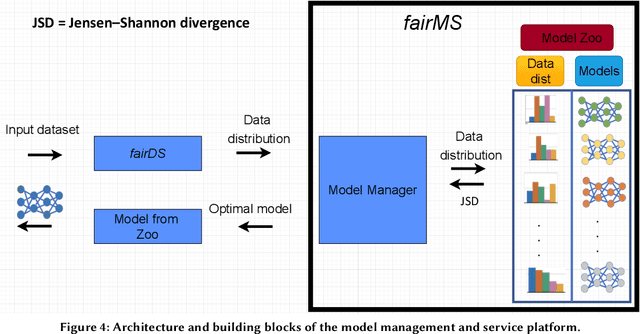

Abstract:Extracting actionable information from data sources such as the Linac Coherent Light Source (LCLS-II) and Advanced Photon Source Upgrade (APS-U) is becoming more challenging due to the fast-growing data generation rate. The rapid analysis possible with ML methods can enable fast feedback loops that can be used to adjust experimental setups in real time, for example when errors occur or interesting events are detected. However, to avoid degradation in ML performance over time due to changes in an instrument or sample, we need a way to update ML models rapidly while an experiment is running. We present here a data service and model service to accelerate deep neural network training with a focus on ML-based scientific applications. Our proposed data service achieves a 100x speedup in data labeling compared to the current state of the art. Further, our model service achieves up to a 200x improvement in training speed. Overall, fairDMS achieves up to a 92x speedup in end-to-end model updating time.

Bridge Data Center AI Systems with Edge Computing for Actionable Information Retrieval

May 28, 2021

Abstract:Extremely high data rates at modern synchrotron and X-ray free-electron laser (XFEL) light source beamlines motivate the use of machine learning methods for data reduction, feature detection, and other purposes. Regardless of the application, the basic concept is the same: data collected in early stages of an experiment, data from past similar experiments, and/or data simulated for the upcoming experiment are used to train machine learning models that, in effect, learn specific characteristics of those data; these models are then used to process subsequent data more efficiently than would general-purpose models that lack knowledge of the specific dataset or data class. Thus, a key challenge is to train models rapidly enough that they can be deployed and used within useful timescales. We describe here how specialized data center AI systems can be used for this purpose.
