Ryan Culkin

Iterative Paraphrastic Augmentation with Discriminative Span Alignment

Jul 01, 2020

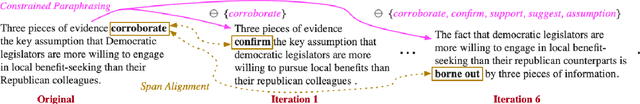

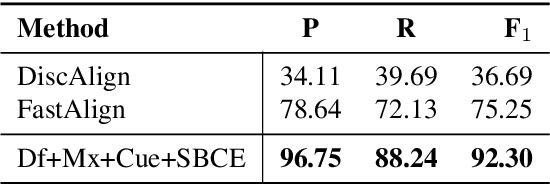

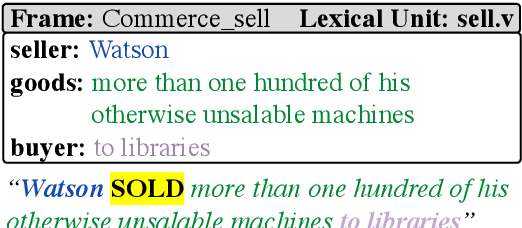

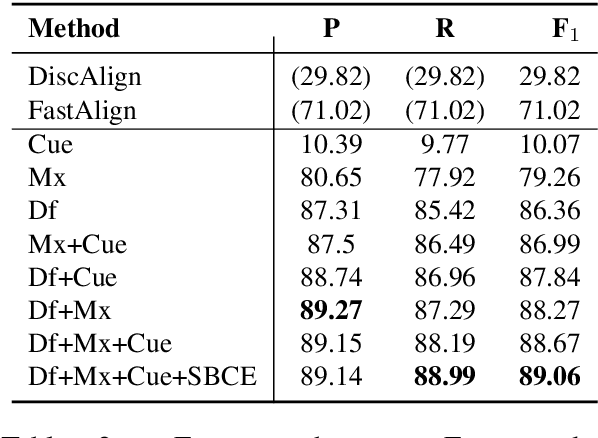

Abstract: We introduce a novel paraphrastic augmentation strategy based on sentence-level lexically constrained paraphrasing and discriminative span alignment. Our approach allows for the large-scale expansion of existing resources, or the rapid creation of new resources from a small, manually-produced seed corpus. We illustrate our framework on the Berkeley FrameNet Project, a large-scale language understanding effort spanning more than two decades of human labor. Based on roughly four days of collecting training data for the alignment model and approximately one day of parallel compute, we automatically generate 495,300 unique (Frame, Trigger) combinations annotated in context, a roughly 50x expansion atop FrameNet v1.7.

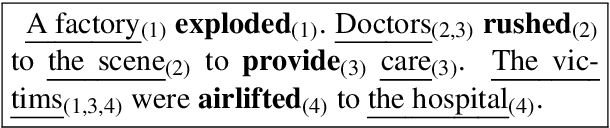

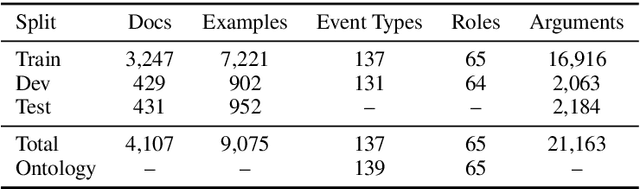

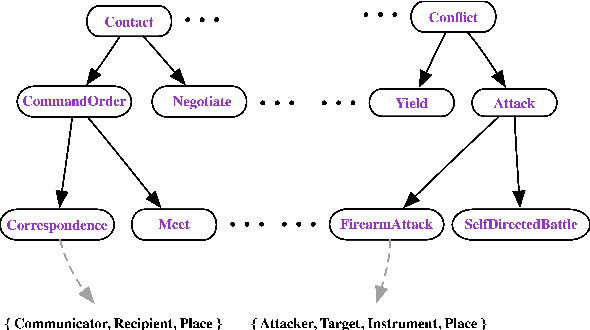

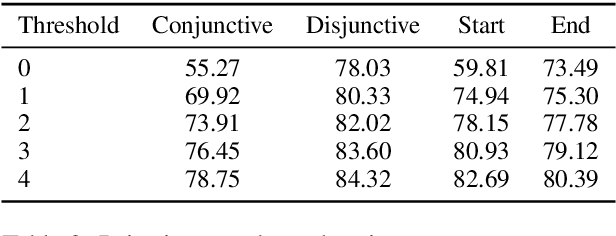

Multi-Sentence Argument Linking

Nov 09, 2019

Abstract: We introduce a dataset with annotated Roles Across Multiple Sentences (RAMS), consisting of over 9,000 annotated events. This enables the development of a novel span-based labeling framework that operates at the document level, which connects related ideas in sentence-level semantic role labeling and coreference resolution. We achieve 68.1 F1 on RAMS when given argument span boundaries and 73.2 F1 when also given gold event types. We additionally illustrate the applicability of the approach to the slot filling task in the Gun Violence Database.

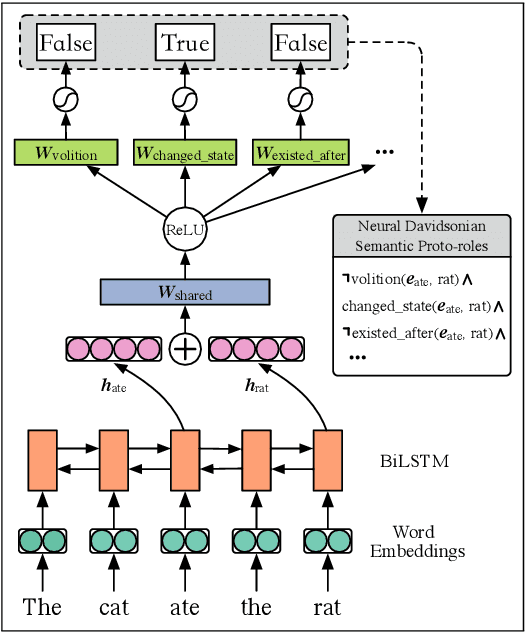

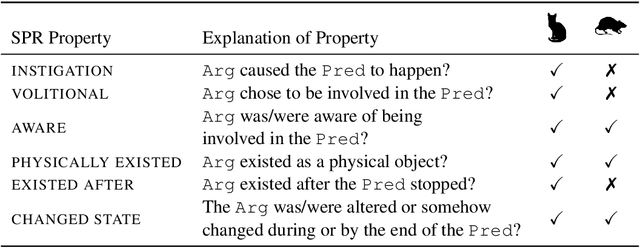

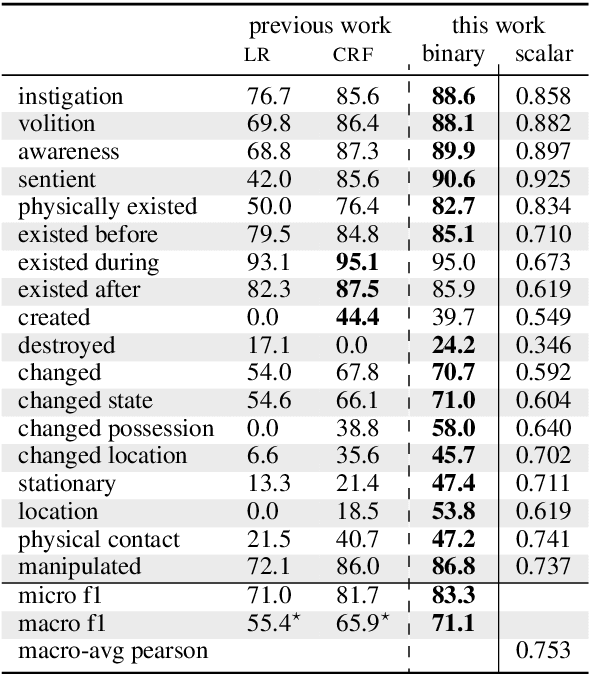

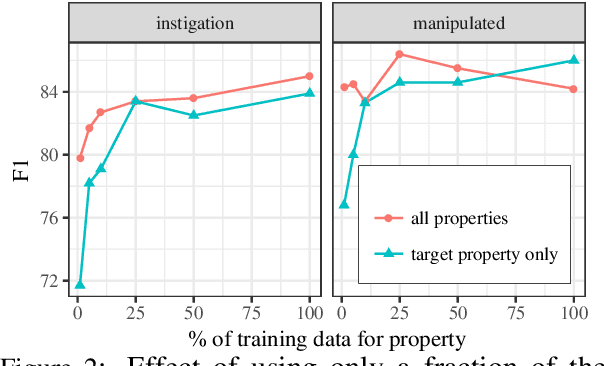

Neural-Davidsonian Semantic Proto-role Labeling

Oct 24, 2018

Abstract: We present a model for semantic proto-role labeling (SPRL) using an adapted bidirectional LSTM encoding strategy that we call "Neural-Davidsonian": predicate-argument structure is represented as pairs of hidden states corresponding to predicate and argument head tokens of the input sequence. We demonstrate: (1) state-of-the-art results in SPRL, and (2) that our network naturally shares parameters between attributes, allowing for learning new attribute types with limited added supervision.
