Ruxin Zheng

Deep Frequency Attention Networks for Single Snapshot Sparse Array Interpolation

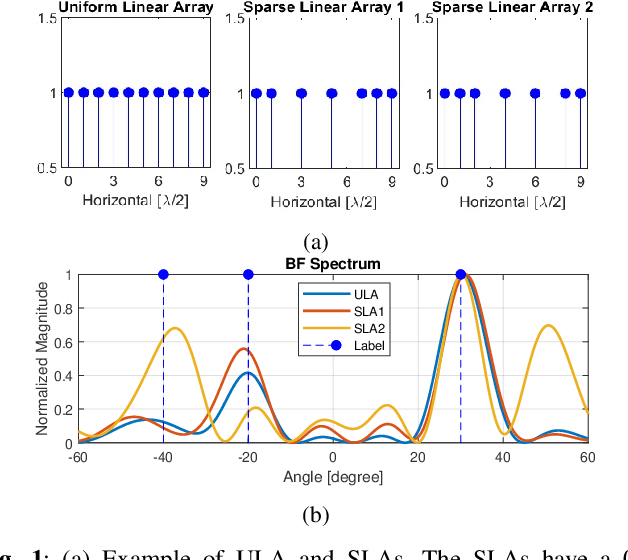

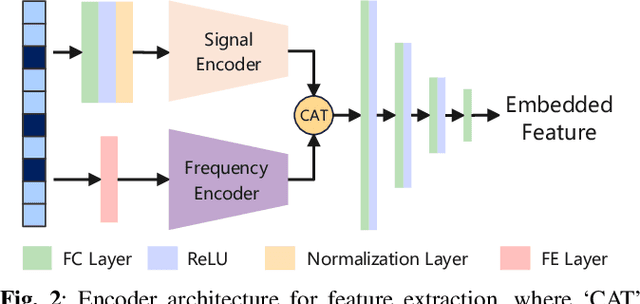

Mar 07, 2025

Abstract: Sparse arrays have been widely exploited in radar systems because of their advantages in achieving large array aperture at low hardware cost, while significantly reducing mutual coupling. However, sparse arrays suffer from high sidelobes which may lead to false detections. Missing elements in sparse arrays can be interpolated using the sparse array measurements. In snapshot-limited scenarios, such as automotive radar, it is challenging to utilize difference coarrays which require a large number of snapshots to construct a covariance matrix for interpolation. For single snapshot sparse array interpolation, traditional model-based methods, while effective, require expert knowledge for hyperparameter tuning, lack task-specific adaptability, and incur high computational costs. In this paper, we propose a novel deep learning-based single snapshot sparse array interpolation network that addresses these challenges by leveraging a frequency-domain attention mechanism. The proposed approach transforms the sparse signal into the frequency domain, where the attention mechanism focuses on key spectral regions, enabling improved interpolation of missing elements even in low signal-to-noise ratio (SNR) conditions. By minimizing computational costs and enhancing interpolation accuracy, the proposed method demonstrates superior performance compared to traditional approaches, making it well-suited for automotive radar applications.
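As a rough illustration of what "transforming the sparse signal into the frequency domain" can look like, the following minimal sketch zero-fills the missing elements of a single noise-free snapshot and takes an FFT. It is not the network from the paper: the element layout `positions`, the aperture size, the FFT length, and the helper name `zero_filled_spectrum` are all hypothetical choices made for this example.

```python
import numpy as np

def zero_filled_spectrum(snapshot, element_positions, aperture, n_fft=256):
    """Place sparse-array samples on the full grid (zeros at missing
    elements) and return the spatial spectrum via an FFT."""
    full = np.zeros(aperture, dtype=complex)
    full[element_positions] = snapshot
    return np.fft.fft(full, n=n_fft)

# Hypothetical setup: 7 active elements on a 16-element grid.
aperture = 16
positions = np.array([0, 1, 4, 6, 9, 13, 15])
n_fft = 256

# One noise-free target whose spatial frequency falls exactly on FFT bin 40.
target_bin = 40
spatial_freq = target_bin / n_fft  # cycles per element spacing
snapshot = np.exp(2j * np.pi * spatial_freq * positions)

spectrum = zero_filled_spectrum(snapshot, positions, aperture, n_fft)
peak_bin = int(np.argmax(np.abs(spectrum)))
print("peak bin:", peak_bin)
```

In this noise-free, single-target case the spectrum still peaks at the target's bin, but the zeros at the missing elements raise the sidelobes around it, which is the effect that interpolation of the missing elements aims to reduce.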

Advancing Single-Snapshot DOA Estimation with Siamese Neural Networks for Sparse Linear Arrays

Jan 13, 2025

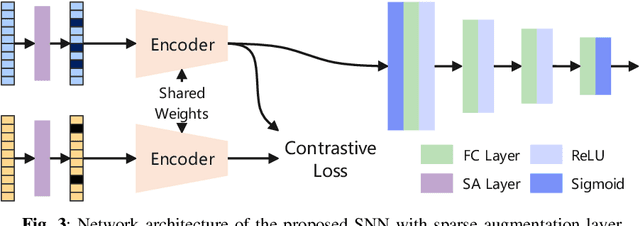

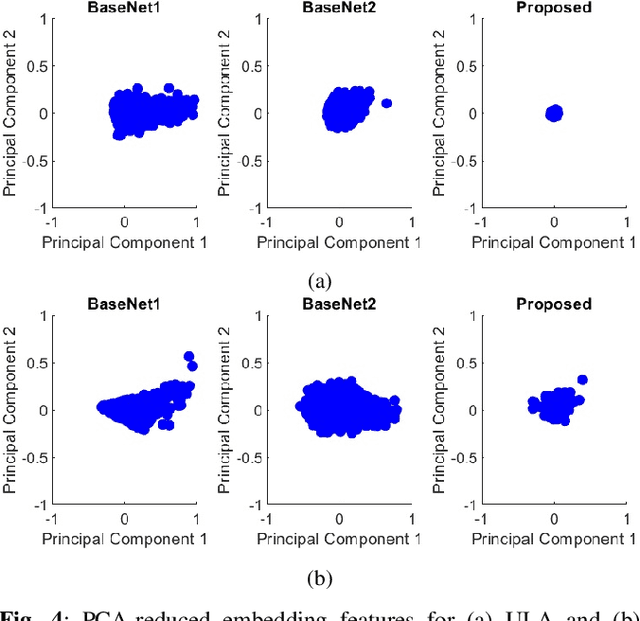

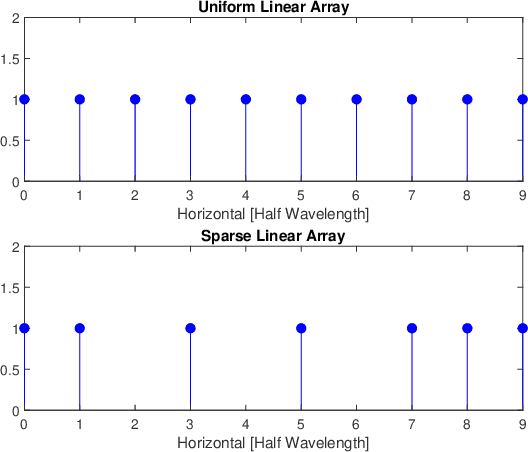

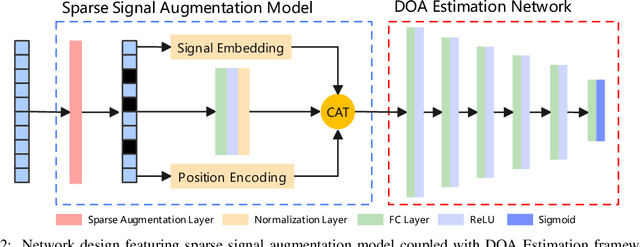

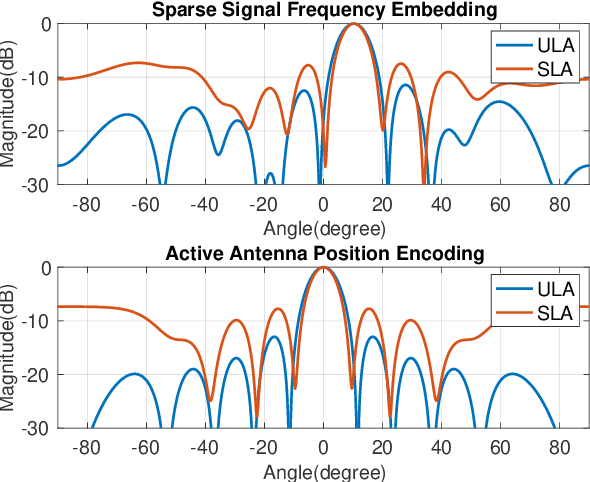

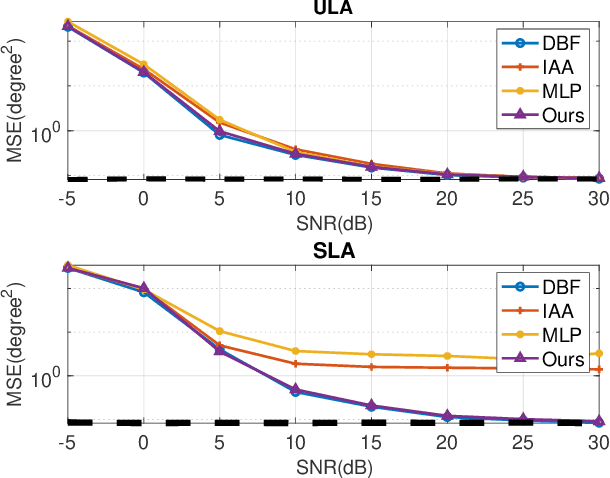

Abstract:Single-snapshot signal processing in sparse linear arrays has become increasingly vital, particularly in dynamic environments like automotive radar systems, where only limited snapshots are available. These arrays are often utilized either to cut manufacturing costs or result from unintended antenna failures, leading to challenges such as high sidelobe levels and compromised accuracy in direction-of-arrival (DOA) estimation. Despite deep learning's success in tasks such as DOA estimation, the need for extensive training data to increase target numbers or improve angular resolution poses significant challenges. In response, this paper presents a novel Siamese neural network (SNN) featuring a sparse augmentation layer, which enhances signal feature embedding and DOA estimation accuracy in sparse arrays. We demonstrate the enhanced DOA estimation performance of our approach through detailed feature analysis and performance evaluation. The code for this study is available at https://github.com/ruxinzh/SNNS_SLA.

Redefining Automotive Radar Imaging: A Domain-Informed 1D Deep Learning Approach for High-Resolution and Efficient Performance

Jun 11, 2024

Abstract: Millimeter-wave (mmWave) radars are indispensable for perception tasks of autonomous vehicles, thanks to their resilience in challenging weather conditions. Yet, their deployment is often limited by insufficient spatial resolution for precise semantic scene interpretation. Classical super-resolution techniques adapted from optical imaging inadequately address the distinct characteristics of radar signal data. In response, our study redefines radar imaging super-resolution as a one-dimensional (1D) signal super-resolution spectra estimation problem by harnessing the radar signal processing domain knowledge, introducing innovative data normalization and a domain-informed signal-to-noise ratio (SNR)-guided loss function. Our tailored deep learning network for automotive radar imaging exhibits remarkable scalability, parameter efficiency and fast inference speed, alongside enhanced performance in terms of radar imaging quality and resolution. Extensive testing confirms that our SR-SPECNet sets a new benchmark in producing high-resolution radar range-azimuth images, outperforming existing methods across varied antenna configurations and dataset sizes. Source code and new radar dataset will be made publicly available online.

Antenna Failure Resilience: Deep Learning-Enabled Robust DOA Estimation with Single Snapshot Sparse Arrays

May 05, 2024

Abstract: Recent advancements in Deep Learning (DL) for Direction of Arrival (DOA) estimation have highlighted its superiority over traditional methods, offering faster inference, enhanced super-resolution, and robust performance in low Signal-to-Noise Ratio (SNR) environments. Despite these advancements, existing research predominantly focuses on multi-snapshot scenarios, a limitation in the context of automotive radar systems, which demand high angular resolution and often rely on limited snapshots, sometimes as few as a single snapshot. Furthermore, the increasing interest in sparse arrays for automotive radar, owing to their cost-effectiveness and reduced antenna element coupling, presents additional challenges including susceptibility to random sensor failures. This paper introduces a pioneering DL framework featuring a sparse signal augmentation layer, meticulously crafted to bolster single snapshot DOA estimation across diverse sparse array setups and amidst antenna failures. To the best of our knowledge, this is the first work to tackle this issue. Our approach improves the adaptability of deep learning techniques to overcome the unique difficulties posed by sparse arrays with a single snapshot. We conduct thorough evaluations of our network's performance using simulated and real-world data, showcasing the efficacy and real-world viability of our proposed solution. The code and real-world dataset employed in this study are available at https://github.com/ruxinzh/Deep_RSA_DOA.

Interpretable and Efficient Beamforming-Based Deep Learning for Single Snapshot DOA Estimation

Sep 14, 2023

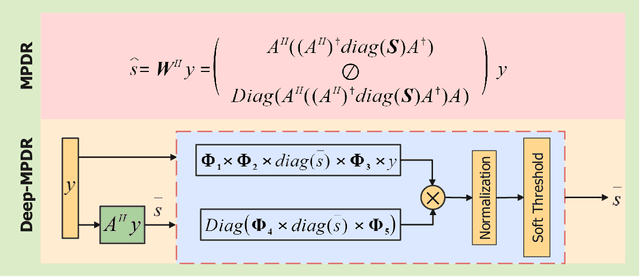

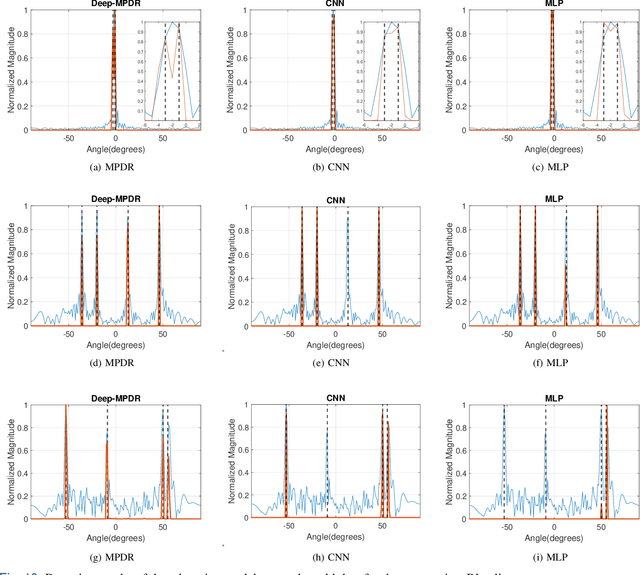

Abstract: We introduce an interpretable deep learning approach for direction of arrival (DOA) estimation with a single snapshot. Classical subspace-based methods like MUSIC and ESPRIT use spatial smoothing on uniform linear arrays for single snapshot DOA estimation but face drawbacks in reduced array aperture and inapplicability to sparse arrays. Single-snapshot methods such as compressive sensing and the iterative adaptive approach (IAA) encounter challenges with high computational costs and slow convergence, hampering real-time use. Recent deep learning DOA methods offer promising accuracy and speed. However, the practical deployment of deep networks is hindered by their black-box nature. To address this, we propose a deep-MPDR network translating minimum power distortionless response (MPDR)-type beamformer into deep learning, enhancing generalization and efficiency. Comprehensive experiments conducted using both simulated and real-world datasets substantiate its dominance in terms of inference time and accuracy in comparison to conventional methods. Moreover, it excels in terms of efficiency, generalizability, and interpretability when contrasted with other deep learning DOA estimation networks.
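For readers unfamiliar with the beamformer the abstract builds on, the sketch below computes classical MPDR weights, w = R⁻¹a / (aᴴR⁻¹a), from a single snapshot. It is not the deep-MPDR network itself. Because a single-snapshot covariance estimate is rank one, the example adds diagonal loading, which is an assumption of this sketch, as are the element layout, the half-wavelength spacing, the loading value, and the helper names `steering_vector` and `mpdr_weights`.

```python
import numpy as np

def steering_vector(positions, theta_deg, spacing=0.5):
    """Steering vector for elements at `positions` (in units of the
    element spacing, itself given in wavelengths) toward `theta_deg`."""
    phase = 2 * np.pi * spacing * positions * np.sin(np.deg2rad(theta_deg))
    return np.exp(1j * phase)

def mpdr_weights(snapshot, a, loading=1e-2):
    """MPDR weights w = R^{-1} a / (a^H R^{-1} a), with R estimated from
    one snapshot plus diagonal loading (assumed here for invertibility)."""
    n = snapshot.size
    R = np.outer(snapshot, snapshot.conj()) + loading * np.eye(n)
    R_inv_a = np.linalg.solve(R, a)
    return R_inv_a / (a.conj() @ R_inv_a)

# Hypothetical sparse layout and a single target at 10 degrees plus noise.
rng = np.random.default_rng(0)
positions = np.array([0, 1, 4, 6, 9, 13, 15])
noise = 0.1 * (rng.standard_normal(positions.size)
               + 1j * rng.standard_normal(positions.size))
snapshot = steering_vector(positions, 10.0) + noise

a_look = steering_vector(positions, 25.0)  # look direction away from target
w = mpdr_weights(snapshot, a_look)

# Distortionless constraint: unit gain in the look direction.
look_gain = np.vdot(w, a_look)  # equals w^H a
print("gain in look direction:", abs(look_gain))
```

The weights keep unit gain toward the look direction while minimizing output power from everything else in the snapshot; scanning the look direction over a grid of angles yields a spatial spectrum.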

Beyond Point Clouds: A Knowledge-Aided High Resolution Imaging Radar Deep Detector for Autonomous Driving

Nov 01, 2021

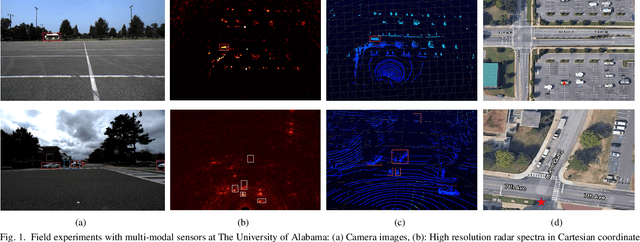

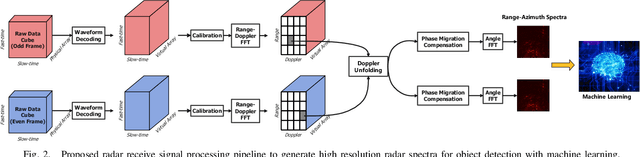

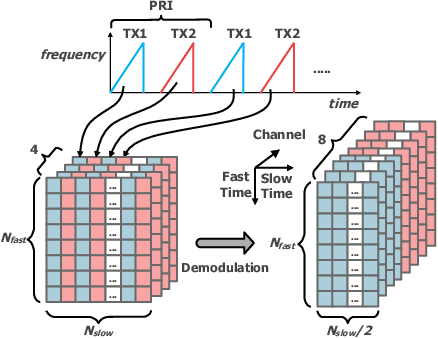

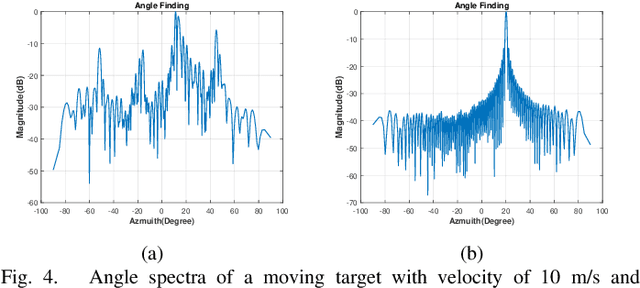

Abstract:The potentials of automotive radar for autonomous driving have not been fully exploited. We present a multi-input multi-output (MIMO) radar transmit and receive signal processing chain, a knowledge-aided approach exploiting the radar domain knowledge and signal structure, to generate high resolution radar range-azimuth spectra for object detection and classification using deep neural networks. To achieve waveform orthogonality among a large number of transmit antennas cascaded by four automotive radar transceivers, we propose a staggered time division multiplexing (TDM) scheme and velocity unfolding algorithm using both Chinese remainder theorem and overlapped array. Field experiments with multi-modal sensors were conducted at The University of Alabama. High resolution radar spectra were obtained and labeled using the camera and LiDAR recordings. Initial experiments show promising performance of object detection using an image-oriented deep neural network with an average precision of 96.1% at an intersection of union (IoU) of typically 0.5 on 2,000 radar frames.
