Ruohan Meng

Semantic Deep Hiding for Robust Unlearnable Examples

Jun 25, 2024

Abstract: Ensuring data privacy and protection has become paramount in the era of deep learning. Unlearnable examples are proposed to mislead deep learning models and protect data from unauthorized exploration by adding small perturbations to the data. However, such perturbations (e.g., noise, texture, color change) predominantly impact low-level features, making them vulnerable to common countermeasures. In contrast, semantic images with intricate shapes have a wealth of high-level features, making them more resilient to countermeasures and giving them the potential to produce robust unlearnable examples. In this paper, we propose a Deep Hiding (DH) scheme that adaptively hides semantic images enriched with high-level features. We employ an Invertible Neural Network (INN) to invisibly integrate predefined images, inherently hiding them with deceptive perturbations. To enhance data unlearnability, we introduce a Latent Feature Concentration module, designed to work with the INN, that regularizes the intra-class variance of these perturbations. To further boost the robustness of unlearnable examples, we design a Semantic Images Generation module that produces hidden semantic images. By utilizing similar semantic information, this module generates similar semantic images for samples within the same class, thereby enlarging the inter-class distance and narrowing the intra-class distance. Extensive experiments on CIFAR-10, CIFAR-100, and an ImageNet subset, against 18 countermeasures, reveal that our proposed method exhibits outstanding robustness for unlearnable examples, demonstrating its efficacy in preventing unauthorized data exploitation.
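
The abstract relies on an Invertible Neural Network for hiding. As a rough illustration of why such networks are invertible by construction, here is a minimal sketch of a single additive coupling block, a common building block of invertible networks. It is not the paper's DH architecture: the names `f`, `couple`, and `uncouple` are hypothetical, and `f` is an arbitrary stand-in for a learned sub-network.

```python
import math


def f(values):
    # Hypothetical stand-in for a learned sub-network. The block stays
    # invertible no matter what this function computes.
    return [math.tanh(v) for v in values]


def couple(x1, x2):
    # Forward pass: x1 passes through unchanged, x2 is shifted by f(x1).
    y2 = [b + s for b, s in zip(x2, f(x1))]
    return x1, y2


def uncouple(y1, y2):
    # Inverse pass: recompute the same shift from y1 and subtract it.
    x2 = [b - s for b, s in zip(y2, f(y1))]
    return y1, x2


x1, x2 = [0.2, -0.7], [1.5, 0.3]
y1, y2 = couple(x1, x2)
r1, r2 = uncouple(y1, y2)
assert r1 == x1
assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(r2, x2))
```

Because the inverse only needs to re-evaluate `f`, never to invert it, `f` can be arbitrarily complex; this is the property that lets one network serve for both embedding and recovery.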

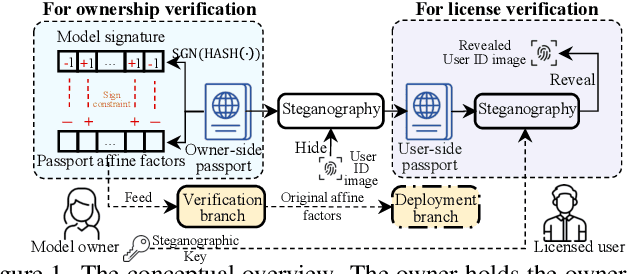

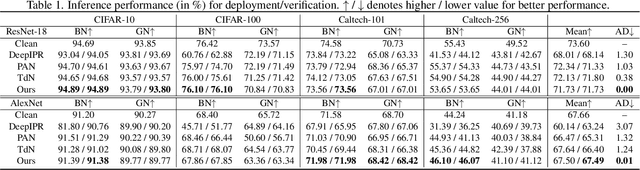

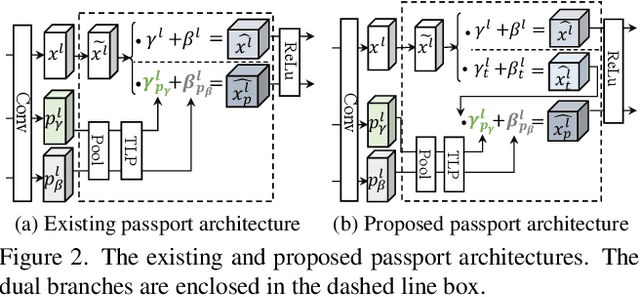

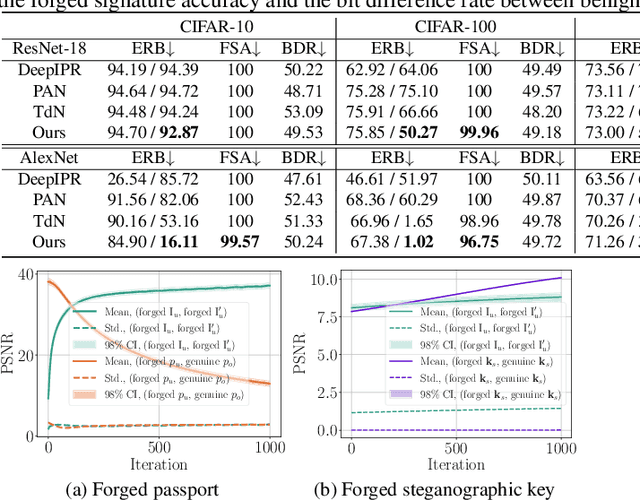

Steganographic Passport: An Owner and User Verifiable Credential for Deep Model IP Protection Without Retraining

Apr 03, 2024

Abstract: Ensuring the legal usage of deep models is crucial to promoting trustworthy, accountable, and responsible artificial intelligence innovation. Current passport-based methods that obfuscate model functionality for license-to-use and ownership verification suffer from capacity and quality constraints, as they require retraining the owner model for new users. They are also vulnerable to advanced Expanded Residual Block ambiguity attacks. We propose Steganographic Passport, which uses an invertible steganographic network to decouple license-to-use from ownership verification by hiding the user's identity images in the owner-side passport and recovering them from their respective user-side passports. An irreversible and collision-resistant hash function is used to avoid exposing the owner-side passport from the derived user-side passports and to increase the uniqueness of the model signature. To safeguard both the passport and the model's weights against advanced ambiguity attacks, an activation-level obfuscation is proposed for the verification branch of the owner's model. By jointly training the verification and deployment branches, their weights become tightly coupled. The proposed method supports agile licensing of deep models by providing strong ownership proof and license accountability without requiring a separate model retraining for the admission of every new user. Experimental results show that our Steganographic Passport outperforms other passport-based deep model protection methods in robustness against various known attacks.
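
The abstract does not specify how the hash function is applied, so the following is only a sketch of the general idea: a one-way, collision-resistant hash lets a per-user value be derived from an owner-side secret without revealing that secret. The function `derive_user_digest`, its inputs, and the choice of SHA-256 are assumptions made for illustration, not the paper's construction.

```python
import hashlib


def derive_user_digest(owner_passport: bytes, user_id: str) -> bytes:
    # Assumed construction: SHA-256 over a length-prefixed owner passport
    # followed by the user identifier. The length prefix keeps the two
    # fields from being confused with one another.
    h = hashlib.sha256()
    h.update(len(owner_passport).to_bytes(8, "big"))
    h.update(owner_passport)
    h.update(user_id.encode("utf-8"))
    return h.digest()


owner = b"owner-side passport bytes (placeholder)"
alice = derive_user_digest(owner, "alice")
bob = derive_user_digest(owner, "bob")
assert len(alice) == 32
assert alice != bob
assert alice == derive_user_digest(owner, "alice")
```

The derivation is deterministic, so the owner can recompute any user's digest, while a user holding only a digest cannot feasibly recover the owner-side input.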

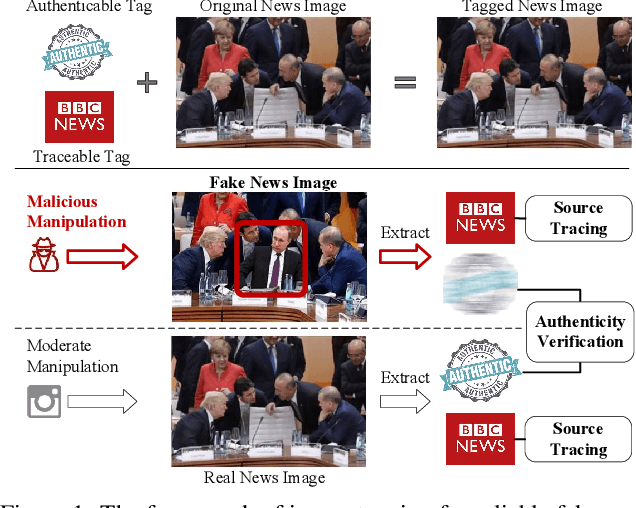

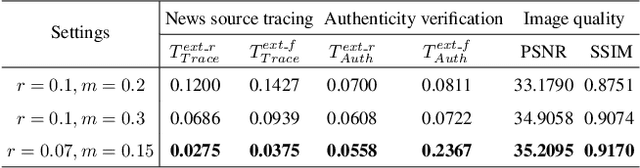

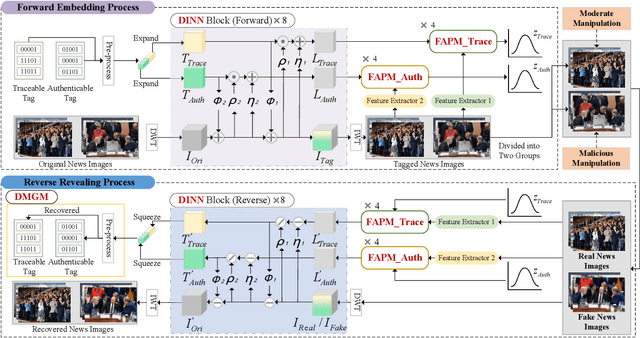

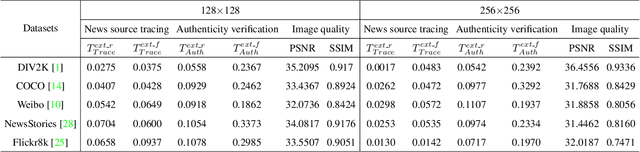

Traceable and Authenticable Image Tagging for Fake News Detection

Nov 20, 2022

Abstract: To prevent fake news images from misleading the public, it is desirable not only to verify the authenticity of news images but also to trace the source of fake news, so as to provide a complete forensic chain for reliable fake news detection. To simultaneously achieve the goals of authenticity verification and source tracing, we propose a traceable and authenticable image tagging approach based on a Decoupled Invertible Neural Network (DINN). The designed DINN can simultaneously embed the dual-tags, i.e., the authenticable tag and the traceable tag, into each news image before publishing, and then separately extract them for authenticity verification and source tracing. Moreover, to improve the accuracy of dual-tags extraction, we design a parallel Feature Aware Projection Model (FAPM) to help the DINN preserve essential tag information. In addition, we define a Distance Metric-Guided Module (DMGM) that learns asymmetric one-class representations to enable the dual-tags to achieve different robustness performances under malicious manipulations. Extensive experiments on diverse datasets and unseen manipulations demonstrate that the proposed tagging approach achieves excellent performance in both authenticity verification and source tracing for reliable fake news detection and outperforms prior work.

Continual Learning for Steganalysis

Sep 03, 2022

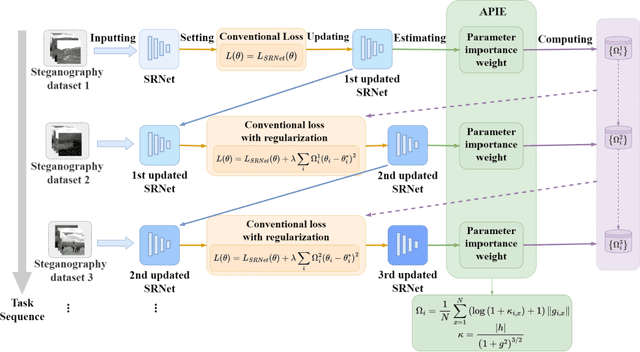

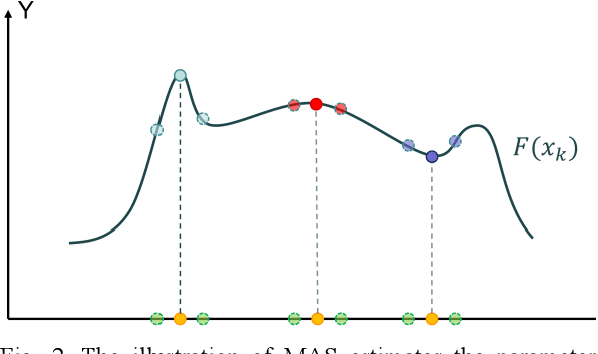

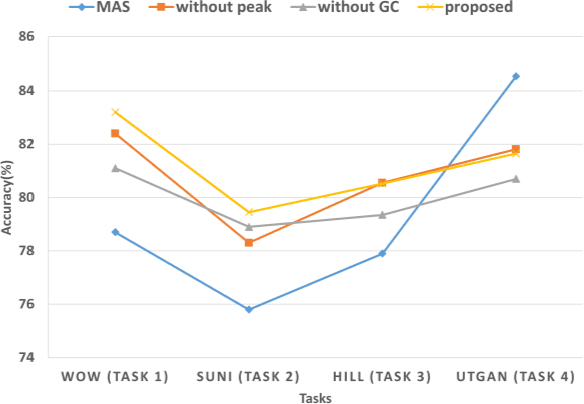

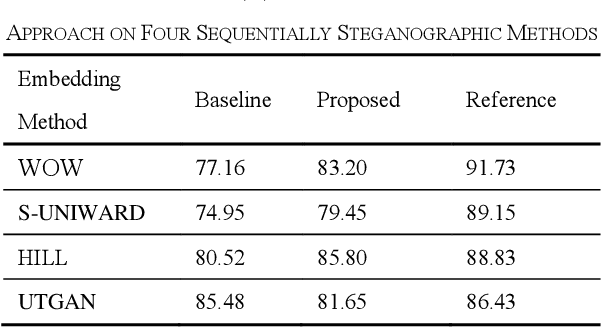

Abstract: To detect existing steganographic algorithms, recent steganalysis methods usually train a Convolutional Neural Network (CNN) model on a dataset consisting of corresponding paired cover/stego-images. However, it is inefficient and impractical for those steganalysis tools to completely retrain the CNN model to make it effective against both the existing steganographic algorithms and a newly emerging steganographic algorithm. Thus, existing steganalysis models usually lack dynamic extensibility for new steganographic algorithms, which limits their application in real-world scenarios. To address this issue, we propose an accurate parameter importance estimation (APIE)-based continual learning scheme for steganalysis. In this scheme, when a steganalysis model is trained on the new image dataset generated by the new steganographic algorithm, its network parameters are effectively and efficiently updated with sufficient consideration of their importance evaluated in the previous training process. This approach can guide the steganalysis model to learn the patterns of the new steganographic algorithm without significantly degrading the detectability against the previous steganographic algorithms. Experimental results demonstrate that the proposed scheme has promising extensibility for newly emerging steganographic algorithms.
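
The abstract does not give the APIE formula, so the sketch below shows only the generic pattern used by importance-based continual learning: a quadratic penalty that discourages changes to parameters judged important for earlier tasks. The function names, the per-parameter `importance` weights, and the strength `lam` are assumptions for illustration, not the paper's method.

```python
def importance_penalty(params, old_params, importance, lam):
    # 0.5 * lam * sum_i importance_i * (theta_i - theta_old_i)^2
    return 0.5 * lam * sum(
        w * (p - q) ** 2 for p, q, w in zip(params, old_params, importance)
    )


def importance_penalty_grad(params, old_params, importance, lam):
    # Gradient of the penalty with respect to each current parameter.
    return [lam * w * (p - q) for p, q, w in zip(params, old_params, importance)]


theta = [1.0, 2.0]        # parameters while training on the new algorithm
theta_old = [0.0, 2.0]    # parameters after training on earlier algorithms
omega = [2.0, 5.0]        # assumed importance estimates from earlier training
assert importance_penalty(theta, theta_old, omega, lam=0.5) == 0.5
assert importance_penalty_grad(theta, theta_old, omega, lam=0.5) == [1.0, 0.0]
```

Adding this penalty to the loss on the new dataset pulls important parameters back toward their earlier values, while parameters with low importance remain free to adapt.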
