Chaohui Xu

CHIP: Chameleon Hash-based Irreversible Passport for Robust Deep Model Ownership Verification and Active Usage Control

May 30, 2025

Abstract: The pervasiveness of large-scale Deep Neural Networks (DNNs) and their enormous training costs make their intellectual property (IP) protection of paramount importance. Recently introduced passport-based methods attempt to steer DNN watermarking towards strengthening ownership verification against ambiguity attacks by modulating the affine parameters of normalization layers. Unfortunately, neither watermarking nor passport-based methods provide holistic protection with robust ownership proof, high fidelity, active usage authorization, and user traceability for both models distributed for offline access and multi-user Machine-Learning-as-a-Service (MLaaS) cloud models. In this paper, we propose a Chameleon Hash-based Irreversible Passport (CHIP) protection framework that utilizes the cryptographic chameleon hash function to achieve all these goals. The collision-resistant property of the chameleon hash allows for a strong model ownership claim upon IP infringement and liable user traceability, while the trapdoor-collision property enables hashing of multiple user passports and licensee certificates to the same immutable signature to realize active usage control. Using the owner passport as an oracle, multiple user-specific triplets, each containing a passport-aware user model, a user passport, and a licensee certificate, can be created for secure offline distribution. The watermarked master model can also be deployed for MLaaS, with usage permission verifiable by the provision of any trapdoor-colliding user passport. CHIP is extensively evaluated on four datasets and two architectures to demonstrate its protection versatility and robustness. Our code is released at https://github.com/Dshm212/CHIP.
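The two chameleon hash properties named in the abstract can be illustrated with a textbook discrete-log construction. This is only a minimal sketch under stated assumptions: the abstract does not say which chameleon hash CHIP uses, so the group parameters `P`, `Q`, `G` (toy-sized and insecure) and the helper names `keygen`, `digest`, `chameleon_hash`, and `find_collision` are all hypothetical and are not taken from the CHIP code base.

```python
import hashlib

# Toy group parameters (assumption, far too small for real use):
# P = 2*Q + 1 is a safe prime and G generates the subgroup of prime order Q.
P, Q, G = 2039, 1019, 4


def keygen(secret: int) -> tuple[int, int]:
    """Return (trapdoor, public_key) with public_key = G^trapdoor mod P."""
    trapdoor = secret % Q
    if trapdoor == 0:
        raise ValueError("trapdoor must be non-zero modulo Q")
    return trapdoor, pow(G, trapdoor, P)


def digest(message: bytes) -> int:
    """Map an arbitrary message to an exponent in Z_Q."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % Q


def chameleon_hash(public_key: int, message: bytes, r: int) -> int:
    """CH(m, r) = G^m * public_key^r mod P."""
    return (pow(G, digest(message), P) * pow(public_key, r, P)) % P


def find_collision(trapdoor: int, message: bytes, r: int, new_message: bytes) -> int:
    """Use the trapdoor to find r' such that CH(new_message, r') == CH(message, r).

    Solves m + x*r = m' + x*r' (mod Q) for r'.
    """
    m, m_new = digest(message), digest(new_message)
    return (r + (m - m_new) * pow(trapdoor, -1, Q)) % Q


# Hypothetical usage: the trapdoor holder makes two different messages
# hash to the same value.
trapdoor, public_key = keygen(123)
signature = chameleon_hash(public_key, b"owner passport", 77)
r_user = find_collision(trapdoor, b"owner passport", 77, b"user passport A")
assert chameleon_hash(public_key, b"user passport A", r_user) == signature
```

Without the trapdoor, finding such an `r_user` amounts to solving a discrete logarithm in a properly sized group, which is the collision resistance the abstract relies on for ownership claims. With the trapdoor, the holder can map any number of distinct messages to one fixed hash value, which is the trapdoor-collision property the abstract uses for licensing multiple users.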

BadHMP: Backdoor Attack against Human Motion Prediction

Sep 29, 2024

Abstract: Precise future human motion prediction over subsecond horizons from past observations is crucial for various safety-critical applications. To date, only one study has examined the vulnerability of human motion prediction to evasion attacks. In this paper, we propose BadHMP, the first backdoor attack that specifically targets human motion prediction. Our approach involves generating poisoned training samples by embedding a localized backdoor trigger in one arm of the skeleton, causing selected joints to remain relatively still or follow a predefined motion in historical time steps. Subsequently, the future sequences are globally modified to the target sequences, and the entire training dataset is traversed to select the most suitable samples for poisoning. Our carefully designed backdoor triggers and targets guarantee the smoothness and naturalness of the poisoned samples, making them stealthy enough to evade detection by the model trainer while keeping the poisoned model unobtrusive in terms of prediction fidelity to untainted sequences. The target sequences can be successfully activated by the designed input sequences even with a low poisoned sample injection ratio. Experimental results on two datasets (Human3.6M and CMU-Mocap) and two network architectures (LTD and HRI) demonstrate the high fidelity, effectiveness, and stealthiness of BadHMP. The robustness of our attack against a fine-tuning defense is also verified.

Steganographic Passport: An Owner and User Verifiable Credential for Deep Model IP Protection Without Retraining

Apr 03, 2024

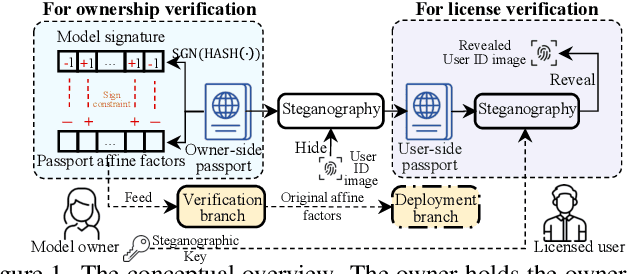

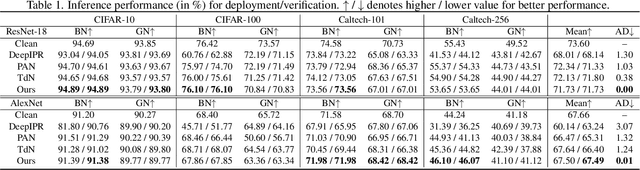

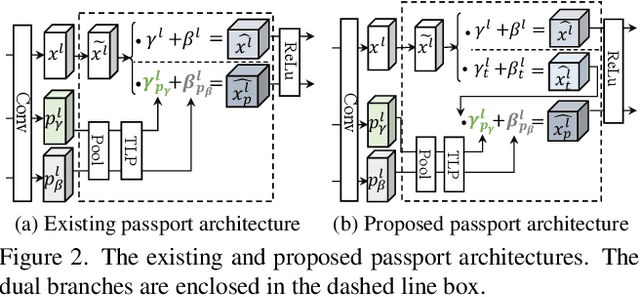

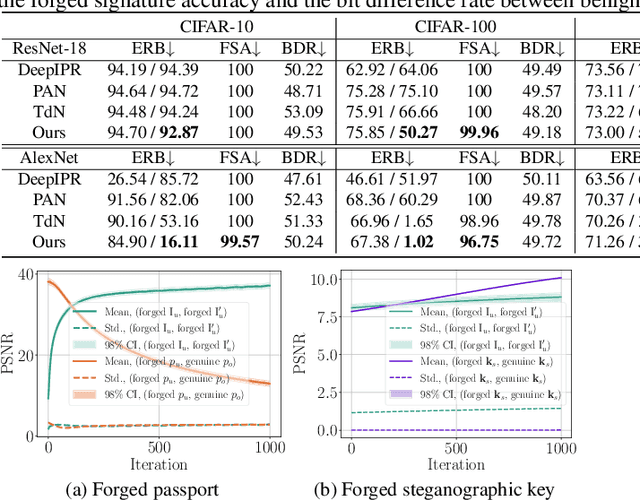

Abstract: Ensuring the legal usage of deep models is crucial to promoting trustable, accountable, and responsible artificial intelligence innovation. Current passport-based methods that obfuscate model functionality for license-to-use and ownership verification suffer from capacity and quality constraints, as they require retraining the owner model for new users. They are also vulnerable to advanced Expanded Residual Block ambiguity attacks. We propose Steganographic Passport, which uses an invertible steganographic network to decouple license-to-use from ownership verification by hiding the users' identity images in the owner-side passport and recovering them from their respective user-side passports. An irreversible and collision-resistant hash function is used to avoid exposing the owner-side passport through the derived user-side passports and to increase the uniqueness of the model signature. To safeguard both the passport and the model's weights against advanced ambiguity attacks, an activation-level obfuscation is proposed for the verification branch of the owner's model. By jointly training the verification and deployment branches, their weights become tightly coupled. The proposed method supports agile licensing of deep models by providing a strong ownership proof and license accountability without requiring a separate model retraining for the admission of every new user. Experimental results show that our Steganographic Passport outperforms other passport-based deep model protection methods in robustness against various known attacks.
