Runyuan Ma

OPV: Outcome-based Process Verifier for Efficient Long Chain-of-Thought Verification

Dec 11, 2025Abstract:Large language models (LLMs) have achieved significant progress in solving complex reasoning tasks by Reinforcement Learning with Verifiable Rewards (RLVR). This advancement is also inseparable from the oversight automated by reliable verifiers. However, current outcome-based verifiers (OVs) are unable to inspect the unreliable intermediate steps in the long reasoning chains of thought (CoTs). Meanwhile, current process-based verifiers (PVs) have difficulties in reliably detecting errors in the complex long CoTs, limited by the scarcity of high-quality annotations due to the prohibitive costs of human annotations. Therefore, we propose the Outcome-based Process Verifier (OPV), which verifies the rationale process of summarized outcomes from long CoTs to achieve both accurate and efficient verification and enable large-scale annotation. To empower the proposed verifier, we adopt an iterative active learning framework with expert annotations to progressively improve the verification capability of OPV with fewer annotation costs. Specifically, in each iteration, the most uncertain cases of the current best OPV are annotated and then subsequently used to train a new OPV through Rejection Fine-Tuning (RFT) and RLVR for the next round. Extensive experiments demonstrate OPV's superior performance and broad applicability. It achieves new state-of-the-art results on our held-out OPV-Bench, outperforming much larger open-source models such as Qwen3-Max-Preview with an F1 score of 83.1 compared to 76.3. Furthermore, OPV effectively detects false positives within synthetic dataset, closely align with expert assessment. When collaborating with policy models, OPV consistently yields performance gains, e.g., raising the accuracy of DeepSeek-R1-Distill-Qwen-32B from 55.2% to 73.3% on AIME2025 as the compute budget scales.

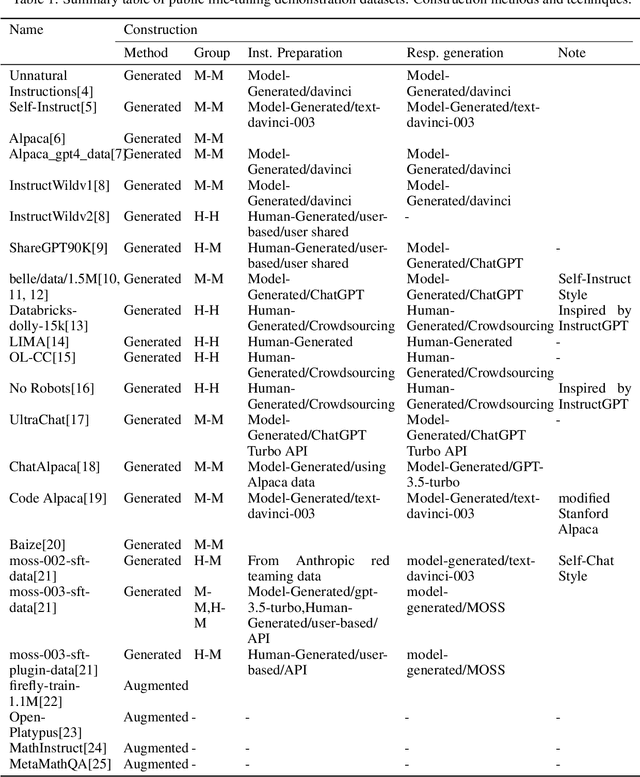

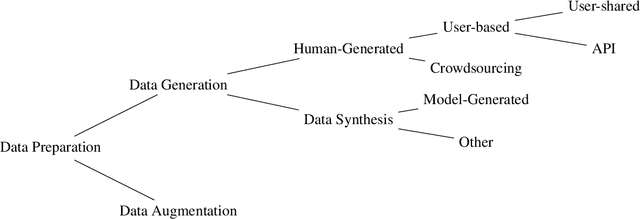

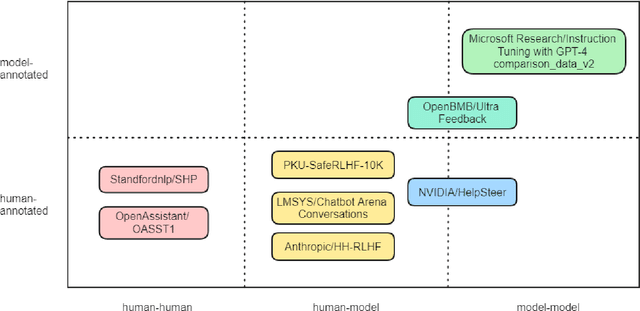

Investigating Public Fine-Tuning Datasets: A Complex Review of Current Practices from a Construction Perspective

Jul 11, 2024

Abstract:With the rapid development of the large model domain, research related to fine-tuning has concurrently seen significant advancement, given that fine-tuning is a constituent part of the training process for large-scale models. Data engineering plays a fundamental role in the training process of models, which includes data infrastructure, data processing, etc. Data during fine-tuning likewise forms the base for large models. In order to embrace the power and explore new possibilities of fine-tuning datasets, this paper reviews current public fine-tuning datasets from the perspective of data construction. An overview of public fine-tuning datasets from two sides: evolution and taxonomy, is provided in this review, aiming to chart the development trajectory. Construction techniques and methods for public fine-tuning datasets of Large Language Models (LLMs), including data generation and data augmentation among others, are detailed. This elaboration follows the aforementioned taxonomy, specifically across demonstration, comparison, and generalist categories. Additionally, a category tree of data generation techniques has been abstracted in our review to assist researchers in gaining a deeper understanding of fine-tuning datasets from the construction dimension. Our review also summarizes the construction features in different data preparation phases of current practices in this field, aiming to provide a comprehensive overview and inform future research. Fine-tuning dataset practices, encompassing various data modalities, are also discussed from a construction perspective in our review. Towards the end of the article, we offer insights and considerations regarding the future construction and developments of fine-tuning datasets.

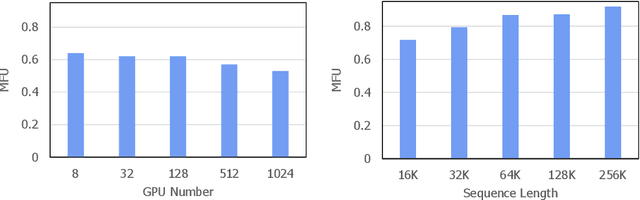

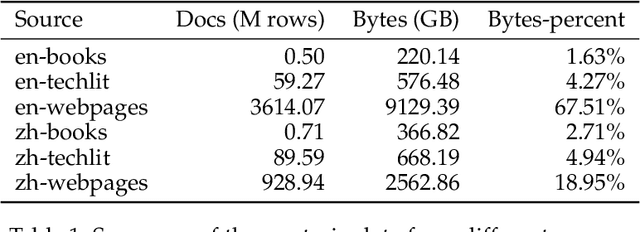

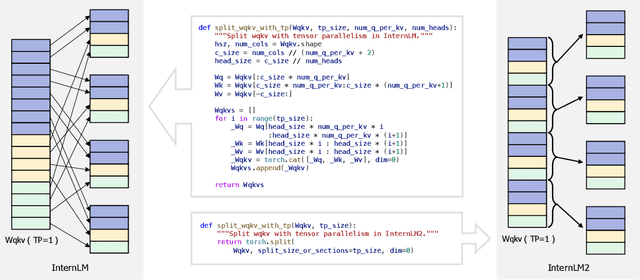

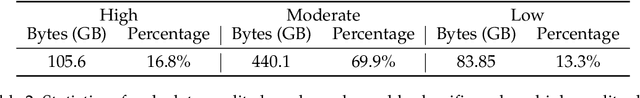

InternLM2 Technical Report

Mar 26, 2024

Abstract:The evolution of Large Language Models (LLMs) like ChatGPT and GPT-4 has sparked discussions on the advent of Artificial General Intelligence (AGI). However, replicating such advancements in open-source models has been challenging. This paper introduces InternLM2, an open-source LLM that outperforms its predecessors in comprehensive evaluations across 6 dimensions and 30 benchmarks, long-context modeling, and open-ended subjective evaluations through innovative pre-training and optimization techniques. The pre-training process of InternLM2 is meticulously detailed, highlighting the preparation of diverse data types including text, code, and long-context data. InternLM2 efficiently captures long-term dependencies, initially trained on 4k tokens before advancing to 32k tokens in pre-training and fine-tuning stages, exhibiting remarkable performance on the 200k ``Needle-in-a-Haystack" test. InternLM2 is further aligned using Supervised Fine-Tuning (SFT) and a novel Conditional Online Reinforcement Learning from Human Feedback (COOL RLHF) strategy that addresses conflicting human preferences and reward hacking. By releasing InternLM2 models in different training stages and model sizes, we provide the community with insights into the model's evolution.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge