Runjie Ma

GraphStorm: all-in-one graph machine learning framework for industry applications

Jun 10, 2024

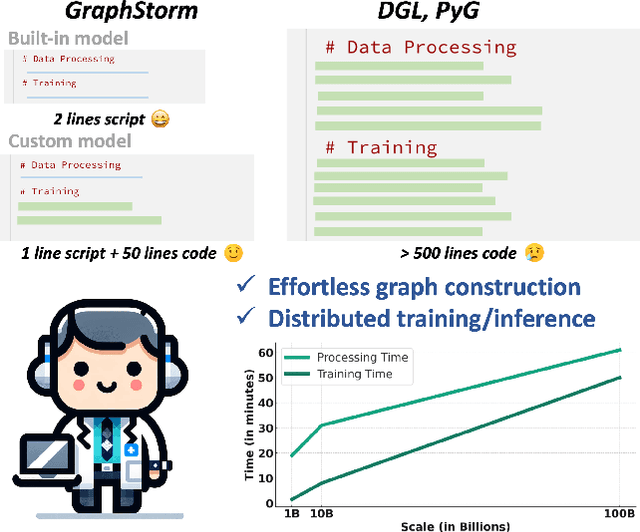

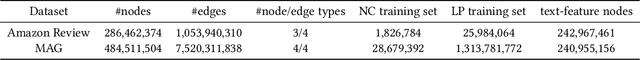

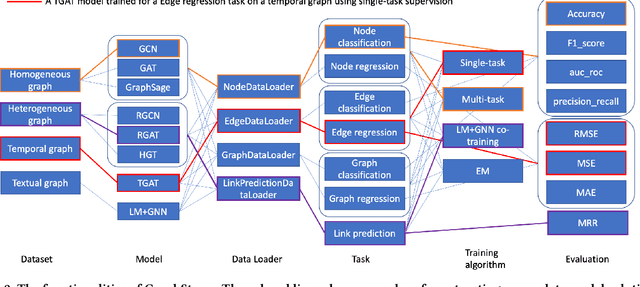

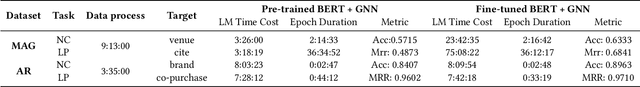

Abstract: Graph machine learning (GML) is effective in many business applications. However, making GML easy to use and applicable to industry applications with massive datasets remains challenging. We developed GraphStorm, which provides an end-to-end solution for scalable graph construction, graph model training and inference. GraphStorm has the following desirable properties: (a) Easy to use: it can perform graph construction and model training and inference with just a single command; (b) Expert-friendly: GraphStorm contains many advanced GML modeling techniques to handle complex graph data and improve model performance; (c) Scalable: every component in GraphStorm can operate on graphs with billions of nodes and can scale model training and inference to different hardware without changing any code. GraphStorm has been used and deployed for over a dozen billion-scale industry applications since its release in May 2023. It is open-sourced on GitHub: https://github.com/awslabs/graphstorm.

Network In Graph Neural Network

Nov 23, 2021

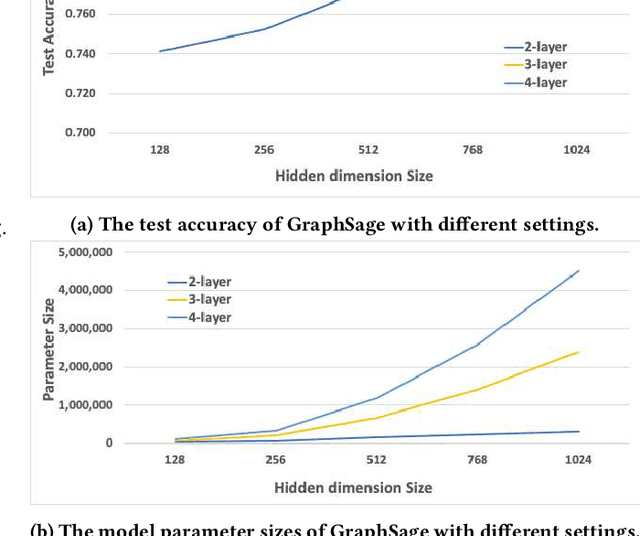

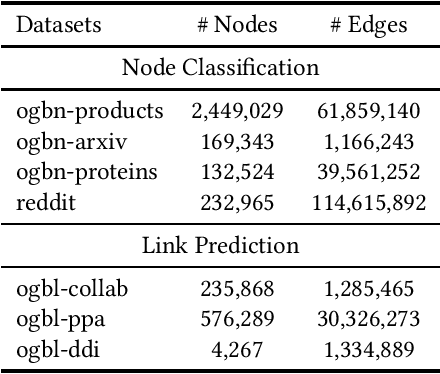

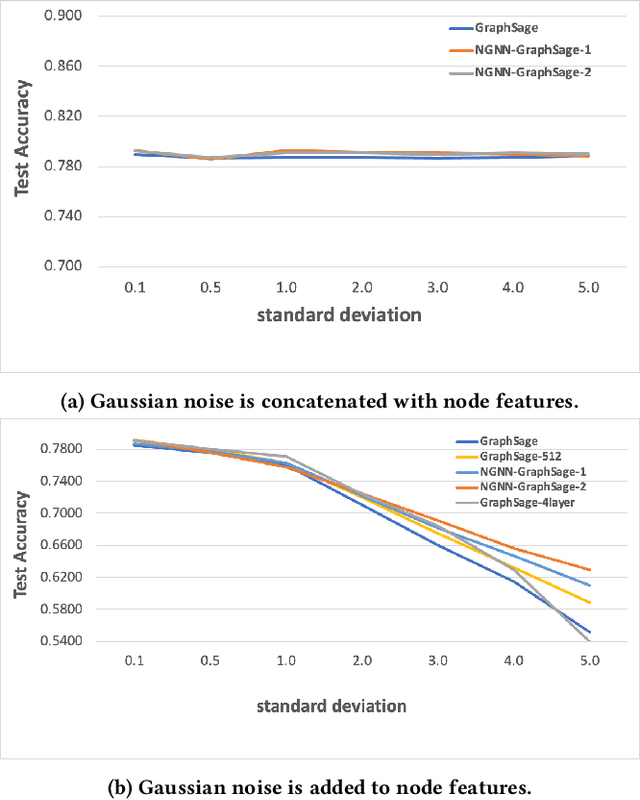

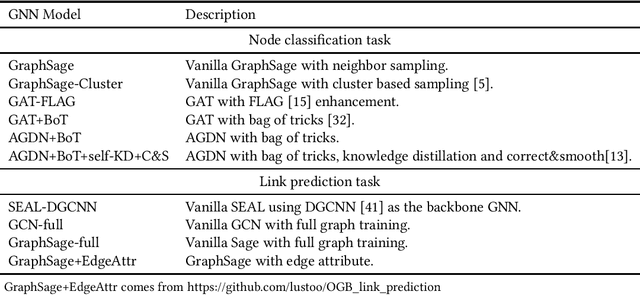

Abstract: Graph Neural Networks (GNNs) have shown success in learning from graph-structured data containing node/edge feature information, with applications to social networks, recommendation, fraud detection and knowledge graph reasoning. In this regard, various strategies have been proposed in the past to improve the expressiveness of GNNs. For example, one straightforward option is to simply increase the parameter size by either expanding the hidden dimension or increasing the number of GNN layers. However, wider hidden layers can easily lead to overfitting, and incrementally adding more GNN layers can potentially result in over-smoothing. In this paper, we present a model-agnostic methodology, namely Network In Graph Neural Network (NGNN), that allows arbitrary GNN models to increase their model capacity by making the model deeper. However, instead of adding or widening GNN layers, NGNN deepens a GNN model by inserting non-linear feedforward neural network layer(s) within each GNN layer. An analysis of NGNN as applied to a GraphSage base GNN on ogbn-products data demonstrates that it can keep the model stable against either node feature or graph structure perturbations. Furthermore, wide-ranging evaluation results on both node classification and link prediction tasks show that NGNN works reliably across diverse GNN architectures. For instance, it improves the test accuracy of GraphSage on ogbn-products by 1.6% and improves the hits@100 score of SEAL on ogbl-ppa by 7.08% and the hits@20 score of GraphSage+Edge-Attr on ogbl-ppi by 6.22%. At the time of this submission, it achieved two first places on the OGB link prediction leaderboard.
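The core idea in the abstract, deepening a GNN layer by inserting non-linear feedforward layers inside it rather than stacking more GNN layers, can be illustrated with a small sketch. This is not the authors' implementation: it assumes a simple mean aggregator over each node and its neighbors (loosely GraphSage-like), random weights, and that the extra feedforward layers are applied right after the layer's own transform. All function names here (`gnn_layer`, `ngnn_layer`, and the helpers) are hypothetical.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def relu(m):
    """Element-wise ReLU non-linearity."""
    return [[max(0.0, v) for v in row] for row in m]


def mean_aggregate(adj, h):
    """Average each node's own features with those of its neighbors."""
    dim = len(h[0])
    out = []
    for node, nbrs in enumerate(adj):
        group = [node] + nbrs
        out.append([sum(h[j][d] for j in group) / len(group) for d in range(dim)])
    return out


def init_weight(rows, cols, rng):
    """Small random weight matrix (illustrative initialization only)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def gnn_layer(adj, h, w):
    """Plain GNN layer: aggregate neighbors, linear transform, non-linearity."""
    return relu(matmul(mean_aggregate(adj, h), w))


def ngnn_layer(adj, h, w, inner_ws):
    """GNN layer with extra non-linear feedforward layers inserted inside it.

    The graph is touched once (one aggregation step); only the per-node
    transform becomes deeper, one feedforward layer per matrix in inner_ws.
    """
    out = gnn_layer(adj, h, w)
    for w_inner in inner_ws:
        out = relu(matmul(out, w_inner))
    return out


rng = random.Random(0)
adj = [[1], [0, 2], [1]]                      # path graph 0 - 1 - 2
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]      # 3 nodes, 2 input features
w = init_weight(2, 4, rng)                    # GNN layer weight: 2 -> 4
inner_ws = [init_weight(4, 4, rng)]           # one inserted feedforward layer
out = ngnn_layer(adj, h, w, inner_ws)
print(len(out), len(out[0]))                  # nodes x hidden dimension
```

With an empty `inner_ws` list, `ngnn_layer` reduces to the plain `gnn_layer`, which shows that the added depth comes entirely from the inserted feedforward layers and not from additional rounds of neighbor aggregation.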
