David Paul Wipf

On the Initialization of Graph Neural Networks

Dec 05, 2023

Abstract: Graph Neural Networks (GNNs) have displayed considerable promise in graph representation learning across various applications. The core learning process requires the initialization of model weight matrices within each GNN layer, which is typically accomplished via classic initialization methods such as Xavier initialization. However, these methods were originally motivated to stabilize the variance of hidden embeddings and gradients across layers of Feedforward Neural Networks (FNNs) and Convolutional Neural Networks (CNNs) to avoid vanishing gradients and maintain steady information flow. In the GNN context, however, classical initializations disregard the impact of the input graph structure and message passing on variance. In this paper, we analyze the variance of forward and backward propagation across GNN layers and show that the variance instability of GNN initializations comes from the combined effect of the activation function, hidden dimension, graph structure and message passing. To better account for these factors, we propose a new initialization method for Variance Instability Reduction within GNN Optimization (Virgo), which naturally tends to equate forward and backward variances across successive layers. We conduct comprehensive experiments on 15 datasets to show that Virgo can lead to superior model performance and more stable variance at initialization on node classification, link prediction and graph classification tasks. Code is available at https://github.com/LspongebobJH/virgo_icml2023.
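To illustrate the claim that graph structure and message passing change variance, here is a minimal sketch. It uses classic Xavier (Glorot) uniform initialization, **not** Virgo, whose formula is not given in this abstract. It compares a plain linear layer with the same layer followed by a sum aggregation over a node and its neighbors. The toy ring graph, the sum aggregator with a self-loop, and the absence of an activation are all assumptions made for illustration.

```python
import math
import random
import statistics


def xavier_uniform(fan_in, fan_out, rng):
    # Glorot/Xavier uniform: U(-a, a) with a = sqrt(6 / (fan_in + fan_out)),
    # which gives Var(w) = a^2 / 3 = 2 / (fan_in + fan_out).
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_out)] for _ in range(fan_in)]


def matmul(x, w):
    # Dense (n x k) @ (k x m) product in pure Python.
    return [[sum(xi[k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
            for xi in x]


def sum_aggregate(h, neighbors):
    # Assumed message passing: sum aggregation with a self-loop,
    # h'_v = h_v + sum_{u in N(v)} h_u
    return [[h[v][j] + sum(h[u][j] for u in neighbors[v]) for j in range(len(h[v]))]
            for v in range(len(h))]


def variance(mat):
    # Population variance over all entries of a matrix.
    return statistics.pvariance([x for row in mat for x in row])


rng = random.Random(0)
n, dim = 200, 64
# Hypothetical toy graph: a ring in which every node has 4 neighbors.
neighbors = [[(v + k) % n for k in (-2, -1, 1, 2)] for v in range(n)]
x = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]
w = xavier_uniform(dim, dim, rng)

hw = matmul(x, w)
h_mlp = hw                             # FNN-style layer: no graph involved
h_gnn = sum_aggregate(hw, neighbors)   # same weights, plus message passing

print(f"input variance:     {variance(x):.2f}")
print(f"linear variance:    {variance(h_mlp):.2f}")
print(f"GNN-layer variance: {variance(h_gnn):.2f}")
```

With these settings, Xavier keeps the linear layer's output variance close to the input variance. The aggregated output, however, should be roughly five times larger, because each node sums five (self plus four neighbors) embeddings that are independent given the weights. Degree-dependent scaling of this kind is the sort of effect that FNN-motivated initializations do not account for.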

Structured Graph Variational Autoencoders for Indoor Furniture Layout Generation

Apr 13, 2022

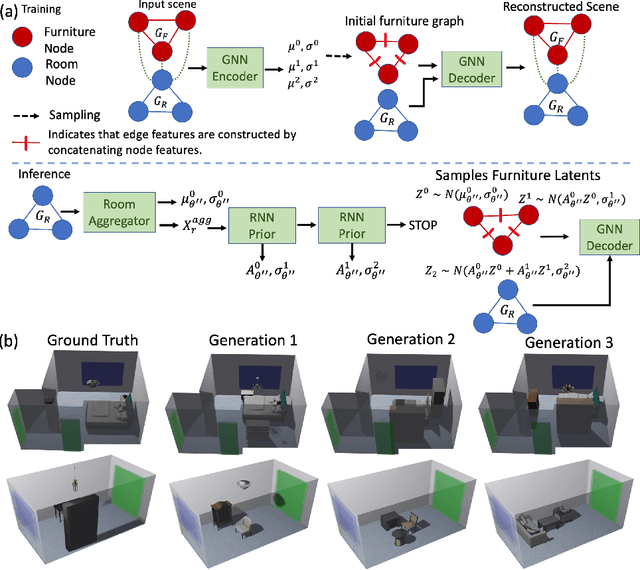

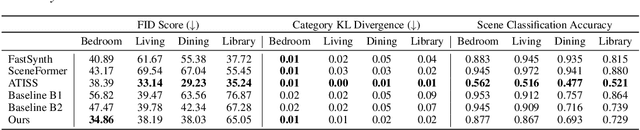

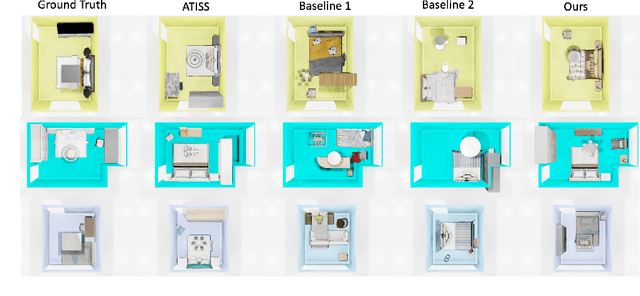

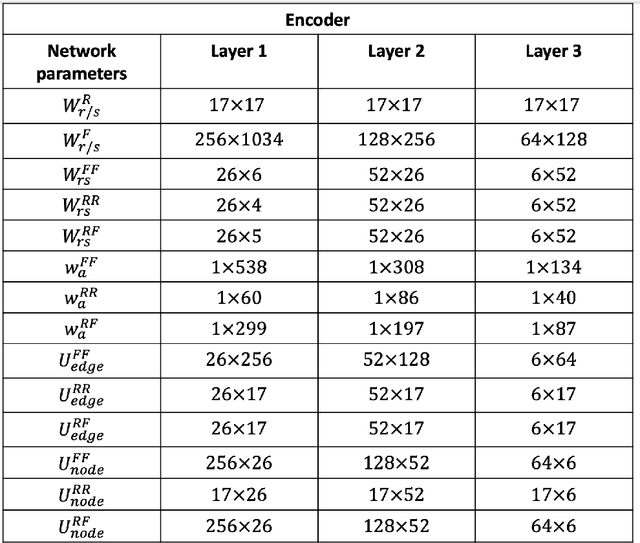

Abstract: We present a structured graph variational autoencoder for generating the layout of indoor 3D scenes. Given the room type (e.g., living room or library) and the room layout (e.g., room elements such as floor and walls), our architecture generates a collection of objects (e.g., furniture items such as sofa, table and chairs) that is consistent with the room type and layout. This is a challenging problem because the generated scene should satisfy multiple constraints, e.g., each object must lie inside the room and two objects cannot occupy the same volume. To address these challenges, we propose a deep generative model that encodes these relationships as soft constraints on an attributed graph (e.g., the nodes capture attributes of room and furniture elements, such as class, pose and size, and the edges capture geometric relationships such as relative orientation). The architecture consists of a graph encoder that maps the input graph to a structured latent space, and a graph decoder that generates a furniture graph, given a latent code and the room graph. The latent space is modeled with auto-regressive priors, which facilitates the generation of highly structured scenes. We also propose an efficient training procedure that combines matching and constrained learning. Experiments on the 3D-FRONT dataset show that our method produces scenes that are diverse and adapted to the room layout.
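The abstract names two constraints, containment in the room and non-overlap, but does not give the exact penalty terms. As an assumption for illustration only, the sketch below scores them with hinge-style soft penalties on hypothetical axis-aligned 2D floor footprints. The `Box` type, the weights, and the penalty form are invented here and are not the paper's formulation.

```python
from dataclasses import dataclass


@dataclass
class Box:
    # Hypothetical axis-aligned floor footprint (e.g. in meters).
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def overlap_area(a, b):
    # Intersection area of two axis-aligned boxes (0 if they are disjoint).
    w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    return w * h


def outside_room(obj, room):
    # Total distance by which the object's edges stick out of the room (0 if inside).
    return (max(0.0, room.x_min - obj.x_min) + max(0.0, room.y_min - obj.y_min)
            + max(0.0, obj.x_max - room.x_max) + max(0.0, obj.y_max - room.y_max))


def soft_constraint_penalty(objects, room, w_overlap=1.0, w_room=1.0):
    # Soft (penalized, not hard) version of "inside the room" and "no shared volume".
    penalty = sum(w_room * outside_room(o, room) for o in objects)
    for i in range(len(objects)):
        for j in range(i + 1, len(objects)):
            penalty += w_overlap * overlap_area(objects[i], objects[j])
    return penalty


room = Box(0.0, 0.0, 4.0, 3.0)
sofa = Box(0.5, 0.5, 2.5, 1.5)
table = Box(2.0, 1.0, 3.0, 2.0)   # overlaps the sofa by 0.5 x 0.5
chair = Box(3.5, 2.5, 4.5, 3.2)   # sticks out of the room by 0.5 and 0.2
print(round(soft_constraint_penalty([sofa, table, chair], room), 4))  # 0.95
```

A valid layout scores 0. Because the penalty grows smoothly with the amount of violation instead of rejecting the layout outright, it can be weighted against other objectives, which is what treating constraints as "soft" means here.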

Network In Graph Neural Network

Nov 23, 2021

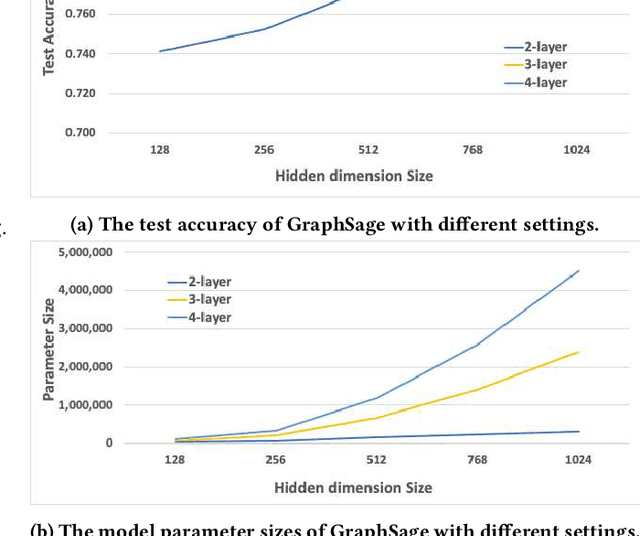

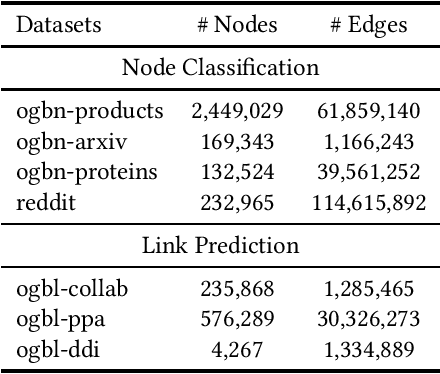

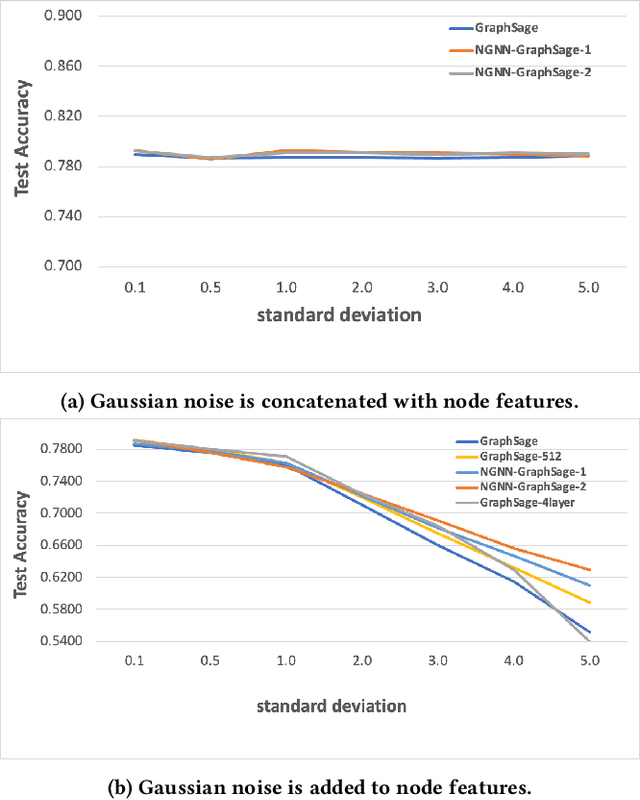

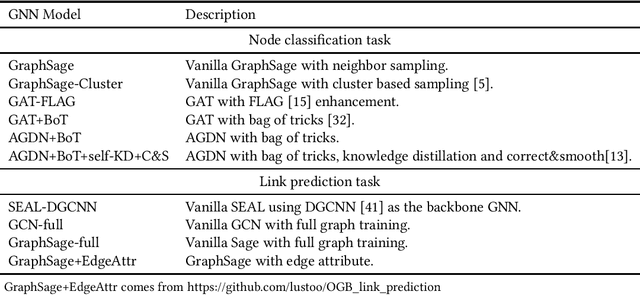

Abstract: Graph Neural Networks (GNNs) have shown success in learning from graph-structured data containing node/edge feature information, with applications to social networks, recommendation, fraud detection and knowledge graph reasoning. Various strategies have been proposed to improve the expressiveness of GNNs. For example, one straightforward option is to simply increase the parameter size by either expanding the hidden dimension or increasing the number of GNN layers. However, wider hidden layers can easily lead to overfitting, and incrementally adding more GNN layers can potentially result in over-smoothing. In this paper, we present a model-agnostic methodology, namely Network In Graph Neural Network (NGNN), that allows arbitrary GNN models to increase their model capacity by making the model deeper. Rather than adding or widening GNN layers, NGNN deepens a GNN model by inserting non-linear feedforward neural network layer(s) within each GNN layer. An analysis of NGNN as applied to a GraphSage base GNN on ogbn-products data demonstrates that it can keep the model stable against either node feature or graph structure perturbations. Furthermore, wide-ranging evaluation results on both node classification and link prediction tasks show that NGNN works reliably across diverse GNN architectures. For instance, it improves the test accuracy of GraphSage on ogbn-products by 1.6%, the hits@100 score of SEAL on ogbl-ppa by 7.08%, and the hits@20 score of GraphSage+Edge-Attr on ogbl-ppi by 6.22%. At the time of this submission, it had achieved two first places on the OGB link prediction leaderboard.
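The core NGNN idea, deepening a GNN layer with node-wise nonlinear feedforward layers instead of adding message-passing hops, can be sketched as follows. This is a minimal illustration and not the authors' implementation. It assumes a GraphSage-style mean aggregator over a node and its neighbors, ReLU activations and Glorot-uniform weights. The names `NGNNLayer` and `num_ffn` are hypothetical, and everything is pure Python so the example stays self-contained.

```python
import math
import random


def relu(m):
    return [[max(0.0, x) for x in row] for row in m]


def matmul(x, w):
    # Dense (n x k) @ (k x m) product in pure Python.
    return [[sum(xi[k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
            for xi in x]


def glorot_uniform(fan_in, fan_out, rng):
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_out)] for _ in range(fan_in)]


def mean_aggregate(h, neighbors):
    # Assumed GraphSage-style aggregation: mean over the node and its neighbors.
    out = []
    for v, nbrs in enumerate(neighbors):
        group = [v] + nbrs
        out.append([sum(h[u][j] for u in group) / len(group) for j in range(len(h[v]))])
    return out


class NGNNLayer:
    """One GNN layer with `num_ffn` extra nonlinear feedforward layers inside it."""

    def __init__(self, in_dim, hidden_dim, num_ffn, rng):
        self.w_gnn = glorot_uniform(in_dim, hidden_dim, rng)
        self.w_ffn = [glorot_uniform(hidden_dim, hidden_dim, rng) for _ in range(num_ffn)]

    def forward(self, h, neighbors):
        # Ordinary message-passing step: aggregate neighbors, transform, activate.
        h = relu(matmul(mean_aggregate(h, neighbors), self.w_gnn))
        # NGNN-style deepening: node-wise FFN layers add depth and capacity
        # without adding further aggregation hops (a source of over-smoothing).
        for w in self.w_ffn:
            h = relu(matmul(h, w))
        return h


rng = random.Random(0)
neighbors = [[1, 2], [0, 2], [0, 1, 3], [2]]   # toy 4-node graph
x = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(4)]
layer = NGNNLayer(in_dim=8, hidden_dim=16, num_ffn=2, rng=rng)
out = layer.forward(x, neighbors)
print(len(out), len(out[0]))  # 4 16
```

Setting `num_ffn=0` recovers a plain one-hop layer. Each extra FFN layer adds parameters and depth, but the receptive field stays at one hop per GNN layer. This is the trade-off the abstract contrasts with stacking more GNN layers.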
