Ruiqing Mao

Optimization of Layer Skipping and Frequency Scaling for Convolutional Neural Networks under Latency Constraint

Mar 31, 2025

Abstract: The energy consumption of Convolutional Neural Networks (CNNs) is a critical factor in deploying deep learning models on resource-limited equipment such as mobile devices and autonomous vehicles. We propose an approach that combines Proportional Layer Skipping (PLS) and Frequency Scaling (FS). Layer skipping reduces computational complexity by selectively bypassing network layers, whereas frequency scaling adjusts the processor frequency to optimize energy use under latency constraints. Experiments with PLS and FS on ResNet-152 with the CIFAR-10 dataset demonstrate significant reductions in computational demands and energy consumption with minimal accuracy loss. This study offers practical solutions for improving real-time processing in resource-limited settings and provides insights into balancing computational efficiency and model performance.
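
As a rough illustration of the frequency-scaling side of this trade-off, the sketch below picks the lowest processor frequency that still meets a latency deadline. It assumes a textbook dynamic-energy model in which energy grows as `kappa * cycles * f**2`; that model, the `kappa` constant, the cycle counts, and the function name are illustrative assumptions and are not taken from the paper.

```python
def min_energy_frequency(cycles, deadline_s, freqs_hz, kappa=1e-27):
    """Return (frequency, energy) for the slowest frequency meeting the deadline.

    Assumed model (hypothetical, for illustration only):
      latency = cycles / f
      energy  = kappa * cycles * f**2
    Under this model energy increases with f, so the slowest feasible
    frequency is also the cheapest one.
    """
    feasible = [f for f in freqs_hz if cycles / f <= deadline_s]
    if not feasible:
        return None  # no available frequency can meet the deadline
    f = min(feasible)
    return f, kappa * cycles * f ** 2


# Hypothetical workload: 2e9 cycles for the full network, 1.5 s deadline.
levels = [1.0e9, 1.5e9, 2.0e9]
full = min_energy_frequency(2.0e9, 1.5, levels)
# Skipping layers is modeled here simply as fewer cycles (half, as an example).
skipped = min_energy_frequency(1.0e9, 1.5, levels)
print(full, skipped)
```

In this toy setting, cutting the cycle count lets a lower frequency level meet the same deadline, which is the interaction between layer skipping and frequency scaling that the abstract describes.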

DiffCP: Ultra-Low Bit Collaborative Perception via Diffusion Model

Sep 29, 2024

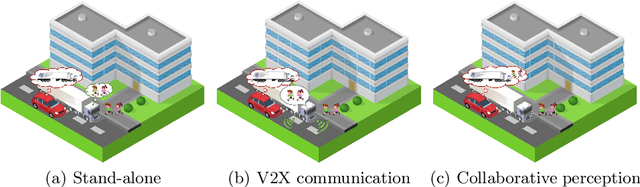

Abstract: Collaborative perception (CP) is emerging as a promising solution to the inherent limitations of stand-alone intelligence. However, current wireless communication systems are unable to support feature-level and raw-level collaborative algorithms due to their enormous bandwidth demands. In this paper, we propose DiffCP, a novel CP paradigm that utilizes a specialized diffusion model to efficiently compress the sensing information of collaborators. By incorporating both geometric and semantic conditions into the generative model, DiffCP enables feature-level collaboration with an ultra-low communication cost, advancing the practical implementation of CP systems. This paradigm can be seamlessly integrated into existing CP algorithms to enhance a wide range of downstream tasks. Through extensive experimentation, we investigate the trade-offs between communication, computation, and performance. Numerical results demonstrate that DiffCP can reduce communication costs 14.5-fold while maintaining the same performance as the state-of-the-art algorithm.

C-MASS: Combinatorial Mobility-Aware Sensor Scheduling for Collaborative Perception with Second-Order Topology Approximation

Jun 29, 2024

Abstract: Collaborative Perception (CP) is a promising solution to address occlusions in the traffic environment by sharing sensor data among collaborative vehicles (CoVs) via a vehicle-to-everything (V2X) network. With limited wireless bandwidth, CP necessitates task-oriented and receiver-aware sensor scheduling to prioritize important and complementary sensor data. However, due to vehicular mobility, it is challenging and costly to obtain the up-to-date perception topology, i.e., whether a combination of CoVs can jointly detect an object. In this paper, we propose a combinatorial mobility-aware sensor scheduling (C-MASS) framework for CP with minimal communication overhead. Specifically, detections are replayed with sensor data from individual CoVs and pairs of CoVs to maintain an empirical perception topology up to the second order, which approximately represents the complete perception topology. A hybrid greedy algorithm is then proposed to solve a variant of the budgeted maximum coverage problem with a worst-case performance guarantee. The C-MASS scheduling algorithm adapts the greedy algorithm by incorporating the topological uncertainty and the unexplored time of CoVs to balance exploration and exploitation, addressing the mobility challenge. Extensive numerical experiments demonstrate the near-optimality of the proposed C-MASS framework in both edge-assisted and distributed CP configurations. The weighted recall improvements over object-level CP are 5.8% and 4.2%, respectively. Compared to distance-based and area-based greedy heuristics, the gaps to the offline optimal solutions are reduced by up to 75% and 71%, respectively.
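
For readers unfamiliar with the budgeted maximum coverage problem mentioned above, the following is a minimal sketch of the textbook greedy heuristic: pick sets by marginal coverage per unit cost, then compare the result against the best single affordable set. It is not the paper's hybrid greedy or C-MASS algorithm; the vehicle names, object IDs, costs, and function name are hypothetical.

```python
def greedy_budgeted_coverage(sets, costs, budget):
    """Textbook greedy for budgeted maximum coverage (illustrative sketch).

    sets:   dict name -> set of covered items (e.g. objects a vehicle detects)
    costs:  dict name -> positive cost (e.g. bandwidth needed to share data)
    budget: total cost allowed
    Returns (chosen_names, covered_items).
    """
    chosen, covered, spent = [], set(), 0.0
    remaining = sorted(sets)  # sorted for deterministic tie-breaking
    while True:
        best, best_ratio = None, 0.0
        for name in remaining:
            if spent + costs[name] > budget:
                continue  # cannot afford this set any more
            ratio = len(sets[name] - covered) / costs[name]
            if ratio > best_ratio:
                best, best_ratio = name, ratio
        if best is None:
            break  # nothing affordable adds new coverage
        chosen.append(best)
        covered |= sets[best]
        spent += costs[best]
        remaining.remove(best)

    # Safeguard step: a single large set can beat the ratio-greedy result.
    singles = [n for n in sorted(sets) if costs[n] <= budget]
    if singles:
        top = max(singles, key=lambda n: len(sets[n]))
        if len(sets[top]) > len(covered):
            return [top], set(sets[top])
    return chosen, covered


# Hypothetical example: three vehicles, objects labeled 1..6.
views = {"v1": {1, 2}, "v2": {3, 4, 5}, "v3": {1, 2, 3, 4, 5, 6}}
price = {"v1": 1, "v2": 2, "v3": 4}
print(greedy_budgeted_coverage(views, price, budget=4))
print(greedy_budgeted_coverage(views, price, budget=3))
```

The comparison against the best single set is what gives the classical heuristic a constant-factor guarantee; ratio-greedy alone can be arbitrarily bad when one expensive set covers almost everything.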

Task-Oriented Wireless Communications for Collaborative Perception in Intelligent Unmanned Systems

Jun 05, 2024

Abstract: Collaborative Perception (CP) has shown great potential to achieve more holistic and reliable environmental perception in intelligent unmanned systems (IUSs). However, implementing CP still faces key challenges due to the characteristics of the CP task and the dynamics of wireless channels. In this article, a task-oriented wireless communication framework is proposed to jointly optimize the communication scheme and the CP procedure. We first propose channel-adaptive compression and robust fusion approaches to extract and exploit the most valuable semantic information under wireless communication constraints. We then propose a task-oriented distributed scheduling algorithm to identify the best collaborators for CP under dynamic environments. The main idea is learning while scheduling, where the collaboration utility is effectively learned with low computation and communication overhead. Case studies are carried out in connected autonomous driving scenarios to verify the proposed framework. Finally, we identify several future research directions.

Infrastructure-Assisted Collaborative Perception in Automated Valet Parking: A Safety Perspective

Mar 22, 2024

Abstract: Environmental perception in Automated Valet Parking (AVP) is a challenging task due to severe occlusions in parking garages. Although Collaborative Perception (CP) can be applied to broaden the field of view of connected vehicles, the limited bandwidth of vehicular communications restricts its application. In this work, we propose a BEV feature-based CP network architecture for infrastructure-assisted AVP systems. The model takes the roadside camera and LiDAR as optional inputs and adaptively fuses them with onboard sensors in a unified BEV representation. Autoencoder and downsampling are applied for channel-wise and spatial-wise dimension reduction, while sparsification and quantization further compress the feature map with little loss in data precision. Combining these techniques, the size of a BEV feature map is effectively compressed to fit in the feasible data rate of the NR-V2X network. With the synthetic AVP dataset, we observe that CP can effectively increase perception performance, especially for pedestrians. Moreover, the advantage of infrastructure-assisted CP is demonstrated in two typical safety-critical scenarios in the AVP setting, increasing the maximum safe cruising speed by up to 3 m/s in both scenarios.
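
To make the sparsification and quantization steps concrete, here is a minimal pure-Python sketch on a flattened feature vector: keep the largest-magnitude entries, then map them to 8-bit integers with a single scale factor. The top-k rule, the symmetric int8 scheme, and all function names are assumptions for illustration; the abstract does not specify which sparsification or quantization method the paper uses.

```python
def sparsify_topk(values, k):
    """Keep the k largest-magnitude entries; return (indices, kept_values)."""
    order = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)
    keep = sorted(order[:k])
    return keep, [values[i] for i in keep]


def quantize_int8(values):
    """Symmetric uniform quantization to integers in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale, indices, length):
    """Rebuild a dense vector; dropped entries are restored as zeros."""
    dense = [0.0] * length
    for i, c in zip(indices, codes):
        dense[i] = c * scale
    return dense


# Hypothetical flattened feature values.
feature = [0.0, 0.5, -1.0, 0.05, 2.0]
idx, kept = sparsify_topk(feature, k=2)
codes, scale = quantize_int8(kept)
print(idx, codes, dequantize(codes, scale, idx, len(feature)))
```

Only the indices, the integer codes, and one scale factor would need to be transmitted in this toy scheme; each kept entry is reconstructed to within half a quantization step.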

MASS: Mobility-Aware Sensor Scheduling of Cooperative Perception for Connected Automated Driving

Feb 25, 2023

Abstract: Timely and reliable environment perception is fundamental to safe and efficient automated driving. However, the perception of standalone intelligence inevitably suffers from occlusions. A new paradigm, Cooperative Perception (CP), comes to the rescue by sharing sensor data from another perspective, i.e., from a cooperative vehicle (CoV). Due to the limited communication bandwidth, it is essential to schedule the most beneficial CoV, considering both the viewpoints and communication quality. Existing methods rely on the exchange of meta-information, such as visibility maps, to predict the perception gains from nearby vehicles, which induces extra communication and processing overhead. In this paper, we propose a new approach, learning while scheduling, for distributed scheduling of CP. The solution enables CoVs to predict the perception gains using past observations, leveraging the temporal continuity of perception gains. Specifically, we design a mobility-aware sensor scheduling (MASS) algorithm based on the restless multi-armed bandit (RMAB) theory to maximize the expected average perception gain. An upper bound on the expected average learning regret is proved, which matches the lower bound of any online algorithm up to a logarithmic factor. Extensive simulations are carried out on realistic traffic traces. The results show that the proposed MASS algorithm achieves the best average perception gain and improves recall by up to 4.2 percentage points compared to other learning-based algorithms. Finally, a case study on a trace of LiDAR frames qualitatively demonstrates the superiority of adaptive exploration, the key element of the MASS algorithm.

DOLPHINS: Dataset for Collaborative Perception enabled Harmonious and Interconnected Self-driving

Jul 15, 2022

Abstract: The Vehicle-to-Everything (V2X) network has enabled collaborative perception in autonomous driving, which is a promising solution to the fundamental defects of stand-alone intelligence, including blind zones and limited long-range perception. However, the lack of datasets has severely hindered the development of collaborative perception algorithms. In this work, we release DOLPHINS: Dataset for cOllaborative Perception enabled Harmonious and INterconnected Self-driving, a new simulated, large-scale, multi-scenario, multi-view, multi-modality autonomous driving dataset, which provides a ground-breaking benchmark platform for interconnected autonomous driving. DOLPHINS outperforms current datasets in six dimensions: temporally-aligned images and point clouds from both vehicles and Road Side Units (RSUs), enabling both Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) based collaborative perception; 6 typical scenarios with dynamic weather conditions, making it the most diverse interconnected autonomous driving dataset; meticulously selected viewpoints providing full coverage of the key areas and every object; 42376 frames and 292549 objects, as well as the corresponding 3D annotations, geo-positions, and calibrations, composing the largest dataset for collaborative perception; full-HD images and 64-line LiDARs providing high-resolution data with sufficient detail; and well-organized APIs and open-source code ensuring the extensibility of DOLPHINS. We also construct a benchmark of 2D detection, 3D detection, and multi-view collaborative perception tasks on DOLPHINS. The experimental results show that the raw-level fusion scheme through V2X communication can help to improve precision as well as reduce the need for expensive LiDAR equipment on vehicles when RSUs exist, which may accelerate the adoption of interconnected self-driving vehicles. DOLPHINS is now available at https://dolphins-dataset.net/.

Online V2X Scheduling for Raw-Level Cooperative Perception

Feb 12, 2022

Abstract: Cooperative perception of connected vehicles comes to the rescue when the field of view restricts stand-alone intelligence. While raw-level cooperative perception preserves most information to guarantee accuracy, it is demanding in communication bandwidth and computation power. Therefore, it is important to schedule the most beneficial vehicle to share its sensor data in terms of supplementary view and stable network connection. In this paper, we present a model of raw-level cooperative perception and formulate the energy minimization problem of sensor sharing scheduling as a variant of the Multi-Armed Bandit (MAB) problem. Specifically, volatility of the neighboring vehicles, heterogeneity of V2X channels, and the time-varying traffic context are taken into consideration. Then we propose an online learning-based algorithm with logarithmic performance loss, achieving a decent trade-off between exploration and exploitation. Simulation results under different scenarios indicate that the proposed algorithm quickly learns to schedule the optimal cooperative vehicle and saves more energy as compared to baseline algorithms.
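
As background for the bandit formulation, the sketch below runs the standard UCB1 rule, which balances exploration and exploitation when repeatedly choosing one of several candidate vehicles with unknown average reward. It is plain UCB1 under stationary rewards, not the paper's algorithm: it ignores vehicle volatility, channel heterogeneity, and traffic context, and the reward probabilities and function name are hypothetical.

```python
import math
import random


def ucb1_schedule(reward_fns, rounds):
    """Standard UCB1 over len(reward_fns) arms (illustrative sketch).

    reward_fns: list of zero-argument callables returning a reward in [0, 1]
                (here a stand-in for the benefit of scheduling a vehicle).
    Returns (pull_counts, empirical_means).
    """
    n = len(reward_fns)
    counts = [0] * n
    means = [0.0] * n
    for t in range(1, rounds + 1):
        if t <= n:
            arm = t - 1  # pull every arm once to initialize its estimate
        else:
            # Empirical mean plus a confidence bonus that shrinks with pulls.
            arm = max(
                range(n),
                key=lambda i: means[i] + math.sqrt(2 * math.log(t) / counts[i]),
            )
        reward = reward_fns[arm]()
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # running average
    return counts, means


# Hypothetical candidates with success probabilities 0.2, 0.5, and 0.8.
rng = random.Random(0)
arms = [lambda p=p: 1.0 if rng.random() < p else 0.0 for p in (0.2, 0.5, 0.8)]
print(ucb1_schedule(arms, rounds=2000))
```

With stationary rewards, UCB1 pulls suboptimal arms only a logarithmic number of times, which is the flavor of guarantee the abstract refers to as logarithmic performance loss.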
